A Practical Guide to Handheld Spectrometer Alignment and Verification for Biomedical Research

Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals to verify and maintain the alignment of handheld spectrometers. It covers foundational principles of spectrometer optics, step-by-step verification procedures using standard reference materials, common troubleshooting scenarios, and advanced validation techniques including machine learning and MTF analysis. The guide is designed to ensure data integrity, improve measurement accuracy, and support compliance in biomedical and clinical research applications.

Understanding Spectrometer Optics and the Critical Need for Alignment

This guide supports research on handheld spectrometer alignment verification, providing troubleshooting and procedures for researchers and drug development professionals.

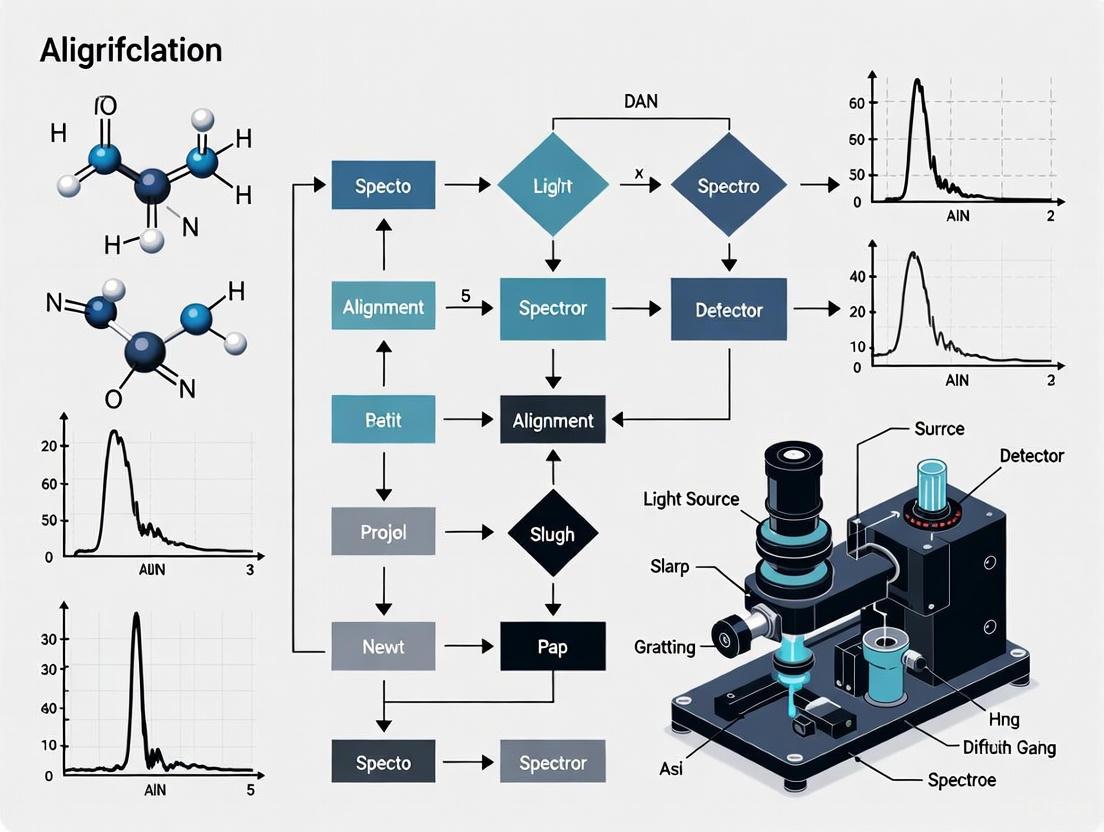

Core Components and Functions

The four core optical components work together to ensure light is properly prepared, dispersed, and measured.

- Entrance Slit: Controls the amount of light entering the system and defines the optical resolution. A narrower slit provides better resolution but reduces light throughput [1].

- Collimating Mirror: Takes the diverging light from the slit and creates a parallel (collimated) beam, which is essential for accurate dispersion by the diffraction grating [2].

- Diffraction Grating: Disperses the collimated light into its constituent wavelengths. Groove density and blaze angle determine the system's dispersion and efficiency [2] [1].

- Focusing Mirror: Refocuses the dispersed parallel beams of light onto the detector plane or exit slit, creating a sharp spectrum [2].
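The statement that groove density sets dispersion follows from the standard grating equation, mλ = d(sin α + sin β). Here m is the diffraction order, d the groove spacing (the reciprocal of groove density), α the angle of incidence and β the diffraction angle, both measured from the grating normal. Differentiating gives the angular dispersion dβ/dλ = m/(d cos β). The Python sketch below evaluates both expressions. The 1200 and 1800 grooves/mm gratings and the 10° incidence angle in the example are assumed values for illustration only; they are not the specification of any particular handheld instrument.

```python
import math


def diffraction_angle_deg(wavelength_nm: float, grooves_per_mm: float,
                          incidence_deg: float, order: int = 1) -> float:
    """Solve m*lambda = d*(sin(alpha) + sin(beta)) for the diffraction angle beta."""
    d_nm = 1e6 / grooves_per_mm  # groove spacing in nm (1 mm = 1e6 nm)
    sin_beta = order * wavelength_nm / d_nm - math.sin(math.radians(incidence_deg))
    if abs(sin_beta) > 1.0:
        raise ValueError("This order does not propagate for the given inputs")
    return math.degrees(math.asin(sin_beta))


def angular_dispersion_rad_per_nm(wavelength_nm: float, grooves_per_mm: float,
                                  incidence_deg: float, order: int = 1) -> float:
    """Angular dispersion d(beta)/d(lambda) = m / (d * cos(beta)), in rad/nm."""
    d_nm = 1e6 / grooves_per_mm
    beta = math.radians(
        diffraction_angle_deg(wavelength_nm, grooves_per_mm, incidence_deg, order))
    return order / (d_nm * math.cos(beta))


if __name__ == "__main__":
    # Assumed example: 500 nm light, 10 degree incidence, first order.
    for density in (1200, 1800):
        beta = diffraction_angle_deg(500.0, density, 10.0)
        disp = angular_dispersion_rad_per_nm(500.0, density, 10.0)
        print(f"{density} g/mm: beta = {beta:.2f} deg, dispersion = {disp:.2e} rad/nm")
```

The denser grating spreads the same wavelength interval over a larger angle. Multiplying the angular dispersion by the focusing mirror's focal length gives the linear dispersion at the detector plane.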

Diagram: Spectrometer Optical Path

Troubleshooting FAQs and Solutions

Q1: My spectrometer shows low signal across all wavelengths. What should I check?

A: Low signal often stems from component misalignment or obstruction.

- Check the Entrance Slit: Ensure it is not mechanically obstructed or dirty. A partially blocked slit severely reduces light intake [3].

- Verify Mirror Alignment: Misaligned collimating or focusing mirrors misdirect or defocus the beam, so less light reaches the detector. The collimating mirror must deliver a collimated (parallel) beam to the grating, and the focusing mirror must image the dispersed light onto the detector [2].

- Inspect the Diffraction Grating: Confirm it is seated correctly in its mount and that the grating is not degraded. Holographic gratings are less susceptible to stray light than ruled gratings [1].

Q2: My spectral resolution has degraded. Which component is most likely at fault?

A: Resolution degradation is frequently linked to the entrance slit or grating.

- Re-verify Slit Width: If the slit mechanism is damaged or has vibrated open, its effective width may have changed, directly impacting resolution [1].

- Assess Grating Performance: Over time or in harsh environments, a grating's efficiency can degrade. Check manufacturer efficiency curves for your model. Consider that ruled gratings typically offer superior efficiency at their blaze wavelength, while holographic gratings produce less stray light [2] [1].

Q3: I observe strange peaks or a high background in my spectrum. What causes this?

A: This is typically a sign of stray light or internal reflections.

- Identify Stray Light Paths: Light may be taking an unintended path due to internal scattering. Baffles within the optical chamber are designed to prevent this. Also, ensure the grating is not being overfilled with light, as this can cause stray light [1].

- Clean Optical Windows: Dirty windows on the fiber optic input or light pipe can cause scattering and poor analysis readings, contributing to a high background. Clean these regularly according to manufacturer guidelines [3].

Experimental Protocol: Alignment Verification

This procedure validates the alignment of a handheld spectrometer's core optical components using a calibrated light source.

Scope

This protocol applies to the alignment verification of handheld spectrometers used for research within a GxP environment, focusing on the slit, collimating mirror, diffraction grating, and focusing mirror [4].

Principle

Alignment is verified by measuring the system's response to reference materials and comparing output against specifications for wavelength accuracy, photometric accuracy, and resolution. This follows an integrated Analytical Instrument Qualification (AIQ) and Computerized System Validation (CSV) approach [4].

Materials and Equipment

- Calibrated Light Source: A tungsten or deuterium lamp with a known, stable spectral output [5].

- Wavelength Standards: Holmium oxide or other NIST-traceable standards for wavelength calibration [5].

- Absorbance Standards: Nickel sulfate solutions for verifying photometric accuracy [5].

- Intralipid Phantoms: Phantoms made from Intralipid solution and methylene blue to simulate tissue scattering and absorption properties for system validation in biomedical applications [6].

Research Reagent Solutions

| Item | Function |
|---|---|
| Holmium Oxide Filter | NIST-traceable standard for validating wavelength accuracy and calibration [5]. |
| Nickel Sulfate Solutions | Used for verifying the photometric accuracy of absorbance measurements [5]. |
| Intralipid 20% Fat Emulsion | Mimics tissue scattering properties; used to validate system performance in biomedical imaging [6]. |
| Methylene Blue Solution | Absorbing agent used with Intralipid to create phantoms simulating a range of tissue optical properties [6]. |

Procedure

Pre-Alignment Setup

- Turn on the spectrometer and light source, allowing a 15-30 minute warm-up for the lamp to stabilize [7].

- Place the spectrometer on a stable platform to minimize vibrations [7].

Wavelength Accuracy Verification

- Place the holmium oxide wavelength standard in the light path.

- Acquire a spectrum and identify the characteristic holmium oxide absorption peaks.

- Compare the measured peak wavelengths to the certified values. The deviation should be within the instrument's specification (e.g., ±1.5 nm) [5].
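The comparison step can be scripted. The Python/NumPy sketch below finds the absorbance maximum near each certified peak and checks the deviations against the ±1.5 nm example specification. The function names, the ±5 nm search window and the synthetic spectrum are illustrative assumptions. The values 241.5 nm and 279.4 nm are two holmium oxide peak positions quoted later in this guide; the certificate supplied with your own standard always takes precedence.

```python
import numpy as np


def peak_deviations(wavelengths_nm, absorbance, certified_nm, window_nm=5.0):
    """For each certified peak, take the absorbance maximum within +/- window_nm
    and return (certified, measured, deviation) tuples in nm."""
    wl = np.asarray(wavelengths_nm, dtype=float)
    ab = np.asarray(absorbance, dtype=float)
    results = []
    for ref in certified_nm:
        in_window = np.flatnonzero(np.abs(wl - ref) <= window_nm)
        if in_window.size == 0:
            raise ValueError(f"No data within {window_nm} nm of {ref} nm")
        # Holmium oxide bands are absorbance maxima (transmittance minima).
        idx = in_window[np.argmax(ab[in_window])]
        results.append((ref, wl[idx], wl[idx] - ref))
    return results


def within_tolerance(results, tolerance_nm=1.5):
    """True if every peak deviation is inside the instrument specification."""
    return all(abs(dev) <= tolerance_nm for _, _, dev in results)


if __name__ == "__main__":
    # Synthetic spectrum: two bands deliberately shifted by +0.4 and -0.4 nm.
    wl = np.linspace(230.0, 300.0, 701)
    ab = (np.exp(-0.5 * ((wl - 241.9) / 1.0) ** 2)
          + 0.8 * np.exp(-0.5 * ((wl - 279.0) / 1.0) ** 2))
    res = peak_deviations(wl, ab, certified_nm=[241.5, 279.4])
    for ref, meas, dev in res:
        print(f"certified {ref:.1f} nm, measured {meas:.1f} nm, deviation {dev:+.1f} nm")
    print("PASS" if within_tolerance(res) else "FAIL")
```

Each measured position is quantized to the sampling interval (0.1 nm here). In practice, a centroid or parabolic fit around each maximum may locate peaks more precisely.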

Resolution and Slit Function Check

- Use a light source with a very narrow emission line (e.g., a low-pressure mercury lamp).

- Measure the Full Width at Half Maximum (FWHM) of the peak. This value, in nanometers, is the instrumental resolution and is primarily controlled by the entrance slit width [5].
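A FWHM value can be extracted from a sampled line profile by interpolating where the signal crosses half its maximum on each side of the peak. The sketch below assumes a single, isolated, baseline-subtracted emission line. The synthetic Gaussian near the 546.1 nm mercury line and its 0.8 nm width are assumptions made for the example. Low-pressure mercury lines are much narrower than a handheld instrument's bandpass, so the measured FWHM is dominated by the instrument's own line shape.

```python
import numpy as np


def fwhm(x, y):
    """Full width at half maximum of a single baseline-subtracted peak,
    using linear interpolation at the two half-maximum crossings."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    peak = int(np.argmax(y))
    half = y[peak] / 2.0

    left = peak
    while left > 0 and y[left] > half:             # walk left until at/below half max
        left -= 1
    right = peak
    while right < y.size - 1 and y[right] > half:  # walk right likewise
        right += 1
    if y[left] > half or y[right] > half:
        raise ValueError("Peak does not fall below half maximum on both sides")

    # np.interp needs increasing xp, so order each pair from low to high signal.
    x_left = np.interp(half, [y[left], y[left + 1]], [x[left], x[left + 1]])
    x_right = np.interp(half, [y[right], y[right - 1]], [x[right], x[right - 1]])
    return x_right - x_left


if __name__ == "__main__":
    wl = np.arange(540.0, 552.0, 0.01)
    line = np.exp(-0.5 * ((wl - 546.1) / 0.8) ** 2)  # synthetic line, sigma 0.8 nm
    width = fwhm(wl, line)
    print(f"FWHM = {width:.3f} nm; meets < 3.0 nm example spec: {width < 3.0}")
```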

Photometric Accuracy Verification

- Prepare a blank from the same solvent used for the standards and record the blank measurement [7].

- Using a matched set of cuvettes, measure the absorbance of a series of certified nickel sulfate solutions [5].

- Compare the measured absorbance values to the known standards. The deviation should be within the instrument's specification (e.g., ±5.0%) [5].
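The photometric check reduces to two calculations. First, convert raw intensities to absorbance: A = -log10(T), where T = (I_sample - I_dark)/(I_blank - I_dark). Second, express each result as a percentage deviation from its certified value. The sketch assumes that the ±5.0% tolerance is relative to the certified absorbance and that a dark reading is available. The certified values and detector counts in the usage example are placeholders, not the values of any real nickel sulfate standard.

```python
import math


def absorbance(sample_counts: float, blank_counts: float, dark_counts: float = 0.0) -> float:
    """A = -log10(T), where T is the dark-corrected sample/blank intensity ratio."""
    transmittance = (sample_counts - dark_counts) / (blank_counts - dark_counts)
    if transmittance <= 0:
        raise ValueError("Transmittance must be positive; check dark and blank readings")
    return -math.log10(transmittance)


def percent_deviation(measured: float, certified: float) -> float:
    """Relative deviation of a measured absorbance from its certified value, in %."""
    return 100.0 * (measured - certified) / certified


def photometric_report(measured, certified, tolerance_pct=5.0):
    """Return (certified, measured, deviation %, pass?) for each standard."""
    report = []
    for m, c in zip(measured, certified):
        dev = percent_deviation(m, c)
        report.append((c, m, dev, abs(dev) <= tolerance_pct))
    return report


if __name__ == "__main__":
    # Placeholder certified absorbances and raw (sample, blank) detector counts.
    certified = [0.25, 0.50, 1.00]
    counts = [(5650.0, 10100.0), (3230.0, 10100.0), (1100.0, 10100.0)]
    measured = [absorbance(s, b, dark_counts=100.0) for s, b in counts]
    for row in photometric_report(measured, certified):
        print("certified {:.2f}  measured {:.3f}  deviation {:+.1f}%  pass={}".format(*row))
```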

Diagram: Alignment Verification Workflow

Performance Specification Tables

Wavelength Accuracy, Resolution, and Photometric Specifications

| Component | Typical Specification | Verification Standard |
|---|---|---|
| Entrance Slit | Resolution (FWHM): < 3.0 nm [5] | FWHM of mercury emission line |
| Diffraction Grating | Wavelength Accuracy: ±1.5 nm [5] | Holmium oxide filter |
| Overall System | Photometric Accuracy: ±5.0% [5] | Nickel sulfate solutions |

Diffraction Grating Selection Guide

| Grating Type | Key Feature | Best Use Case |
|---|---|---|
| Ruled Reflection | Superior efficiency at design (blaze) wavelength [1] | High light throughput applications at specific wavelengths |
| Holographic Reflection | Reduced stray light [1] | Applications requiring high signal-to-noise ratio and low background |
| Echelle | Highest resolving power and dispersion [1] | Demanding applications such as atomic spectroscopy and astronomy |
| Transmission | In-line optical path; easier to clean [2] | Simple spectrographs; limited to transmitting spectral regions |

Troubleshooting Guides

Guide 1: Troubleshooting Spectral Accuracy Issues

Problem: Spectrometer is producing inconsistent or inaccurate analysis results on the same sample.

Explanation: Inaccurate results can stem from various alignment and maintenance issues. Misalignment affects the instrument's ability to correctly measure light intensity and wavelength, directly compromising data integrity [3].

Troubleshooting Steps:

- Check for Contaminated Samples: Ensure samples are not contaminated by skin oils, grinding coolants, or other substances. Always use a new grinding pad and avoid touching sample surfaces [3].

- Verify Window Cleanliness: Check and clean the windows in front of the fiber optic and in the direct light pipe. Dirty windows cause instrument drift and poor analysis readings [3].

- Inspect the Vacuum Pump (optical emission spectrometers, OES): Watch for consistently low readings for carbon, phosphorus, and sulfur. A malfunctioning vacuum pump can cause these lower-wavelength elements to lose intensity or disappear from the spectrum, leading to incorrect values [3].

- Recalibrate the Instrument: Perform a recalibration using a properly prepared sample, following the software's recalibration sequence exactly. Then analyze the first sample five times in a row; the relative standard deviation (RSD) should not exceed 5% [3].
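The repeatability criterion can be computed directly as RSD = 100 × s / x̄, where s is the sample standard deviation of the replicate results and x̄ is their mean. The sketch below assumes that the limit of 5 refers to an RSD of 5% and applies it to each element separately. The element names and replicate values are hypothetical.

```python
import statistics


def rsd_percent(values):
    """Relative standard deviation (%) using the sample standard deviation."""
    if len(values) < 2:
        raise ValueError("Need at least two replicate measurements")
    mean = statistics.mean(values)
    return 100.0 * statistics.stdev(values) / mean


def repeatability_check(replicates_by_element, limit_pct=5.0):
    """Map each element to (RSD %, pass?) for a set of replicate analyses."""
    report = {}
    for element, values in replicates_by_element.items():
        rsd = rsd_percent(values)
        report[element] = (rsd, rsd <= limit_pct)
    return report


if __name__ == "__main__":
    # Hypothetical five-replicate results (wt%) for the first sample.
    runs = {
        "Mn": [0.81, 0.82, 0.80, 0.81, 0.82],
        "C": [0.18, 0.21, 0.16, 0.22, 0.17],
    }
    for element, (rsd, ok) in repeatability_check(runs).items():
        print(f"{element}: RSD = {rsd:.1f}% -> {'OK' if ok else 'investigate'}")
```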

Guide 2: Addressing Signal and Baseline Problems

Problem: The spectrometer has low signal intensity or an unstable baseline.

Explanation: Low signal and baseline instability are often related to optical misalignment, environmental factors, or component failure. These issues prevent the detector from receiving a stable, strong signal [8].

Troubleshooting Steps:

- Perform Instrument Alignment: Use the software's alignment function. Ensure the system has been powered on for at least one hour for temperature stabilization before aligning [8].

- Check Environmental Conditions: Verify that humidity is within specifications. Check the humidity indicator and replace the desiccant if needed. A stable environment is crucial for a stable baseline [8] [9].

- Inspect Optical Components: Check sample compartment windows for fogging and ensure all accessories are correctly installed and aligned [8].

- Verify Warm-Up and Purge Times: If the instrument cover has been opened, allow the instrument to purge for 10-15 minutes after closing it. For cooled detectors, allow at least 15 minutes for the detector to cool [8].

Frequently Asked Questions (FAQs)

Q1: What are the most critical parameters to control for ensuring spectral accuracy in hyperspectral imaging? A systematic study identified eight key parameters that significantly impact spectral accuracy [10]. Their effects and mitigation strategies are summarized below:

| Parameter | Impact on Spectral Accuracy | Effective Mitigation Strategy |
|---|---|---|
| Ambient Light | Significant spectral distortion | Perform measurements in a dark room; normalization is less effective [10]. |
| Camera Warm-Up Time | Introduces spectral noise | Warm up light sources and cameras for one hour; normalization is less effective [10]. |
| Exposure Time | Low exposure causes spectral noise | Maximize signal without saturating any spectral band [10]. |
| Spatial Averaging | Small region of interest (ROI) increases noise | Use a larger ROI for spatial averaging [10]. |
| Camera Focus | Affects spectral measurement | Ensure the system is in correct focus [10]. |
| Working Distance | Changes spectral response | Keep a fixed, optimized working distance [10]. |
| Illumination Angle | Alters spectral signature | Control and fix the angle of illumination [10]. |
| Target Angle | Alters spectral signature | Keep the target perpendicular to the optical axis (0°) [10]. |
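The exposure-time recommendation (maximize signal without saturating any band) becomes a simple rule when the detector response is linear: scale the exposure so that the brightest spectral band lands at a chosen fraction of full scale. In the sketch below, the 16-bit full-scale value of 65535 counts, the 80% target and the halving step used after saturation are assumptions, not values from the cited study. Check your camera's actual saturation level and linear range before relying on such a rule.

```python
def suggest_exposure_ms(current_ms: float, peak_counts: float,
                        saturation_counts: float = 65535.0,
                        target_fraction: float = 0.8,
                        dark_counts: float = 0.0) -> float:
    """Suggest a new exposure time so the brightest band reaches target_fraction
    of saturation, assuming counts scale linearly with exposure time.
    dark_counts is treated as a fixed offset (e.g. readout bias), not as a
    time-dependent dark current."""
    if peak_counts >= saturation_counts:
        # Saturated: the true signal is unknown, so back off and re-measure.
        return current_ms / 2.0
    signal = peak_counts - dark_counts
    if signal <= 0:
        raise ValueError("No signal above dark level; check illumination")
    target_signal = target_fraction * saturation_counts - dark_counts
    return current_ms * target_signal / signal


if __name__ == "__main__":
    exposure = 10.0
    print(suggest_exposure_ms(exposure, peak_counts=20000.0))  # under-exposed: lengthen
    print(suggest_exposure_ms(exposure, peak_counts=65535.0))  # saturated: halve
```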

Q2: How does a misaligned lens affect my data? A misaligned lens fails to focus correctly on the light's origin. This means the instrument cannot collect the full intensity of light, leading to highly inaccurate readings because the core measurement is based on light intensity. It is analogous to a camera flash aimed away from the subject, resulting in a dark and unusable photo [3].

Q3: What is the consequence of stray light on my spectrophotometric measurements? Stray light is light of wavelengths outside the monochromator's bandpass that nonetheless reaches the detector. It is a critical error, especially at the ends of the instrument's spectral range, where the wanted signal is weak and stray light therefore makes up a larger fraction of the detected light. Stray light causes significant deviations in transmittance and absorbance readings, leading to false concentration calculations and poor data integrity [11].
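A common simple model estimates the size of this error. Suppose a stray-light fraction s (relative to the blank signal) reaches the detector and passes through the sample unattenuated. The measured transmittance is then (T + s)/(1 + s) instead of T. The Python sketch below applies this model. The 0.1% stray-light level is an assumed example; real stray light depends on wavelength and on the instrument.

```python
import math


def apparent_absorbance(true_absorbance: float, stray_fraction: float) -> float:
    """Absorbance reported by an instrument with stray-light fraction s,
    assuming the stray light is unattenuated by the sample."""
    true_t = 10.0 ** (-true_absorbance)
    measured_t = (true_t + stray_fraction) / (1.0 + stray_fraction)
    return -math.log10(measured_t)


if __name__ == "__main__":
    s = 0.001  # assumed 0.1 % stray light
    for a in (0.5, 1.0, 2.0, 3.0):
        a_meas = apparent_absorbance(a, s)
        print(f"true A = {a:.1f}  measured A = {a_meas:.3f}  error = {a_meas - a:+.3f}")
```

The error grows with absorbance. The reported value can never exceed -log10(s/(1 + s)), which is about 3 absorbance units for s = 0.001. This is why concentrated samples are affected most.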

Q4: How can I verify the wavelength accuracy of my instrument? Wavelength accuracy is a fundamental spectral characteristic. Verification methods depend on your equipment [11]:

- Using Emission Lines: The most accurate method is to use the known emission lines of a deuterium or other line source.

- Using Absorption Bands & Filters: If an emission source is unavailable, you can use materials with sharp, known absorption bands (e.g., holmium oxide solution or glass) or special interference filters with a certified transmission maximum.

The Scientist's Toolkit: Key Research Reagent Solutions

The following standards and materials are essential for verifying instrument performance and conducting reliable experiments.

| Item | Function & Application |
|---|---|
| Holmium Oxide (Ho₂O₃) Solution/Glass | Provides sharp, known absorption bands for verifying the wavelength accuracy of a spectrophotometer [11]. |
| Didymium Glass Filter | A traditional, though less precise, filter with wide absorption bands for basic wavelength checks [11]. |
| Neutral Density Absorbing Solid Filters | Used with master instruments to test the photometric linearity and performance of other spectrophotometers [11]. |
| Certified Diffuse Reflectance Targets | Targets with validated reflectance spectra (e.g., White, Red, Erbium Oxide) are critical for characterizing and validating the performance of Hyperspectral Imaging (HSI) systems [10]. |
| Potassium Dichromate & Chromate Solutions | Historically used in inter-laboratory comparisons to test for photometric accuracy and stray light, revealing high coefficients of variation among labs [11]. |

Experimental Protocol: Machine Learning-Assisted Spectrometer Alignment

This protocol, adapted from research at a beamline of the BESSY II synchrotron, details a method that reduces alignment time from one hour to under five minutes using a surrogate neural network model [12].

Objective: To automate the alignment of a soft X-ray spectrometer by determining the optimal position of its optical components (a Reflection Zone Plate, RZP) relative to the sample and detector.

Workflow: The diagram below illustrates the four-step process, combining offline simulation with real-world optimization.

Detailed Methodology:

Simulation (Offline): An in-house, GPU-accelerated ray-tracing software (RAYX) is used to simulate the spectrometer setup. A large dataset (one million simulations) is generated by systematically varying key parameters within their mechanical limits [12]:

- RZP x, y, z positions (e.g., ±5.0 mm)

- Detector xy-coordinate offsets

- Ratio of Manganese to Oxygen (for fluorescence specificity)

Data augmentation (applying artificial camera offsets and intensity scaling) is then applied to the simulated dataset to bridge the simulation-to-reality gap [12].

Neural Network Training (Offline): A deep neural network is trained exclusively on the simulated dataset. The model learns to map the input parameters (positions, offsets, etc.) to the resulting spectral image [12].

Experimental Data Acquisition (Online at Beamline): With the physical spectrometer, 10-25 reference measurements are collected, covering the expected alignment search space [12].

Optimization (Online): An optimizer is deployed to find the seven key parameters that minimize the average difference between the neural network's predictions and the real measurements. The optimized parameters are [12]:

- Absolute x, y, z coordinates of the RZP

- Camera offsets in x and y

- Manganese-to-oxygen ratio

- An overall intensity scaling factor

This method demonstrates that models trained on simulated data can be effectively applied to real-world instruments, drastically reducing alignment time and conserving valuable beam time [12].
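The online optimization step can be illustrated with a deliberately simplified sketch. The trained neural network is replaced by a toy analytic surrogate. It predicts a one-dimensional line profile from three hypothetical parameters (a position shift, a defocus term and an intensity scale), rather than the seven parameters and full spectral images used at BESSY II. SciPy's Nelder-Mead minimizer stands in for whichever optimizer the original work used. The loss is the mean squared difference averaged over all reference measurements, mirroring the "average difference" objective described above. Because defocus enters the toy model squared, only its magnitude can be recovered.

```python
import numpy as np
from scipy.optimize import minimize

DETECTOR = np.linspace(-10.0, 10.0, 201)  # detector coordinate, arbitrary units
BEST_FOCUS_WIDTH = 1.0                    # hypothetical line width at best focus


def surrogate(params, motor_offset):
    """Toy stand-in for the trained network: predicts a 1-D line profile from
    (x_shift, defocus, scale) and the commanded motor offset of one measurement."""
    x_shift, defocus, scale = params
    variance = BEST_FOCUS_WIDTH ** 2 + defocus ** 2  # defocus broadens the line
    centre = x_shift + motor_offset
    return scale * np.exp(-0.5 * (DETECTOR - centre) ** 2 / variance)


def mean_difference(params, motor_offsets, measurements):
    """Mean squared difference between predictions and all reference measurements."""
    errors = [np.mean((surrogate(params, m) - meas) ** 2)
              for m, meas in zip(motor_offsets, measurements)]
    return float(np.mean(errors))


def fit_alignment(motor_offsets, measurements, initial_guess):
    """Find the parameters that best explain all reference measurements at once."""
    result = minimize(mean_difference, np.asarray(initial_guess, dtype=float),
                      args=(motor_offsets, measurements), method="Nelder-Mead",
                      options={"xatol": 1e-8, "fatol": 1e-12, "maxiter": 5000})
    return result.x


if __name__ == "__main__":
    offsets = np.linspace(-4.0, 4.0, 12)  # 12 simulated reference scans
    true_params = (0.4, 1.2, 2.0)         # unknown in a real experiment
    scans = [surrogate(true_params, m) for m in offsets]
    estimate = fit_alignment(offsets, scans, initial_guess=(0.0, 0.5, 1.0))
    print("estimated (x_shift, |defocus|, scale):",
          np.round([estimate[0], abs(estimate[1]), estimate[2]], 3))
```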

Relationship Between Misalignment Types and Data Integrity

The following diagram categorizes common misalignments and operational failures, tracing their direct consequences on spectral data and the resulting risks to research outcomes.

Frequently Asked Questions (FAQs)

1. Why is wavelength accuracy so critical for both quantitative and qualitative spectroscopic analysis?

Wavelength accuracy is fundamental because all quantitative and qualitative methods assume the x-axis (wavelength or wavenumber) of your spectroscopic data is precisely aligned. Without this, regression models and spectral comparison algorithms are invalid, as they rely on the data channels being perfectly aligned with only the y-axis amplitude changing in relation to analyte concentration [13].

2. What are the symptoms of a spatial misalignment in a spectrometer's optical path?

Spatial misalignment can manifest in several ways. For lens-based systems, improper alignment means the lens does not focus on the source of the light, resulting in the collection of light that is not intense enough for accurate results. This leads to highly inaccurate intensity readings. In more complex systems, such as the submillimeter spectrometer DESHIMA 2.0, misalignment can significantly degrade the aperture efficiency, meaning the instrument fails to effectively couple light from the source to the detector [3] [14].

3. How can I verify the intensity readings from my spectrometer are reliable?

Unreliable intensity readings, such as drift or inconsistent values, can stem from several issues. Common culprits include an aging light source (e.g., a lamp that needs replacement), a need for a longer instrument warm-up time, or dirty optics (e.g., sample cuvettes, windows, or lenses). Regular calibration with certified reference standards is essential to ensure intensity accuracy [15].

4. Are there real-time methods to assess spectrometer alignment?

Yes, advanced methods are being developed. One novel approach uses a low-coherence interferometer to generate sinusoidal patterns on the spectrometer's sensor. By analyzing the modulation transfer function (MTF) of these patterns in real-time, researchers can continuously evaluate the alignment and spectral resolution of the instrument during its alignment phase, allowing for immediate corrections [16].

Troubleshooting Guides

Issue 1: Inaccurate Wavelength Calibration

- Symptom: Peaks in your spectrum appear at incorrect wavelengths, leading to misidentification of compounds or inaccurate quantitative results.

- Required Materials: Certified wavelength reference standard relevant to your spectral region (see Table 1).

- Procedure:

- Measure the reference standard using your spectrometer's standard procedure.

- Record the peak positions from the resulting spectrum.

- Compare the measured peak positions to the certified values provided with the standard.

- If the deviations exceed your method's tolerance, perform a wavelength calibration using the spectrometer's software, using the reference standard to align the x-axis.
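Vendor software performs this calibration internally. The underlying idea can be sketched as a least-squares fit that maps measured peak positions onto certified ones; the fitted correction is then applied to the whole wavelength axis. The sketch uses NumPy's `Polynomial.fit`. The low polynomial degree, the three reference positions (300, 450 and 600 nm) and the simulated offset and scale errors are hypothetical values chosen for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial


def fit_wavelength_correction(measured_nm, certified_nm, degree=1):
    """Least-squares polynomial that maps measured peak positions onto the
    certified values. Keep the degree well below the number of peaks."""
    measured_nm = np.asarray(measured_nm, dtype=float)
    if degree >= measured_nm.size:
        raise ValueError("Need more reference peaks than the polynomial degree")
    return Polynomial.fit(measured_nm, np.asarray(certified_nm, dtype=float), degree)


if __name__ == "__main__":
    certified = np.array([300.0, 450.0, 600.0])  # hypothetical reference lines
    measured = certified * 1.001 + 0.3           # simulated scale + offset error
    correction = fit_wavelength_correction(measured, certified)
    print("residuals (nm):", correction(measured) - certified)

    raw_axis = np.linspace(290.0, 610.0, 5)      # uncorrected wavelength axis
    print("corrected axis:", correction(raw_axis))
```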

Issue 2: Drifting or Unstable Intensity Signals

- Symptom: Baseline instability or inconsistent intensity readings for the same sample.

- Required Materials: Clean, certified reference materials for intensity checks, isopropyl alcohol, lint-free wipes.

- Procedure:

- Check the light source: Ensure the spectrometer has warmed up sufficiently. If instability persists, the lamp may be near the end of its life and require replacement [15].

- Inspect and clean optics: Check the sample cuvette for scratches or residue. Clean it thoroughly. Inspect other accessible optical windows (e.g., in front of the fiber optic or in the direct light pipe) and clean them if dirty [3] [15].

- Perform a baseline correction: Execute a full baseline correction or recalibration using the correct reference solution (e.g., a pure solvent blank) [15].

- Verify the environment: Ensure the instrument is on a stable surface, safe from vibrations and temperature fluctuations.

Issue 3: Poor Spatial Focus or Beam Misalignment

- Symptom: Low signal-to-noise ratio, distorted peaks, or a significant loss in sensitivity and aperture efficiency.

- Required Materials: Alignment tools specified by the manufacturer, a cold background source (e.g., liquid nitrogen), a specialized chopper [14].

- Procedure (based on an advanced astronomical spectrometer method):

- Utilize a sky chopper: Employ a chopper with a small aperture that couples to a cold source (like cold sky or liquid nitrogen).

- Scan the beam: Use a motor-controlled hexapod or alignment mechanism to scan the instrument's beam across the chopper's entrance aperture.

- Find the null point: The configuration that produces the lowest signal on the detectors indicates that the beam is fully coupled to the cold source and not the warm surroundings, signifying optimal alignment [14].

- For lens-based systems: Ensure operators are trained to perform simple lens alignment checks as part of regular maintenance to ensure the lens is focused on the light source [3].
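The null-point search can be sketched as a raster scan over candidate beam offsets. The scan records the detector signal at each position and returns the position with the lowest reading. `measure_signal` is a hypothetical callback that stands in for "move the hexapod, then read the detectors"; no real hexapod or detector API is implied. The toy signal model in the example, a cold-coupling dip centred at an assumed optimum, is invented for illustration.

```python
import numpy as np


def find_null_point(x_positions, y_positions, measure_signal):
    """Raster-scan (x, y) beam offsets and return the offset with the lowest
    detector signal, plus the full signal map for inspection."""
    signal_map = np.empty((len(y_positions), len(x_positions)))
    for iy, y in enumerate(y_positions):
        for ix, x in enumerate(x_positions):
            signal_map[iy, ix] = measure_signal(x, y)
    iy, ix = np.unravel_index(np.argmin(signal_map), signal_map.shape)
    return (x_positions[ix], y_positions[iy]), signal_map


if __name__ == "__main__":
    best = (0.6, -0.4)  # assumed optimum (mm) for the toy model

    def toy_signal(x, y, warm=300.0, cold=77.0, beam_width=0.8):
        # The fraction of the beam coupled to the cold source falls off with
        # distance from the optimum; the remainder sees warm surroundings.
        r2 = (x - best[0]) ** 2 + (y - best[1]) ** 2
        coupling = np.exp(-r2 / (2 * beam_width ** 2))
        return cold * coupling + warm * (1 - coupling)

    grid = np.arange(-2.0, 2.0 + 1e-9, 0.2)
    (x_opt, y_opt), _ = find_null_point(grid, grid, toy_signal)
    print(f"lowest signal at x = {x_opt:.1f} mm, y = {y_opt:.1f} mm")
```

A coarse grid followed by a finer scan around the minimum keeps the number of hexapod moves manageable.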

Key Research Reagents and Materials

The following table lists essential reference materials used for the alignment and verification of spectrometer key parameters.

Table 1: Essential Reference Materials for Spectrometer Alignment

| Material Name | Function | Key Application / Spectral Region |
|---|---|---|
| Holmium Oxide (Liquid or Glass) [13] | Wavelength calibration standard | Ultraviolet-Visible (UV-Vis) |
| NIST SRM 2036 [13] | Reflectance wavelength standard | Visible (Vis) and Near-Infrared (NIR) |
| Polystyrene [13] | Wavelength verification standard | Near-Infrared (NIR) |
| Certified Intensity Reference | Reflectance/Transmittance calibration | Verifying y-axis (intensity) accuracy across wavelengths |

Experimental Protocols for Alignment Verification

Protocol 1: Wavelength Axis Verification using Holmium Oxide

This is a standard method for verifying the wavelength accuracy of UV-Vis spectrometers.

- Objective: To verify and correct the wavelength scale of a UV-Vis spectrometer by measuring a holmium oxide reference standard with known, stable absorption peaks.

- Materials:

- Holmium oxide liquid wavelength standard (traceable to NIST SRM 2034) in a sealed quartz cell, or Holmium oxide glass wavelength standard [13].

- UV-Vis spectrometer.

- Appropriate software for calibration.

- Workflow:

- The spectrometer's light source illuminates the holmium oxide standard.

- Light is passed through a monochromator to isolate individual wavelengths.

- The transmitted light is measured by a detector (transducer).

- The intensity data is converted into an absorbance spectrum.

- The certified peak positions (e.g., at 241.5 nm, 279.4 nm, etc. for holmium oxide) are compared to the measured spectrum.

- Any deviation is used by the software to perform a wavelength calibration, ensuring the x-axis is correctly aligned [13] [17].

The following diagram illustrates the core workflow and logical relationships of this verification protocol.

Protocol 2: Real-Time Spatial Alignment using MTF Measurement

This protocol describes a modern method for assessing the spatial and resolution alignment of a spectrometer in real-time.

- Objective: To assess the modulation transfer function (MTF) of a spectrometer in real-time during the alignment process to ensure optimal spatial focus and spectral resolution.

- Materials:

- Spectrometer under test.

- Low-coherence interferometer (e.g., Michelson interferometer).

- Broadband light source matching the spectrometer's range.

- Workflow:

- A broadband light source is directed into the interferometer.

- The interferometer generates an amplitude-modulated spectrum with a sinusoidal pattern; the spatial frequency of this pattern is adjusted by changing the optical path difference (OPD) in the interferometer.

- This modulated light is coupled into the spectrometer being tested.

- The spectrometer's sensor captures the modulated spectrum.

- The modulation contrast is calculated at different spatial frequencies to determine the MTF.

- The MTF curve provides a quantitative measure of the spectrometer's spectral resolution and spatial alignment. Technicians can then adjust the spectrometer's optics while monitoring the MTF in real-time to achieve optimal performance [16].
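The modulation-contrast step can be sketched as follows. For each optical path difference, the sensor records a sinusoidal fringe of known frequency (in cycles per pixel). The contrast is estimated by projecting the signal onto a cosine and a sine at that frequency, and the MTF is the contrast normalized to the lowest frequency measured. This lock-in style projection is a substituted technique, not necessarily the processing used in the cited study. Normalizing to the lowest frequency assumes the interferometer delivers the same input fringe contrast at every OPD. The projection is exact only when the pixel row spans an integer number of fringe periods.

```python
import numpy as np


def fringe_contrast(signal, cycles_per_pixel):
    """Contrast (AC amplitude / mean) of a sinusoidal fringe of known frequency."""
    y = np.asarray(signal, dtype=float)
    phase = 2.0 * np.pi * cycles_per_pixel * np.arange(y.size)
    mean = y.mean()
    c = np.mean((y - mean) * np.cos(phase))
    s = np.mean((y - mean) * np.sin(phase))
    return 2.0 * np.hypot(c, s) / mean


def mtf_curve(signals, frequencies):
    """Contrast at each fringe frequency, normalized to the first (lowest) one."""
    contrasts = np.array([fringe_contrast(sig, f) for sig, f in zip(signals, frequencies)])
    return contrasts / contrasts[0]


if __name__ == "__main__":
    n_pix = 1024
    pixels = np.arange(n_pix)
    freqs = [k / n_pix for k in (8, 32, 64, 128)]  # integer cycles across the row
    sigma_pix = 1.5  # assumed Gaussian line-spread width of the spectrometer
    signals = []
    for f in freqs:
        # A Gaussian line spread scales a cosine's amplitude by exp(-2 pi^2 sigma^2 f^2).
        contrast = 0.8 * np.exp(-2.0 * np.pi ** 2 * sigma_pix ** 2 * f ** 2)
        signals.append(1000.0 * (1.0 + contrast * np.cos(2.0 * np.pi * f * pixels + 0.3)))
    for f, m in zip(freqs, mtf_curve(signals, freqs)):
        print(f"{f:.4f} cycles/pixel: MTF = {m:.3f}")
```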

The logical flow of this advanced alignment technique is summarized below.

Environmental Effects on Handheld Spectrometer Alignment

Environmental conditions directly affect the alignment of a handheld spectrometer, and therefore the integrity of the spectroscopic data that researchers and drug development professionals rely on for quality control and material identification. Alignment is not a static setting: temperature fluctuations, humidity, and mechanical shock can all induce subtle but critical misalignments that lead to inaccurate elemental analysis. This section details the effects of each factor and provides targeted troubleshooting protocols to maintain instrument performance [18] [19].

FAQ: Environmental Impacts on Spectrometer Alignment

How do temperature fluctuations affect spectrometer alignment? Temperature changes cause materials within the spectrometer to expand or contract. This can shift the position of critical optical components like lenses, mirrors, and gratings, disrupting the precise path that light must travel. Such shifts can lead to calibration drift and inaccurate analysis results, particularly for elements requiring high precision, such as carbon and phosphorus. Furthermore, electronic components are sensitive to temperature, which can alter their electrical properties and contribute to measurement errors [18] [19].

Why is humidity a concern for optical instruments? High humidity can lead to condensation on optical surfaces, such as lenses and windows, scattering light and reducing signal intensity. Over time, it can also promote corrosion of electronic components and metal surfaces. Conversely, very low humidity can increase the risk of static electricity discharge, which can damage sensitive electronics. Both scenarios can degrade the signal-to-noise ratio and destabilize the instrument's calibration [20] [19].

Can mechanical shock really misalign a handheld spectrometer? Yes. Handheld spectrometers are particularly vulnerable to mechanical shock and vibration from being moved and used in the field. Jarring and impacts can loosen fasteners, shift optical components, and damage computer hardware. This often results in intermittent performance issues, a complete loss of alignment, and inconsistent analytical results. The bright light and loud noise during a metal analysis can be symptoms of poor probe contact resulting from misalignment [20] [18].

What are the symptoms of an environmentally-induced misalignment? Key indicators include:

- Drifting Calibration: The instrument requires frequent recalibration.

- Inconsistent Results: Successive tests on the same homogeneous sample yield significantly different values.

- Low Intensity: The instrument reports low light intensity or signal strength.

- Element-Specific Errors: Consistently low or erratic readings for elements sensitive to atmospheric interference, such as Carbon (C), Phosphorus (P), and Sulfur (S), which can also indicate vacuum pump issues [3] [18].

Troubleshooting Guides

Troubleshooting Temperature-Related Drift

- Problem: Analysis results are inconsistent, and the instrument will not hold a stable calibration, especially in environments with fluctuating temperatures.

- Solution:

- Acclimate: Allow the spectrometer to reach thermal equilibrium with the new environment for the period recommended by the manufacturer before use.

- Control the Environment: Perform calibration and critical measurements in a temperature-controlled room, if possible.

- Verify: If drift is suspected, recalibrate the instrument using certified reference materials in a stable environment [19].

Troubleshooting Humidity-Related Issues

- Problem: Condensation is visible on optical windows, or the instrument shows signs of corrosion. Analysis may drift more often.

- Solution:

- Inspect and Clean: Regularly inspect and, if necessary, clean the optical windows using approved materials and procedures.

- Use Desiccants: Store the instrument with desiccant packs in its case to control moisture.

- Control Humidity: Operate the instrument in an environment where humidity is maintained between 40% and 60% [3] [19].

Troubleshooting Vibration and Shock Damage

- Problem: The instrument has been dropped or jarred, and now produces erratic results or will not initiate tests properly.

- Solution:

- Visual Inspection: Check for any visible physical damage.

- Functional Check: Run a test on a certified reference material to verify performance.

- Seek Professional Service: If the instrument fails the performance check, contact a qualified service technician for internal inspection and realignment. Annual or semi-annual preventative maintenance is recommended for handheld units to address loosened components from vibration [18].

The following tables summarize the critical environmental parameters and their measurable effects on spectrometer components and output.

Table 1: Environmental Factor Targets and Limits

| Environmental Factor | Target Operating Range | Observed Negative Impact Beyond Range |
|---|---|---|
| Temperature | Controlled, Stable Ambient | Material expansion/contraction, electronic signal drift [19] |
| Relative Humidity | 40% - 60% | Condensation (optical surfaces), corrosion, static discharge [19] |
| Mechanical Shock | Vibration-Free | Physical misalignment of optics, loose fasteners and connectors [18] |

Table 2: Symptom-Based Diagnostic Guide

| Observed Symptom | Potential Environmental Cause | Key Elements Typically Affected |
|---|---|---|
| Frequent calibration drift | Temperature fluctuations, dirty windows from high humidity/dust | All, especially trace elements [3] [19] |
| Low results for C, P, S | Vacuum pump failure (affected by environment/age), dirty optics | Carbon, Phosphorus, Sulfur, Nitrogen [3] |
| High analysis variability | Contaminated argon, unstable temperature/humidity, mechanical shock | All elements [3] [19] |
| Unusual instrument noise/bright light | Probe misalignment from shock or convex surface contact | N/A (Operational failure) [3] |

Experimental Protocols for Alignment Verification

Developing robust verification protocols is central to this work. The following methodology, adapted from studies on material degradation, provides a framework for systematically quantifying the impact of environmental stress on spectrometer alignment.

Protocol: Simulating and Assessing Shock-Variable Environmental Stress

- Objective: To quantitatively determine the effects of combined temperature and humidity shocks on the mechanical and optical alignment stability of a handheld spectrometer.

- Materials:
  - Handheld Optical Emission Spectrometer (OES)
  - Environmental chamber (or controlled ovens/freezers)
  - Certified reference materials (CRMs) for calibration verification
  - Data logging software

- Methodology:
  - Baseline Measurement: Perform a full instrument calibration and then conduct a minimum of 10 measurements on a stable CRM. Record the mean and standard deviation for key elements (e.g., C, Mn, P, S) to establish a baseline performance metric.
  - Stress Application (One Cycle):
    - Humidity Phase: Expose the spectrometer to a high-humidity environment (e.g., >80% RH) at a stable, moderate temperature (e.g., +25°C) for 72 hours [20].
    - Freezing Phase: Immediately transfer the instrument to a low-temperature freezer (e.g., -20°C) for 24 hours [20].
    - Heating Phase: Immediately transfer the instrument to an elevated temperature (e.g., +70°C) for 72 hours in a dry atmosphere [20].
  - Post-Stress Verification: After the cycle, without recalibrating, immediately repeat the measurement process from the baseline step. Conduct another 10 measurements on the same CRM.
  - Data Analysis: Compare the pre- and post-stress data. Key metrics include the shift in the mean value for each element (indicating bias) and the change in the standard deviation (indicating loss of precision or instability).

- Interpretation: Significant changes in the mean values or a marked increase in standard deviation after one or more shock cycles provide quantitative evidence of environmentally-induced misalignment or performance degradation [20].
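The data-analysis step can be sketched in Python as below. This is a minimal illustration, assuming the replicate CRM results for one element are available as plain lists; the function name `compare_stress_runs` and the readings in the usage lines are hypothetical, not measured data. It reports only descriptive statistics (mean shift and standard-deviation ratio), not a formal significance test.

```python
from statistics import mean, stdev


def compare_stress_runs(baseline, post_stress):
    """Summarise bias and precision change between two sets of replicate readings.

    baseline, post_stress: replicate results for one element on the same CRM
    (hypothetical inputs, e.g. 10 readings each in mass %).
    """
    if len(baseline) < 2 or len(post_stress) < 2:
        raise ValueError("need at least two replicates per run to estimate a standard deviation")
    base_mean, post_mean = mean(baseline), mean(post_stress)
    base_sd, post_sd = stdev(baseline), stdev(post_stress)
    return {
        "baseline_mean": base_mean,
        "post_mean": post_mean,
        # A shift in the mean indicates bias introduced by the stress cycle.
        "bias": post_mean - base_mean,
        # A ratio above 1 indicates greater scatter, i.e. a loss of precision.
        "sd_ratio": post_sd / base_sd if base_sd > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Hypothetical carbon readings (mass %), for illustration only.
    carbon_before = [0.401, 0.399, 0.400, 0.402, 0.398]
    carbon_after = [0.410, 0.405, 0.415, 0.400, 0.420]
    print(compare_stress_runs(carbon_before, carbon_after))
```

Whether a given bias or standard-deviation ratio counts as "significant" still has to be decided against your own acceptance limits, for example the CRM's certified uncertainty.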

Workflow Visualization

The following diagram illustrates the logical workflow for diagnosing and addressing environmental alignment issues, as outlined in this guide.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Alignment and Verification Research

| Item | Function in Research Context |
|---|---|
| Certified Reference Materials (CRMs) | Provides a ground truth with known elemental composition to verify spectrometer accuracy and detect calibration drift caused by environmental stress [3]. |
| Handheld Optical Emission Spectrometer (OES) | The primary instrument under test for evaluating the robustness of alignment verification procedures in field conditions [18]. |
| Environmental Chamber | Allows for precise control and cycling of temperature and humidity to simulate various operating and storage conditions in a controlled laboratory setting [20]. |
| Calibration Standards | A set of certified materials used to establish the initial calibration curve for the spectrometer, against which any deviations can be measured [18]. |
| Vibration Test Equipment | Used to simulate the mechanical shocks and vibrations experienced during transportation and field use, quantifying their impact on alignment [18]. |


Step-by-Step Alignment Verification Procedures and Reference Standards

Selecting and Using Certified Reference Materials (CRMs) for Wavelength Verification

FAQs on Wavelength Verification and CRMs

1. What is wavelength verification, and why is it critical for handheld spectrometer data integrity?

Wavelength verification is the process of confirming that your spectrometer reports the correct wavelength for the light it measures. In handheld spectrometer alignment verification, it is the fundamental check of the instrument's baseline accuracy. Without it, all subsequent data collected for drug development or material analysis are suspect, because a shift in wavelength alignment can lead to incorrect material identification or quantitative results [21] [22].

2. How often should I perform wavelength verification on my spectrometer?

Verification should be performed regularly. The frequency depends on usage, the criticality of your measurements, and operational changes. It is explicitly required following any hardware maintenance or replacement, such as installing a new flow cell [22]. Furthermore, leading pharmacopoeias like the US Pharmacopeia (USP) and European Pharmacopoeia (EP) mandate periodic instrument qualification, which includes wavelength checks, to maintain compliance in regulated environments [21].

3. My wavelength verification test failed. What are the most common causes?

A verification failure indicates a discrepancy between the instrument's reported wavelength and the certified value of the reference material. Common causes include:

- Recent Hardware Changes: As noted in a Waters support article, a common culprit is replacing a component like the flow cell without performing a subsequent calibration [22].

- Optical Misalignment: Physical shocks or prolonged use can misalign the internal optics [3].

- Deteriorating Light Source: The performance of the instrument's lamp can degrade over time, affecting output [23].

- Use of an Incorrect or Unstable CRM: The reference material may be unsuitable for the verification or may have degraded [24].

4. Are there specific CRMs recommended for verifying wavelength accuracy in the far-UV range?

Yes. Holmium oxide solutions or filters are the most common CRMs for the 240-650 nm range, but verification in the far-UV (below 240 nm) requires different materials. For the far-UV range, a cerium oxide solution is recommended [24]. Specific qualification kits are available that extend verification down to 200 nm using references such as cerium cells [21].

Troubleshooting Guide: Wavelength Verification Failures

The table below outlines common symptoms, their potential causes, and recommended corrective actions.

| Symptom | Potential Cause | Corrective Action |
|---|---|---|
| Verification fails after flow cell replacement | Detector not calibrated post-maintenance [22] | Execute a full system calibration after any hardware change. Ensure the flow cell is filled with a transparent solvent like methanol or water [22]. |
| Consistent drift in elements measured at low (vacuum-UV) wavelengths (C, P, S) | Malfunctioning vacuum pump in optical emission spectrometers [3] | Check pump for leaks, noise, or overheating. Monitor readings for Carbon and Phosphorus as early indicators [3]. |
| General inaccurate or inconsistent analysis | Dirty optical windows or lenses [3] [23] | Clean the windows located in front of the fiber optic cable and in the direct light pipe according to manufacturer guidelines using approved materials [3]. |
| Poor signal intensity or noisy baseline | Contaminated sample or aging light source [3] [23] | Ensure samples are properly prepared and not contaminated by oils or coatings. Inspect and replace the lamp if it is near the end of its service life [3] [23]. |

Experimental Protocol: Executing a Wavelength Accuracy Verification

This protocol provides a detailed methodology for verifying the wavelength accuracy of a spectrophotometer using a holmium oxide CRM, as required by pharmacopoeial standards [21] [24].

1. Principle

The instrument's measured peak wavelengths for a holmium oxide solution are compared against its certified values. The difference between the measured and certified values must fall within the instrument's specified tolerance.

2. Research Reagent Solutions

| Item | Function in Protocol |
|---|---|
| Holmium Oxide CRM (Solution or Filter) | Certified reference material with known absorption peaks used to qualify wavelength accuracy across the UV-Vis range (e.g., 240-650 nm) [21] [24]. |
| Spectrophotometer Cuvettes | High-quality, matched cuvettes for holding the reference solution. |
| Lint-Free Wipes | For handling and cleaning optical components without introducing scratches or contaminants [23]. |

3. Procedure

- Instrument Preparation: Power on the spectrophotometer and allow it to warm up for the time specified by the manufacturer to ensure signal stability.

- Baseline Correction: Perform a baseline correction with a blank solvent in the light path.

- CRM Measurement: Place the holmium oxide CRM (in a cuvette or filter holder) in the sample compartment.

- Spectral Acquisition: Acquire an absorption spectrum across the applicable range (e.g., 240-650 nm).

- Peak Identification: Use the instrument's software to identify the measured wavelength of key holmium oxide peaks (e.g., 241.0 nm, 287.5 nm, 361.5 nm, 536.0 nm, etc.).

- Calculation: For each certified peak, calculate the difference: Δλ = Measured Wavelength - Certified Wavelength.

- Acceptance Criteria: The absolute value of Δλ for each peak must not exceed the tolerance specified for your instrument (e.g., 0.5 nm for UV/Vis, 1.0 nm for PDA detectors).
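The calculation and acceptance steps can be expressed as a short Python check. This is a sketch, assuming measured and certified peak positions are supplied in matching order; the function name is hypothetical, and the peak values used to exercise it are the illustrative ones from the procedure, so substitute the values printed on your CRM certificate.

```python
def check_wavelength_accuracy(measured_nm, certified_nm, tolerance_nm):
    """Return per-peak (certified, delta, passed) tuples and an overall pass flag.

    measured_nm, certified_nm: sequences of peak positions in the same order.
    tolerance_nm: instrument tolerance from the specification (e.g. 0.5 for UV/Vis).
    """
    if len(measured_nm) != len(certified_nm):
        raise ValueError("measured and certified lists must be the same length")
    results = []
    for meas, cert in zip(measured_nm, certified_nm):
        delta = meas - cert  # delta-lambda = measured - certified
        results.append((cert, delta, abs(delta) <= tolerance_nm))
    all_pass = all(passed for _, _, passed in results)
    return results, all_pass


if __name__ == "__main__":
    # Hypothetical measured values against the illustrative peak list.
    rows, ok = check_wavelength_accuracy(
        [241.2, 287.3, 361.9, 536.1], [241.0, 287.5, 361.5, 536.0], tolerance_nm=0.5
    )
    for cert, delta, passed in rows:
        print(f"{cert:7.1f} nm  delta = {delta:+.2f} nm  {'PASS' if passed else 'FAIL'}")
    print("Overall:", "PASS" if ok else "FAIL")
```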

Wavelength Verification Workflow

The following diagram illustrates the logical workflow for diagnosing and resolving a wavelength verification failure, incorporating steps from the troubleshooting guide and experimental protocol.

Holmium Oxide and other NIST-Traceable Standards for UV-Vis Alignment

Why is wavelength calibration critical for handheld spectrometer data integrity?

Accurate wavelength calibration is the foundation of reliable spectroscopic data. For handheld spectrometers used in field and quality control settings, verification ensures that measurements like absorbance peaks are recorded at their true wavelengths, which is vital for material identification, quantification, and meeting regulatory requirements in drug development [25]. Proper calibration directly impacts the validity of your alignment verification procedure.

Troubleshooting FAQs

Q1: My handheld spectrometer's reading for the holmium oxide 241.5 nm peak is consistently offset. What could be wrong?

This is a common issue. First, confirm that your holmium oxide standard is certified for the 241.5 nm band, as some filters have a less distinct or absent peak at this wavelength due to variations in the base glass composition [26]. If the standard is valid, the offset likely originates from your instrument.

- Check Instrument Parameters: Ensure the spectral bandwidth (SBW) of your measurement does not exceed 2 nm. Larger bandwidths can cause errors in locating the minimum transmittance due to band asymmetry [26]. Consult your instrument manual for setting a narrower slit width.

- Environmental Factors: While holmium oxide glass is robust and relatively insensitive to normal temperature and humidity ranges, extreme conditions can potentially affect performance [26]. Allow the instrument and standard to acclimate to the laboratory environment.

- Service Required: If the above steps don't resolve the issue and the offset is consistent across multiple peaks, the wavelength drive of your spectrometer may need mechanical adjustment by a qualified service technician [25].

Q2: How can I achieve NIST-traceable calibration below 230 nm if my holmium oxide standard's lowest certified peak is at 241.5 nm?

You can extend traceability to lower wavelengths by using a combination of standards. One established method involves:

- Primary Calibration with Holmium Oxide: First, calibrate your detector using a holmium oxide standard as per the instructions, typically for wavelengths at 241 nm and above [27].

- Secondary Calibration with Caffeine: Next, use a caffeine solution standard. Determine its wavelength bands at 205 nm and 273 nm [27].

- Establishing Traceability: The caffeine band at 273 nm overlaps with the official NIST range established by the holmium oxide. Once your detector is verified at 273 nm, the 205 nm band becomes NIST-traceable by association, allowing you to qualify your detector down to 205 nm [27].

Q3: What does it mean for a standard to be "NIST-traceable," and who is responsible for this claim?

The International Vocabulary of Metrology defines metrological traceability as the "property of a measurement result whereby the result can be related to a reference through a documented unbroken chain of calibrations, each contributing to the measurement uncertainty" [28].

- NIST's Role: NIST provides calibrations and reference materials that are themselves traceable to national standards. They also provide tools and technical information to support the establishment of traceability [28].

- Your Responsibility: The provider of a measurement result (in this case, your laboratory) is responsible for supporting any claim of traceability. This is done by documenting the unbroken chain of calibrations from your working standard (your holmium oxide filter) back to a NIST standard, often through the certificate provided by your supplier [28] [25]. Assessing the validity of such a claim is the responsibility of the users of your data (e.g., regulators, clients).

Experimental Protocol: Wavelength Accuracy Verification with a Holmium Oxide Glass Filter

This protocol provides a step-by-step methodology for verifying the wavelength scale of a UV-Vis spectrophotometer, including handheld spectrometers, using a holmium oxide glass filter.

Scope and Application

This procedure is applicable to verifying the wavelength accuracy of UV-Vis spectrophotometers over the range of 241 nm to 641 nm. It is a key component of a spectrometer alignment verification procedure [26] [25].

Required Materials and Equipment

- Handheld or benchtop UV-Vis Spectrophotometer

- NIST-Traceable Holmium Oxide Glass Wavelength Standard [25]

- Lint-free cloth and lens cleaning solution

Step-by-Step Procedure

- Instrument Preparation: Turn on the spectrometer and allow it to warm up for the time specified by the manufacturer. Set the instrument parameters to a spectral bandwidth of ≤ 2 nm and a moderate scan speed [26].

- Standard Handling: Using lint-free gloves, carefully remove the holmium oxide glass filter from its protective case. Inspect the glass surfaces for fingerprints or dust; clean gently if necessary.

- Blank Measurement: Perform a baseline correction or blank measurement with an empty compartment (or as recommended for the filter type).

- Sample Measurement: Place the holmium oxide filter squarely in the light path of the spectrometer. Initiate a transmission or absorbance scan from approximately 220 nm to 650 nm.

- Peak Identification: From the resulting spectrum, identify the wavelength value (in nm) at the point of minimum transmittance (absorbance maximum) for each major peak. The workflow for this verification process is outlined below.
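For the peak-identification step, if your software exports only the sampled spectrum, the transmittance minimum can be located between data points. The sketch below uses three-point parabolic interpolation around the lowest sample, a common peak-refinement technique that is an assumption here rather than a method specified by the cited sources. The function name and the search-window parameter are hypothetical, and the code assumes uniformly spaced, ascending wavelengths.

```python
def find_transmittance_minimum(wavelengths_nm, transmittance, expected_nm, window_nm=2.0):
    """Locate a transmittance minimum near expected_nm with 3-point parabolic refinement.

    Assumes wavelengths_nm is ascending and uniformly spaced.
    """
    # Indices of samples inside the search window around the expected band.
    idx = [i for i, w in enumerate(wavelengths_nm) if abs(w - expected_nm) <= window_nm]
    if not idx:
        raise ValueError("no samples inside the search window")
    i = min(idx, key=lambda k: transmittance[k])  # lowest sampled transmittance
    if i == 0 or i == len(wavelengths_nm) - 1:
        return wavelengths_nm[i]  # cannot refine at the array edge
    y0, y1, y2 = transmittance[i - 1], transmittance[i], transmittance[i + 1]
    denom = y0 - 2.0 * y1 + y2
    if denom == 0:
        return wavelengths_nm[i]  # flat neighbourhood: no curvature to fit
    step = wavelengths_nm[i + 1] - wavelengths_nm[i]
    # Vertex of the parabola through the three points, in units of the sample step.
    offset = 0.5 * (y0 - y2) / denom
    return wavelengths_nm[i] + offset * step
```

Parabolic refinement is exact only for a locally quadratic band shape; for the asymmetric bands that appear at wider spectral bandwidths it is an approximation, which is one more reason to keep the bandwidth at or below 2 nm.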

Data Analysis and Acceptance Criteria

- Compile Data: Record the measured wavelength for each certified peak from your scan.

- Determine Tolerance: Consult your spectrophotometer's manufacturer manual to find the specified wavelength accuracy tolerance (e.g., ± 0.5 nm). Add this manufacturer's tolerance to the expanded uncertainty (typically 0.2 nm, k=2) of the holmium oxide standard [26] [25].

- Compare Values: For each peak, check that the measured value falls within the combined tolerance range of the certified value. Certified values for holmium oxide glass are listed in the table below.

Table 1: Certified Wavelengths for Holmium Oxide Glass Filter (Spectral Bandwidth ≤ 2 nm) [26]

| Band Number | Certified Wavelength (nm) | Expanded Uncertainty (nm, k=2) |
|---|---|---|
| 1 | 241.5 | ± 0.2 |
| 2 | 279.3 | ± 0.2 |
| 3 | 287.6 | ± 0.2 |
| 4 | 333.8 | ± 0.2 |
| 5 | 360.8 | ± 0.2 |
| 6 | 385.8 | ± 0.2 |
| 7 | 418.5 | ± 0.2 |
| 8 | 453.4 | ± 0.2 |
| 9 | 459.9 | ± 0.2 |
| 10 | 536.4 | ± 0.2 |
| 11 | 637.5 | ± 0.2 |
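The combined-tolerance comparison described under Data Analysis can be sketched as follows, using the certified band positions and the 0.2 nm expanded uncertainty from Table 1. The function and constant names are hypothetical, and the instrument tolerance must come from your own manufacturer's specification.

```python
# Certified band positions (nm) for holmium oxide glass, SBW <= 2 nm, from Table 1.
CERTIFIED_HO_GLASS_NM = [241.5, 279.3, 287.6, 333.8, 360.8, 385.8,
                         418.5, 453.4, 459.9, 536.4, 637.5]
STANDARD_U_NM = 0.2  # expanded uncertainty of the standard, k = 2


def evaluate_holmium_scan(measured_nm, instrument_tol_nm, standard_u_nm=STANDARD_U_NM):
    """Compare measured band positions with certified values using a combined tolerance.

    measured_nm: dict mapping certified wavelength -> measured wavelength
                 (include only the bands you actually located).
    Combined tolerance = manufacturer's tolerance + expanded uncertainty of the standard.
    """
    combined = instrument_tol_nm + standard_u_nm
    report = {}
    for cert, meas in measured_nm.items():
        if cert not in CERTIFIED_HO_GLASS_NM:
            raise KeyError(f"{cert} nm is not a certified band in Table 1")
        delta = meas - cert
        report[cert] = (delta, abs(delta) <= combined)
    return combined, report
```

The scan passes only if every entry in `report` is flagged `True`; a single out-of-tolerance band is enough to trigger the Fail branch below.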

Interpretation of Results

- Pass: If all measured peaks are within the calculated tolerance, the wavelength scale of your instrument is verified and acceptable for use.

- Fail: If any peaks fall outside the tolerance, consult a qualified service technician to adjust and recalibrate the instrument. Document the out-of-specification result and the subsequent corrective actions [25].

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Materials for UV-Vis Spectrophotometer Wavelength Verification

| Item | Function & Description |
|---|---|
| Holmium Oxide Glass Filter | Primary solid wavelength standard. Used to verify wavelength scale accuracy across the UV-Vis range (241-641 nm) via its sharp, stable absorption peaks [26] [25]. |
| Holmium Oxide Solution (in Perchloric Acid) | Liquid primary wavelength standard. Provides similar functionality to the glass filter but may exhibit slightly different band positions; not to be used interchangeably with glass certified values [26]. |
| Caffeine Solution Standard | Secondary standard used in combination with holmium oxide to extend NIST-traceable wavelength calibration down to 205 nm [27]. |
| Neutral Density Filters | Used for calibrating the transmittance (photometric) scale of the spectrophotometer. Note: Holmium oxide standards should not be used for transmittance scale calibration [26]. |


### Safety Precautions

Why are specific safety protocols necessary for this alignment procedure? This procedure involves the use of a Class 3B or Class 4 laser [29] [30]. Laser radiation from these classes is powerful enough to cause serious and permanent eye injury and skin burns [30]. The safety protocols below are mandatory to mitigate these risks.

- Laser Protective Eyewear: All personnel in the lab must wear laser safety goggles that are optical density (OD) rated for the specific wavelength of the alignment laser [29] [30]. Eyewear must be clearly labeled with its wavelength and OD [29].

- Controlled Access: The laser work area must be restricted to authorized, trained personnel only [30].

- Beam Path Management: The laser beam path must be enclosed wherever possible and kept above or below eye level to minimize the risk of direct eye exposure [30].

- Warning Signs: Approved laser warning signs must be posted at all entrances to the lab when the laser is in use [29] [30].

- Training: All users must complete general laser safety training and equipment-specific training provided by the Principal Investigator or an experienced user before operating the laser [29].
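Eyewear optical density (OD) is a base-10 logarithmic attenuation: the fraction of laser power transmitted through the filter is T = 10^(-OD). The minimum OD for a given hazard is commonly computed as log10(H0 / MPE), where H0 is the worst-case exposure and MPE is the maximum permissible exposure. The sketch below assumes you already have H0 and the MPE in the same units for the laser's wavelength and exposure duration, taken from ANSI Z136.1 or supplied by your Laser Safety Officer. The function names are hypothetical, and the sketch does not replace the eyewear selection made by your safety office.

```python
import math


def transmittance_from_od(od):
    """Fraction of incident laser power transmitted by a filter of optical density od."""
    return 10.0 ** (-od)


def required_od(exposure, mpe):
    """Minimum optical density so that the transmitted exposure does not exceed the MPE.

    exposure and mpe must be in the same units (e.g. W/cm^2 or J/cm^2) and apply to the
    same wavelength and exposure duration; obtain the MPE from ANSI Z136.1 or your LSO.
    """
    if exposure <= 0 or mpe <= 0:
        raise ValueError("exposure and MPE must be positive")
    # If the exposure is already below the MPE, no attenuation is required.
    return max(0.0, math.log10(exposure / mpe))
```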

### Materials and Equipment

Table: Essential Materials for Laser Alignment Procedure

| Item | Specification/Function |
|---|---|
| Laser Pointer | Low-power, visible wavelength (e.g., 635 nm or 650 nm red). Used as a collimated visual guide for the optical path. |
| Laser Safety Goggles | Optical Density (OD) rated for the specific wavelength of the laser pointer. Protects eyes from accidental exposure [29] [30]. |
| Beam Stops/Attenuators | Dense, opaque material (e.g., anodized aluminum). Safely blocks and absorbs the laser beam during setup and when not in use [30]. |
| Alignment Targets | Cards or papers with a precise crosshair or pinhole. Aids in visualizing and centering the beam through optical components. |
| Optical Breadboard or Table | A stable, vibration-damped surface. Ensures the optical path remains stable during and after alignment. |

### Step-by-Step Alignment Methodology

1. Preparation and Setup
   - Verify Safety: Ensure all personnel in the lab are wearing the correct laser safety eyewear [30]. Confirm that laser warning signs are displayed [29].
   - Clear Path: Remove all unnecessary tools, reflective objects, and optical components from the anticipated beam path.
   - Position Laser: Securely mount the laser pointer at the starting point of the intended optical path, which is typically at the entrance slit or the source position of the spectrometer.

2. Initial Beam Path Establishment
   - Define Optical Axis: Using the alignment targets, project the laser beam to define the primary optical axis of your system. The beam should be parallel to the table and at a standard height.
   - Align to First Component: Position the first optical component (e.g., a mirror or lens). Adjust the component's mounts until the laser beam's center is aligned with the component's optical center, as verified by a target.

3. Sequential Component Alignment
   - Iterative Alignment: Move to the next optical component in the path. Use an alignment target to center the beam on this component.
   - Verify Path: After each component is aligned, verify that the beam continues to propagate along the intended path to the final target point, which is typically the spectrometer's detector slit.
   - Final Target Verification: The alignment is complete when the laser beam is correctly centered on the final target without any obstructions or misalignments along the entire path.
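When adjusting mirror mounts during sequential alignment, it helps to remember that tilting a flat mirror by an angle θ rotates the reflected beam by 2θ (law of reflection), so the spot on a target at distance L moves by L·tan(2θ). The hypothetical helpers below estimate that displacement and, conversely, the tilt needed to remove an observed offset. They illustrate why small screw adjustments are recommended and are not tied to any particular mount.

```python
import math


def spot_displacement_mm(mirror_tilt_mrad, distance_mm):
    """Lateral beam displacement at a target after tilting a flat mirror.

    A tilt of theta rotates the reflected beam by 2*theta.
    """
    theta_rad = mirror_tilt_mrad / 1000.0  # mrad -> rad
    return distance_mm * math.tan(2.0 * theta_rad)


def tilt_to_correct_mrad(offset_mm, distance_mm):
    """Mirror tilt (mrad) that moves the spot by offset_mm at a target distance_mm away."""
    return 1000.0 * math.atan(offset_mm / distance_mm) / 2.0
```

For example, a 1 mrad tilt moves the spot by about 1 mm on a target 500 mm away, which is easily enough to walk the beam off a narrow slit.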

Workflow Diagram

### Troubleshooting Guide

Problem: The laser beam is not visible or is too dim.

- Potential Cause 1: Low battery in the laser pointer.

- Solution: Replace the laser pointer batteries with new ones.

- Potential Cause 2: Incorrect use of laser safety goggles blocking the specific wavelength.

- Solution: Verify that the laser safety goggles are rated for the correct wavelength and have an appropriate Optical Density (OD). Consult the Laser Safety Officer if unsure [29].

Problem: The beam path is unstable or drifts over time.

- Potential Cause 1: Loose mounting or mechanical instability.

- Solution: Check and tighten all mounts for the laser pointer and optical components. Ensure the system is on a vibration-damped table.

- Potential Cause 2: Thermal expansion in the lab.

- Solution: Allow the laser system to warm up for 15-30 minutes before critical alignment. Monitor lab temperature for significant fluctuations.

Problem: The beam does not reach the final target after sequential alignment.

- Potential Cause 1: Cumulative misalignment from multiple optical components.

- Solution: Work backwards from the point of failure. Return to the last component at which the beam was still correctly aligned, then re-align each subsequent component step by step.

- Potential Cause 2: A single component is significantly misaligned.

- Solution: Isolate the problem by checking the beam input and output for each component individually.

Problem: The spectrometer analysis results are inaccurate or unstable after alignment.

- Potential Cause 1: Contaminated optical surfaces (e.g., lenses, windows) [3].

- Solution: Inspect and clean all optical windows in the path, including those on the spectrometer itself, using approved materials and techniques [3].

- Potential Cause 2: Improper probe contact or argon contamination in the spectrometer (for certain spectrometer types) [3].

- Solution: Ensure the spectrometer's probe is making correct contact and that the argon supply is pure, as contaminated argon can lead to unstable results [3].

### Frequently Asked Questions (FAQs)

Q1: Can I use any visible laser pointer for this alignment? A: No. You must use a laser that is approved for your specific lab and application. The laser class (3B or 4) dictates the required safety controls. Always consult your Laser Safety Officer before introducing a new laser into the lab [29].

Q2: What should I do if the laser beam is accidentally exposed to someone's eye? A: Immediately turn off the laser. Seek medical attention without delay, even if no immediate symptoms are present. Report the incident to your supervisor and the Environmental Health & Safety department as required by your institution's emergency response protocol [29].

Q3: How often should I perform this alignment procedure? A: The frequency should be determined by the stability requirements of your experiment and the spectrometer's performance. It is recommended before starting a new series of experiments or if you suspect the optical path has been disturbed. Regular verification ensures data integrity in your research.

Q4: Where can I get laser safety training? A: Contact your institution's Environmental Health & Safety or Radiation Safety office. Laser worker safety training is required for all users of Class 3B and Class 4 lasers [29] [30].

### Compliance and Documentation

This procedure is designed to ensure compliance with the ANSI Z136.1 Standard for the Safe Use of Lasers and other relevant safety standards [29] [30]. All laser use must be registered with the appropriate safety office [29]. Principal Investigators are required to maintain records of laser equipment, safety inspections, and user authorizations [29] [30].

Frequently Asked Questions (FAQs)

1. What does "grating order" mean, and why is verifying it important?

The diffraction grating in your spectrometer splits incoming light into its constituent wavelengths, creating multiple replicated spectra known as orders. The first order is typically the brightest dispersed order and the one most commonly used for analysis. Verifying that the correct order (e.g., the first order) is directed onto the detector array is a critical alignment step [31]. If the wrong order reaches the detector, each pixel records a different wavelength than the calibration assumes, leading to inaccurate data and failed calibrations.

2. What are the symptoms of an improperly focused spectrum on the detector?

An out-of-focus spectrum on the detector array manifests through several clear symptoms in your data [32]:

- Very Noisy or Unstable Data: The signal appears erratic, with a poor signal-to-noise ratio.

- Low Signal Intensity: The overall signal is weak, which can cause absorbance readings to appear abnormally high (sometimes stuck at 3.0) or fail calibration altogether [32].

- Broadened or Distorted Peaks: Spectral features lose their sharpness, reducing the effective resolution of your instrument.

3. What tools do I need to perform this verification?

A successful verification requires a few key tools [31]:

- A Laser Pointer: Used as a coherent light source for initial rough alignment of the optical path.

- A Known Light Source: For final focusing and calibration. A neon or mercury lamp is ideal, as they emit sharp, well-defined spectral lines [31].

- Allen Wrenches/Drivers: Typically 2 mm and 4 mm, for making precise adjustments to the mirror and grating mounts [31].

- Power Meter (Optional but helpful): For quantitatively measuring the optical power reaching the detector to find the maximum, a technique used in automated alignment systems [33].

4. My spectrum is focused but the wavelengths are incorrect. Is this a grating order issue?

Yes, this is a classic sign of a grating order problem. If the grating is rotated to the wrong angle, it will direct a different spectral order (e.g., the second order instead of the first) onto the detector. This means the light detected at a given pixel corresponds to an entirely different wavelength than expected. You must adjust the grating rotation to select the correct order [31].
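The grating equation shows why order errors are so easy to miss. Assuming the common form mλ = d(sin α + sin β), with α the incidence angle, β the diffraction angle (both measured from the grating normal) and d the groove spacing, first-order light at λ and second-order light at λ/2 leave the grating at exactly the same angle and therefore reach the same pixel. The Python sketch below is illustrative; the function name is hypothetical and the 1200 grooves/mm and 10° incidence in the test are example values, not parameters of any particular instrument.

```python
import math


def diffraction_angle_deg(wavelength_nm, order, grooves_per_mm, incidence_deg):
    """Diffraction angle beta from the grating equation m*lambda = d*(sin(alpha) + sin(beta)).

    Angles are measured from the grating normal, positive on the same side.
    Returns None if the requested order does not propagate (|sin(beta)| > 1).
    """
    d_nm = 1e6 / grooves_per_mm  # groove spacing in nm
    s = order * wavelength_nm / d_nm - math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    b1 = diffraction_angle_deg(600.0, 1, 1200, 10.0)  # first order, 600 nm
    b2 = diffraction_angle_deg(300.0, 2, 1200, 10.0)  # second order, 300 nm
    print(f"600 nm (m=1): {b1:.3f} deg, 300 nm (m=2): {b2:.3f} deg")
```

Because the overlap is exact, the detector alone cannot tell the two orders apart, which is why a known line source (or an order-sorting filter) is needed to confirm which order is being measured.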

Troubleshooting Guide

Problem: The spectrum is not focused on the detector, resulting in a weak and noisy signal.

Solution: Perform a detailed alignment of the focusing mirror.

- Gain Access: Ensure you have clear physical access to the adjustment screws on the focusing mirror mount. You may need to remove a cover or the top half of the spectrometer [31].

- Introduce a Light Source: Use your known light source (e.g., a neon lamp) and observe the live output from the detector array in your software.

- Adjust the Mirror: Using the appropriate tools, gently adjust the screws on the focusing mirror mount. The goal is to center the brightest and sharpest image of the spectrum on the active area of the detector array [31].
  - Clockwise or counter-clockwise turns on the screws will translate the beam on the detector. The exact effect depends on your spectrometer's optical layout.
  - Make small, incremental adjustments and observe the change in signal intensity and sharpness.

- Verify Focus: The spectrum is correctly focused when the signal intensity is maximized and the spectral lines from your known source are at their narrowest.
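"Narrowest" can be made quantitative by tracking the full width at half maximum (FWHM) of one isolated emission line after each adjustment. The sketch below assumes your software can export wavelength and intensity arrays for a window around a single line; the function names are hypothetical, and the baseline is crudely taken as the window minimum.

```python
def _interp(x0, y0, x1, y1, y):
    """x at which the straight line through (x0, y0) and (x1, y1) reaches y."""
    if y1 == y0:
        return x0
    return x0 + (y - y0) * (x1 - x0) / (y1 - y0)


def fwhm(wavelengths_nm, intensity):
    """Estimate the FWHM of a single emission line by linear interpolation at half height.

    Assumes ascending wavelengths and one isolated line inside the window.
    """
    peak_i = max(range(len(intensity)), key=intensity.__getitem__)
    base = min(intensity)
    if intensity[peak_i] == base:
        raise ValueError("flat signal: no line to measure")
    half = base + (intensity[peak_i] - base) / 2.0

    # Walk left from the peak until the signal falls to half height or below.
    i = peak_i
    while i > 0 and intensity[i] > half:
        i -= 1
    if intensity[i] > half:
        raise ValueError("left half-maximum crossing not found")
    left = _interp(wavelengths_nm[i], intensity[i], wavelengths_nm[i + 1], intensity[i + 1], half)

    # Walk right from the peak in the same way.
    j = peak_i
    while j < len(intensity) - 1 and intensity[j] > half:
        j += 1
    if intensity[j] > half:
        raise ValueError("right half-maximum crossing not found")
    right = _interp(wavelengths_nm[j - 1], intensity[j - 1], wavelengths_nm[j], intensity[j], half)
    return right - left
```

In use, record the FWHM and the peak intensity after each small adjustment and keep the mirror position that gives the smallest width with the highest intensity.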

Problem: The correct grating order is not hitting the detector.

Solution: Adjust the rotational angle of the diffraction grating.

- Initial Check: With a laser pointer or white light source, observe where the diffracted light lands. You can use a blank piece of paper or the palm of your hand held near the detector port to see the beam [31].

- Locate the Grating Mount: Identify the mechanism that allows the grating to rotate. This may be a manual adjuster or a motorized stage.

- Find the Correct Order: Slowly rotate the grating while monitoring the detector's output. You are looking for the position where the most intense and clear spectrum appears. For a white light source, the correct first order should show a smooth continuum from red to violet [31]. For a laser, you will be looking for a specific, bright spot.

- Secure the Grating: Once the correct order is confirmed, securely fasten the grating in place.

This protocol outlines the manual procedure for verifying and aligning the grating order and focus, a critical step in handheld spectrometer verification research.

Objective: To ensure the desired diffraction order is correctly focused onto the detector array for optimal signal intensity and spectral fidelity.

Materials and Reagents:

- Assembled spectrometer prototype

- Laser pointer (e.g., 5 mW)

- Neon or mercury calibration lamp

- Set of Allen wrenches/drivers (e.g., 2 mm, 4 mm)

- Computer with spectrometer control and data acquisition software

Methodology:

- Safety First: Put on appropriate laser safety glasses before beginning alignment [31].

- Laser-based Path Verification:
  - With the top cover of the spectrometer removed, activate the laser pointer and direct its beam through the entrance slit [31].
  - Verify the beam is centered on the collimating mirror. Adjust the collimating mirror's screws to center the resulting horizontal line on the diffraction grating [31].
  - Observe the beam's reflection from the grating. The goal is to have the beam (representing the diffraction order) land on the center of the focusing mirror [31].

- Grating Order Selection:
  - Observe where the beam reflected from the grating lands. Manually rotate the grating until the beam is directed onto the focusing mirror [31].
  - For a more precise check, replace the laser with a known light source and rotate the grating while monitoring the software until a strong, well-defined spectrum appears.

- Final Focusing on the Detector:
  - With the known light source active and the correct grating order selected, observe the live spectral output in the software.
  - Using the adjustment screws on the focusing mirror mount, direct and focus the spectrum onto the center of the detector array. The adjustment is complete when the signal intensity is maximized and the spectral lines are sharp [31].

- Calibration and Validation:
  - Using the known light source, perform a wavelength calibration. The observed peaks should match the known emission lines of the source (e.g., a neon lamp has specific peaks at 540.1 nm, 585.2 nm, etc.) [13].
  - Record the final signal-to-noise ratio and resolution for your research records.
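The wavelength-calibration step is often implemented as a low-order polynomial fit from detector pixel index to wavelength, using the pixel positions of identified lamp lines. The NumPy sketch below assumes that approach (the default third-order polynomial is a common choice, not one specified by the cited sources). The function names are hypothetical, and the pixel positions must come from your own lamp spectrum.

```python
import numpy as np


def fit_pixel_to_wavelength(pixels, wavelengths_nm, degree=3):
    """Least-squares fit of wavelength = poly(pixel); returns coefficients and residuals (nm)."""
    pixels = np.asarray(pixels, dtype=float)
    wavelengths_nm = np.asarray(wavelengths_nm, dtype=float)
    if pixels.size <= degree:
        raise ValueError("need more identified lines than the polynomial degree")
    coeffs = np.polyfit(pixels, wavelengths_nm, degree)  # highest power first
    residuals = wavelengths_nm - np.polyval(coeffs, pixels)
    return coeffs, residuals


def pixel_to_wavelength(coeffs, pixel):
    """Apply a fitted calibration to one pixel index or an array of them."""
    return np.polyval(coeffs, pixel)
```

Inspecting the residuals is as important as the fit itself: a residual that is large for one line usually means that line was misidentified, while a systematic pattern across all lines suggests the polynomial degree is too low.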

Research Reagent Solutions

The following standards are essential for the precise calibration and validation of spectrometer alignment.

| Research Reagent | Function in Alignment Verification |
|---|---|
| Holmium Oxide Solution (4% Ho₂O₃ in 10% HClO₄) [13] | A stable liquid wavelength standard with multiple sharp absorption peaks (e.g., 241.5 nm, 287.5 nm) used to calibrate and verify the wavelength (x-axis) accuracy of UV-Vis spectrometers [13]. |
| Holmium Oxide Glass [13] | A solid glass filter containing holmium oxide, traceable to NIST SRM 2034. Used for the same purpose as the liquid standard, offering convenience and long-term stability for verifying spectrometer alignment [13]. |
| Neon or Mercury Calibration Lamp | Emits light at specific, well-defined wavelengths. These sharp emission lines are used to fine-tune the focus on the detector and perform the final wavelength calibration [31]. |
| NIST SRM 2036 [13] | A reflectance standard glass with certified reflectance bands in the visible and NIR regions. Useful for verifying the alignment and wavelength accuracy of spectrometers configured for diffuse reflectance measurements [13]. |
| Polystyrene Film [13] | A thin polymer film that provides a well-characterized transmission spectrum with specific peaks in the infrared and near-infrared regions, serving as a common wavenumber standard for NIR and IR spectrometers [13]. |

Workflow Visualization

Figure: Troubleshooting Grating Order and Focus (decision-making and action workflow for grating order and focus issues).

Wavelength Calibration Reference Data

The following tables summarize key specifications for common calibration lamps, which are essential for verifying the wavelength accuracy of handheld spectrometers.

| Lamp Model | Lamp Type | Operating Current | Rated Life (Hours) | Power Supply Model (115 VAC) |
|---|---|---|---|---|
| 6032 | Neon | 10 ±4 mA | 250 | 6045 |
| 6035 | Mercury-Argon (Hg(Ar)) | 18 ±5 mA | 5000 | 6047 |
| 6034 | Mercury-Neon (Hg(Ne)) | 18 ±5 mA | 500 | 6047 |

| Lamp Type | Output Range | Optical Power (in 600 µm fiber) | Warm-up Time | Lamp Lifetime |
|---|---|---|---|---|
| Neon (Ne) | 337 - 1084.5 nm | 1.6 µW | 1 minute | 5000 hours |
| Mercury-Argon (HgAr) | 253.6 - 922.5 nm | 1.6 µW | 1 minute | 5000 hours |

Troubleshooting Guides & FAQs

Lamp Operation and Setup

Q: My calibration lamp does not turn on. What should I check? A: First, verify the power supply. Ensure the unit is connected to the correct, compatible power source (e.g., 12 VDC for Mini models [34] or a specific AC power supply for pencil-style lamps [35]). Check all connections, including the SMA-905 fiber optic connector, to ensure they are secure.

Q: How long does a calibration lamp typically last? A: Lamp lifetime varies by model and gas type. For instance, Mercury-Argon lamps can last 5000 hours, whereas Neon lamps may have a rated life of 250 to 5000 hours depending on the specific design and operating current [34] [35]. Always refer to the manufacturer's specifications for your specific model.

Q: Why is warm-up time important for calibration lamps? A: Warm-up time allows the gas and vapor pressure inside the lamp to stabilize, so that the emission line intensities become steady; the line wavelengths themselves are fixed by the atomic transitions of the fill gas. A typical warm-up time is about one minute for Neon and Mercury-Argon lamps to achieve vapor stabilization [34].

Data Quality and Accuracy

Q: During calibration, my spectrometer is detecting emission lines, but the reported wavelengths are consistently incorrect. What is the likely cause? A: This indicates a wavelength accuracy error in your spectrometer. Your calibration source is functioning, but the spectrometer's internal "ruler" is misaligned. Follow the calibration procedure in your spectrometer's software to map the detected known lines (e.g., from Neon or Mercury-Argon) to their true values. This corrects the instrument's wavelength assignment [36].

Q: The intensity of the calibration lines seems low and unstable. How can I troubleshoot this? A: Unstable readings can often be traced to the sample path, even for calibration sources. First, confirm the instrument and lamp are fully warmed up. Then, inspect and thoroughly clean the windows in front of the fiber optic and in the direct light pipe, as a dirty window can cause drift and poor analysis readings [3]. Also, ensure the fiber optic connection is clean and secure.

Q: After calibration, my analysis results on identical samples are inconsistent. What could be wrong? A: Inconsistent results on the same sample often point to an issue with the calibration itself or with the instrument's photometric accuracy. Ensure your calibration standards are clean and not contaminated. We recommend preparing the sample by grinding or machining it flat and following the software's recalibration sequence precisely, without deviation. The relative standard deviation (RSD) for repeated analyses should not exceed 5% [3].
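The repeatability criterion above can be checked directly from repeated results on one sample. This is a minimal sketch using only the standard library; it treats the RSD limit of 5 as a percentage (an assumption about the cited source's units), and the function names are illustrative.

```python
import statistics


def relative_std_dev(values):
    """Percent RSD: sample standard deviation divided by the mean, times 100."""
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("RSD is undefined for a zero mean")
    return statistics.stdev(values) / abs(mean) * 100.0


def repeatability_ok(values, limit_percent=5.0):
    """True if repeated analyses of the same sample stay within the RSD limit (5 % assumed)."""
    return relative_std_dev(values) <= limit_percent


# Hypothetical repeated results for one element on the same sample.
readings = [10.0, 10.2, 9.8]
print(f"RSD = {relative_std_dev(readings):.2f} %, pass = {repeatability_ok(readings)}")
```

The sample standard deviation (n - 1 denominator) is used because repeated analyses are a small sample of the instrument's behaviour.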

Experimental Protocol: Spectrometer Wavelength Calibration

This protocol details the methodology for verifying and calibrating the wavelength axis of a handheld spectrometer using Neon and Mercury-Argon calibration lamps.

Objective: To verify and correct the wavelength accuracy of a handheld spectrometer by aligning detected emission peaks to the known wavelengths of a calibration source.

Principle: Spectrometers assign wavelengths to pixels based on an internal model. This model can drift. By measuring a light source with known, sharp emission lines and performing a linear regression (Peak Detected vs. Peak Known), a correction function can be applied.
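The regression in the principle above can be sketched in a few lines. This assumes numpy and a simple first-order (slope and offset) correction of the reported wavelengths; the instrument software may use a different or higher-order model, and the detected values below are hypothetical.

```python
import numpy as np


def fit_linear_correction(detected_nm, known_nm):
    """Fit known = slope * detected + offset by least squares.

    Returns the coefficients and a function that applies the correction
    to any array of wavelengths reported by the instrument.
    """
    slope, offset = np.polyfit(np.asarray(detected_nm, dtype=float),
                               np.asarray(known_nm, dtype=float), 1)

    def correct(wavelengths_nm):
        # Apply the fitted linear correction to reported wavelengths.
        return slope * np.asarray(wavelengths_nm, dtype=float) + offset

    return slope, offset, correct


# Hypothetical readings: the Mercury-Argon lines listed in this protocol,
# reported about 0.4 nm too long by a drifted instrument.
known = [253.6, 435.8, 546.1]
detected = [254.0, 436.2, 546.5]
slope, offset, correct = fit_linear_correction(detected, known)
print(f"slope = {slope:.6f}, offset = {offset:.4f} nm")
```

A slope close to 1 with a non-zero offset indicates a simple shift of the wavelength axis; a slope that departs from 1 indicates a change in dispersion across the range.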

Materials:

- Handheld spectrometer with fiber optic input

- Neon or Mercury-Argon calibration light source (e.g., AvaLight-CAL series [34])

- SMA-905 terminated fiber optic cable

- Spectrometer operating software (e.g., software with automatic recalibration procedure [34])

- Computer

Step-by-Step Procedure:

System Setup: Connect the calibration lamp to the spectrometer's fiber optic input using the SMA-905 connector. Power on the calibration lamp and allow it to warm up for at least one minute to ensure vapor stabilization and output stability [34].

Data Acquisition: Initiate data collection in the spectrometer software. Expose the spectrometer to the calibration lamp and acquire a spectrum. Ensure the signal intensity is within the linear range of the detector (not saturated).
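A quick exposure check before calibrating helps avoid fitting clipped peaks. The sketch below assumes numpy and a 16-bit detector (full scale 65535); the 90 % and 10 % limits are hypothetical placeholders for the linear range stated by your manufacturer.

```python
import numpy as np


def check_exposure(counts, full_scale=65535, high_fraction=0.9, low_fraction=0.1):
    """Classify a raw spectrum's exposure from its peak count.

    full_scale: detector maximum (65535 assumes a 16-bit ADC).
    high_fraction / low_fraction: hypothetical limits for a usable linear range.
    """
    peak = float(np.max(counts))
    if peak >= full_scale:
        return "saturated"  # at least one pixel is clipped; reduce integration time
    if peak > high_fraction * full_scale:
        return "too_high"   # close to full scale, likely outside the linear range
    if peak < low_fraction * full_scale:
        return "too_low"    # weak lines; increase integration time or averaging
    return "ok"
```

Only the strongest line needs to stay below the upper limit, so adjusting integration time until the check returns `"ok"` is usually enough.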

Peak Identification: The software will automatically identify the prominent peaks in the captured spectrum. Alternatively, manually identify key peaks. For a Mercury-Argon lamp, primary lines include 253.6 nm, 435.8 nm, and 546.1 nm. For a Neon lamp, primary lines include 540.1 nm, 585.2 nm, and 703.2 nm [34].
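If peaks need to be identified manually, they can be located and paired with reference lines in a simple way. This is a minimal sketch, assuming numpy, a dark-subtracted spectrum, and the instrument's current (possibly drifted) wavelength axis; it uses a basic local-maximum search with an intensity-weighted centroid rather than any particular vendor's peak-finding algorithm, and the height and tolerance values are illustrative.

```python
import numpy as np


def find_local_maxima(y, min_height):
    """Indices of local maxima at or above min_height (strictly rising on the left)."""
    y = np.asarray(y, dtype=float)
    core = y[1:-1]
    mask = (core > y[:-2]) & (core >= y[2:]) & (core >= min_height)
    return np.nonzero(mask)[0] + 1


def centroid(y, i, half_width=2):
    """Intensity-weighted sub-pixel position of the peak around pixel i (assumes dark-subtracted data)."""
    lo, hi = max(i - half_width, 0), min(i + half_width + 1, len(y))
    pixels = np.arange(lo, hi)
    weights = np.asarray(y[lo:hi], dtype=float)
    return float(np.sum(pixels * weights) / np.sum(weights))


def detect_and_match(wavelength_axis, counts, reference_nm, min_height, tolerance_nm=2.0):
    """Return (pixel, detected_nm, reference_nm) for each reference line found within tolerance."""
    counts = np.asarray(counts, dtype=float)
    peaks_px = np.array([centroid(counts, i) for i in find_local_maxima(counts, min_height)])
    if peaks_px.size == 0:
        return []
    # Convert sub-pixel positions to nm using the instrument's current wavelength axis.
    peaks_nm = np.interp(peaks_px, np.arange(len(counts)), wavelength_axis)
    matches = []
    for ref in reference_nm:
        k = int(np.argmin(np.abs(peaks_nm - ref)))
        if abs(peaks_nm[k] - ref) <= tolerance_nm:
            matches.append((float(peaks_px[k]), float(peaks_nm[k]), ref))
    return matches
```

The tolerance should be wide enough to cover the expected drift but narrower than the spacing between neighbouring reference lines, otherwise a line can be paired with the wrong peak.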

Calibration Execution: In the spectrometer software, initiate the "automatic recalibration procedure". The software will map the detected peak positions (in pixels) to the known, standard wavelengths of the lamp and compute a new wavelength calibration function.
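The pixel-to-wavelength mapping computed in this step is often a low-order polynomial. The sketch below fits one with numpy's `numpy.polynomial.polynomial` module; the third-order default is an assumption (many array spectrometers use a polynomial of this kind, but your software's model may differ), and at least one more matched line than coefficients is required so that the residuals are meaningful.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def fit_pixel_to_wavelength(pixels, reference_nm, order=3):
    """Least-squares polynomial mapping pixel -> wavelength.

    Returns coefficients (lowest order first) and the residuals in nm.
    order=3 is an assumption; match it to your instrument's calibration model.
    """
    pixels = np.asarray(pixels, dtype=float)
    ref = np.asarray(reference_nm, dtype=float)
    if pixels.size < order + 2:
        # Need more lines than coefficients so the residuals mean something.
        raise ValueError(f"need at least {order + 2} matched lines for order {order}")
    coeffs = P.polyfit(pixels, ref, order)
    residuals = ref - P.polyval(pixels, coeffs)
    return coeffs, residuals


def wavelength_axis(n_pixels, coeffs):
    """Evaluate the fitted polynomial at every pixel index 0 .. n_pixels - 1."""
    return P.polyval(np.arange(n_pixels), coeffs)
```

Lines spread across the whole detector constrain the fit best; extrapolating well beyond the outermost calibration line is where a cubic model is least reliable.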

Validation: After calibration, measure the calibration lamp again. Verify that the known emission lines now appear at their correct wavelengths within the manufacturer-specified tolerance for your instrument.
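The validation step reduces to comparing each re-measured line with its reference wavelength. This is a minimal sketch in plain Python; the measured values are hypothetical, and the 0.5 nm tolerance is a placeholder for the manufacturer-specified accuracy of your instrument.

```python
def validate_wavelengths(measured_nm, reference_nm, tolerance_nm):
    """Compare re-measured line positions with their reference wavelengths.

    Returns (passed, errors), where errors[i] = measured - reference in nm.
    tolerance_nm should be the manufacturer-specified accuracy for your instrument.
    """
    if len(measured_nm) != len(reference_nm):
        raise ValueError("measured and reference lists must have the same length")
    errors = [m - r for m, r in zip(measured_nm, reference_nm)]
    passed = all(abs(e) <= tolerance_nm for e in errors)
    return passed, errors


# Neon lines listed in this protocol, with hypothetical post-calibration readings.
passed, errors = validate_wavelengths([540.2, 585.1, 703.3], [540.1, 585.2, 703.2], tolerance_nm=0.5)
print(passed, [round(e, 2) for e in errors])
```

Keeping the signed errors, not only the pass/fail result, makes slow drift visible when successive validation records are compared.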

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Wavelength Calibration

| Item | Function & Application | Key Characteristics |
|---|---|---|
| Neon Calibration Lamp | Provides known emission lines for wavelength calibration in the visible to NIR range (e.g., 337-1084.5 nm). Used to verify spectrometer accuracy [34]. | Narrow, discrete emission lines; long lifetime (e.g., 5000 hours); low optical power (e.g., 1.6 µW) [34]. |
| Mercury-Argon (HgAr) Calibration Lamp | Provides a broad set of known emission lines from UV to NIR (e.g., 253.6-922.5 nm). Ideal for wide-range calibration [34]. | Intense UV lines; used for initial and periodic calibration; requires specific power supply [34] [35]. |
| SMA-905 Fiber Optic Cable | Connects the calibration lamp to the spectrometer, guiding the light for analysis. | Standard connector for easy integration; ensures consistent light delivery [34]. |
| NIST-Traceable Calibration Standards | Certified reference materials (like holmium oxide filters) provide an independent verification of wavelength accuracy post-calibration [36]. | Documented, certified values with an unbroken chain of comparisons to national standards; essential for audits [36]. |
| Lint-Free Wipes & Powder-Free Gloves | Used to clean optical components (e.g., spectrometer windows, calibration standards) without introducing contamination [3] [36]. | Prevents scratches and oil contamination that can cause calibration drift and inaccurate analysis [36]. |


Software-Assisted Calibration and Automated Sequence Protocols

Troubleshooting Guides

Calibration Failures and Error Resolution

Problem: Wavelength Accuracy Check Fails

- Symptoms: The measured peak wavelengths of a certified standard (e.g., holmium oxide filter) do not match the certified values within the specified tolerance [37].

- Potential Causes & Solutions:

- Expired Standard: Verify the calibration certificate of your standard has not expired. Replace if necessary [37].

- Contaminated Standard: Clean the calibration standard thoroughly using lint-free wipes and ensure it is handled with powder-free gloves to avoid oil contamination [37].

- Instrument Drift: Spectrometers naturally drift over time due to environmental effects. Re-run the wavelength calibration procedure to correct for this drift [38].

- Mechanical Fault: If the above steps fail, the instrument may have an internal mechanical issue requiring service by a qualified technician [37].

Problem: Photometric Accuracy Check Fails

- Symptoms: When measuring a certified neutral density filter, the recorded absorbance or reflectance value is outside the acceptable range (e.g., reading 0.515 AU on a 0.500 AU standard) [37].

- Potential Causes & Solutions:

- Contaminated Standard: This is the most common cause. Meticulously clean the photometric standard [37].

- Unstable Readings: Ensure the instrument has been powered on and allowed to warm up for the manufacturer-recommended time. Re-clean the sample and standard, and ensure the operating environment is stable [37].

- Stray Light: Perform a stray light check. Stray light (unwanted light reaching the detector, for example through scattering within the optics or internal light leaks) can cause inaccurate photometric readings, especially at high absorbance levels [37].
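Why stray light matters most at high absorbance can be shown with a standard simplified model: if a fraction s of the reference signal reaches the detector as stray light that the sample does not attenuate, the observed transmittance is T_obs = (T + s) / (1 + s). The sketch below applies that model; the 0.1 % stray-light level is a hypothetical example, not a specification.

```python
import math


def apparent_absorbance(true_absorbance, stray_fraction):
    """Absorbance reported when stray light reaches the detector.

    Simplified model: T_obs = (T + s) / (1 + s), where s is the stray light
    as a fraction of the reference (blank) signal and is assumed to be
    unaffected by the sample. Returns A_obs = -log10(T_obs).
    """
    t = 10.0 ** (-true_absorbance)
    t_obs = (t + stray_fraction) / (1.0 + stray_fraction)
    return -math.log10(t_obs)


# Hypothetical 0.1 % stray light: the absorbance error grows with sample absorbance.
for a in (0.5, 1.0, 2.0, 3.0):
    print(f"true A = {a:.1f}  apparent A = {apparent_absorbance(a, 0.001):.3f}")
```

In this model stray light always makes readings appear lower than the true absorbance, so a reading that is too high on a standard points to another cause, such as contamination.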

Problem: Unstable or Drifting Readings During Automated Sequence

- Symptoms: Measurements are not repeatable, and the signal fluctuates over time during a calibration run.

- Potential Causes & Solutions:

- Insufficient Warm-up Time: Confirm the instrument's light source and electronics have stabilized by allowing adequate warm-up time before starting sequences [37].

- Environmental Fluctuations: Check for drafts, temperature swings, or humidity changes near the instrument. Provide a stable operating environment [37].

- Hardware Connection: Inspect cables and connections for looseness or damage as part of routine preventative maintenance [37].
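Rather than relying on a fixed warm-up delay, an automated sequence can wait until the signal itself has settled. This is a minimal sketch in plain Python; `read_signal` stands for whatever function returns one reading from your instrument (a hypothetical hook), and the window size, 0.5 % stability limit, and timeout are illustrative values to be tuned.

```python
import statistics
import time
from collections import deque


def is_stable(readings, window=10, max_rel_change=0.005):
    """True when the last `window` readings span less than max_rel_change of their mean."""
    if len(readings) < window:
        return False
    recent = list(readings)[-window:]
    mean = statistics.mean(recent)
    if mean == 0:
        return False
    return (max(recent) - min(recent)) / abs(mean) <= max_rel_change


def wait_until_stable(read_signal, window=10, max_rel_change=0.005,
                      interval_s=1.0, timeout_s=600.0):
    """Poll read_signal() until the signal is stable; return False if the timeout expires."""
    history = deque(maxlen=window)
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        history.append(read_signal())
        if is_stable(history, window, max_rel_change):
            return True
        time.sleep(interval_s)
    return False
```

Returning `False` on timeout, instead of proceeding, lets the sequence flag an unstable environment or a faulty connection rather than record drifting data.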

Software and Automation Protocol Issues

Problem: Automated Calibration Sequence Halts or Errors

- Symptoms: The software sequencer stops unexpectedly, fails to send commands to the instrument, or cannot read data.

- Potential Causes & Solutions:

- Communication Failure: Verify the physical connection (e.g., USB, RS-232) between the computer and the instrument. Check the software configuration for the correct COM port, baud rate, parity, data bits, and stop bits [39].

- SCPI Command Error: Review the script for the instrument's Standard Commands for Programmable Instruments (SCPI). Ensure commands are correct and formatted properly for your specific instrument model [39].

- Stabilization Time: The script may not be allowing enough time for the instrument to stabilize at each set point before taking a measurement. Increase the delay between sending a command and taking a reading [39].
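A sequencer that separates communication from timing makes these three failure modes easier to isolate. The sketch below assumes a line-based ASCII (SCPI-style) protocol and a transport object with `write(bytes)` and `readline()` methods, which a pySerial `serial.Serial` port provides (for example `serial.Serial("COM3", baudrate=9600, bytesize=8, parity="N", stopbits=1, timeout=2)`, where the port name and settings are hypothetical). `*IDN?` is the standard IEEE 488.2 identification query; the set-point commands and the `MEAS?` query are hypothetical placeholders for your instrument's command set.

```python
import time


def query(transport, command):
    """Send one ASCII command line and return the stripped reply ('' on timeout)."""
    transport.write((command + "\n").encode("ascii"))
    return transport.readline().decode("ascii").strip()


def run_sequence(transport, setpoint_commands, measure_query="MEAS?",
                 settle_s=2.0, sleep=time.sleep):
    """Send each set-point command, wait settle_s, then read one measurement.

    measure_query and the set-point commands are hypothetical; use the
    commands documented for your instrument model.
    """
    results = []
    for cmd in setpoint_commands:
        transport.write((cmd + "\n").encode("ascii"))
        sleep(settle_s)  # give the instrument time to stabilize before measuring
        reply = query(transport, measure_query)
        if not reply:
            raise TimeoutError(f"no reply after {cmd!r}; check port settings and cabling")
        results.append((cmd, reply))
    return results
```

Calling `query(port, "*IDN?")` before the sequence confirms the link and port settings; if it returns an empty string, the problem is communication rather than command syntax. Increasing `settle_s` addresses the stabilization case without touching the rest of the script.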

Problem: Model Transfer Between Instruments Yields Poor Results

- Symptoms: A calibration model developed on one spectrometer (the "master") performs poorly when applied to data from another spectrometer (the "slave"), even when measuring the same samples [40].

- Potential Causes & Solutions:

- Systematic Bias: Differences in optical components, gratings, or detectors create systematic spectral variations [40].

- Solution: Apply model transfer algorithms like Piecewise Direct Standardization (PDS) or Spectral Space Transformation (SST) to correct for these systematic differences and align the slave instrument's data with the master's model [40].

- Insufficient Transfer Standards: The set of standards used for the transfer may not adequately represent the spectral range or characteristics of your samples. Use a diverse and representative set of transfer standards [40].
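The idea behind Piecewise Direct Standardization can be sketched compactly: each master channel is predicted from a small window of neighbouring slave channels, using the transfer standards measured on both instruments. This simplified version assumes numpy, spectra as rows of equal-length arrays, and ordinary least squares for each window (published PDS implementations usually use PLS or PCR there); it does not implement SST.

```python
import numpy as np


def pds_fit(master, slave, half_window=2):
    """Simplified Piecewise Direct Standardization.

    master, slave: (n_standards, n_channels) spectra of the same transfer standards.
    For each master channel j, regress master[:, j] on slave channels
    j - half_window .. j + half_window (ordinary least squares here).
    Returns a banded transfer matrix F and bias b with master ~= slave @ F + b.
    """
    master = np.asarray(master, dtype=float)
    slave = np.asarray(slave, dtype=float)
    p = slave.shape[1]
    F = np.zeros((p, p))
    m_mean = master.mean(axis=0)
    s_mean = slave.mean(axis=0)
    for j in range(p):
        lo, hi = max(0, j - half_window), min(p, j + half_window + 1)
        X = slave[:, lo:hi] - s_mean[lo:hi]  # mean-centred slave window
        y = master[:, j] - m_mean[j]         # mean-centred master channel
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        F[lo:hi, j] = coef
    b = m_mean - s_mean @ F  # restores the offset removed by mean-centring
    return F, b


def pds_apply(slave_spectra, F, b):
    """Map new slave-instrument spectra into the master instrument's space."""
    return np.asarray(slave_spectra, dtype=float) @ F + b
```

The window width sets how large a wavelength shift between instruments can be absorbed; a transfer set with too few or too similar standards leaves each local regression poorly determined, which is the insufficient-standards problem described above.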

Frequently Asked Questions (FAQs)

Q1: How often should I perform a full wavelength and photometric calibration on my handheld spectrometer? A: The frequency depends on your usage rate, operating environment, and regulatory requirements. For high-precision work or high-volume use, weekly or daily verification may be necessary. For general use, a quarterly schedule is a common baseline. Always consult the manufacturer's guide and consider your risk assessment [37].

Q2: What is the benefit of automating calibration sequences? A: Automation significantly improves efficiency, reduces human error, and ensures consistent, reliable calibration data. Automated systems can run multiple calibrations simultaneously and operate continuously, drastically reducing the time required and freeing up personnel for other tasks [41] [39].