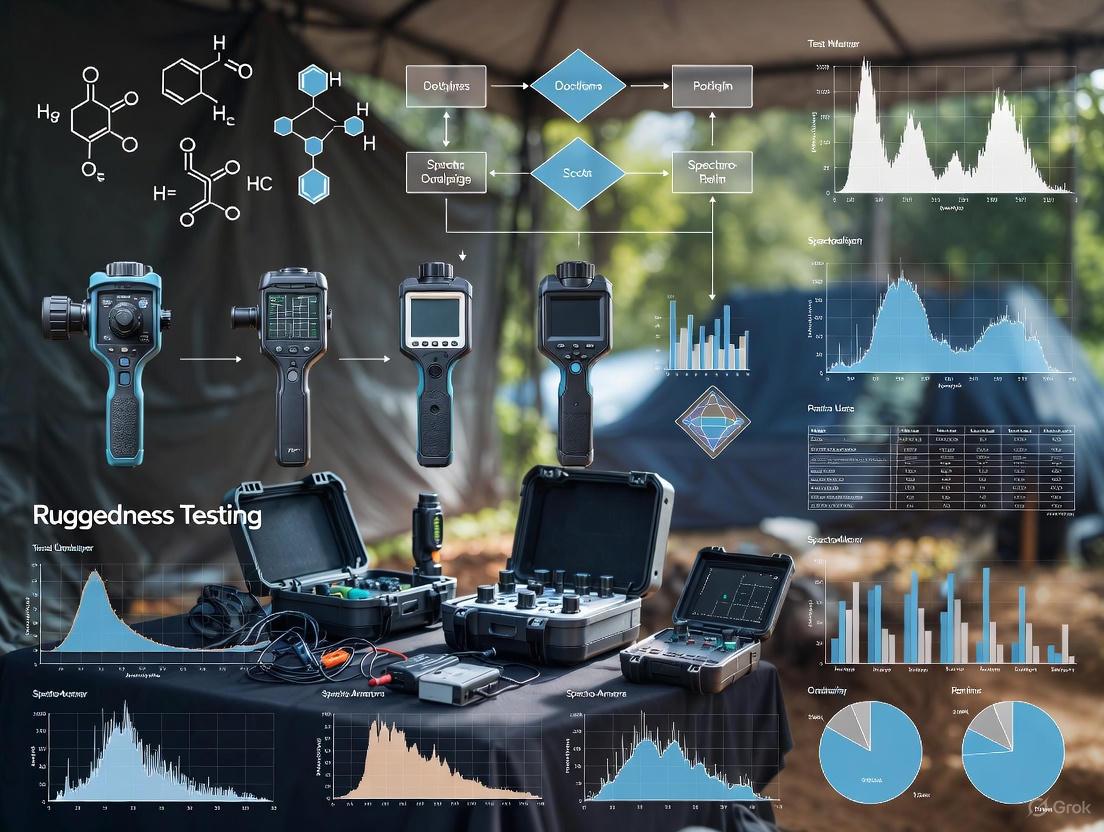

Beyond the Lab: Rigorous Ruggedness Testing of Handheld Spectrometers for Demanding Field Conditions

Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals to evaluate the ruggedness and reliability of handheld spectrometers in real-world field conditions. It explores the foundational standards defining ruggedness, details methodological approaches for application-specific testing, offers strategies for troubleshooting and optimizing device performance, and establishes protocols for the validation and comparative analysis of different spectrometer technologies. The insights herein are critical for ensuring data integrity, operational safety, and analytical confidence in biomedical and clinical research applications outside the controlled laboratory environment.

Defining Ruggedness: The Critical Standards and Specifications for Field-Ready Spectrometers

For researchers and scientists deploying analytical instruments in the field, understanding ruggedness standards is not merely an exercise in technical compliance—it is a critical component of experimental design and data integrity. Handheld spectrometers, used in applications from pharmaceutical raw material identification to environmental analysis, are increasingly deployed outside the controlled laboratory environment. These field conditions introduce variables such as rain, dust, shock, and extreme temperatures that can compromise instrument function and render data unreliable.

The IP (Ingress Protection) code defined in IEC 60529 and the U.S. Department of Defense standard MIL-STD-810H provide quantifiable, widely recognized frameworks for evaluating an instrument's ability to withstand these challenges. For drug development professionals conducting clinical trials or raw material verification in remote locations, specifying instruments that meet these standards helps ensure that results are reproducible, accurate, and defensible.

IP Ratings: Decoding Ingress Protection

The International Electrotechnical Commission (IEC) developed the IP Code to provide a clear, standardized classification for the level of protection an electrical enclosure offers against the intrusion of solids and liquids. Vague marketing terms like "water-resistant" or "durable" are replaced with a precise, two-digit code that allows for objective comparison [1] [2].

The IP Code Structure

The IP code follows a simple format: "IP" followed by two digits (e.g., IP67). The first digit indicates protection against solid particles, and the second digit denotes protection against moisture. If a product is not tested for one of these categories, the digit is replaced with an "X" (e.g., IP6X means dust-tight but not rated for moisture) [1].
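Because the code is a fixed pattern, it can be checked mechanically. The following is a minimal Python sketch; `parse_ip_code` and `IPCode` are hypothetical names, not part of any library, and the optional letters that may follow the two digits are deliberately not handled.

```python
import re
from typing import NamedTuple, Optional


class IPCode(NamedTuple):
    """Parsed IP code; None marks a category that was not tested ("X")."""
    solids: Optional[int]   # first digit, 0-6
    liquids: Optional[int]  # second digit, 0-8


# "IP", then the solids digit (0-6 or X), then the liquids digit (0-8 or X).
_IP_PATTERN = re.compile(r"IP([0-6X])([0-8X])", re.IGNORECASE)


def parse_ip_code(rating: str) -> IPCode:
    """Parse a rating such as 'IP67' or 'IP6X' into its two digits."""
    match = _IP_PATTERN.fullmatch(rating.strip())
    if match is None:
        raise ValueError(f"not a two-digit IP code: {rating!r}")

    def digit(ch: str) -> Optional[int]:
        return None if ch.upper() == "X" else int(ch)

    return IPCode(digit(match.group(1)), digit(match.group(2)))


if __name__ == "__main__":
    print(parse_ip_code("IP67"))  # IPCode(solids=6, liquids=7)
    print(parse_ip_code("IP6X"))  # IPCode(solids=6, liquids=None)
```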

The tables below provide a detailed breakdown of each digit's meaning, which is essential for assessing a spectrometer's suitability for specific field conditions, such as a windy, sandy desert or a humid, rainy climate.

Table: First Digit - Solid Particle Protection

| Level | Effective Against | Description |
|---|---|---|
| 0 | None | No protection. |
| 1 | >50 mm | Large body surfaces, like hands. |
| 2 | >12.5 mm | Fingers or similar objects. |
| 3 | >2.5 mm | Tools, thick wires. |
| 4 | >1 mm | Most wires, screws, large ants. |
| 5 | Dust Protected | Dust ingress is not entirely prevented, but it cannot enter in sufficient quantity to interfere with operation. |
| 6 | Dust Tight | No dust ingress; complete protection against contact. |

Table: Second Digit - Liquid Ingress Protection

| Level | Effective Against | Description |
|---|---|---|
| 0 | None | No protection. |
| 1 | Dripping water | Vertically falling drops (e.g., condensation). |
| 2 | Dripping water (tilted) | Vertically dripping water when the enclosure is tilted up to 15°. |
| 3 | Spraying water | Water sprayed at any angle up to 60° from vertical. |
| 4 | Splashing water | Water splashed from any direction. |
| 5 | Water jets | Water projected by a nozzle (6.3 mm) from any direction. |
| 6 | Powerful water jets | Water projected in powerful jets (12.5 mm nozzle) from any direction. |
| 7 | Immersion (1 m) | Ingress of water is not possible when immersed under defined conditions (up to 1 m for 30 min). |
| 8 | Immersion (>1 m) | Suitable for continuous immersion under conditions specified by the manufacturer. |

Application in Spectrometry

In handheld spectrometry, common ratings include IP65, which offers complete dust protection and resistance to low-pressure water jets, and IP67, which adds the ability to withstand temporary immersion. For instance, the Viavi MicroNIR OnSite-W spectrometer carries an IP67 rating, meaning it is dust-tight and can be submerged in water, making it viable for wet agricultural or pharmaceutical manufacturing environments [3]. Similarly, the Metrohm TacticID-1064 ST Raman spectrometer boasts an IP68 rating, offering a higher level of protection against prolonged immersion [4].
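Reading "IP67 adds immersion" as "IP67 covers everything IP65 does" needs one caveat. IEC 60529 treats second digits up to 6 as cumulative, but an enclosure marked only IPX7 or IPX8 is not considered suitable for water jets (IPX5 or IPX6) unless it is dual-coded, for example IP66/IP67. The hypothetical helper below encodes that rule when comparing a datasheet marking with a required rating. It conservatively assumes that an immersion-only marking says nothing about levels 1 to 6, and it treats IPX8 as covering IPX7, which is an interpretation rather than a quotation of the standard.

```python
import re
from typing import Optional, Tuple


def _digits(code: str) -> Tuple[Optional[int], Optional[int]]:
    """Split a single code such as 'IP67' into (solids, liquids); 'X' -> None."""
    m = re.fullmatch(r"IP([0-6X])([0-8X])", code.strip().upper())
    if m is None:
        raise ValueError(f"unsupported IP code: {code!r}")
    solids, liquids = (None if c == "X" else int(c) for c in m.groups())
    return solids, liquids


def _liquid_ok(marked: Optional[int], required: int) -> bool:
    if required == 0:
        return True
    if marked is None:            # "X": not tested, so it proves nothing
        return False
    if marked <= 6:               # 1-6 imply every lower numeral
        return required <= marked
    # 7 and 8 are immersion ratings: assumed (conservatively) not to cover
    # levels 1-6, while 8 is assumed to cover 7.
    return 7 <= required <= marked


def meets_requirement(marking: str, required: str) -> bool:
    """True if a marking such as 'IP67' or dual-coded 'IP66/IP67' covers the
    required rating; an 'X' in the requirement means no requirement."""
    req_solids, req_liquids = _digits(required)
    codes = [_digits(part) for part in marking.split("/")]
    solids_ok = not req_solids or any(
        s is not None and s >= req_solids for s, _ in codes)
    liquids_ok = req_liquids is None or any(
        _liquid_ok(liq, req_liquids) for _, liq in codes)
    return solids_ok and liquids_ok
```

Under these rules `meets_requirement("IP67", "IP65")` returns `False`, while `meets_requirement("IP66/IP67", "IP65")` returns `True`. Dual codes must be written in full (`IP66/IP67`, not `IP66/67`).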

MIL-STD-810H: The Standard for Environmental Durability

While IP ratings focus on ingress protection, MIL-STD-810H is a comprehensive U.S. military standard that covers a wide range of environmental conditions. It is crucial to understand that this standard is a set of test methods rather than a pass/fail certification. The philosophy behind MIL-STD-810H is to engineer a product to withstand the specific environmental stresses it will encounter throughout its life cycle, and then use the standard's methods to verify that durability [5].

Key Test Methods and Their Research Implications

The standard includes numerous laboratory test methods designed to simulate conditions such as low pressure at high altitude, extreme temperatures, shock, vibration, and exposure to fungi or salt fog [1] [2]. For a handheld spectrometer used in field research, several of these tests are particularly relevant.

Table: Key MIL-STD-810H Test Methods for Handheld Spectrometers

| Test Method | Simulated Condition | Impact on Field Research |
|---|---|---|
| Method 500.6 - Low Pressure | High altitude (up to 12,000 m) [5]. | Verifies functionality during aerial surveys or work at high elevations. |
| Method 501.7 - High Temperature | Operation in hot, arid environments [5]. | Checks for data loss or instrument shutdown in desert climates or hot industrial settings. |
| Method 502.7 - Low Temperature | Operation in cold arctic environments [5]. | Verifies reliability in cold storage or outdoor winter conditions. |
| Method 503.7 - Temperature Shock | Rapid temperature transitions [5]. | Validates performance when moving between climate-controlled labs and harsh field environments. |
| Method 506.6 - Rain | Rain, wind-blown rain, and dripping water [5]. | Confirms protection during unexpected weather, common in outdoor data collection. |
| Method 507.6 - Humidity | Humid, tropical conditions [5]. | Exposes moisture damage to internal electronics and optics (fungal growth is assessed by the standard's separate fungus method). |
| Method 510.7 - Sand & Dust | Dusty and sandy environments [5]. | Critical for archaeology, geology, and mining applications to avoid instrument failure. |
| Method 514.8 - Vibration | Vehicle-induced vibration during transport [5]. | Checks that optical alignment and component integrity survive use in a moving vehicle. |
| Method 516.8 - Shock (Procedure IV, Transit Drop) | Repeated drops, typically from 4 feet onto hard surfaces [1]. | Confirms the device survives accidental drops, a common occurrence during mobile use. |

Products like the Viavi MicroNIR OnSite-W and the Metrohm TacticID-1064 ST cite military test standards for shock, vibration, or drop resistance (detailed in the case studies below), giving researchers confidence in their mechanical robustness [3] [4].

Comparative Analysis of Spectrometer Ruggedness

The true value of these standards becomes apparent when comparing different handheld spectrometers. For example, a spectrometer rated IP65 is well suited to general outdoor use with exposure to rain and dust, whereas applications that involve submersion, such as marine research or decontamination by full immersion, call for IP67 (immersion up to 1 m for 30 minutes) or, for deeper or longer immersion, IP68.
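The comparison above amounts to a lookup from expected environmental threats to a minimum specification. A minimal sketch follows; the threat labels and the mapping are hypothetical, distilled from this article's examples (dust-tight for dust and sand, IPX5 jets for rain, IPX7 or IPX8 for submersion, and the MIL-STD-810H methods listed in the table above). Drop risk is mapped to Method 516.8 (Shock), whose transit-drop procedure is the drop test described in this article. Because an immersion rating does not imply a jet rating, a threat set containing both rain and submersion yields two codes that must both be met (a dual marking).

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical threat -> requirement tables (illustrative, not normative).
SOLIDS_LEVEL = {"dust": 6, "sand": 6}
LIQUIDS_LEVEL = {"rain": 5, "immersion": 7, "prolonged_immersion": 8}
MIL_STD_810H = {
    "altitude": "Method 500.6 - Low Pressure",
    "heat": "Method 501.7 - High Temperature",
    "cold": "Method 502.7 - Low Temperature",
    "thermal_transition": "Method 503.7 - Temperature Shock",
    "rain": "Method 506.6 - Rain",
    "sand": "Method 510.7 - Sand & Dust",
    "vehicle_vibration": "Method 514.8 - Vibration",
    "drop": "Method 516.8 - Shock (transit drop)",
}


@dataclass
class MinimumSpec:
    ip_codes: list[str] = field(default_factory=list)  # every code must be met
    test_methods: list[str] = field(default_factory=list)


def minimum_spec(threats: set[str]) -> MinimumSpec:
    """Translate expected field threats into a minimum ruggedness spec."""
    solids = max((SOLIDS_LEVEL[t] for t in threats if t in SOLIDS_LEVEL), default=None)
    liquids = [LIQUIDS_LEVEL[t] for t in threats if t in LIQUIDS_LEVEL]
    first = "X" if solids is None else str(solids)

    # Jets (<= 6) and immersion (7, 8) are separate requirements, because an
    # immersion rating does not imply a jet rating; keep the strictest of each.
    jets = max((lvl for lvl in liquids if lvl <= 6), default=None)
    immersion = max((lvl for lvl in liquids if lvl >= 7), default=None)
    ip_codes = [f"IP{first}{lvl}" for lvl in (jets, immersion) if lvl is not None]
    if not ip_codes and solids is not None:
        ip_codes = [f"IP{first}X"]

    methods = sorted(MIL_STD_810H[t] for t in threats if t in MIL_STD_810H)
    return MinimumSpec(ip_codes, methods)
```

For a coastal survey with dust, rain, occasional submersion, and drop risk, `minimum_spec({"dust", "rain", "immersion", "drop"})` yields the IP codes `["IP65", "IP67"]` plus the rain and shock test methods.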

The following diagram illustrates the logical decision-making process for selecting a spectrometer based on its ruggedness specifications, directly linking environmental threats to the required standards.

Case Studies: Rugged Spectrometers in Action

Viavi MicroNIR OnSite-W Spectrometer: This near-infrared instrument is a prime example of a device built for harsh environments. It holds an IP67 rating, meaning it is dust-tight and can be temporarily immersed in water. Furthermore, it carries a MIL-PRF-28800F Class 2 rating for shock and vibration, making it a robust tool for agricultural, pharmaceutical, and manufacturing field applications [3].

Metrohm TacticID-1064 ST Handheld Raman Spectrometer: Designed for first responders and HazMat teams, who work in some of the most demanding field environments, this device is rated IP68 for dust and water protection, the highest IP rating discussed in this article, and is MIL-STD-810H drop compliant, so it is built to survive the rigors of tactical use [4]. Its ability to identify chemicals through containers is a critical safety feature for drug development professionals handling unknown or hazardous substances.

Experimental Protocols for Ruggedness Testing

Understanding the laboratory protocols behind these standards is vital for appreciating the level of durability they represent. The tests are designed to be rigorous and repeatable.

Protocol: IP67 Testing

- Dust Ingress Test (First Digit: 6): The device is placed in a test chamber containing circulating fine dust. The test lasts up to 8 hours, and a vacuum may be applied [1]. Because level 6 means dust-tight, no dust may have entered the enclosure after testing; the weaker criterion, that dust must not enter in a quantity that interferes with operation, defines level 5 (dust protected).

- Water Immersion Test (Second Digit: 7): The device is immersed in water to a depth of 1 meter for 30 minutes. The manufacturer must specify whether this is done with the device in its normal operating position or in various orientations. After immersion, the enclosure must show no harmful ingress of water [1] [2].

Protocol: MIL-STD-810H Drop Test

- Procedure: The device is subjected to a series of 26 drops from a height of 4 feet (approx. 1.2 meters) onto a hard surface, such as plywood over concrete. The drops are performed at different angles and orientations to simulate real-world accidents. The device is inspected for damage after each drop [1].

- Pass/Fail Criterion: The device must remain fully functional with no critical damage, such as cracked casings or internal component failures, that would prevent its operation.
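The figure of 26 drops corresponds to one impact on each of the 6 faces, 12 edges, and 8 corners of a box-shaped unit. The short sketch below enumerates those orientations as direction vectors from the centre of an idealized rectangular enclosure, which can serve as a checklist when logging the inspection after each drop. `transit_drop_orientations` is a hypothetical helper; devices with rounded or irregular housings need orientations chosen by judgment.

```python
from __future__ import annotations

from itertools import product


def transit_drop_orientations() -> dict[str, list[tuple[int, int, int]]]:
    """Group the impact directions of a box-shaped enclosure.

    Each direction points from the box centre to the impact point; one
    non-zero component means a face, two an edge, three a corner.
    """
    kinds = {1: "faces", 2: "edges", 3: "corners"}
    groups: dict[str, list[tuple[int, int, int]]] = {k: [] for k in kinds.values()}
    for vec in product((-1, 0, 1), repeat=3):
        nonzero = sum(1 for c in vec if c != 0)
        if nonzero:  # skip (0, 0, 0), the centre itself
            groups[kinds[nonzero]].append(vec)
    return groups


if __name__ == "__main__":
    plan = transit_drop_orientations()
    for kind, directions in plan.items():
        print(kind, len(directions))  # faces 6, edges 12, corners 8
    print("total", sum(len(d) for d in plan.values()))  # total 26
```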

Protocol: MIL-STD-810H Temperature Shock

- Procedure: The device is placed in a test chamber where the temperature is cycled rapidly between extreme highs (e.g., +70°C) and lows (e.g., -40°C). One test loop involves 30 minutes at each extreme, and this loop may be repeated 32 times. The device may be tested both in storage and operating conditions [1] [5].

- Pass/Fail Criterion: The device must power on and operate normally after the test, with no physical damage or performance degradation caused by the thermal stress.
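The arithmetic of the example cycle is easy to misjudge when booking chamber time. The hypothetical sketch below builds the dwell schedule from the example values in the text (+70°C and -40°C, 30-minute dwells, 32 loops) and adds an assumed 1-minute transfer between chambers; the transfer allowance and the hot-first ordering are illustrative choices, not values taken from the standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Dwell:
    start_min: float
    end_min: float
    setpoint_c: float


def thermal_shock_schedule(cycles: int = 32, hot_c: float = 70.0,
                           cold_c: float = -40.0, dwell_min: float = 30.0,
                           transfer_min: float = 1.0) -> list[Dwell]:
    """Hot/cold dwell periods for a two-chamber thermal shock run.

    Defaults follow the example in the text; transfer_min (time to move the
    device between chambers) and the hot-first order are assumptions.
    """
    schedule: list[Dwell] = []
    t = 0.0
    for _ in range(cycles):
        for setpoint in (hot_c, cold_c):
            schedule.append(Dwell(t, t + dwell_min, setpoint))
            t += dwell_min + transfer_min  # next dwell starts after transfer
    return schedule


if __name__ == "__main__":
    plan = thermal_shock_schedule()
    at_temp = sum(d.end_min - d.start_min for d in plan)
    print(len(plan), at_temp, plan[-1].end_min)  # 64 1920.0 1983.0
```

With the defaults, the 64 dwells total 1,920 minutes (32 hours) at temperature, and the last dwell ends at minute 1,983 once the assumed transfers are included.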

Essential Research Reagent Solutions for Field Spectrometry

Deploying spectrometry in the field requires more than just a rugged instrument. It involves a suite of tools and reagents to ensure data quality and operational efficiency. The following table details key solutions for researchers in this domain.

Table: Research Reagent Solutions for Field Spectrometry

| Item / Solution | Function & Application |
|---|---|
| Validation Standards | Certified reference materials (e.g., polystyrene caps) used to validate spectrometer calibration and performance before and after field deployment, ensuring data continuity [4]. |
| Ultrapure Water Systems | Systems like the Milli-Q SQ2 series provide ultrapure water for preparing mobile phases and buffers, and for sample dilution and cleaning in the field or in mobile labs [6]. |
| Specialized Sampling Accessories | Probes (e.g., immersion, right-angle) and adaptors (e.g., for vials, large bottles) that extend the spectrometer's capability to analyze samples in confined spaces or through containers without direct contact [4]. |
| Customizable Spectral Libraries | Extensive and customizable software libraries of chemical signatures (e.g., for narcotics, explosives, raw materials) that are essential for the rapid and accurate identification of unknown substances on-site [4]. |
| Portable Power Solutions | Swappable, rechargeable Li-ion batteries and external power banks that provide extended operating time (from more than 4 hours to about 10 hours, depending on the instrument), which is critical for prolonged data collection away from power sources [3] [4]. |
| Device Management Software | Software suites like MicroNIR Pro or TOS that provide chemometric modeling, user management, and data transfer (via USB, Wi-Fi, Bluetooth), streamlining the field workflow [3] [4]. |

For the modern researcher, the choice of a field-deployable spectrometer must be guided by scientific need and operational reality. IP and MIL-STD-810H ratings provide the critical, objective data required to make an informed decision.

When specifying an instrument, researchers should:

- Identify Environmental Threats: Clearly define the environmental stresses (dust, water immersion, shock, temperature extremes) the instrument will face.

- Match Threats to Standards: Use the IP code and MIL-STD-810H test methods to create a minimum specification. For example, a spectrometer for coastal geology may require IP68 for submersion and the MIL-STD-810H salt fog method (Method 509) for corrosion resistance.

- Verify, Don't Assume: Look for products that state they are "tested compliant" to relevant standards, rather than those vaguely "designed to meet" them.

Ultimately, investing in a properly ruggedized spectrometer is an investment in data integrity. It ensures that the valuable data collected in the field is a true reflection of the sample, and not an artifact of a failed instrument.

For researchers and scientists conducting field analysis, the durability of a handheld spectrometer is as critical as its analytical performance. Unlike controlled laboratory environments, field conditions in drug development, environmental monitoring, and forensic investigation present significant challenges including dust, moisture, mechanical shocks, and temperature fluctuations. Ruggedized design ensures that instruments provide reliable data while withstanding the physical stresses encountered during mobile operation. This guide examines three core design elements—shock resistance, ingress protection, and thermal stability—through the lens of experimental data and standardized testing protocols, providing a framework for evaluating spectrometer durability in field research contexts.

The fundamental shift from benchtop to portable analysis necessitates this focus on physical robustness. As noted in evaluations of handheld spectrometers, their primary advantage lies in enabling "researchers to test materials in the field" without removing specimens from their natural context [7]. This capability, however, comes with the requirement that the instrumentation must maintain functionality and accuracy despite exposure to conditions that would rarely impact laboratory equipment.

Ingress Protection (IP) Standards and Testing

IP Rating Decoding and Specifications

Ingress Protection (IP) ratings, defined by the International Electrotechnical Commission standard IEC 60529, provide a standardized classification for an enclosure's resistance to solid particles and liquids [8]. The rating consists of the letters "IP" followed by two digits: the first digit indicates protection against solids (e.g., dust), while the second digit indicates protection against liquids (e.g., water) [8]. Higher numbers generally signify better protection, with one exception: an enclosure marked only IPX7 or IPX8 (immersion) is not thereby rated for water jets (IPX5 or IPX6), so products that must resist both carry a dual marking such as IP66/IP67.

For handheld spectrometers intended for field use, a minimum of IP54 is often recommended, though more rigorous ratings provide greater assurance in harsh environments. The table below details the significance of each digit in the IP code:

Table: Breakdown of Ingress Protection (IP) Rating Codes

| Digit | Level | Meaning | Testing Specification |
|---|---|---|---|
| First (solids) | 2 | Protection from solids larger than 12.5 mm | Tested with fingers or similar objects [8]. |
| First (solids) | 4 | Protection from solids larger than 1 mm | Tested with wires or strips [8]. |
| First (solids) | 5 | Dust protected | Ingress of dust is not prevented, but it must not enter in sufficient quantity to interfere with safe operation [8]. |
| First (solids) | 6 | Dust tight | No dust ingress after 8 hours under vacuum in a dust chamber [8]. |
| Second (liquids) | 4 | Protection from splashed water | 10-minute spray from low-pressure water jets (50-150 kPa) [8]. |
| Second (liquids) | 5 | Protection from water jets | 15-minute jet of water at a distance of 3 m (30 kPa pressure) [8]. |
| Second (liquids) | 6 | Protection from powerful water jets | 3-minute jet of water at a distance of 3 m (100 kPa pressure) [8]. |
| Second (liquids) | 7 | Protection from immersion | Immersion in water up to 1 meter for 30 minutes [8]. |
| Second (liquids) | 8 | Protection from continuous immersion | Immersion under conditions specified by the manufacturer, exceeding IPX7 [8]. |

Experimental Protocols for IP Validation

IP testing is a two-step process. The first step involves exposing the device to standardized challenges. For solids, the device is placed in a dust chamber where calibrated dust is suspended and covers the unit. For liquids, tests range from dripping water and spray to immersion, depending on the targeted rating [8]. The second step is a functional test, which can be a simple optical inspection for damage or a full performance verification to ensure all electronic and optical functions operate normally post-exposure [8].

Specific experimental setups for water ingress include:

- Drip Testing: The device is exposed to rainfall of 1-3 mm/minute for a specified duration [8].

- Spray Testing: Low-pressure water jets (50-150 kPa) spray the enclosure from all angles for 5-10 minutes [8].

- Immersion Testing: The device is submerged in water to a depth of 1 meter for 30 minutes for IPX7 certification [8].

Comparative Performance Data

Instrument manufacturers design products to meet specific IP grades based on intended use cases. For instance, Hach's DR6000 laboratory spectrophotometer has an IP20 rating, meaning it is protected against solid objects larger than 12.5 mm but has no specific protection against water, making it unsuitable for field use [9]. In contrast, the company's DR1900 portable spectrophotometer is marketed as "waterproof" and built for "demanding, rugged conditions," implying a significantly higher, though unspecified, IP rating suitable for field deployment [9].

Figure 1: Ingress Protection (IP) Testing Workflow. The process involves standardized exposure to solids and liquids, followed by a functional performance check.

Thermal Stability Characterization

Fundamentals of Thermal Stability

Thermal stability in spectrometers refers to an instrument's ability to maintain accurate calibration and component integrity across its operating temperature range. For the device itself, this involves the resilience of mechanical structures, electronics, and optical components to thermal expansion and stress. The term also has a distinct, application-side meaning: the thermal stability of the samples being analyzed, a critical parameter in biologics development because it directly affects therapeutic efficacy [10].

Benchtop instruments such as the Prometheus Panta characterize the thermal stability of protein samples by monitoring several key parameters during a controlled temperature ramp:

- Melting Temperature (Tm): The temperature at which 50% of a protein is unfolded [10].

- Onset of Unfolding (Tonset): The temperature at which unfolding begins, with higher values indicating greater thermostability [10].

- Onset of Aggregation (Turbidity): The temperature at which large, amorphous aggregates begin to form, which can compromise drug safety and efficacy [10].
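The 50% definition of Tm follows from the simplest two-state picture of unfolding, in which each protein molecule is either folded (F) or unfolded (U). The derivation below assumes that model and, for simplicity, a temperature-independent unfolding enthalpy ΔH (no heat-capacity change); proteins that unfold through intermediates deviate from it.

$$
K(T) = \frac{[U]}{[F]} = \exp\!\left(-\frac{\Delta G(T)}{RT}\right),
\qquad
\Delta G(T) \approx \Delta H\left(1 - \frac{T}{T_m}\right)
$$

$$
f_U(T) = \frac{K(T)}{1 + K(T)},
\qquad
\Delta G(T_m) = 0 \;\Rightarrow\; K(T_m) = 1 \;\Rightarrow\; f_U(T_m) = \tfrac{1}{2}
$$

Here f_U is the fraction unfolded, R the gas constant, and T the absolute temperature.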

Experimental Protocols for Thermal Analysis

Thermal stability assessment involves both the characterization of the spectrometer's operational limits and the analysis of samples it is designed to study.

For evaluating the device's own thermal performance, tests are conducted in environmental chambers where temperature is cycled between specified extremes while the instrument's analytical performance is monitored against known standards. Key metrics include wavelength accuracy, photometric stability, and signal-to-noise ratio.
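One way to turn "monitored against known standards" into numbers is to record the position of a reference band at each chamber setpoint and fit its error against temperature. The sketch below is a minimal, hypothetical example: it reports the worst absolute error and a least-squares drift slope (position units per °C) in whatever units the certified value uses (nm or cm⁻¹). Any acceptance limit would have to come from the instrument's own specification.

```python
from __future__ import annotations

from statistics import fmean


def wavelength_drift(temps_c: list[float], measured: list[float],
                     certified: float) -> tuple[float, float]:
    """Return (worst |error|, drift slope per degC) for a reference band.

    measured and certified share units (nm or cm^-1). The slope is an
    ordinary least-squares fit of the error against chamber temperature.
    """
    if len(temps_c) != len(measured) or len(temps_c) < 2:
        raise ValueError("need at least two paired readings")
    errors = [m - certified for m in measured]
    t_bar, e_bar = fmean(temps_c), fmean(errors)
    sxx = sum((t - t_bar) ** 2 for t in temps_c)
    if sxx == 0:
        raise ValueError("readings must span more than one temperature")
    sxy = sum((t - t_bar) * (e - e_bar) for t, e in zip(temps_c, errors))
    return max(abs(e) for e in errors), sxy / sxx
```

A slope near zero across the whole operating range indicates good thermal stability; a large worst-case error at a single setpoint points to a temperature-specific problem rather than steady drift.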

For sample analysis, a standard protocol using a tool like the Prometheus Panta involves:

- Sample Loading: Loading a small volume (e.g., 10 µL) of the biologic sample into a capillary tube [10].

- Thermal Ramp: Subjecting the sample to a controlled, continuous temperature increase [10].

- Multi-Parameter Monitoring: Simultaneously collecting data on thermal unfolding via intrinsic fluorescence (nanoDSF), particle size via Dynamic Light Scattering (DLS), and aggregation via backreflection and Static Light Scattering (SLS) throughout the entire thermal ramp [10].

- Data Analysis: Determining key stability parameters (Tm, Tonset, Tsize, Tturbidity) from the collected data to compare the relative stability of different candidates or formulations [10].
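The fitting procedure used by the instrument's own software is not described in the source. As a generic, hypothetical illustration of the Data Analysis step, the sketch below estimates Tm as the temperature of steepest change in an unfolding curve (its inflection point, a common convention for nanoDSF ratio curves) and Tonset with a simple heuristic: the first temperature at which the slope reaches 10% of its maximum. The threshold is an assumption rather than a standard definition, and the code does no smoothing, so noisy curves should be smoothed first.

```python
from __future__ import annotations


def melting_parameters(temps_c: list[float], signal: list[float],
                       onset_fraction: float = 0.1) -> tuple[float, float]:
    """Estimate (Tm, Tonset) from a sampled thermal unfolding curve.

    Tm: temperature of the steepest slope (the curve's inflection point).
    Tonset: first temperature whose slope reaches onset_fraction of the
    steepest slope (a heuristic chosen for illustration).
    """
    if len(temps_c) != len(signal) or len(temps_c) < 3:
        raise ValueError("need at least three paired points")
    # Central-difference derivative magnitude, defined at interior points only.
    slopes = [abs((signal[i + 1] - signal[i - 1]) / (temps_c[i + 1] - temps_c[i - 1]))
              for i in range(1, len(temps_c) - 1)]
    interior = temps_c[1:-1]
    peak = max(range(len(slopes)), key=slopes.__getitem__)
    threshold = onset_fraction * slopes[peak]
    onset = next(i for i, s in enumerate(slopes) if s >= threshold)
    return interior[peak], interior[onset]
```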

Table: Key Thermal Stability Parameters in Biologics Development

| Parameter | Symbol | Description | Significance in Development |
|---|---|---|---|
| Melting Temperature | Tm | Temperature at which 50% of the protein is unfolded [10]. | Higher Tm generally indicates a more thermostable candidate, preferred for development [10]. |
| Unfolding Onset | Tonset | Temperature at which unfolding begins [10]. | Candidates with Tonset close to Tm are more uniformly folded and stable [10]. |
| Aggregation Onset | Tturbidity | Onset temperature for large aggregate formation [10]. | High Tturbidity or no aggregation is desired for safety and efficacy [10]. |
| Size Increase Onset | Tsize | Temperature at which average particle size begins to increase [10]. | Indicates unfolding and colloidal stability; higher Tsize indicates greater stability [10]. |

Case Study: Predictive Thermal Hazard Analysis

Thermal stability kinetics can be used to predict large-scale hazards. In a study on HTPE solid propellants, thermal decomposition kinetic parameters were calculated from small-scale Differential Scanning Calorimetry (DSC) data. These parameters were then used in simulation software to predict behavior at larger scales. The model predicted a self-accelerating decomposition temperature of 94.2°C for a 50 kg-scale propellant, a deviation of less than 2% from experimental results [11]. This highlights how controlled, small-scale thermal stability testing can reliably predict performance and hazards in real-world, large-scale scenarios.
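The source does not say which kinetic method the HTPE study used. One widely used way to extract kinetic parameters from small-scale DSC runs is the Kissinger method, which relates the exotherm peak temperature Tp measured at several heating rates β through ln(β/Tp²) = ln(A·R/Ea) - Ea/(R·Tp). The sketch below fits that line by least squares to recover the activation energy Ea and pre-exponential factor A. It covers only the kinetics step; predicting a self-accelerating decomposition temperature additionally requires a heat-transfer model of the package, which is not shown.

```python
from __future__ import annotations

import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def kissinger(beta_k_per_min: list[float], peak_temps_k: list[float]) -> tuple[float, float]:
    """Fit the Kissinger plot and return (Ea in J/mol, A in 1/min).

    y = ln(beta / Tp^2) is regressed on x = 1/Tp; slope = -Ea/R and
    intercept = ln(A*R/Ea).
    """
    if len(beta_k_per_min) != len(peak_temps_k) or len(peak_temps_k) < 2:
        raise ValueError("need at least two (heating rate, peak) pairs")
    xs = [1.0 / tp for tp in peak_temps_k]
    ys = [math.log(b / tp ** 2) for b, tp in zip(beta_k_per_min, peak_temps_k)]
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    ea = -slope * R
    return ea, math.exp(intercept) * ea / R
```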

Figure 2: Multi-Parameter Thermal Stability Workflow. A single sample provides conformational and colloidal stability data through simultaneous measurement techniques.

Shock Resistance and Mechanical Durability

Defining Shock Resistance

Shock resistance refers to a spectrometer's ability to withstand sudden impacts, drops, and vibrations without suffering functional degradation or physical damage. Apart from the MIL-STD-810H drop test discussed earlier, the sources cited here do not detail shock-testing protocols specific to handheld spectrometers; the general principle is to design instruments with ruggedized enclosures and internal mounting systems that protect sensitive optical and electronic components from mechanical shock.

The importance of this characteristic is implicitly acknowledged in product descriptions, such as the DR1900 portable spectrophotometer which is noted for its "rugged, compact" design built for "demanding, rugged conditions" [9]. This suggests a design philosophy that prioritizes physical robustness alongside analytical performance.

Testing Methodologies

Standard shock and vibration tests often reference military standards (e.g., MIL-STD-810), which provide rigorous procedures for evaluating equipment durability. Typical protocols, adapted here to spectrometers rather than taken from a spectrometer-specific standard, include:

- Drop Testing: Repeatedly dropping the unit from a specified height (e.g., 1 meter) onto a hard surface like concrete, followed by functional verification.

- Vibration Testing: Subjecting the device to defined vibration profiles that simulate transportation or use in vehicles and machinery.

- Operational Testing: Ensuring the instrument maintains calibration and performance before and after mechanical shock events.
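The operational check reduces to comparing reference-standard readings taken before and after each shock or drop event. A minimal sketch with hypothetical metric names and user-supplied tolerances follows; it applies a symmetric absolute tolerance to every metric, whereas in practice a one-sided limit (for example, on loss of signal-to-noise) may be more appropriate.

```python
from __future__ import annotations


def post_shock_check(before: dict[str, float], after: dict[str, float],
                     tolerance: dict[str, float]) -> tuple[bool, dict[str, float]]:
    """Compare reference-standard readings taken before and after a shock event.

    Returns (overall pass flag, absolute change per metric). Every metric named
    in tolerance must appear in both readings (a KeyError is raised otherwise)
    and passes when |after - before| <= its tolerance.
    """
    changes = {name: abs(after[name] - before[name]) for name in tolerance}
    passed = all(changes[name] <= limit for name, limit in tolerance.items())
    return passed, changes


if __name__ == "__main__":
    ok, changes = post_shock_check(
        before={"peak_position": 1001.4, "snr": 250.0},  # hypothetical readings
        after={"peak_position": 1001.9, "snr": 240.0},
        tolerance={"peak_position": 1.0, "snr": 25.0},   # hypothetical limits
    )
    print(ok, changes)
```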

The Researcher's Toolkit for Durability Assessment

Evaluating a spectrometer's durability requires an understanding of both the instrument's specifications and the standardized tests that validate them. The following table summarizes key resources and their functions in this assessment process.

Table: Essential Resources for Durability Assessment and Testing

| Resource | Function | Relevance to Ruggedness Testing |
|---|---|---|
| IEC 60529 Standard | Defines the IP Code system for ingress protection ratings against solids and liquids [8]. | The definitive reference for interpreting and specifying IP ratings on instrument datasheets. |
| MIL-STD-810 | A U.S. military standard that outlines environmental test methods for equipment durability, including shock and vibration [5]. | Provides proven methodologies for validating mechanical robustness. |
| Prometheus Panta | An instrument for multi-parameter biophysical characterization [10]. | Used for application-specific thermal stability analysis of biologic samples (e.g., Tm, Tsize, Tturbidity). |
| DSC (Differential Scanning Calorimetry) | A thermal analysis technique that measures heat flow associated with material transitions as a function of temperature [11]. | Used for small-scale determination of thermal decomposition kinetics and stability parameters. |
| Environmental Test Chamber | An enclosure that allows precise control of temperature and humidity. | Used for validating the operational temperature range specified for the spectrometer. |

The durability of handheld spectrometers is a multi-faceted attribute underpinned by three core design elements: Ingress Protection, Thermal Stability, and Shock Resistance. Through standardized testing protocols like IP codes (IEC 60529), thermal ramping with multi-parameter detection, and mechanical stress tests, manufacturers can quantitatively validate the ruggedness of their instruments. For researchers and drug development professionals, understanding these specifications and the methodologies behind them is crucial for selecting field-deployable equipment that will deliver reliable data in demanding environments, from the manufacturing floor to remote outdoor locations. As portable spectroscopy continues to evolve, the integration of these durability features will remain a critical differentiator and a necessary component of field-ready analytical tools.

For researchers conducting field analysis—whether in pharmaceutical development, environmental monitoring, or material science—the ruggedness of a handheld spectrometer is paramount. Ruggedness is traditionally associated with resistance to physical shock, dust, and water. However, battery life and intelligent power management are equally critical components of operational ruggedness. An instrument cannot be considered truly field-ready if it cannot deliver uninterrupted, accurate data through a full day of on-site measurements without requiring grid power.

This guide objectively compares the battery technologies and power management strategies that underpin operational longevity in handheld spectrometers. By presenting performance data and experimental protocols, we aim to provide researchers with a framework for evaluating these essential characteristics, ensuring that their field data collection is both efficient and reliable.

Comparative Analysis of Spectrometer Battery Technologies

The core of a spectrometer's field longevity is its battery. The choice of battery chemistry involves a trade-off between runtime, weight, lifespan, and performance stability. The following table summarizes the key characteristics of the predominant battery types used in professional handheld instruments.

Table 1: Comparative Analysis of Battery Chemistries for Handheld Spectrometers

| Battery Characteristic | Lithium-ion (Li-ion) | Nickel-Metal Hydride (NiMH) | Alkaline |
|---|---|---|---|
| Energy Density | High | Moderate | Low |
| Typical Runtime | Long | Moderate | Short |
| Charge Cycles | 500 - 1000 | 300 - 500 | Non-rechargeable |
| Self-Discharge Rate | Low (~2% per month) | High (~30% per month) | Very low (shelf life of several years) |
| Memory Effect | No | Minor (voltage depression) | Not applicable |
| Impact on Measurement Stability | Provides stable voltage for consistent accuracy [12] | Voltage drop can affect component performance [12] | Significant voltage decay risks inaccurate readings [12] |
| Operational Temperature Range | Wide | Moderate | Moderate |
| Environmental Impact | Recyclable | Recyclable | Disposable |
| Key Advantage | Longest runtime, stable power | Lower initial cost, recyclable | Wide availability, no maintenance |
| Key Disadvantage | Higher initial cost, safety concerns if damaged [12] | Requires periodic full discharge, shorter lifespan [12] | High long-term cost, environmental waste [12] |

Analysis for Research Applications: For the demands of field research, Lithium-ion batteries are the industry standard. Their high energy density and stable discharge profile are crucial for maintaining the consistent power that spectrometer optics and detectors require for accurate measurements [12]. While NiMH batteries offer a budget-friendly and recyclable alternative, their higher self-discharge rate makes them less reliable if the instrument is stored between field campaigns without a recharge. Alkaline batteries are suitable only for emergency use, as their voltage drops continuously during use, which can introduce drift and inaccuracy in spectral measurements [12].
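The storage argument can be made concrete with the approximate rates in Table 1. The hypothetical helper below assumes a constant monthly loss compounded over the storage period. This is a simplification: real cells often lose charge faster just after charging, and self-discharge rises with temperature.

```python
def remaining_charge(monthly_self_discharge: float, months: float,
                     start_fraction: float = 1.0) -> float:
    """Fraction of full charge left after storage.

    Assumes a constant monthly loss compounded over the storage period; real
    cells often lose charge faster right after charging and faster when warm.
    """
    if not 0.0 <= monthly_self_discharge < 1.0:
        raise ValueError("monthly rate must be in [0, 1)")
    return start_fraction * (1.0 - monthly_self_discharge) ** months


if __name__ == "__main__":
    for chemistry, rate in (("Li-ion", 0.02), ("NiMH", 0.30)):
        print(chemistry, f"{remaining_charge(rate, 3):.0%}")  # Li-ion 94%, NiMH 34%
```

Under this model, three months on the shelf leaves about 94% of a Li-ion charge (0.98³ ≈ 0.941) but only about 34% of a NiMH charge (0.70³ = 0.343).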

Experimental Protocols for Evaluating Power Management

To objectively assess the ruggedness of a spectrometer's power system, researchers can adopt or specify the following experimental protocols. These tests move beyond manufacturer specifications to evaluate performance under realistic and demanding conditions.

Protocol 1: Continuous Operational Runtime Test

This test establishes the baseline battery life under a simulated typical workflow.

- Objective: To determine the maximum continuous operational time on a single full charge.

- Methodology:

- Fully charge the spectrometer battery.

- Place the instrument in an environmental chamber set to a standard room temperature (e.g., 23°C).

- Program an automated stage to present a standard reference material at regular intervals.

- Configure the spectrometer to execute a predefined measurement cycle (e.g., 30-second integration time) continuously.

- Operate the instrument until it automatically powers down due to low battery.

- Record the total number of measurements and total operational time.

- Key Metrics: Total measurement count; Total runtime (hours:minutes).
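Both metrics can be computed directly from the instrument's measurement log. A minimal sketch, assuming the log provides one timestamp per measurement plus the start and automatic-shutdown times (the function name and log format are hypothetical):

```python
from __future__ import annotations

from datetime import datetime


def runtime_summary(measurement_times: list[datetime], start: datetime,
                    shutdown: datetime) -> tuple[int, str]:
    """Return (measurement count, runtime as 'H:MM') for one full discharge."""
    if shutdown < start:
        raise ValueError("shutdown precedes start")
    # Count only measurements logged between power-on and low-battery shutdown.
    count = sum(1 for t in measurement_times if start <= t <= shutdown)
    minutes = int((shutdown - start).total_seconds() // 60)
    return count, f"{minutes // 60}:{minutes % 60:02d}"
```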

Protocol 2: Temperature-Dependent Performance & Drainage Test

This test evaluates the impact of environmental extremes, a critical aspect of ruggedness.

- Objective: To quantify the reduction in battery runtime and the stability of analytical performance at high and low temperatures.

- Methodology:
  - Conduct the Continuous Operational Runtime Test at room temperature (23°C) to establish a baseline.
  - Repeat the test in a controlled environmental chamber set to a high field-operational temperature (e.g., 40°C).
  - Repeat the test at a low field-operational temperature (e.g., 5°C).
  - At the beginning, midpoint, and end of each test cycle, measure a certified standard and record the signal-to-noise ratio and the accuracy of the quantitative result.

- Key Metrics: Percentage reduction in runtime vs. baseline; drift in the analytical result for the certified standard over the discharge cycle.
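The two Protocol 2 metrics reduce to simple percentage calculations. This is a minimal sketch; the function names, the checkpoint labels, and the example numbers (a 300-minute baseline, a 240-minute run at 5°C, and a standard certified at 50.0 units) are hypothetical.

```python
def runtime_reduction_pct(baseline_min: float, test_min: float) -> float:
    """Percentage reduction in runtime relative to the 23 °C baseline run."""
    return 100.0 * (baseline_min - test_min) / baseline_min


def checkpoint_drift_pct(results: dict[str, float], certified: float) -> dict[str, float]:
    """Deviation of the certified standard's result from its certified value
    at each checkpoint, expressed in percent of the certified value."""
    return {stage: 100.0 * (value - certified) / certified
            for stage, value in results.items()}


# Hypothetical numbers for a 5 °C run.
print(runtime_reduction_pct(300.0, 240.0))  # 20.0
drift = checkpoint_drift_pct({"start": 50.0, "mid": 50.5, "end": 51.0}, 50.0)
print(drift)  # {'start': 0.0, 'mid': 1.0, 'end': 2.0}
print(max(abs(d) for d in drift.values()))  # worst-case drift: 2.0
```

Reporting the worst-case absolute drift alongside the per-checkpoint values makes it easy to see whether accuracy degrades steadily as the battery discharges or only near the end of the cycle.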

Protocol 3: AI-Optimized Charging Strategy Validation

Modern power management can leverage artificial intelligence (AI) to extend battery health and efficiency. This protocol validates such advanced systems.

- Objective: To verify the performance of AI-driven charging strategies in improving efficiency and preserving long-term battery health.

- Methodology:
  - Use a controlled DC system to simulate battery charging and discharging loads. The cited study simulated substation-level loads [13], which are far larger than a handheld spectrometer's power draw, so the load profile should reflect the instrument's actual consumption.
  - Apply a traditional charging method (e.g., constant-current/constant-voltage, CC-CV) to a set of battery cells and record the efficiency and temperature rise.
  - Apply an AI-optimized charging strategy (e.g., using a model like DSAN-N-BEATS for predictive management) to another set of identical cells [13].
  - The AI model uses time-series forecasting to optimize the charging current and voltage in real time, reducing stress on the battery chemistry [13].
  - Measure the charging efficiency, time to full charge, and peak cell temperature for both methods.
  - Run the cells through repeated charge/discharge cycles to model long-term health degradation.

- Key Metrics: Charging efficiency (%); charge cycle time (minutes); peak cell temperature (°C); capacity retention after 500 cycles (%).
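A simple record per trial keeps the two charging methods directly comparable. The protocol does not say whether "charging efficiency" means coulombic (Ah out / Ah in) or energy (Wh out / Wh in) efficiency; this sketch assumes round-trip energy efficiency. The class, field names, and example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChargeTrial:
    method: str                 # label, e.g. "CC-CV" or "AI-optimized"
    charge_energy_wh: float     # energy drawn from the supply while charging
    discharge_energy_wh: float  # energy delivered on the following discharge
    charge_time_min: float      # time to full charge
    peak_cell_temp_c: float     # highest cell temperature during charging
    initial_capacity_ah: float  # capacity before the cycling block
    final_capacity_ah: float    # capacity after the cycling block (e.g. 500 cycles)

    def energy_efficiency_pct(self) -> float:
        # Round-trip energy efficiency (assumed definition): Wh out / Wh in.
        return 100.0 * self.discharge_energy_wh / self.charge_energy_wh

    def capacity_retention_pct(self) -> float:
        return 100.0 * self.final_capacity_ah / self.initial_capacity_ah


def compare(trials: list[ChargeTrial]) -> dict[str, dict[str, float]]:
    """Tabulate the Protocol 3 key metrics for each charging method."""
    return {
        t.method: {
            "efficiency_pct": t.energy_efficiency_pct(),
            "charge_time_min": t.charge_time_min,
            "peak_temp_c": t.peak_cell_temp_c,
            "retention_pct": t.capacity_retention_pct(),
        }
        for t in trials
    }


# Hypothetical CC-CV baseline; an AI-optimized trial would be added alongside it.
baseline = ChargeTrial("CC-CV", 12.0, 10.8, 95.0, 38.5, 2.60, 2.21)
print(compare([baseline]))
```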

The workflow for designing and executing these tests can be summarized as follows:

Advanced Power Management: From Hardware to AI

Beyond battery chemistry, advanced power management systems are crucial for maximizing field operational time. These systems function on multiple levels:

Hardware-Level Optimizations: Modern portable spectrometers, like the Bruker MOBILE-IR II, incorporate high-capacity internal batteries designed for all-day operation and components like thermoelectrically cooled (TE) detectors that offer a favorable balance of performance and power consumption compared to older, more energy-intensive detector technologies [14].

Algorithmic and AI-Driven Management: Research demonstrates the power of AI for predictive power management. For instance:

- The DSAN-N-BEATS model, which combines a self-attention network with a time-series forecasting model, has been shown to improve battery charging and discharging efficiency by 20% compared to traditional methods. This model accurately captures complex battery state data to optimize strategies [13].

- The BatLiNet framework uses deep learning to predict battery lifetime across diverse ageing conditions. Through "inter-cell learning," it compares pairs of battery cells to discern lifetime differences, which improves prediction stability and enables proactive management of battery health in field equipment [15].

The Researcher's Toolkit for Power Analysis

Table 2: Essential Research Reagent Solutions for Power Testing

| Item | Function in Experiment |
|---|---|
| Certified Reference Materials (CRMs) | Provides a known spectral signature to validate analytical performance stability throughout the battery discharge cycle. |
| Environmental Chamber | Creates controlled temperature and humidity conditions to test battery and instrument performance under thermal stress. |
| Data Logging Multimeter / Power Analyzer | Precisely monitors voltage, current, and power draw from the battery in real-time during testing. |
| Battery Cycle Tester | Automates the process of charging and discharging battery cells to measure lifespan and capacity degradation over time. |
| AI-Based Predictive Models (e.g., DSAN-N-BEATS) | Provides a software framework for optimizing charging strategies and predicting long-term battery health [13]. |


Battery life is not merely a convenience feature but a foundational element of a truly rugged field instrument. As this guide illustrates, a combination of high-quality lithium-ion chemistry and intelligent, AI-driven power management provides the most robust solution for ensuring operational longevity.

Future developments in spectrometer power management will likely focus on the deeper integration of AI, not just for charging but for predictive shutdown of non-essential components and task-based power allocation. Furthermore, the emergence of chip-scale spectrometers based on photonic integrated circuits promises a significant reduction in the core power requirements of the analytical engine itself [16] [17]. For the field researcher, these advancements will translate to instruments that are not only more resilient but also capable of longer, more ambitious data collection campaigns in the world's most remote and challenging environments.

The field of spectroscopy is undergoing a transformative shift toward miniaturization, driven by the need for on-site analytical capabilities across diverse fields including pharmaceutical development, environmental monitoring, and forensic science. The global miniaturized spectrometer market, valued at $1.04 billion in 2024, is projected to grow at a robust compound annual growth rate of 12.8%, reaching $1.91 billion by 2029 [18]. This growth trajectory underscores the increasing reliance on portable analytical technologies. For researchers and drug development professionals, this trend presents a critical engineering challenge: achieving portability without compromising the instrument ruggedness and analytical performance required for reliable field-based measurements. Modern handheld spectrometers incorporate advanced technologies including micro-electro-mechanical systems, smartphone integration, and AI-enhanced spectral data interpretation to deliver laboratory-grade capabilities in field-deployable packages [18]. This comparison guide examines how miniaturization impacts spectrometer durability and performance, providing evidence-based insights for scientific professionals making procurement and implementation decisions.
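The market figures above can be cross-checked with the standard compound-growth relation $V_n = V_0(1 + r)^n$. Compounding $1.04 billion at 12.8% for the five years from 2024 to 2029 gives about $1.90 billion, which matches the reported $1.91 billion within rounding; equivalently, the implied rate from the two endpoints is about 12.9%. The helper names are illustrative.

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# Values in $ billion, 2024 -> 2029.
print(f"{project_value(1.04, 0.128, 5):.2f}")  # 1.90
print(f"{implied_cagr(1.04, 1.91, 5):.4f}")    # 0.1293
```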

Performance Comparison: Miniaturized vs. Conventional Systems

Quantitative Performance Metrics

Modern miniaturized spectrometers demonstrate performance characteristics that increasingly rival their benchtop counterparts for qualitative identification applications, though trade-offs remain in resolution and quantitative precision.

Table 1: Performance Comparison Between Spectrometer Formats

| Performance Characteristic | Handheld/Ruggedized Systems | Benchtop Laboratory Systems |
|---|---|---|
| Portability | <2 kg; battery operated [19] | 10-50 kg; requires AC power [19] |
| Analysis Speed | 10-30 seconds per sample [19] | 1-5 minutes per sample [19] |
| Spectral Resolution | 8-19 cm⁻¹ (Raman) [19] | 0.3-4 cm⁻¹ (Raman) [19] |
| Sample Preparation | None required; measures through packaging [19] | Often requires mounting or extraction [19] |
| Typical Identification Accuracy | >95% for library-matched materials [19] | >99% for library-matched materials [19] |
| Environmental Operating Range | -10°C to 50°C; IP65+ rated [19] | Laboratory conditions (typically 15°C-30°C) [6] |

Technology-Specific Performance Trade-offs

Different spectroscopic technologies exhibit varying degrees of performance retention when miniaturized. A 2018 study directly compared portable and bench-top Fourier-Transform Infrared (FTIR) spectrometers for soil analysis, finding that measurements and multivariate calibrations with the handheld device were as good as or slightly better than a benchtop instrument equipped with a DRIFT accessory, though not as accurate as directional hemispherical reflectance data collected with an integrating sphere [20]. For Raman spectroscopy, portable systems have demonstrated particular effectiveness in pharmaceutical quality control and law enforcement applications, with identification accuracy exceeding 95% for materials within their reference libraries [19].

Table 2: Application-Specific Performance of Miniaturized Spectrometers

| Application Domain | Technology Used | Performance Level | Key Limiting Factors |
|---|---|---|---|
| Pharmaceutical Raw Material ID | Handheld Raman | >95% accuracy vs. reference methods [19] | Library completeness, fluorescence [19] |
| Soil Analysis | Portable FTIR | Equivalent to benchtop DRIFT [20] | Soil moisture, particle size [20] |
| Narcotics Identification | Handheld Raman | Superior to color tests; minimal false positives [21] | Fluorescence, colored samples [21] [19] |
| Food Safety Screening | Portable NIR | Rapid contaminant detection [22] | Sample homogeneity, concentration levels [22] |

Experimental Protocols for Ruggedness Assessment

Standardized Ruggedness Testing Methodology

Evaluating the field-readiness of miniaturized spectrometers requires systematic testing beyond conventional laboratory calibration. The following experimental protocol provides a framework for assessing how portability requirements impact analytical performance under realistic field conditions.

Detailed Testing Protocols

Mechanical Durability Assessment

Drop Testing Protocol: Following MIL-STD-810G specifications [19], devices undergo repeated 1.5-meter drops onto concrete surfaces from various orientations. Post-test evaluation includes:

- Visual inspection for housing damage, screen integrity, and component displacement

- Functional testing of all buttons, interfaces, and connectivity features

- Performance validation using NIST-traceable reference standards to quantify any spectral accuracy degradation
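One systematic way to cover "various orientations" is to drop the device on every face, edge, and corner of its bounding box: 6 + 12 + 8 = 26 impacts. This mirrors the 26-drop face/edge/corner sequence commonly used in MIL-STD-810 transit-drop testing, but the exact sequence and drop height should be checked against the method revision being followed. The function names below are hypothetical, and a real device may need orientations adapted to its shape.

```python
from collections import Counter
from itertools import product


def drop_orientations() -> list[tuple[int, int, int]]:
    """Impact directions for a box-shaped device: 6 faces, 12 edges, 8 corners.

    Each tuple points from the device centre toward the contact feature, with
    components in {-1, 0, 1} along the device's x, y and z axes.
    """
    directions = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    # Order the sequence faces first, then edges, then corners.
    return sorted(directions, key=lambda d: sum(abs(c) for c in d))


def feature(direction: tuple[int, int, int]) -> str:
    """Name the contact feature from the number of non-zero components."""
    return {1: "face", 2: "edge", 3: "corner"}[sum(abs(c) for c in direction)]


plan = drop_orientations()
print(len(plan), dict(Counter(feature(d) for d in plan)))
# 26 {'face': 6, 'edge': 12, 'corner': 8}
```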

Vibration Testing Protocol: To simulate transport conditions, devices undergo 2-hour vibration cycles at 5-500 Hz. Performance is monitored continuously during testing to identify:

- Laser alignment stability in Raman systems

- Optical component displacement affecting spectral resolution

- Electrical connection integrity for detectors and light sources
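A random-vibration profile is usually specified as an acceleration power spectral density (PSD), and its overall severity is summarized as g rms, the square root of the area under the PSD curve. The protocol above gives only the 5-500 Hz band; the example below assumes a flat 0.01 g²/Hz level, the value quoted for the MIL-STD-810H profile in the vibration and shock protocol later in this guide. The helper name is hypothetical, and sloped (dB/octave) segments, which many standard profiles include, are not handled here.

```python
import math


def grms_flat(segments: list[tuple[float, float, float]]) -> float:
    """Overall g rms of a random-vibration profile built from flat PSD segments.

    Each segment is (f_low_hz, f_high_hz, psd_g2_per_hz). For a flat segment the
    area under the PSD is psd * (f_high - f_low); g rms is the square root of the
    summed area.
    """
    area = 0.0
    for f_low, f_high, psd in segments:
        if f_high <= f_low or psd < 0:
            raise ValueError(f"invalid segment: {(f_low, f_high, psd)}")
        area += psd * (f_high - f_low)  # g^2 contributed by this segment
    return math.sqrt(area)


print(round(grms_flat([(5.0, 500.0, 0.01)]), 3))  # 2.225
```

A single number like this makes it easier to compare the severity of different vibration profiles when several instruments are tested at different sites.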

Environmental Ruggedness Validation

Temperature Cycling Protocol: Devices are subjected to temperature extremes from -10°C to 50°C [19] with performance measurements at 10°C intervals:

- Battery performance monitoring including runtime and charging capability

- Electronic stability assessment via signal-to-noise ratio measurements

- Optical component performance, including the condition of lens coatings and mirror substrates

Ingress Protection Testing: Following IP65/IP67 standards [19], devices are operated while exposed to dust and to water, either as water jets (IP65) or as temporary immersion (IP67):

- Internal contamination inspection via internal visual access points

- Circuit board corrosion assessment through electrical continuity testing

- Optical path integrity verification using calibrated reflectance standards
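When comparing instruments, it helps to decode IP ratings consistently. In the IEC 60529 IP code the first numeral rates protection against solids (6 = dust-tight) and the second against water (5 = water jets, 7 = temporary immersion). Numerals up to 6 are cumulative, but an immersion rating (7 or 8) does not guarantee jet protection unless the enclosure is dual-marked (e.g., IP65/IP67). The sketch below is hypothetical helper code; it conservatively treats any immersion rating as not covering non-immersion water requirements and omits the 9K high-pressure rating.

```python
from typing import Optional, Tuple

# IEC 60529 meanings of the two characteristic numerals (abbreviated).
SOLIDS = {0: "not protected", 1: "objects >= 50 mm", 2: "objects >= 12.5 mm",
          3: "objects >= 2.5 mm", 4: "objects >= 1 mm", 5: "dust-protected",
          6: "dust-tight"}
LIQUIDS = {0: "not protected", 1: "vertically dripping water",
           2: "dripping water, tilted up to 15 degrees", 3: "spraying water",
           4: "splashing water", 5: "water jets", 6: "powerful water jets",
           7: "temporary immersion", 8: "continuous immersion"}


def parse_ip(code: str) -> Tuple[Optional[int], Optional[int]]:
    """Parse 'IP65', 'IP6X' or 'IPX7' into (solids, liquids); 'X' means not rated."""
    text = code.strip().upper()
    if len(text) != 4 or not text.startswith("IP"):
        raise ValueError(f"unsupported IP code: {code!r}")
    solids = None if text[2] == "X" else int(text[2])
    liquids = None if text[3] == "X" else int(text[3])
    if (solids is not None and solids not in SOLIDS) or (
            liquids is not None and liquids not in LIQUIDS):
        raise ValueError(f"numeral out of range: {code!r}")
    return solids, liquids


def satisfies(marking: str, required: str) -> bool:
    """True if any code in a (possibly dual) marking like 'IP65/IP67' meets `required`."""
    req_sol, req_liq = parse_ip(required)
    for code in marking.split("/"):
        sol, liq = parse_ip(code)
        solids_ok = req_sol is None or (sol is not None and sol >= req_sol)
        if req_liq is None:
            liquids_ok = True
        elif liq is None:
            liquids_ok = False
        elif req_liq <= 6 and liq >= 7:
            liquids_ok = False  # conservative: immersion rating does not imply jets
        else:
            liquids_ok = liq >= req_liq
        if solids_ok and liquids_ok:
            return True
    return False


print(satisfies("IP65", "IP54"), satisfies("IP67", "IP65"), satisfies("IP65/IP67", "IP65"))
# True False True
```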

The Researcher's Toolkit: Essential Materials for Field Spectroscopy

Table 3: Research Reagent Solutions for Field Spectrometer Validation

| Reagent/Material | Function in Testing | Application Context |
|---|---|---|
| NIST-Traceable Reference Standards | Spectral calibration and wavelength verification | Method validation, instrument qualification [20] |
| Stable Chemical Compounds (e.g., acetaminophen, aspirin) | Performance verification for specific applications | Pharmaceutical raw material identification [19] |
| Custom Spectral Libraries | Application-specific method development | Targeted screening (narcotics, explosives, pharmaceuticals) [19] |
| Environmental Challenge Samples | Simulated field condition testing | Soil analysis, contaminated material screening [20] |
| Portable Calibration Accessories | Field recalibration capabilities | Extended deployment without laboratory access [6] |


Performance-Durability Trade-offs: Engineering Compromises

The miniaturization process necessitates careful engineering compromises that directly impact field performance. Understanding these trade-offs is essential for selecting appropriate instrumentation for specific research applications.

The miniaturization of spectroscopic instruments presents a complex balance between performance retention and ruggedness enhancement. For researchers and drug development professionals, successful implementation requires careful consideration of application-specific requirements. Portable FTIR and Raman spectrometers have demonstrated sufficient performance for field-based identification tasks, with studies showing performance comparable to benchtop systems for qualitative analysis [20]. The key to successful deployment lies in understanding that miniaturized instruments are not merely compact versions of laboratory systems, but specialized tools designed for specific field applications. Current technological trends, including AI-enhanced spectral interpretation, smartphone integration, and multi-technology platforms, are rapidly addressing existing limitations [18] [23]. As the market continues to evolve at a remarkable pace, with the chip-scale spectrometer segment projected to grow from less than $2 million in 2019 to over $1.6 billion in 2024 [24], researchers can expect continued improvement in both the performance and durability of field-deployable spectroscopic tools. Strategic selection criteria should prioritize application-specific performance validation alongside traditional ruggedness specifications to ensure reliable results in field-based research environments.

From Specification to Practice: Methodologies for Application-Specific Ruggedness Testing

The deployment of handheld spectrometers in field conditions—ranging from pharmaceutical quality control to environmental monitoring—demands rigorous validation under realistic operational scenarios. Simulated field tests are indispensable for evaluating how these portable analytical instruments perform when exposed to environmental stressors such as temperature fluctuations, vibration, humidity, and physical shock. The core objective of ruggedness testing is to bridge the gap between controlled laboratory performance and reliable field operation, ensuring that devices deliver accurate, reproducible results despite the challenges of the real world. The evolution of these tests is closely linked to advancements in spectrometer technology itself, with the market seeing a distinct trend toward miniaturized, field-ready equipment [6]. A comprehensive ruggedness testing framework not only validates a device's operational limits but also provides critical data for researchers and professionals who must select the right tool for demanding applications in drug development, security, and industrial compliance.

The necessity for such testing is underscored by the expanding applications of handheld spectrometers. These devices are no longer confined to laboratory benchtops but are routinely used for on-site material verification in manufacturing, contaminant detection in food and pharmaceuticals, and elemental analysis in mining and geology [25]. In each scenario, the instrument must withstand the specific environmental pressures of its operational context. A spectrometer used for quality control on a manufacturing floor, for instance, must tolerate constant vibration and dust, while a device deployed for environmental screening may face rain and wide temperature variations. Therefore, designing simulated field tests that accurately reproduce these stressors is a critical component of modern spectrometer development and selection, providing an empirical basis for confidence in field-based data.

Key Handheld Spectrometer Technologies and Their Environmental Tolerance

The landscape of handheld spectroscopy is characterized by diverse technologies, each with unique principles, applications, and susceptibilities to environmental factors. Understanding these technologies is the first step in designing meaningful field tests. The following table summarizes the primary handheld spectrometer types, their operating principles, and the environmental stressors most critical to their performance.

Table 1: Key Handheld Spectrometer Technologies and Critical Stressors

| Technology | Operating Principle | Common Field Applications | Key Environmental Stressors |
|---|---|---|---|
| Handheld XRF | Measures elemental composition by exciting a sample and analyzing the emitted X-ray fluorescence spectrum [26]. | Mining, geology, environmental soil screening, compliance testing for restricted substances [26] [25]. | Temperature extremes (affecting detector stability), physical shock (damaging the fragile X-ray tube), humidity (potential for electrical damage). |
| Handheld Raman | Shines a laser on a sample and detects the inelastically scattered light, providing a molecular fingerprint [6]. | Pharmaceutical raw material verification, identification of narcotics and hazardous chemicals [6] [25]. | Ambient light (can swamp the weak Raman signal), vibration (misaligns optical components), sample fluorescence (interferes with spectrum). |
| Handheld NIR | Measures absorption of near-infrared light to determine molecular overtone and combination vibrations [6]. | Agriculture (grain, moisture analysis), pharmaceutical QC, food authenticity [6] [25]. | Temperature and humidity (significantly alter water absorption bands in the NIR region), sample presentation (requires consistent pressure and placement). |
| Handheld FTIR | Uses an interferometer to measure all infrared frequencies simultaneously, creating a broad-spectrum molecular signature [6]. | Polymer and plastic identification, environmental contaminant analysis, explosive detection [6] [27]. | Vibration (critical for interferometer mirror alignment), dust (contaminates optical surfaces), temperature (can cause thermal drift in the signal). |

The performance of these technologies under stress is not merely about durability; it is about analytical integrity. For example, a handheld XRF analyzer like the SPECTRO xSORT is designed for extended one-handed use in challenging field conditions, with ergonomic improvements aimed at reducing user fatigue and improving data consistency during prolonged operation [26]. Meanwhile, devices intended for military or emergency response, such as certain explosive detectors, may be built to meet stringent robustness standards like MIL-STD-810H, which certifies their ability to withstand drops, vibration, and exposure to rain and dust [27]. When designing field tests, it is imperative to move beyond generic stress applications and tailor the protocols to the specific technological vulnerabilities and intended use-cases of the device under test.

Experimental Protocols for Simulated Field Testing

A robust simulated field testing protocol must subject handheld spectrometers to a sequence of controlled stressors that mimic real-world conditions, while simultaneously quantifying their impact on analytical performance. The following workflow provides a generalized structure for such testing, which can be adapted for specific spectrometer technologies and application scenarios.

Diagram 1: Field testing workflow for spectrometer ruggedness evaluation.

Detailed Testing Methodology

The workflow outlined above is operationalized through a series of specific, repeatable experimental procedures. The table below details the core protocols for applying key environmental stressors and measuring the corresponding performance metrics.

Table 2: Experimental Protocols for Key Environmental Stressors

| Stress Category | Simulation Protocol | Performance Metrics & Measurement | Acceptance Criteria |
|---|---|---|---|
| Thermal Cycling | Place device in an environmental chamber. Cycle between -5°C and 45°C with 80% relative humidity at the high-temperature stage. Dwell at each extreme for 2 hours. Complete 3 full cycles [27]. | Measure wavelength shift (cm⁻¹ for IR, nm for Raman) on a NIST-traceable polystyrene standard. Record signal-to-noise ratio (SNR) at each temperature extreme. | Wavelength shift ≤ 1 cm⁻¹ (IR) or 0.1 nm (Raman). SNR degradation ≤ 15% from baseline. |
| Vibration & Shock | Mount device on a vibration table. Apply random vibration per MIL-STD-810H Profile 21 (0.01 g²/Hz, 5-500 Hz). For shock testing, use a drop test from 1 meter onto plywood over concrete [27]. | Pre- and post-test, acquire a spectrum of a certified reference material. Check for physical damage and optical misalignment via visual inspection and automated software diagnostics. | No physical damage or permanent functional failure. Analytical result of reference material remains within certified uncertainty range. |
| Ambient Light & Weather | Operate the spectrometer in a controlled arena with adjustable full-spectrum lighting (0 to 100,000 lux). For weather resistance, a dust chamber and water spray apparatus are used to simulate field conditions. | For ambient light: Measure the standard deviation of the baseline signal in a dark vs. bright environment. For weather: Inspect for ingress of dust/water and test electrical safety and function. | Baseline noise increase ≤ 10% under 50,000 lux. Device remains fully operational with an IP54 rating or better. |
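The numeric acceptance criteria in the thermal-cycling and ambient-light rows can be applied mechanically to each measurement point. This sketch copies the limits from the table; the technique keys, function names, and example values are hypothetical, and "SNR degradation" is taken to mean the relative SNR loss at a temperature extreme compared with the pre-test baseline.

```python
# Acceptance limits from the thermal-cycling and ambient-light rows of the table.
MAX_SHIFT = {"IR": 1.0, "Raman": 0.1}  # cm^-1 for IR, nm for Raman
MAX_SNR_DEGRADATION_PCT = 15.0         # relative to the pre-test baseline
MAX_NOISE_INCREASE_PCT = 10.0          # baseline noise at 50,000 lux vs. dark


def pct_change(before: float, after: float) -> float:
    return 100.0 * (after - before) / before


def thermal_cycling_passes(technique: str, shift: float,
                           snr_baseline: float, snr_at_extreme: float) -> bool:
    """Both the wavelength shift and the SNR loss at an extreme are within limits."""
    snr_loss_pct = -pct_change(snr_baseline, snr_at_extreme)
    return abs(shift) <= MAX_SHIFT[technique] and snr_loss_pct <= MAX_SNR_DEGRADATION_PCT


def ambient_light_passes(noise_sd_dark: float, noise_sd_bright: float) -> bool:
    """Baseline-noise standard deviation under bright light is within limit."""
    return pct_change(noise_sd_dark, noise_sd_bright) <= MAX_NOISE_INCREASE_PCT


# Hypothetical readings at one temperature extreme and one lighting condition.
print(thermal_cycling_passes("IR", 0.8, 200.0, 176.0))  # True (12% SNR loss)
print(thermal_cycling_passes("IR", 1.5, 200.0, 160.0))  # False
print(ambient_light_passes(2.0, 2.5))                   # False (25% increase)
```

In practice the thermal check would be run at both extremes of every cycle, and a device passes only if every point passes.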

Performance Benchmarking and Validation

The ultimate goal of simulated field testing is to generate comparable, quantitative data on how different handheld spectrometers perform under duress. This allows researchers and engineers to benchmark devices against each other and against the requirements of a specific application. The following table provides a hypothetical comparison of how different technologies might perform against the key metrics outlined in the protocols.

Table 3: Example Performance Benchmarking Under Stressors

| Spectrometer Model (Tech) | Thermal Shift (cm⁻¹) | SNR Degradation | Vibration Tolerance | Overall Ruggedness Score |
|---|---|---|---|---|
| Model A (FTIR) | 0.8 | 12% | High (No misalignment) | 9/10 |
| Model B (Raman 1064nm) | 0.2 | 5% | Medium (Minor recalibration needed) | 8/10 |
| Model C (NIR) | 1.5 | 20% | High (No misalignment) | 7/10 |
| Model D (XRF) | N/A (Elemental) | 8% | Low (Required detector reset) | 6/10 |

Note: Data is illustrative, compiled from generalized performance characteristics reported in the literature [6] [26] [27].

Validation of the entire testing process is achieved by including control measurements and standardized materials at every stage. The use of well-characterized calibration standards, such as NIST-traceable polymers for wavelength accuracy or certified reference materials (CRMs) for analytical accuracy, is non-negotiable [25]. Furthermore, the testing protocol itself should be validated for repeatability by running the same battery of tests on multiple units of the same spectrometer model. This structured approach to benchmarking provides drug development professionals and other researchers with an objective, data-driven foundation for selecting the instrument best suited to their specific field conditions.
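Repeatability across units of the same model can be summarized with the mean, the sample standard deviation, and the relative standard deviation (%RSD) of each metric. This is a minimal sketch with hypothetical unit IDs and values. %RSD is not meaningful for metrics centred near zero, such as wavelength shift; the standard deviation alone is the better summary there.

```python
from statistics import mean, stdev


def unit_repeatability(results_by_unit: dict[str, float]) -> dict[str, float]:
    """Mean, sample SD and %RSD of one metric measured on several units."""
    values = list(results_by_unit.values())
    if len(values) < 2:
        raise ValueError("need results from at least two units")
    m = mean(values)
    s = stdev(values)  # sample standard deviation (n - 1 denominator)
    return {"mean": m, "sd": s, "rsd_pct": 100.0 * s / m}


# Hypothetical post-vibration results for a soil CRM (mg/kg Pb) on three units.
print(unit_repeatability({"unit-1": 98.0, "unit-2": 100.0, "unit-3": 102.0}))
# {'mean': 100.0, 'sd': 2.0, 'rsd_pct': 2.0}
```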

The Researcher's Toolkit for Field Testing

Implementing a comprehensive ruggedness testing program requires access to specific tools, materials, and analytical standards. The following table details the essential components of a "research reagent solutions" kit for designing and executing simulated field tests for handheld spectrometers.

Table 4: Essential Research Toolkit for Spectrometer Field Testing

| Tool / Material | Function in Testing | Application Example |
|---|---|---|
| Environmental Chamber | Precisely controls temperature and humidity to simulate climatic extremes encountered in the field. | Cycling a device from -5°C to 45°C with 80% RH to test thermal stability and lens fogging [27]. |
| Vibration Table & Drop Test Rig | Applies standardized mechanical shocks and vibrations to simulate transportation and rough handling. | Using a MIL-STD-810H vibration profile to assess the integrity of optical alignments and internal components [27]. |
| NIST-Traceable Standards | Provides a ground truth for verifying spectral accuracy (wavelength) and signal intensity before, during, and after stress tests. | Using a polystyrene standard to measure wavelength shift in a handheld FTIR after thermal cycling [6]. |
| Certified Reference Materials (CRMs) | Materials with a certified composition for validating the analytical accuracy of the device under test. | Analyzing a soil CRM with a known lead concentration using a handheld XRF to check for analytical drift post-vibration [26]. |
| Portable Light Meter & Lux Source | Quantifies ambient light levels to test a spectrometer's immunity to external light interference. | Measuring the signal-to-noise ratio of a handheld Raman spectrometer under 50,000 lux to simulate outdoor use. |


Designing simulated field tests for handheld spectrometers is a multidisciplinary endeavor that integrates materials science, environmental engineering, and analytical chemistry. By systematically applying controlled stressors—thermal, mechanical, and optical—and meticulously measuring their impact on critical performance metrics, researchers can generate a complete ruggedness profile for any device. The experimental protocols and benchmarking frameworks outlined here provide a roadmap for objectively comparing the field-readiness of different spectrometer technologies, from handheld XRF and Raman to NIR and FTIR. As these devices continue to evolve, becoming more integrated with AI and cloud data systems [25], the methods for testing their resilience must similarly advance. For the scientific and drug development community, adopting such rigorous and standardized testing methodologies is paramount for ensuring that the data collected in the field is as reliable as that produced in the laboratory, thereby upholding the integrity of research and compliance activities in increasingly challenging environments.

In the highly regulated pharmaceutical and biomedical industries, the accurate identification of raw materials and detection of contaminants are critical steps to ensure product safety and efficacy. Vibrational spectroscopic techniques, particularly those deployed in portable and handheld instruments, have emerged as powerful tools for rapid, non-destructive analysis directly at the point of need. Mid-infrared (MIR) and Raman spectroscopy provide molecular fingerprinting capabilities that enable unambiguous material verification and quality assessment [28]. The ruggedness and reliability of these handheld spectrometers under variable field conditions have become a focal point of research, especially as the technology transitions from controlled laboratory environments to diverse real-world settings such as manufacturing floors, warehouses, and distribution centers.

The fundamental advantage of spectroscopic methods lies in their ability to provide immediate chemical-specific information without extensive sample preparation. As Richard Crocombe notes in European Pharmaceutical Review, "Over the last twenty years, the combination of advances in consumer electronics, telecommunications optics and photonics miniaturisation has driven the development of a wide variety of portable spectrometers," making this technology increasingly accessible for pharmaceutical applications [28]. This guide provides a comprehensive comparison of the performance characteristics of leading spectroscopic technologies, supported by experimental data and detailed methodologies for their validation in field conditions.

Comparative Analysis of Spectroscopic Technologies

Performance Characteristics of Portable Spectrometers

The selection of an appropriate spectroscopic technique depends heavily on the specific application requirements, including detection limits, susceptibility to interferences, and operational constraints. The following table summarizes the key performance characteristics of major portable spectroscopic technologies based on current research findings:

Table 1: Performance Comparison of Portable Spectroscopic Technologies

| Performance Characteristic | Portable IR Spectroscopy | Portable Raman Spectroscopy | Color-Based Field Tests |
|---|---|---|---|
| Limit of Detection (Cocaine HCl) | 25% with common adulterants [21] | Higher than IR due to fluorescence issues [21] | 10% with all adulterants tested [21] |
| False Positive Rate | Minimal [21] | Minimal [21] | High (73 known interfering compounds) [21] |
| Analysis Time | Seconds to minutes [21] | 10-30 seconds [19] | Several minutes [21] |
| Sample Preparation | Minimal | None required; measures through packaging [19] | Required sampling, potential exposure risk [21] |
| Destructive Testing | Non-destructive [21] | Non-destructive [19] | Destructive [21] |
| Fluorescence Interference | Not affected | Significant for common drugs like heroin [21] | Not applicable |
| Adulterant Interference | Less severe [21] | More severe [21] | Significant, with many known interferents [21] |
| Initial Investment | High [21] | High [21] | Low (but recurring costs) [21] |

Portable IR spectroscopy demonstrates particular advantages for pharmaceutical applications due to its lower limit of detection and reduced susceptibility to adulterant interference compared to Raman alternatives [21]. However, portable Raman spectrometers offer the distinct advantage of through-package analysis, enabling verification of materials without breaching container integrity—a valuable feature for raw material identification (RMID) in pharmaceutical manufacturing and counterfeit detection [28] [19].

Handheld vs. Benchtop Spectrometer Performance

A critical consideration in method development is whether portable instruments can match the performance of laboratory-based systems. Research on portable Fourier-Transform Infrared (FTIR) spectrometers has shown promising results in this regard. One study directly compared a portable Agilent 4300 Handheld FTIR spectrometer with a Bruker Tensor 27 benchtop instrument for the analysis of soil properties such as organic carbon, nitrogen, and clay content [20]. Although soil is not a pharmaceutical matrix, the study is a useful head-to-head benchmark of portable versus benchtop FTIR performance.

Table 2: Portable vs. Benchtop FTIR Performance Comparison

| Performance Metric | Portable Handheld FTIR (DRIFT) | Benchtop FTIR (DRIFT) | Benchtop FTIR (DHR with Integrating Sphere) |
|---|---|---|---|
| Spectral Quality | Good, slightly higher noise | Good | Best |
| Prediction Accuracy for Soil Organic Carbon (SOC) | High (comparable to benchtop DRIFT) | High | Slightly better |
| Prediction Accuracy for Nitrogen (N) | High (comparable to benchtop DRIFT) | High | Slightly better |
| Noise Level | Moderate (did not markedly affect calibration accuracy) | Low | Lowest |
| Key Spectral Regions | Good match with benchtop DHR for SOC and N | Good match with portable | Reference standard |
| Multivariate Calibration Performance (PLS) | As good as or slightly better than benchtop DRIFT | Good | Best for some parameters |

The study concluded that "portable FTIR instruments are a viable alternative for MIR measurements in the laboratory and offer great potential for on-site applications" [20]. Although that work analyzed soils, the result suggests that on-site verification of pharmaceutical raw materials with portable FTIR could streamline quality control while maintaining analytical rigor.

Experimental Protocols for Ruggedness Testing

Framework for Ruggedness Assessment

Robustness, defined by the International Council for Harmonisation (ICH, formerly the International Conference on Harmonisation) as "a measure of [an analytical procedure's] capacity to remain unaffected by small but deliberate variations in method parameters and provides an indication of its reliability during normal usage," is closely related to ruggedness, and the two terms are often used interchangeably [29]. For handheld spectrometers used in field conditions, ruggedness testing evaluates how environmental factors and operational variations affect analytical results.

The setup and execution of ruggedness tests involve several methodical steps [29]:

- Selection of factors and their levels: Choosing parameters most likely to affect results (e.g., temperature, humidity, operator skill, sample presentation)

- Selection of experimental design: Deciding between One Factor At a Time (OFAT) or Design of Experiments (DoE) approaches

- Selection of responses: Identifying relevant assay and system suitability test responses to monitor

- Definition of experimental protocol: Establishing sequence and conditions for experiments

- Execution of experiments: Conducting tests according to protocol, often in randomized order

- Estimation of factor effects: Quantifying the impact of each varied parameter

- Graphical and statistical analysis: Interpreting results to determine significance of effects

- Drawing conclusions: Implementing measures to control influential factors
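The effect-estimation and statistical-analysis steps can be sketched in a few lines of Python. This is a minimal illustration, assuming a two-level design coded as +1/-1. For the significance check it uses one approach described in robustness-testing guidance: the critical effect is derived from the effects of "dummy" factors, which are design columns with no real factor assigned. The function names, the 2x2 data set, and the dummy effects are hypothetical.

```python
import math
from statistics import mean

def factor_effects(design, responses):
    """Return the effect of each factor in a two-level (+1/-1) design.

    design    -- list of runs, each a tuple of +1/-1 factor levels
    responses -- measured response for each run, in the same order
    Effect = mean response at the +1 level minus mean response at the -1 level.
    """
    n_factors = len(design[0])
    effects = []
    for j in range(n_factors):
        high = [y for run, y in zip(design, responses) if run[j] == +1]
        low = [y for run, y in zip(design, responses) if run[j] == -1]
        effects.append(mean(high) - mean(low))
    return effects

def critical_effect(dummy_effects, t_crit):
    """Critical effect estimated from dummy-factor effects (hypothetical helper).

    Standard error of an effect = sqrt(sum(E_dummy^2) / n_dummy).
    t_crit is the two-sided t value for n_dummy degrees of freedom,
    supplied by the user from statistical tables.
    """
    se = math.sqrt(sum(e * e for e in dummy_effects) / len(dummy_effects))
    return t_crit * se

# Hypothetical 2x2 full factorial: factor A = temperature, factor B = humidity.
design = [(-1, -1), (+1, -1), (-1, +1), (+1, +1)]
responses = [10.0, 12.0, 11.0, 13.0]
print(factor_effects(design, responses))  # → [2.0, 1.0]

# Hypothetical effects of two dummy factors; 4.303 is t(0.05, two-sided, 2 df).
dummy = [0.1, -0.2]
print(round(critical_effect(dummy, t_crit=4.303), 3))
```

A factor whose absolute effect exceeds the critical effect would be flagged as influential and become a candidate for control in the final step.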

Experimental Designs for Ruggedness Testing

Two primary experimental approaches are used in ruggedness testing of analytical methods:

The One Factor At a Time (OFAT) approach varies one factor at a time while holding the others at their optimal (nominal) levels, as illustrated in the following example for an HPLC method [30]:

Table 3: Example of OFAT Experimental Design

| Experiment | Run Order | Factor A: pH | Factor B: Additive Conc. | Factor C: Column Temp. | Response: Retention Time |
|---|---|---|---|---|---|
| 1 | 3 | Optimal | Optimal | + | 7.95 |
| 2 | 6 | Optimal | Optimal | - | 8.13 |
| 3 | 5 | Optimal | + | Optimal | 8.12 |
| 4 | 1 | Optimal | - | Optimal | 7.72 |
| 5 | 4 | + | Optimal | Optimal | 8.32 |
| 6 | 2 | - | Optimal | Optimal | 9.82 |
| 7 | 7 | Optimal | Optimal | Optimal | 8.03 |

This approach is straightforward to implement and interpret, making it suitable for routine laboratories [30]. However, it cannot detect interactions between factors.
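The OFAT results in Table 3 can be summarized with a short Python sketch. For each factor it computes the change in retention time from the low (-) to the high (+) level and the largest deviation from the nominal run (experiment 7). This summary metric is an illustrative choice, not one prescribed by [30], and the function name is hypothetical.

```python
# Retention times from Table 3, keyed by factor and level.
nominal = 8.03  # experiment 7: all factors at their optimal (nominal) level
ofat = {
    "pH": {"+": 8.32, "-": 9.82},
    "Additive conc.": {"+": 8.12, "-": 7.72},
    "Column temp.": {"+": 7.95, "-": 8.13},
}

def ofat_summary(ofat, nominal):
    """For each factor, return (effect, worst-case % deviation from nominal)."""
    summary = {}
    for factor, levels in ofat.items():
        effect = levels["+"] - levels["-"]  # change in response from - to +
        worst = max(abs(y - nominal) for y in levels.values())
        summary[factor] = (round(effect, 2), round(100 * worst / nominal, 1))
    return summary

for factor, (effect, dev) in ofat_summary(ofat, nominal).items():
    print(f"{factor}: effect = {effect:+.2f}, max deviation = {dev}%")
```

With these data, pH has by far the largest influence. Setting it to its low level shifts retention time by about 22% from nominal, compared with roughly 1% for column temperature.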

Design of Experiments (DoE) approaches, such as fractional factorial or Plackett-Burman designs, vary multiple factors simultaneously in a structured pattern. These designs evaluate multiple parameters more efficiently. Higher-resolution fractional factorials can also estimate some two-factor interactions, whereas Plackett-Burman screening designs are intended for main effects only. Both require greater statistical expertise to implement and interpret [29] [30]. For evaluating ruggedness as defined by the United States Pharmacopeia, which involves assessment across different laboratories, analysts, instruments, and days, nested designs may be more appropriate than two-level screening designs [31].
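The Python sketch below makes the DoE alternative concrete. It builds an 8-run Plackett-Burman screening design, which can examine up to seven two-level factors in eight experiments, and checks that its columns are orthogonal, so each main effect is estimated independently of the other main effects. The generator row is the one commonly tabulated for N = 8. The function names are hypothetical.

```python
import itertools

# Generator row commonly tabulated for the 8-run Plackett-Burman design.
GENERATOR_8 = [+1, +1, +1, -1, +1, -1, -1]

def plackett_burman_8():
    """Build the 8-run, 7-column Plackett-Burman design (+1/-1 coding).

    Rows 1-7 are cyclic shifts of the generator; row 8 is all -1.
    """
    n = len(GENERATOR_8)
    rows = [[GENERATOR_8[(j - i) % n] for j in range(n)] for i in range(n)]
    rows.append([-1] * n)
    return rows

def is_orthogonal(design):
    """True if every pair of columns has a zero dot product."""
    cols = list(zip(*design))
    return all(sum(a * b for a, b in zip(c1, c2)) == 0
               for c1, c2 in itertools.combinations(cols, 2))

design = plackett_burman_8()
for run in design:
    print(" ".join("+" if x > 0 else "-" for x in run))
print("orthogonal:", is_orthogonal(design))  # → orthogonal: True
```

In practice the real factors (e.g., temperature, humidity, operator, sample presentation) are assigned to the first columns. Any unused columns can serve as dummy factors for estimating experimental error. The runs are then executed in randomized order.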

Methodology for Handheld Raman Spectrometer Evaluation

A robust protocol for evaluating handheld Raman spectrometers in pharmaceutical applications should include the following steps, adapted from research on raw material identification [32]:

- Instrument Calibration: Develop methods using pure reference standards for each compound of interest, collecting at least 20 scans to capture variation in sampling position, packaging material, and operator technique.

- Method Development: Create customized identification methods in which proprietary algorithms compare unknown spectra against the reference method and return a numerical P-value (1.000 represents a perfect fit) used for the PASS/FAIL decision.

- Validation Testing: Test all materials against the specific methods generated from pure reference standards; a typical analysis takes approximately 20 seconds per sample.

- Data Interpretation: Evaluate results using both P-value thresholds and Hit Quality Index (HQI) values. An HQI of 100% indicates identical spectra, and the value decreases as spectra become less similar.
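The HQI comparison in the data-interpretation step can be illustrated with a Python sketch. It assumes one widely used definition of HQI: the squared cosine similarity between two spectra sampled on the same wavenumber axis, scaled to 100. Some vendors mean-center the spectra first, and the P-value reported by commercial instruments comes from proprietary algorithms that are not reproduced here. The 95% PASS threshold and the function names are hypothetical.

```python
import math

def hit_quality_index(unknown, reference):
    """HQI (%) as the squared cosine similarity of two spectra.

    Both spectra must share the same wavenumber axis. Identical or
    proportionally scaled spectra give 100; orthogonal spectra give 0.
    """
    if len(unknown) != len(reference):
        raise ValueError("spectra must have the same number of points")
    dot = sum(u * r for u, r in zip(unknown, reference))
    norm_u = sum(u * u for u in unknown)
    norm_r = sum(r * r for r in reference)
    return 100.0 * dot * dot / (norm_u * norm_r)

def average_scans(scans):
    """Average replicate scans point by point (e.g., the >= 20 reference scans)."""
    return [sum(points) / len(points) for points in zip(*scans)]

def identify(unknown, reference, threshold=95.0):
    """PASS/FAIL decision using a hypothetical HQI threshold."""
    return "PASS" if hit_quality_index(unknown, reference) >= threshold else "FAIL"

# Hypothetical 4-point spectra: two reference scans and a scaled unknown.
reference = average_scans([[0.10, 0.80, 0.30, 0.05], [0.12, 0.78, 0.31, 0.04]])
print(identify([0.2, 1.6, 0.6, 0.1], reference))  # → PASS
```

Because HQI depends only on spectral shape, it is insensitive to overall intensity changes caused by packaging or focus. For the same reason, it cannot by itself separate compounds with nearly identical spectra, such as closely related cellulose grades.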

This methodology was successfully applied to differentiate between various cellulose materials and food additives—compounds that are particularly challenging due to their similar appearance and composition [32]. The study demonstrated that modern handheld Raman instruments with advanced optical designs, thermally stabilized detectors, and intelligent algorithms can achieve reliable identification of these complex materials.

The Scientist's Toolkit: Essential Research Reagent Solutions

The implementation of spectroscopic methods requires not only instrumentation but also appropriate standards and reagents for method development and validation. The following table outlines key materials used in the development and validation of spectroscopic methods for pharmaceutical applications:

Table 4: Essential Materials for Spectroscopic Method Development

| Material/Standard | Function in Research & Development | Example Applications |
|---|---|---|
| Cellulose Compounds | Model challenging materials for method validation; represent common excipients [32] | Differentiation between cellulose and microcrystalline cellulose used as pharmaceutical binders/fillers |
| Hydroxypropyl Methylcellulose (HPMC) Compounds | Test specificity for chemically similar compounds; challenge spectral library resolution [32] | Identification of HPMC (hypromellose) products such as Methocel and Metolose used as binding agents |
| Lactose | Represent a common pharmaceutical sweetening agent/filler; validate methods for common excipients [32] | Raw material verification of sweetening agents in solid dosage forms |
| Maltodextrin | Challenge methods with food additives/sweetening agents; test fluorescence mitigation [32] | Identification of food additives and excipients in pharmaceutical formulations |
| Calcium Monohydrogen Phosphate | Model inorganic pharmaceutical additives; validate methods for mineral-based compounds [32] | Verification of binder/dietary supplement materials |
| Spectral Library | Reference database for compound identification; essential for accurate material verification [19] | All spectroscopic identification applications |
| System Suitability Standards | Verify that instrument performance meets specifications; quality control check [29] | Daily instrument qualification and method validation |



Handheld spectroscopic instruments now offer performance that, in many applications, rivals their benchtop counterparts while adding the flexibility of field-based analysis. The choice between portable IR and Raman technologies depends on the application. IR offers lower detection limits and less adulterant interference, whereas Raman allows through-package analysis. Ruggedness testing with structured experimental designs is essential to validate these instruments for variable field conditions and to ensure reliable performance across operators, environments, and sample presentations. With continued improvements in detector sensitivity, fluorescence mitigation, and spectral libraries, handheld spectrometers are poised to play an increasingly central role in pharmaceutical and biomedical testing, from raw material verification to contaminant detection.

Handheld spectrometers have become indispensable in field-based research and drug development, enabling rapid, on-site identification and quantification of substances. However, environmental stressors such as temperature fluctuations, physical shock, and variable sample presentation challenge their analytical performance outside the laboratory. These stressors can cause signal instability and calibration drift, in which a spectrometer's readings gradually deviate from their calibrated baseline over time. For researchers and scientists, such drift can compromise data integrity and lead to inaccurate conclusions.

This guide compares the performance of leading handheld spectrometer technologies, focusing on their resilience to signal decay and on the methods used to detect and correct calibration drift. Framed within a broader thesis on ruggedness testing, it provides a basis for selecting instruments from empirical data and established experimental protocols.

Technology Comparison: Handheld Spectrometers at a Glance

The market offers various handheld spectrometer technologies, each with unique strengths and vulnerabilities. The table below summarizes the core characteristics and documented performance of key systems.

Table 1: Performance Comparison of Handheld Spectrometer Technologies Under Field Conditions

| Technology | Primary Applications | Key Ruggedness Features | Reported Performance Under Duress |
|---|---|---|---|
| Handheld Raman (e.g., Agilent Resolve) | Narcotics, explosives, and chemical identification through sealed containers [33] | Agilent's proprietary implementation of spatially offset Raman spectroscopy (SORS); each unit tested for 10 hours in freezing and 10 hours in overheating conditions [33] | Identifies materials through opaque containers in under 28 seconds; ranked top-performing by the U.S. Department of Homeland Security [33] |
| Handheld XRF (e.g., SPECTRO xSORT) | Elemental analysis for geology, mining, and environmental compliance screening [26] | Redesigned compact, balanced body for one-handed use; physical buttons for gloved operation [26] | Delivers rapid, accurate measurements across diverse sample types, even in remote or challenging field conditions [26] |
| Ion Mobility Spectrometers | Explosive and chemical detection for security applications [34] | High sensitivity and rapid response time; designed for rugged, unpredictable conditions [34] | Market data rather than test data: projected to hold a 38.6% market revenue share in 2025, attributed to portability and reliability in field environments [34] |

Experimental Protocols for Assessing Signal Stability

To ensure data quality, researchers must employ standardized protocols for stress-testing handheld spectrometers. The following methodologies are critical for evaluating signal stability.

Environmental Stress Testing

Agilent's manufacturing process for the Resolve handheld Raman spectrometer involves a rigorous environmental stress protocol. Each unit undergoes a sequence of extreme temperature exposures to validate component stability and signal integrity. The specific protocol is as follows [33]:

- Cold Soak: The instrument is placed in a controlled environment for 10 hours at freezing temperatures.

- Heat Soak: The instrument is subsequently exposed to 10 hours of overheating conditions.

- Thermal Shock: The unit is subjected to rapid temperature cycling to induce thermal stress.

- Continuous Scanning: Throughout and after the stress tests, the device performs constant self-scans to verify that its analytical performance remains consistent and within specified tolerances.

This protocol helps identify components susceptible to thermal expansion or contraction, which can cause misalignment in optical paths and lead to signal drift.
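The continuous-scanning check can be pictured as a tolerance test on a reference band logged during each self-scan. This is a hypothetical Python sketch. Agilent's actual self-test criteria are not described in [33]. It assumes the polystyrene ring-breathing band near 1001.4 cm^-1, a common Raman-shift reference, as the monitored feature, and it uses an illustrative tolerance and made-up scan values.

```python
def check_self_scans(peak_positions, nominal, tolerance):
    """Flag self-scans whose reference-peak position drifts out of tolerance.

    peak_positions -- measured position (cm^-1) of a reference band, one per scan
    nominal        -- certified position of that band (cm^-1)
    tolerance      -- allowed absolute shift (cm^-1); hypothetical, instrument-specific
    Returns the indices of out-of-tolerance scans and the largest shift observed.
    """
    shifts = [p - nominal for p in peak_positions]
    failures = [i for i, s in enumerate(shifts) if abs(s) > tolerance]
    max_shift = max(shifts, key=abs)
    return failures, max_shift

# Hypothetical self-scans logged across cold soak, heat soak, and thermal shock.
scans = [1001.3, 1001.5, 1001.2, 1002.1, 1001.4]
failures, max_shift = check_self_scans(scans, nominal=1001.4, tolerance=0.5)
print(failures, round(max_shift, 2))  # → [3] 0.7
```

A scan that fails this check during or after thermal cycling would point to the kind of optical misalignment described above.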

Signal Stability Detection for Inertial Sensors

While developed for Nuclear Magnetic Resonance (NMR) sensors, a published Signal Stability Detection (SSD) algorithm offers a generalizable framework for identifying stable signal periods in noisy data streams. This is crucial for distinguishing true analytical signal from low-frequency drift and high-frequency noise [35].

The method involves:

- Data Segmentation: The continuous sensor signal is divided into consecutive segments, each containing a fixed number of data points.

- Variance Calculation: The variance of each data segment is calculated.

- Stability Judgment: The variance of the current segment is compared to a predefined stability threshold. If the variance is below the threshold, the signal is classified as "stable"; otherwise, it is "unstable".

This simple yet effective algorithm allows for real-time assessment of signal quality, which can inform data acquisition and instrument operation.
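The three SSD steps can be sketched in Python as follows. The segment length, the threshold, and the use of population variance are illustrative assumptions, since the text does not specify them. The function name is hypothetical, and a trailing partial segment is simply ignored.

```python
from statistics import pvariance

def signal_stability(signal, segment_length, threshold):
    """Label consecutive fixed-length segments as 'stable' or 'unstable'.

    Each segment's (population) variance is compared with the threshold:
    below it the segment is 'stable', otherwise 'unstable'.
    A trailing partial segment is ignored.
    """
    labels = []
    for start in range(0, len(signal) - segment_length + 1, segment_length):
        segment = signal[start:start + segment_length]
        labels.append("stable" if pvariance(segment) < threshold else "unstable")
    return labels

# Hypothetical readings: a quiet segment followed by a noisy one.
signal = [1.00, 1.01, 0.99, 1.00, 1.30, 0.70, 1.25, 0.80]
print(signal_stability(signal, segment_length=4, threshold=0.001))  # → ['stable', 'unstable']
```

Each label depends only on the latest segment, so the same check could run as data arrive, gating spectral acquisition until the signal is judged stable.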

Methodologies for Monitoring and Correcting Calibration Drift

Calibration drift is a primary concern for long-term field deployments. Researchers have developed advanced computational and modeling techniques to monitor and suppress this drift.

Dynamic Calibration Curves with Online Learning