Spectroscopy in Pharma 2025: AI-Driven Methods, Market Trends, and Regulatory Advances


Abstract

This article provides a comprehensive overview of the latest spectroscopic technologies and their transformative applications in the pharmaceutical industry. Tailored for researchers, scientists, and drug development professionals, it explores foundational principles, cutting-edge methodological advances like AI-powered Raman and portable NIR, and strategic approaches for troubleshooting and optimization. It further examines evolving validation paradigms and offers a comparative analysis of techniques, synthesizing key trends to guide instrument selection, enhance analytical workflows, and meet stringent regulatory standards for drug development and quality control.

The Expanding Role of Spectroscopy in Modern Pharma: Market Drivers and Core Technologies

Molecular spectroscopy, the study of the interaction between matter and electromagnetic radiation, has become an indispensable tool in the pharmaceutical industry. This family of analytical techniques provides critical insights into molecular structure, composition, and dynamics across all stages of drug development and manufacturing. The global molecular spectroscopy market is positioned for steady growth. It is projected to increase from USD 7.15 billion in 2025 to approximately USD 9.04 billion by 2034, a compound annual growth rate (CAGR) of 2.64% [1]. This trajectory underscores spectroscopy's expanding role in pharmaceutical research, quality control, and process optimization. Market expansion is driven by increasing demand for advanced analytical techniques in drug discovery, development, and quality assurance, together with technological innovation. This whitepaper provides an in-depth technical analysis of the molecular spectroscopy market, with a specific focus on its applications, methodologies, and future trends within the pharmaceutical and biopharmaceutical sectors.

Market Size and Growth Projections

The molecular spectroscopy market shows consistent growth potential, although reported figures differ considerably between research firms because of differing methodologies and segment definitions. The sources nonetheless agree on a steady expansion driven by pharmaceutical and biotechnology applications.

Table 1: Molecular Spectroscopy Market Size and Growth Projections

| Metric | Towards Healthcare Projections | Allied Market Research Projections |
|---|---|---|
| Base Year (2024) Value | USD 6.97 billion [1] | USD 3.9 billion [2] |
| 2025 Market Size | USD 7.15 billion [1] | - |
| 2034 Market Size | USD 9.04 billion [1] | USD 6.4 billion [2] |
| Forecast Period | 2025-2034 [1] | 2025-2034 [2] |
| CAGR | 2.64% [1] | 5% [2] |

Despite varying figures, both sources indicate consistent market growth, particularly in pharmaceutical and biotechnology applications. The differing valuations can be attributed to variations in market definition, with some reports focusing on specific technique segments while others provide broader industry coverage.
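The growth rates in Table 1 can be cross-checked from the start and end values with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below makes two assumptions. The Towards Healthcare figures are taken to compound from 2025. The Allied Market Research figures are taken to compound from the 2024 base year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at a constant annual rate."""
    return start_value * (1.0 + rate) ** years


# Towards Healthcare: USD 7.15 bn (2025) -> USD 9.04 bn (2034), 9 compounding years
th_rate = cagr(7.15, 9.04, 2034 - 2025)
# Allied Market Research: USD 3.9 bn (2024 base) -> USD 6.4 bn (2034), 10 years (assumed)
amr_rate = cagr(3.9, 6.4, 2034 - 2024)

print(f"Towards Healthcare: {th_rate:.2%}")       # 2.64%
print(f"Allied Market Research: {amr_rate:.2%}")  # 5.08%
```

Under these assumptions, both reported CAGRs are consistent with their own start and end values. The Allied figures imply roughly 5.1% per year, which rounds to the stated 5%. The gap between the two sources therefore lies in market scope, not in arithmetic.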

Key Market Segments and Regional Analysis

Technology Segment Analysis

Different spectroscopic technologies contribute variably to market growth, each with distinct applications and adoption rates in pharmaceutical research and quality control.

Table 2: Market Share and Growth by Technology Segment

| Technology | Market Share (2024) | Growth Potential | Primary Pharmaceutical Applications |
|---|---|---|---|
| NMR Spectroscopy | Dominating share [1] | Steady growth | Drug discovery, metabolomics, structural biology [1] [2] |
| Mass Spectrometry | Significant segment | Fastest growth [1] | Proteomics, genomics, therapeutic drug monitoring [1] |
| Raman Spectroscopy | Growing segment | Fastest CAGR [2] | Molecular imaging, bioprocess monitoring [3] |
| IR Spectroscopy | Established segment | Stable growth | Raw material verification, quality control [4] |
| UV-Visible Spectroscopy | Mature segment | Moderate growth | Concentration analysis, dissolution testing [3] |

Regional Market Distribution

The adoption of molecular spectroscopy varies significantly across geographic regions, reflecting differences in healthcare infrastructure, research funding, and industrial development.

North America: Dominated the market in 2024, attributed to well-established healthcare infrastructure, significant R&D investments, and the presence of major pharmaceutical and biotechnology companies [1] [2]. Federal agencies in the U.S. allocated over $42 billion to research and development in 2022, with significant portions supporting advanced analytical instrumentation [2].

Asia-Pacific: Expected to witness the fastest growth during the forecast period, driven by rapid industrialization, expanding pharmaceutical R&D capabilities, and increasing government initiatives to strengthen scientific research infrastructure [1] [2]. China's 14th Five-Year Plan specifically emphasizes advanced instrumentation development to reduce import reliance [2].

Europe: Maintains a strong market position supported by a well-established academic and research ecosystem, stringent regulatory frameworks for food safety and environmental monitoring, and robust pharmaceutical manufacturing capabilities, particularly in Germany, Switzerland, and the UK [2].

Molecular Spectroscopy in Pharmaceutical Applications

Technical Applications in Drug Development

Molecular spectroscopy serves as a critical analytical tool throughout the pharmaceutical development lifecycle, providing essential data for decision-making and quality assurance.

Drug Discovery and Development: Nuclear Magnetic Resonance (NMR) spectroscopy is unparalleled for determining the structure of organic compounds and understanding molecular interactions [5]. It provides detailed information about molecular structure and conformational subtleties through the interaction of nuclear spin properties with an external magnetic field [3]. Infrared (IR) and Raman spectroscopy offer insights into functional groups and bond types, enabling researchers to characterize potential drug candidates efficiently [5].

Biopharmaceutical Characterization: The analysis of therapeutic proteins presents unique challenges that spectroscopic techniques are well-suited to address. Size exclusion chromatography coupled with inductively coupled plasma mass spectrometry (SEC-ICP-MS) has emerged as a valuable strategy for differentiating between ultra-trace levels of metals interacting with proteins and free metals in solution [3]. This is critical for understanding protein-metal interactions that can affect therapeutic efficacy, safety, and stability.

Process Analytical Technology (PAT): Spectroscopy forms a cornerstone of PAT initiatives, enabling real-time monitoring and control of pharmaceutical manufacturing processes [5]. Near-infrared (NIR) spectroscopy is particularly valuable for its ability to measure parameters like moisture content, particle size, and drug content without disrupting manufacturing processes [5]. Raman spectroscopy has been successfully implemented for real-time measurement of product aggregation and fragmentation during clinical bioprocessing, with hardware automation and machine learning enabling product quality measurements every 38 seconds [3].

Quality Control and Assurance: Ultraviolet-visible (UV-Vis) spectroscopy allows for fast, non-destructive analysis of Active Pharmaceutical Ingredient (API) concentration, purity, and formulation at various production stages [5]. The development of non-invasive in-vial fluorescence analysis provides innovative approaches to monitor protein denaturation without compromising sterility or product integrity [3].

Experimental Protocols and Methodologies

Real-Time Bioprocess Monitoring Using Raman Spectroscopy

Objective: To enable real-time monitoring of product aggregation and fragmentation during clinical bioprocessing using inline Raman spectroscopy with hardware automation and machine learning [3].

Materials and Equipment:

- Raman spectrometer with fiber optic probes

- Bioreactor system

- Automated sampling system

- Computational infrastructure for machine learning algorithms

- Reference analytical instruments (e.g., HPLC, SEC) for validation

Methodology:

- System Integration: Interface Raman spectrometer with bioreactor using immersion or flow-through probes suitable for sterile conditions.

- Calibration: Develop multivariate calibration models using representative samples spanning expected process variations. Employ machine learning algorithms to reduce calibration effort and enhance model robustness.

- Data Acquisition: Collect Raman spectra continuously at predetermined intervals (e.g., every 38 seconds). Implement automated quality control checks to identify and eliminate anomalous spectra.

- Spectral Processing: Process raw spectra using preprocessing algorithms including cosmic ray removal, baseline correction, and normalization.

- Multivariate Analysis: Apply partial least squares (PLS) regression or comparable chemometric techniques to correlate spectral features with critical quality attributes.

- Model Validation: Validate prediction accuracy against reference analytical methods throughout the process duration.

- Real-Time Monitoring: Use the spectroscopic predictions to monitor and control the bioprocess in real time, maintaining consistent product quality.
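The spectral-processing step above can be sketched with NumPy and SciPy. The source names only the operations, so the specific algorithms here are assumptions:

- Spikes are detected against a running median using a robust (MAD-based) threshold.
- The baseline is an iteratively clipped polynomial.
- Normalization is standard normal variate (SNV).

All parameter values and the synthetic spectrum are hypothetical.

```python
import numpy as np
from scipy.ndimage import median_filter


def remove_cosmic_rays(spectrum, kernel_size=7, z_threshold=6.0):
    """Replace narrow spikes (e.g. cosmic-ray hits) with a running-median estimate.

    A point is flagged when its residual from the running median exceeds
    z_threshold robust (MAD-based) standard deviations. Both parameters are
    assumptions and must be tuned so that genuine Raman bands are not flagged.
    """
    smoothed = median_filter(spectrum, size=kernel_size, mode="nearest")
    residual = spectrum - smoothed
    mad = np.median(np.abs(residual - np.median(residual)))
    robust_sd = max(1.4826 * mad, 1e-12)  # guard against a zero MAD
    spikes = np.abs(residual) / robust_sd > z_threshold
    cleaned = spectrum.copy()
    cleaned[spikes] = smoothed[spikes]
    return cleaned


def subtract_baseline(spectrum, x, degree=3, n_iter=100):
    """Iterative ("modified") polynomial baseline: fit a polynomial, clip the
    signal down to the fit so peaks stop pulling it upward, and refit."""
    xs = (x - x.mean()) / (x.max() - x.min())  # rescale x for a well-conditioned fit
    work = spectrum.copy()
    for _ in range(n_iter):
        fit = np.polyval(np.polyfit(xs, work, degree), xs)
        work = np.minimum(work, fit)
    baseline = np.polyval(np.polyfit(xs, work, degree), xs)
    return spectrum - baseline


def snv(spectrum):
    """Standard normal variate: centre the spectrum and scale it to unit variance."""
    return (spectrum - spectrum.mean()) / spectrum.std()


def preprocess(spectrum, x):
    """Cosmic-ray removal -> baseline correction -> normalisation, in that order."""
    return snv(subtract_baseline(remove_cosmic_rays(spectrum), x))


# Hypothetical spectrum: sloping background, one band at 1000 cm^-1, noise, one spike
rng = np.random.default_rng(0)
x = np.linspace(400, 1800, 700)                     # Raman shift, cm^-1
raw = 0.5 + 1e-3 * (x - 400)
raw += 5.0 * np.exp(-0.5 * ((x - 1000) / 20) ** 2)
raw += rng.normal(0, 0.02, x.size)
raw[100] += 50.0                                    # simulated cosmic-ray hit

cleaned = remove_cosmic_rays(raw)
processed = preprocess(raw, x)
```

Keeping each operation as a separate function mirrors the protocol's ordering. It also lets each step be validated or swapped on its own, for example replacing SNV with vector normalization.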

This Raman-based approach has been shown to monitor multiple cell culture components simultaneously. Q² values (predictive R², computed on data not used to fit the model) exceeded 0.8 for 27 different components [3].

Protein-Stability Assessment via FT-IR Spectroscopy with Hierarchical Cluster Analysis

Objective: To evaluate the stability of protein drugs under various storage conditions using Fourier-transform infrared spectroscopy (FT-IR) coupled with hierarchical cluster analysis (HCA) [3].

Materials and Equipment:

- FT-IR spectrometer with attenuated total reflection (ATR) accessory

- Protein drug samples

- Temperature-controlled storage facilities

- Python programming environment with scientific computing libraries (e.g., SciPy, scikit-learn)

Methodology:

- Sample Preparation: Dispense protein drug formulations into appropriate containers under controlled conditions.

- Storage Conditions: Store samples under varied temperature conditions relevant to intended storage and potential stress scenarios.

- Spectral Collection: Collect FT-IR spectra weekly across an appropriate wavenumber range (e.g., 4000-400 cm⁻¹) at sufficient resolution. Keep instrument parameters constant throughout the study.

- Spectral Preprocessing: Process spectra using second derivatives and vector normalization to enhance protein-specific spectral features, particularly in the Amide I region (1600-1700 cm⁻¹), which reports on secondary structure.

- Hierarchical Cluster Analysis: Implement HCA in Python to assess similarity of secondary protein structures across different storage conditions and timepoints.

- Data Interpretation: Interpret clustering patterns to evaluate structural stability, identify significant changes, and determine optimal storage conditions.

This methodology has revealed that protein drug stability was maintained across temperature conditions, with closer similarity among samples than anticipated, suggesting FT-IR coupled with HCA as a valuable tool for future drug stability studies [3].
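The preprocessing and clustering steps of this protocol can be sketched in Python with SciPy, as the materials list suggests. The following choices are assumptions for illustration, not parameters from [3]:

- the Savitzky-Golay window;
- Ward linkage;
- the two-cluster cut;
- the simulated spectra, in which a band near 1625 cm⁻¹ is added to mimic a β-sheet-like change under stress.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.signal import savgol_filter


def amide_i_features(wavenumbers, spectra, window=11, polyorder=3,
                     region=(1600.0, 1700.0)):
    """Savitzky-Golay second derivative, cropped to Amide I, vector-normalised.

    The derivative is taken on the full spectrum before cropping to avoid
    edge effects; its absolute scale is irrelevant after normalisation.
    """
    d2 = savgol_filter(spectra, window_length=window, polyorder=polyorder,
                       deriv=2, axis=-1)
    mask = (wavenumbers >= region[0]) & (wavenumbers <= region[1])
    feats = d2[:, mask]
    return feats / np.linalg.norm(feats, axis=1, keepdims=True)


def cluster_spectra(features, n_clusters=2):
    """Ward hierarchical clustering; returns the linkage matrix and flat labels."""
    Z = linkage(features, method="ward", metric="euclidean")
    return Z, fcluster(Z, t=n_clusters, criterion="maxclust")


def band(wn, centre, width, height):
    """Gaussian absorbance band (hypothetical line shape)."""
    return height * np.exp(-0.5 * ((wn - centre) / width) ** 2)


# Hypothetical study: 4 spectra of a "native" sample, 4 of a "stressed" one
rng = np.random.default_rng(2)
wn = np.linspace(1500, 1800, 301)                  # 1 cm^-1 spacing
native = band(wn, 1654, 12, 1.0) + band(wn, 1550, 15, 0.6)
stressed = (band(wn, 1654, 12, 0.6) + band(wn, 1625, 8, 0.5)
            + band(wn, 1550, 15, 0.6))
spectra = np.vstack([s + rng.normal(0, 0.002, wn.size)
                     for s in [native] * 4 + [stressed] * 4])

Z, labels = cluster_spectra(amide_i_features(wn, spectra))
print(labels)  # one cluster label per spectrum
```

The linkage matrix `Z` can be passed to `scipy.cluster.hierarchy.dendrogram` to draw the tree used to interpret similarity across storage conditions and timepoints.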

Emerging Trends and Innovations

The molecular spectroscopy landscape is evolving rapidly, with several technological advancements shaping its future applications in pharmaceutical research and development.

Integration of Artificial Intelligence and Machine Learning: AI and ML are transforming spectral data interpretation, enabling more accurate predictions and faster analysis. These technologies support real-time decision-making, predictive maintenance, and data-driven process optimization, making spectroscopy systems smarter and more effective [6]. The intersection of spectroscopy and data science represents the next generation of enabling technologies for pharmaceutical process development [7].

Miniaturization and Portable Devices: Demand is growing for smaller, portable, and mobile spectroscopic instruments that support on-site testing and field-based applications [6]. Portable NIR and Raman spectrometers are increasingly deployed for point-of-care diagnostics and therapeutic drug monitoring [7] [8].

Advanced Raman Techniques: Surface-Enhanced Raman Spectroscopy (SERS) and Tip-Enhanced Raman Spectroscopy (TERS) are expanding the capabilities of conventional Raman spectroscopy, enabling non-destructive, real-time analysis of protein dynamics and aggregation mechanisms with significantly enhanced sensitivity [3]. These techniques provide insights into molecular events with potential applications in diverse fields, including biopharmaceuticals and point-of-care devices.
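The gain in sensitivity from SERS is usually quantified as an enhancement factor (EF). One common substrate-level definition normalizes each signal by the number of molecules probed. It is given here as a general reference, not as a figure from [3]:

```latex
\mathrm{EF} = \frac{I_{\mathrm{SERS}} / N_{\mathrm{SERS}}}{I_{\mathrm{RS}} / N_{\mathrm{RS}}}
```

The terms are defined as follows:

- I_SERS and I_RS are the intensities of the same band measured with and without the enhancing substrate.
- N_SERS and N_RS are the numbers of molecules contributing to each signal.

Estimating N_SERS, the molecules actually adsorbed in the probed area, is often the largest source of uncertainty. This is one reason reproducible enhancement factors matter when selecting substrates.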

Hyphenated Techniques: The combination of separation techniques with spectroscopic detection continues to advance pharmaceutical analysis. Size exclusion chromatography coupled with inductively coupled plasma mass spectrometry (SEC-ICP-MS) has been developed to speciate and quantify target metals in cell culture media, aiding in quality control, contaminant identification, and assessment of media stability and cell metal uptake [3].

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of molecular spectroscopy in pharmaceutical research requires specific reagents, reference materials, and specialized equipment.

Table 3: Essential Research Reagents and Materials for Pharmaceutical Spectroscopy

| Item | Function/Application | Technical Specifications |
|---|---|---|
| Cell Culture Media | Matrix for biopharmaceutical production; metal speciation studies [3] | Defined formulations; characterized metal content (Mn, Fe, Co, Cu, Zn) |
| Therapeutic Proteins | Analytical targets for structural and interaction studies [3] | Monoclonal antibodies, recombinant proteins; high purity (>95%) |
| Size Exclusion Columns | Separation of protein-metal complexes in SEC-ICP-MS [3] | Appropriate molecular weight range; biocompatible materials |
| Reference Standards | Instrument calibration and method validation [5] | USP/PhEur compliant; certified purity; traceable documentation |
| ATR Crystals | FT-IR sampling for protein secondary structure analysis [3] | Diamond, ZnSe, or Ge crystals; appropriate refractive index |
| SERS Substrates | Signal enhancement in Surface-Enhanced Raman Spectroscopy [3] | Gold/silver nanoparticles; reproducible enhancement factors |
| NMR Solvents | Sample preparation for structural analysis [8] | Deuterated solvents (D₂O, CDCl₃, DMSO-d₆); high isotopic purity |
| Process Probes | Inline monitoring in bioreactors [3] [5] | Steam-sterilizable materials; compatible with PAT frameworks |


The molecular spectroscopy market demonstrates steady growth potential, with projections indicating an increase from USD 7.15 billion in 2025 to USD 9.04 billion by 2034. This expansion is largely driven by pharmaceutical and biotechnology applications, where spectroscopic techniques provide critical analytical capabilities throughout the drug development lifecycle. The continued adoption of Process Analytical Technology (PAT) initiatives, advancements in spectroscopic instrumentation, and integration of artificial intelligence and machine learning for data analysis represent significant growth opportunities. Despite challenges related to instrument costs and operational complexity, the essential role of molecular spectroscopy in ensuring drug quality, safety, and efficacy ensures its continued importance in pharmaceutical research and development. The ongoing miniaturization of devices and development of portable instruments will further expand applications in point-of-care testing and field-based analysis, solidifying molecular spectroscopy's position as a cornerstone technology in modern pharmaceutical science.

The pharmaceutical industry is undergoing a profound transformation, driven by three interconnected forces: the acceleration of research and development (R&D), the rise of personalized medicine, and the critical advancement of biologics characterization. For researchers, scientists, and drug development professionals, understanding these drivers is essential for navigating the current landscape. These trends are not only reshaping therapeutic development but also placing new demands on analytical technologies, including advanced spectroscopy, which provides the foundational data for quality control and product understanding. This whitepaper examines the current state, technological enablers, and future directions of these key market drivers, providing a technical guide for industry professionals.

The Imperative for R&D Acceleration

Facing unprecedented pressures, the pharmaceutical industry is prioritizing R&D acceleration to improve productivity and sustainability.

Current Challenges in Pharmaceutical R&D

The industry contends with a paradox of high activity but declining productivity. A record 23,000 drug candidates are currently in development, with over 10,000 in clinical stages, supported by annual R&D spending exceeding $300 billion [9]. Despite this investment, R&D productivity has not kept pace. The success rate for Phase 1 drugs plummeted to just 6.7% in 2024, down from 10% a decade ago, and the internal rate of return for R&D investment has fallen to 4.1%—well below the cost of capital [9]. Furthermore, the industry is approaching the largest patent cliff in history, with an estimated $350 billion in revenue at risk between 2025 and 2029 [9]. Shareholder returns have also lagged, with a PwC pharma index returning 7.6% from 2018-2024 compared to over 15% for the S&P 500 [10].

Strategies for Accelerating R&D

To counter these challenges, leading organizations are deploying several key strategies:

AI and Data-Driven Development: Approximately 85% of biopharma executives plan to invest in data, digital, and AI for R&D in 2025 [11]. These technologies deliver tangible benefits; Amgen has doubled clinical trial enrollment speed using machine learning, and Sanofi collaborates with OpenAI to reduce patient recruitment timelines "from months to minutes" [11].

Smarter Trial Designs: Companies are moving away from exploratory trials toward critical experiments with clear success/failure criteria [9]. There is also a strong focus on inclusive trials through community-based and decentralized models, with companies like BMS reporting that over 60% of its research sites are in highly diverse communities [11].

Portfolio Focus and Strategic Exits: Companies are making bold decisions to exit markets, functions, and categories where they lack competitive advantages [10]. Roche, for example, announced its intention to trim targeted disease areas to 11, with particular focus on just five [11].

Table 1: Global Pharmaceutical R&D Metrics and Trends

| Metric | Historical Benchmark | Current Status (2024-2025) | Data Source |
|---|---|---|---|
| Phase 1 Success Rate | 10% (a decade ago) | 6.7% (2024) | Evaluate [9] |
| R&D Internal Rate of Return | Not specified | 4.1% (below cost of capital) | Evaluate [9] |
| Annual R&D Spending | Not specified | >$300 Billion | Evaluate [9] |
| Companies Investing in AI for R&D | Not specified | 85% of biopharma executives | ZS [11] |
| Revenue at Risk from Patent Cliff | Not specified | $350 Billion (2025-2029) | Evaluate [9] |

The Rise of Personalized Medicine

Personalized medicine represents a paradigm shift from the traditional "one-size-fits-all" approach to healthcare, tailoring medical decisions and treatments to individual patient characteristics.

Market Size and Growth Projections

The personalized medicine market demonstrates robust growth across multiple forecasts, though specific valuations vary by methodology and segmentation.

Table 2: Personalized Medicine Market Size and Growth Projections

| Market Scope | 2024/2025 Base Value | 2030/2034 Projected Value | CAGR | Source |
|---|---|---|---|---|
| Global Market | $531.7 Billion (2024) | $869.9 Billion (2030) | 8.5% | ResearchAndMarkets.com [12] |
| Global Market | $614.2 Billion (2024) | $1,315.4 Billion (2034) | 8.1% | Precedence Research [13] |
| U.S. Market | $56.4 Billion (2024) | $252.9 Billion (2034) | 17.32% | Custom Market Insights [14] |
| Global Market | $89.2 Billion (2025) | $169.5 Billion (2032) | 9.6% | Coherent Market Insights [15] |

Key Application Areas and Technologies

Oncology Dominance: Oncology leads personalized medicine applications, accounting for approximately 40% of the market share [12] [13] [15]. This leadership is driven by molecular understanding of cancer biology and advancements in targeted therapies like immunotherapies and companion diagnostics.

Personalized Therapeutics and Nutrition: The personalized medicine therapeutics segment is anticipated to be the fastest-growing product category [13], while personalized nutrition and wellness currently holds the largest market share at 45.9% [12].

Technology Enablers: Pharmacogenomics is the largest technology segment (30.2% share) [12]. Artificial intelligence and machine learning represent the fastest-growing technology category, projected to expand at a CAGR of 11% from 2024-2030 [12]. AI enables analysis of vast genetic, clinical, and lifestyle datasets to identify disease risks and predict treatment responses [15].

Regional Landscape

North America, particularly the U.S., dominates the personalized medicine market, holding a 44-45% share [13] [15]. This leadership is supported by advanced healthcare infrastructure, substantial R&D investment, and supportive regulatory frameworks. However, the Asia-Pacific region is poised for the fastest growth, projected at a CAGR of 11.4% [12], driven by large patient populations, increasing healthcare investment, and government initiatives.

Biologics Characterization: Ensuring Quality and Consistency

As biopharmaceuticals dominate the therapeutic landscape, rigorous characterization becomes paramount for ensuring product quality, safety, and efficacy.

The Characterization Imperative

Biologics characterization is the comprehensive analysis of biological drug products to determine their molecular and product attributes [16]. Unlike small-molecule drugs, biologics are produced in living systems and exhibit inherent molecular heterogeneity due to factors like post-translational modifications (e.g., glycosylation), charge variants, and higher-order structure differences [16] [17]. The global biopharmaceutical market was valued at approximately $452 billion in 2024 and is projected to reach $740 billion by 2030, with monoclonal antibodies (mAbs) dominating at 61% of total revenue [17].

Analytical Workflow for Biologics Characterization

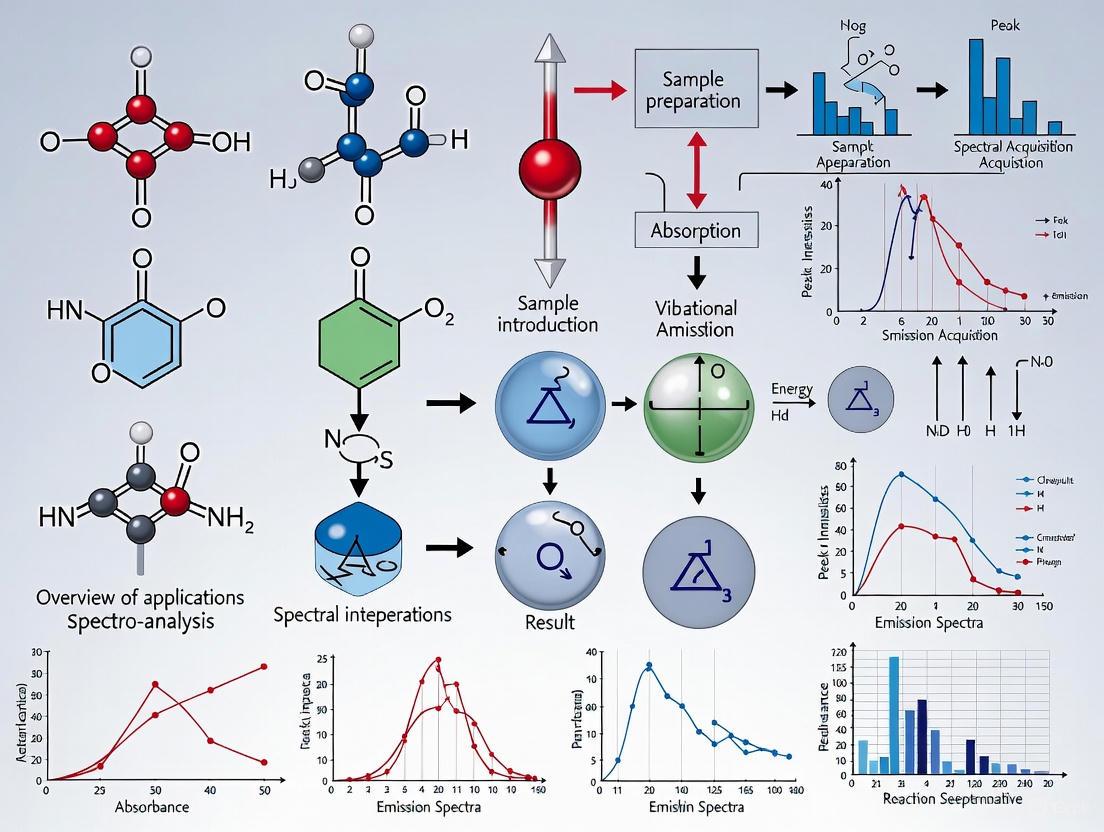

A comprehensive characterization program leverages orthogonal analytical techniques. The following diagram illustrates the integrated workflow for structural and functional analysis of biologics.

Diagram 1: Integrated Workflow for Biologics Characterization

Regulatory Expectations and Phase-Appropriate Strategy

Regulatory agencies require extensive characterization data, guided by standards like ICH Q6B, to confirm identity, purity, potency, and safety [16] [17]. A phase-appropriate strategy is crucial:

- Early Development (IND): Focus on safety and proof of concept using platform methods; method qualification is not yet required [18].

- Late Development (BLA): Requires a "complete package" with deep characterization using qualified methods, demanding 100% amino acid sequence coverage and impurity characterization down to the 0.1% level [18].
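Sequence coverage is the fraction of residues covered by peptides identified in peptide mapping. The minimal sketch below rests on two assumptions. First, it uses a simplified in-silico digest: trypsin cleaves after K or R except before P, and Lys-C cleaves after K. Second, it uses a hypothetical sequence and a hypothetical list of observed peptides. In practice, peptides are identified by matching LC-MS/MS data, which is not modeled here.

```python
import re

TRYPSIN = r"(?<=[KR])(?!P)"  # cleave after K or R, unless the next residue is P
LYS_C = r"(?<=K)"            # cleave after K


def digest(sequence, rule=TRYPSIN, missed_cleavages=0):
    """In-silico digest: split at every cleavage site, then join up to
    `missed_cleavages` adjacent fragments to model incomplete digestion."""
    fragments = [f for f in re.split(rule, sequence) if f]
    peptides = []
    for i in range(len(fragments)):
        for j in range(i, min(i + missed_cleavages + 1, len(fragments))):
            peptides.append("".join(fragments[i:j + 1]))
    return peptides


def sequence_coverage(sequence, observed_peptides):
    """Fraction of residues covered by at least one observed peptide."""
    covered = [False] * len(sequence)
    for peptide in observed_peptides:
        start = sequence.find(peptide)
        while start != -1:
            for k in range(start, start + len(peptide)):
                covered[k] = True
            start = sequence.find(peptide, start + 1)
    return sum(covered) / len(sequence)


seq = "AGKLLRPVVKEEMR"  # hypothetical short sequence
print(digest(seq))                               # ['AGK', 'LLRPVVK', 'EEMR']
print(sequence_coverage(seq, ["AGK", "EEMR"]))   # 0.5
```

Combining digests from enzymes with different specificities, such as trypsin and Lys-C, is one way to close gaps that a single enzyme leaves uncovered.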

Failure to qualify characterization methods and understand their performance is a major risk that can lead to significant project delays [18]. Demonstrating batch-to-batch consistency through rigorous analytical comparability is essential, especially after manufacturing changes [16].

The Scientist's Toolkit: Essential Research Reagents and Materials

The following table details key reagents and materials essential for conducting biologics characterization, supporting the experimental workflows described in this whitepaper.

Table 3: Essential Research Reagents for Biologics Characterization

| Reagent/Material | Function/Application | Technical Specification Notes |
|---|---|---|
| Reference Standard | Serves as the benchmark for identity, purity, and potency assays. Critical for comparability studies. | Should be well-characterized and representative of the final commercial process [18]. |
| Cell Lines (e.g., CHO) | Expression systems for biologic production. Source of product heterogeneity. | Choice impacts post-translational modifications (e.g., glycosylation) [16] [17]. |
| Enzymes (Trypsin, Lys-C) | Proteolytic digestion for peptide mapping and LC-MS analysis. | Required for determining amino acid sequence and identifying post-translational modifications [16]. |
| LC-MS Grade Solvents | Mobile phase for chromatographic separation and mass spectrometric detection. | High purity is essential to minimize background noise and ion suppression. |
| Surface Plasmon Resonance (SPR) Chip | Immobilization surface for binding partners in kinetic analysis. | Enables determination of association/dissociation rate constants (kon, koff) [16]. |
| Cell-Based Assay Reagents | Components for functional bioassays (e.g., ADCC, CDC). | Includes effector cells, reporter systems, and cytokines to measure biological potency [16]. |


Interconnected Drivers and Future Outlook

The three market drivers of R&D acceleration, personalized medicine, and biologics characterization are deeply intertwined. The push for R&D acceleration necessitates more efficient characterization technologies. The rise of personalized medicine, particularly advanced modalities like cell and gene therapies, produces increasingly complex biologics that demand more sophisticated characterization strategies [13] [17]. In turn, robust biologics characterization provides the foundational data required to ensure the safety and efficacy of these novel, targeted therapies, thereby enabling their successful development and regulatory approval.

Looking forward, the industry will be shaped by several key trends. The integration of AI and machine learning will continue to advance across all three areas, from drug discovery and patient stratification to analytical data analysis [11] [17]. Multi-attribute methods (MAM) and other advanced analytical platforms will increase efficiency in characterization [16]. Furthermore, the industry must navigate an evolving regulatory landscape and ongoing pricing pressures [10] [11], making the efficient convergence of these three drivers more critical than ever for delivering innovative therapies to patients.

The International Council for Harmonisation (ICH) guidelines Q2(R2) and Q14 represent a significant evolution in the pharmaceutical analytical landscape. These guidelines, officially adopted in November 2023, foster a more robust, science- and risk-based approach to analytical procedure development and validation [19]. Concurrently, regulatory initiatives like the FDA's Emerging Technology Program (ETP) are actively encouraging the adoption of innovative manufacturing and quality control strategies, including Real-Time Release Testing (RTRT) [20]. RTRT is an advanced approach that evaluates and ensures product quality based on process data, rather than relying solely on end-product testing [21]. This whitepaper explores this converging regulatory and technological landscape, detailing how modern spectroscopic tools are enabling compliance and transforming quality assurance into an integrated, data-driven activity for researchers and drug development professionals.

The paradigm for ensuring pharmaceutical product quality is shifting from a traditional, reactive model (Quality by Test) to a proactive, knowledge-based framework (Quality by Design, QbD) [22]. This evolution is codified in the latest ICH guidelines and supported by regulatory agencies worldwide to enhance product understanding, control, and ultimately, patient safety.

ICH Q2(R2): Validation of Analytical Procedures

ICH Q2(R2) provides updated guidance on validating analytical procedures for drug substances and products. It expands upon the previous Q2(R1) to include more detailed consideration for validating a broader range of analytical techniques, including those used for biological and biotechnological products [23]. The guideline outlines the key validation characteristics that must be demonstrated, such as accuracy, precision, specificity, and linearity, ensuring that analytical methods are fit for their intended purpose throughout their lifecycle [23] [19].
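Several of these characteristics reduce to simple statistics. The sketch below assumes common textbook definitions:

- accuracy as mean percent recovery of known amounts;
- precision as percent relative standard deviation (%RSD) of replicates;
- linearity as a least-squares fit of response against concentration.

The function names and data are hypothetical. Acceptance criteria are not shown because they must be set for the procedure's intended purpose. Specificity has no single-number equivalent and is omitted.

```python
import numpy as np


def percent_recovery(measured, nominal):
    """Accuracy expressed as mean % recovery of known (e.g. spiked) amounts."""
    measured = np.asarray(measured, dtype=float)
    nominal = np.asarray(nominal, dtype=float)
    return 100.0 * np.mean(measured / nominal)


def percent_rsd(replicates):
    """Precision (e.g. repeatability) as % relative standard deviation (n-1)."""
    replicates = np.asarray(replicates, dtype=float)
    return 100.0 * replicates.std(ddof=1) / replicates.mean()


def linear_response(concentration, response):
    """Ordinary least-squares fit of response vs concentration; returns the
    slope, intercept and coefficient of determination R^2."""
    x = np.asarray(concentration, dtype=float)
    y = np.asarray(response, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    fitted = slope * x + intercept
    r2 = 1.0 - np.sum((y - fitted) ** 2) / np.sum((y - y.mean()) ** 2)
    return slope, intercept, r2


# Hypothetical data (not from the guideline or any real method)
print(round(percent_recovery([99.1, 100.4, 98.7], [100.0, 100.0, 100.0]), 2))  # 99.4
print(round(percent_rsd([100.2, 99.8, 100.5, 99.6, 100.1, 99.9]), 2))
slope, intercept, r2 = linear_response([50, 75, 100, 125, 150],
                                       [0.251, 0.374, 0.502, 0.626, 0.749])
print(f"slope={slope:.5f}, intercept={intercept:.4f}, R^2={r2:.5f}")
```

A high R² alone does not establish an adequate response model. Residual plots and the intended reportable range are normally reviewed alongside it.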

ICH Q14: Analytical Procedure Development

ICH Q14 introduces, for the first time, comprehensive harmonized guidance on the science- and risk-based development of analytical procedures [24] [19]. It encourages a more systematic approach to understanding the procedure's performance, establishing an Analytical Procedure Control Strategy, and managing the procedure over its entire lifecycle. This includes provisions for multivariate models and real-time release testing, directly facilitating the adoption of modern PAT tools [24].

The Synergy between Q2(R2), Q14, and RTRT

The combination of Q14's structured development principles and Q2(R2)'s modernized validation requirements provides a clear and supportive regulatory pathway for implementing advanced quality assurance strategies like RTRT. RTRT is defined as "the ability to evaluate and ensure the quality of in-process and/or final product based on process data" [21]. This typically includes a combination of Process Analytical Technology (PAT) tools, material attributes, and process controls [21] [22]. By building a deep understanding of the process and product through Q14, and validating the associated analytical methods per Q2(R2), manufacturers can justify the release of a batch without performing traditional end-product testing [25].

The Role of Spectroscopy in Enabling RTRT

Spectroscopic techniques are cornerstone PAT tools in RTRT strategies due to their ability to provide rapid, non-destructive, and quantitative analysis of materials in real-time or near-real-time.

Key Spectroscopic Techniques for PAT/RTRT

- Raman Spectroscopy: A vibrational spectroscopy technique well-suited for PAT applications, especially where information about molecular composition and variance is required. It is non-destructive, can probe through glass packaging, and is highly specific, making it ideal for raw material identification, monitoring cell culture media, and final product testing of biologics [26].

- Near-Infrared (NIR) Spectroscopy: Another vibrational technique widely used in pharmaceutical unit operations. It is commonly applied to monitor processes such as blending, granulation, and coating by measuring critical quality attributes like blend uniformity and moisture content [21] [22].
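One widely used way to turn a stream of in-line NIR spectra into a blend-uniformity signal is the moving block standard deviation (MBSD). The method works in three steps:

1. Compute the per-wavelength standard deviation over each block of consecutive spectra.
2. Average that standard deviation across wavelengths.
3. Treat the blend as homogeneous once the value stays below a threshold.

MBSD is not described in the source. The sketch below is illustrative only, and its block size, threshold, run length, and simulated spectra are all hypothetical.

```python
import numpy as np


def moving_block_sd(spectra, block_size=5):
    """MBSD: for each block of `block_size` consecutive spectra, take the
    standard deviation at every wavelength and average it over wavelengths."""
    spectra = np.asarray(spectra, dtype=float)
    n_blocks = spectra.shape[0] - block_size + 1
    return np.array([spectra[i:i + block_size].std(axis=0, ddof=1).mean()
                     for i in range(n_blocks)])


def blend_endpoint(mbsd, threshold, consecutive=3):
    """Index of the first block of a run of `consecutive` blocks whose MBSD is
    below `threshold`; None if no such run occurs."""
    run = 0
    for i, value in enumerate(mbsd):
        run = run + 1 if value < threshold else 0
        if run == consecutive:
            return i - consecutive + 1
    return None


# Simulated in-line NIR spectra: blend heterogeneity decays with each revolution
rng = np.random.default_rng(3)
n_revolutions, n_wavelengths = 40, 100
mean_spectrum = np.sin(np.linspace(0, 3, n_wavelengths))
spectra = np.array([
    mean_spectrum
    + np.exp(-t / 6) * rng.normal(0, 0.05, n_wavelengths)   # heterogeneity
    + rng.normal(0, 0.002, n_wavelengths)                     # instrument noise
    for t in range(n_revolutions)
])

mbsd = moving_block_sd(spectra)
end = blend_endpoint(mbsd, threshold=0.005)  # hypothetical acceptance threshold
print(f"blend homogeneous from block {end}")
```

Requiring several consecutive blocks below the threshold, rather than a single one, guards against declaring the endpoint on a transient dip.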

A Practical Workflow for Spectroscopic RTRT

The following diagram illustrates a generalized workflow for developing and implementing a spectroscopic method within an RTRT framework, aligning with ICH Q14 and Q2(R2) principles.

The Scientist's Toolkit: Essential Reagents and Materials for Spectroscopic RTRT

Table 1: Key research reagents and materials used in developing and validating spectroscopic RTRT methods.

| Item | Function in RTRT Development | Example Application |
|---|---|---|
| Reference Standards | To build and validate chemometric models for identity and quantitative analysis. | High-purity drug substance for building a PLS model to predict API concentration [26]. |
| Process Samples | To capture natural process variability and ensure model robustness (as per Q14). | Samples collected from various stages of blending to model and monitor blend uniformity [22]. |
| Chemometric Software | For multivariate data analysis, model development (e.g., PLS, PCA), and method validation. | TQ Analyst Software or equivalent for discriminant analysis and quantitative calibration [26]. |
| PAT Instrumentation | The core hardware for in-process data acquisition (e.g., Raman, NIR spectrometers). | A Raman spectrometer with a fiber optic probe for non-contact analysis in bioreactors or through glass vials [26]. |
| Validation Samples | A statistically sound set of samples, independent of the calibration set, for assessing method performance per Q2(R2). | Samples with known concentrations of preservatives to determine accuracy and precision of a quantitative method [26]. |

Experimental Protocols and Methodologies

Detailed Protocol: Final Product Identity and Preservative Concentration Using Raman Spectroscopy

The following protocol is adapted from a feasibility study conducted by Thermo Fisher Scientific for a multinational drug manufacturer, which successfully differentiated 15 biologic drug products and quantified two preservatives [26].

1. Objective: To replace a compendial final product identity test (e.g., peptide mapping) and an HPLC test for preservative concentration with a single, non-destructive Raman spectroscopic method.

2. Materials and Equipment:

- Spectrometer: Thermo Scientific DXR3 SmartRaman Spectrometer with universal sampling plate and 180-degree sampling module.

- Laser Source: 532 nm laser operating at 40 mW power.

- Software: Thermo Scientific TQ Analyst Software for chemometric analysis.

- Samples: Drug products in their native glass vials (3 ml and 10 ml). Concentrations: Drug product (0.5 - 6.0 mg/ml), Preservative A (0.85 - 5.0 mg/ml), Preservative B (0.42 - 3.91 mg/ml).

3. Method Development (Aligning with ICH Q14):

- Spectral Acquisition: Acquire spectra with a 1-minute scanning time. Collect ten spectra per sample to account for variability from glass vials and scattering effects.

- Data Analysis:

- For Identity Testing: Use Discriminant Analysis based on Principal Component Analysis (PCA). The software computes the Mahalanobis distance to classify unknown samples against known product classes. All classes in the feasibility study were correctly identified with no false positives [26].

- For Quantitative Analysis: Use Partial Least Squares (PLS) regression to build a model relating the spectral data to the known concentrations of the preservatives.
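The PCA-based discriminant step for identity testing can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the TQ Analyst algorithm. It assumes the spectra are already preprocessed. It fits one PCA on the pooled training spectra, models each product class by the mean and covariance of its scores, and rejects a sample whose smallest Mahalanobis distance exceeds a threshold. The function names, the number of components, and the threshold are hypothetical.

```python
import numpy as np


def fit_pca(X, n_components):
    """Return (mean, loadings) for PCA on the rows of X, computed via SVD."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_scores(X, pca):
    mean, loadings = pca
    return (X - mean) @ loadings.T


def fit_classes(spectra_by_class, n_components=3):
    """Fit a pooled PCA, then store each class's score mean and inverse covariance."""
    pooled = np.vstack(list(spectra_by_class.values()))
    pca = fit_pca(pooled, n_components)
    class_stats = {}
    for name, X in spectra_by_class.items():
        T = pca_scores(X, pca)
        cov = np.cov(T, rowvar=False)
        class_stats[name] = (T.mean(axis=0), np.linalg.pinv(cov))
    return pca, class_stats


def classify(spectrum, pca, class_stats, max_distance):
    """Return (best class or None, distances), using Mahalanobis distance in score space."""
    t = pca_scores(spectrum[np.newaxis, :], pca)[0]
    distances = {}
    for name, (mu, cov_inv) in class_stats.items():
        d = t - mu
        distances[name] = float(np.sqrt(d @ cov_inv @ d))
    best = min(distances, key=distances.get)
    # A sample far from every known class is reported as unidentified (None).
    return (best if distances[best] <= max_distance else None), distances
```

In practice, ten replicate spectra per product, as in the protocol, provide the per-class covariance estimate. The rejection threshold would be set during validation so that no false positives occur.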

4. Method Validation (Aligning with ICH Q2(R2)):

- Demonstrate method performance characteristics as required by Q2(R2).

- Specificity: Confirm the ability to unequivocally assess the analyte in the presence of other components. This was shown by detecting significant spectral differences between the drug product and its placebo (see Figure 2 in [26]).

- Linearity & Range: Evaluate over the specified range of preservative concentrations. The PLS model for Preservative A showed a correlation coefficient of 0.998, indicating excellent linearity [26].

- Accuracy: Assessed by comparing the predicted concentration from the Raman model against the known reference value. Metrics like Root Mean Square Error of Calibration (RMSEC) and Root Mean Square Error of Prediction (RMSEP) are used. For the cited study, the RMSEC for Preservative A was 0.0425 mg/ml [26].
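The PLS calibration and the figures of merit above can be sketched as follows. This is a minimal single-response PLS (PLS1) fit using the NIPALS algorithm in NumPy. It assumes one analyte is modeled at a time. It is illustrative rather than the algorithm inside any commercial package. In practice, the number of latent variables would be chosen by cross-validation. RMSEC is computed on the calibration samples and RMSEP on an independent validation set. The RMSE here is a plain root-mean-square; some packages apply a degrees-of-freedom correction to RMSEC.

```python
import numpy as np


def pls1_fit(X, y, n_components):
    """Fit PLS1 by NIPALS; return (x_mean, y_mean, regression_vector)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean        # residuals, deflated after each component
    W, P, q = [], [], []
    for _ in range(n_components):
        w = Xr.T @ yr
        w /= np.linalg.norm(w)             # weight vector
        t = Xr @ w                         # scores
        tt = t @ t
        p = Xr.T @ t / tt                  # X loadings
        qa = (yr @ t) / tt                 # y loading
        Xr = Xr - np.outer(t, p)
        yr = yr - qa * t
        W.append(w)
        P.append(p)
        q.append(qa)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    b = W @ np.linalg.solve(P.T @ W, q)    # collapse to a single regression vector
    return x_mean, y_mean, b


def pls1_predict(model, X):
    x_mean, y_mean, b = model
    return (np.asarray(X, dtype=float) - x_mean) @ b + y_mean


def rmse(predicted, reference):
    """Root-mean-square error: RMSEC on calibration data, RMSEP on validation data."""
    err = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


def correlation(predicted, reference):
    """Pearson correlation between predicted and reference values (linearity check)."""
    return float(np.corrcoef(predicted, reference)[0, 1])
```

With noise-free synthetic spectra built from two pure components, two latent variables reproduce the concentrations almost exactly. Real vial spectra contain scattering and glass contributions and need more careful model selection.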

The Regulatory Push and Implementation Support

Regulatory agencies are not merely passive observers but are actively facilitating the adoption of these advanced approaches through dedicated programs.

Regulatory Support Initiatives

- FDA's Emerging Technology Program (ETP): Established to promote and facilitate the adoption of innovative pharmaceutical manufacturing and quality control. The ETP allows companies to engage with the FDA's Emerging Technology Team (ETT) for pre-submission guidance on novel technologies, including PAT and RTRT, thereby de-risking the regulatory submission process [21] [20]. The program has already "graduated" its first technology, Continuous Direct Compression (CDC), indicating that FDA reviewers are now sufficiently experienced with this technology to assess it through the standard review process [20].

- EMA's Notification Model: The European Medicines Agency has adopted a "do and then tell" model for certain PAT applications, which avoids manufacturing stoppages while waiting for regulatory approvals, contrasting with the traditional "prior approval" model [21].

Experience Bands for Regulatory Submissions

The FDA's ETP has defined "experience bands" for technologies like CDC, which serve as a useful reference for the maturity of supporting technologies. The table below summarizes key criteria for CDC, a process that heavily relies on PAT and RTRT.

Table 2: Selected Experience Bands for Continuous Direct Compression (CDC) as defined by the FDA's Emerging Technology Program [20].

| Category | Criteria for Standard Assessment Pathway |
|---|---|
| Drug Product | Immediate release; single API or fixed-dose combination; BCS Class 1, 2, 3, or 4. |
| Advanced In-process Controls | Include ratio control for loss-in-weight (LIW) feeders and quantitative spectroscopic measurement for blend uniformity. |
| Material Diversion | Includes residence time distribution (RTD) based diversion strategies. |
| Real Time Release (RTR) | For assay and content uniformity (CU) using spectroscopic measurement & tablet weight; dissolution via compendial test or RTR based on PLS models. |

The confluence of updated regulatory guidelines (ICH Q14 and Q2(R2)) and proactive regulatory programs (like the ETP) has created a uniquely supportive environment for the pharmaceutical industry to modernize its quality control systems. Real-Time Release Testing (RTRT) represents the pinnacle of this evolution, shifting quality assurance upstream and making it a continuous, data-driven activity. Spectroscopic techniques, particularly Raman and NIR, are proving to be enabling technologies for RTRT, supported by robust chemometric models. For researchers and drug development professionals, mastering the principles of Q14 for analytical development, Q2(R2) for validation, and the practical application of spectroscopy is no longer a forward-looking concept but a present-day imperative for achieving efficient, robust, and compliant pharmaceutical manufacturing.

Spectroscopic techniques form the analytical backbone of the modern pharmaceutical industry. They provide critical data that ensure the safety, efficacy, and quality of therapeutic products from discovery through manufacturing. These methods enable scientists to elucidate molecular structures, verify identity, assess purity, and quantify drug substances with high precision. Within the highly regulated pharmaceutical environment, techniques including Ultraviolet-Visible (UV-Vis), Infrared (IR), Nuclear Magnetic Resonance (NMR), Mass Spectrometry (MS), and Raman spectroscopy serve as indispensable tools for characterizing both small molecule drugs and complex biologics [27] [28]. Most of these techniques are non-destructive, with mass spectrometry the main exception. They also combine accuracy with the ability to deliver rapid or real-time data. Together, these properties support compliance with stringent Good Manufacturing Practice (GMP) and other regulatory standards, making the techniques fundamental to pharmaceutical research, development, and quality control [27] [28]. This whitepaper examines the fundamental principles, specific applications, and experimental protocols of these core spectroscopic techniques within the context of pharmaceutical development.

Comparative Analysis of Core Spectroscopic Techniques

The following table summarizes the fundamental characteristics, strengths, and primary applications of the five core spectroscopic techniques in pharmaceutical analysis.

Table 1: Comparison of Core Spectroscopic Techniques in Pharmaceutical Analysis

| Technique | Fundamental Principle | Key Pharmaceutical Applications | Key Advantages | Key Limitations |
|---|---|---|---|---|
| UV-Vis Spectroscopy [28] | Measures electronic transitions from ground state to excited state (190-800 nm). | Content uniformity testing, dissolution profiling, concentration determination of APIs, impurity monitoring [28]. | Rapid, simple, inexpensive, high-throughput, excellent for quantification [28]. | Requires chromophores, limited structural information, susceptible to matrix interference. |
| IR Spectroscopy [28] | Measures vibrational transitions of molecular bonds (functional group "fingerprinting"). | Raw material identification, polymorph screening, contaminant detection, formulation verification [27] [28]. | Excellent for qualitative analysis, minimal sample preparation (especially ATR-FTIR), non-destructive [28]. | Difficulty analyzing complex mixtures, interference from water, extensive sample preparation for some sampling techniques [29]. |
| NMR Spectroscopy [30] [28] | Measures absorption of radiofrequency radiation by atomic nuclei in a magnetic field. | Structural elucidation, stereochemical verification, impurity profiling, quantitative NMR (qNMR) for potency [30] [28]. | Provides definitive atomic-level structural detail, non-destructive, quantitative without analyte-specific reference standards (qNMR uses an internal standard), excellent for stereochemistry [30]. | Lower sensitivity than MS, requires deuterated solvents, high instrument cost, complex data interpretation. |
| Mass Spectrometry (MS) [31] | Measures the mass-to-charge ratio (m/z) of gas-phase ions. | Biomolecule characterization, metabolite identification, quantification of APIs and impurities, high-throughput screening [31]. | Extremely high sensitivity and specificity, provides molecular weight, can analyze complex mixtures, hyphenation with LC/GC. | Destructive technique, requires standards for quantification, complex data analysis, high instrument cost. |
| Raman Spectroscopy [32] | Measures inelastic scattering of light from molecular vibrations. | Raw material verification, polymorph identification, real-time process monitoring, counterfeit drug detection [32]. | Minimal sample preparation, non-destructive, can analyze aqueous solutions, suitable for through-packaging testing [32]. | Weak signal susceptible to fluorescence interference; may require high laser power that can damage samples. |

Fundamental Roles and Experimental Protocols

Ultraviolet-Visible (UV-Vis) Spectroscopy

UV-Vis spectroscopy is a fundamental quantitative analytical technique in pharmaceutical quality control laboratories due to its simplicity, speed, and cost-effectiveness [28].

- Fundamental Role: Its primary role is the quantification of active pharmaceutical ingredients (APIs) in various matrices, such as tablets, capsules, and liquid formulations. It is routinely applied in content uniformity testing, dissolution profile testing, and impurity monitoring based on absorbance changes [28].

- Experimental Protocol for API Quantification in a Tablet:

- Standard Solution Preparation: Accurately weigh and dissolve a certified reference standard of the API in a suitable solvent (e.g., water, methanol, buffer) to create a stock solution. Prepare a series of standard solutions of known concentrations via serial dilution.

- Sample Solution Preparation: Weigh and finely powder not less than 20 tablets. Accurately weigh a portion of the powder equivalent to the claimed API content. Dissolve the powder in the solvent using sonication and shaking, then filter or centrifuge to obtain a clear supernatant.

- Instrumental Analysis: Using a UV-Vis spectrophotometer, zero the instrument with the pure solvent (blank) in a quartz cuvette. Glass cuvettes are suitable only for measurements in the visible region. Measure the absorbance of each standard and sample solution at the predetermined wavelength of maximum absorption (λmax).

- Quantification: Construct a calibration curve by plotting the absorbance of the standard solutions versus their concentrations. Use the linear regression equation from this curve to calculate the concentration of the API in the sample solution [28].
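The calibration and back-calculation steps above can be sketched in plain Python. This sketch assumes Beer-Lambert linearity over the standard range and an ordinary least-squares line. The dilution scheme (stock volume, dilution factor, weighed powder mass, and average tablet mass) is hypothetical and must be replaced by the values of the actual method.

```python
from statistics import mean


def fit_calibration(concentrations, absorbances):
    """Ordinary least-squares line A = slope * c + intercept, plus r^2."""
    mc, ma = mean(concentrations), mean(absorbances)
    sxx = sum((c - mc) ** 2 for c in concentrations)
    sxy = sum((c - mc) * (a - ma) for c, a in zip(concentrations, absorbances))
    slope = sxy / sxx
    intercept = ma - slope * mc
    ss_res = sum((a - (slope * c + intercept)) ** 2
                 for c, a in zip(concentrations, absorbances))
    ss_tot = sum((a - ma) ** 2 for a in absorbances)
    return slope, intercept, 1.0 - ss_res / ss_tot


def api_mg_per_tablet(sample_absorbance, slope, intercept, dilution_factor,
                      stock_volume_ml, powder_mass_mg, avg_tablet_mass_mg):
    """Back-calculate API content per tablet (mg) from the measured absorbance."""
    conc_ug_per_ml = (sample_absorbance - intercept) / slope   # in the measured solution
    api_in_powder_mg = conc_ug_per_ml * dilution_factor * stock_volume_ml / 1000.0
    # Scale from the weighed portion of powder to one average tablet.
    return api_in_powder_mg * avg_tablet_mass_mg / powder_mass_mg
```

For example, standards at 2-10 µg/mL, a hypothetical 100 mL stock diluted tenfold, and 200 mg of powder from tablets averaging 400 mg give an absorbance of 0.302 on a line with slope 0.05 and intercept 0.002. That absorbance corresponds to 6 µg/mL in the cuvette and about 12 mg of API per tablet.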

Infrared (IR) Spectroscopy

IR spectroscopy is the workhorse for qualitative analysis and identity testing in the pharmaceutical industry, providing a unique molecular "fingerprint" [28] [29].

- Fundamental Role: It is predominantly used for raw material identification (ID) before they are released for manufacturing. Regulatory standards mandate that every raw material, including APIs and excipients, must undergo 100% ID testing, for which FTIR with an ATR (Attenuated Total Reflectance) accessory is extensively used due to its minimal sample preparation needs [28] [29].

- Experimental Protocol for Raw Material Identity Testing:

- Background Collection: Acquire a background spectrum of the clean, empty ATR crystal.

- Sample Preparation (ATR Method): Place a small amount of the solid raw material directly onto the ATR crystal. For liquids, a drop is sufficient. Apply firm, consistent pressure to ensure good contact between the sample and the crystal.

- Spectral Acquisition: Acquire the IR spectrum of the sample over a standard wavenumber range (e.g., 4000-400 cm⁻¹).

- Data Interpretation and Comparison: Compare the acquired spectrum of the test material against a reference spectrum from a certified standard or a validated spectral library. The identity is confirmed if the sample spectrum exhibits all significant absorption bands (peak positions and relative intensities) present in the reference spectrum [28].
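The band-by-band comparison can be sketched in plain Python. This is a simplified illustration, not a pharmacopoeial algorithm. It picks local maxima above a hypothetical relative-height cut-off and normalizes intensities to the strongest band. It then requires every reference band to appear in the sample within a hypothetical position tolerance (±4 cm⁻¹) and a hypothetical relative-intensity tolerance (±0.2). Commercial library software often relies on correlation-based match scores instead, and real tolerances come from the validated method.

```python
def pick_bands(wavenumbers, absorbance, min_rel_height=0.1):
    """Return (position, relative intensity) for local maxima above a height cut-off."""
    top = max(absorbance)
    bands = []
    for i in range(1, len(absorbance) - 1):
        a = absorbance[i]
        if a >= absorbance[i - 1] and a > absorbance[i + 1] and a >= min_rel_height * top:
            bands.append((wavenumbers[i], a / top))
    return bands


def identity_check(sample_bands, reference_bands, pos_tol=4.0, rel_tol=0.2):
    """Pass only if every reference band has a matching sample band."""
    missing = []
    for ref_pos, ref_rel in reference_bands:
        hit = any(abs(pos - ref_pos) <= pos_tol and abs(rel - ref_rel) <= rel_tol
                  for pos, rel in sample_bands)
        if not hit:
            missing.append(ref_pos)
    return not missing, missing
```

Extra bands in the sample do not cause a failure in this sketch, matching the "all significant bands present" criterion. A stricter method could also flag unexplained sample bands as potential contaminants.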

Nuclear Magnetic Resonance (NMR) Spectroscopy

NMR spectroscopy is the most powerful technique for unambiguous structural determination, providing detailed information about the carbon-hydrogen framework of a molecule [30] [33].

- Fundamental Role: Its primary role is the definitive structural elucidation and stereochemical verification of complex drug molecules, impurities, degradants, and natural products. It is critical for confirming the identity of a synthesized API and for characterizing novel compounds during drug discovery [30] [33].

- Experimental Protocol for Small Molecule Structure Elucidation:

- Sample Preparation: Dissolve 2-10 mg of the pure, dry compound in 0.5-0.7 mL of a high-purity deuterated solvent (e.g., CDCl₃, DMSO-d₆). Filter the solution into a high-quality, clean NMR tube to remove any particulate matter.

- Data Acquisition:

- Run a ¹H NMR spectrum to identify the number and type of hydrogen environments, integration (number of H), and spin-spin coupling (neighboring H).

- Run a ¹³C NMR spectrum (often with DEPT editing) to determine the number and type of carbon environments (CH₃, CH₂, CH, or quaternary C).

- For complex structures, acquire 2D NMR spectra:

- COSY: Identifies protons that are coupled to each other.

- HSQC: Identifies direct ¹H-¹³C connectivity.

- HMBC: Identifies long-range ¹H-¹³C couplings over 2-3 bonds, crucial for establishing connectivity between molecular fragments.

- Data Interpretation: Analyze all spectral data (chemical shift, coupling constants, integration, 2D correlations) to piece together the complete molecular structure, including relative stereochemistry if NOESY/ROESY experiments are performed [30].

Mass Spectrometry (MS)

Mass spectrometry provides exceptional sensitivity for detecting and quantifying analytes based on their molecular weight and fragmentation pattern, making it vital for bioanalysis and impurity profiling [31].

- Fundamental Role: MS, particularly when coupled with liquid chromatography (LC-MS), is essential for the identification and quantification of drugs, metabolites, and impurities in complex biological matrices (e.g., plasma, urine). It is also indispensable for the characterization of large biomolecules like proteins and antibodies [31].

- Experimental Protocol for LC-MS/MS Bioanalysis of a Drug in Plasma:

- Sample Preparation: Precipitate proteins from plasma samples (e.g., with acetonitrile) containing the drug and an internal standard. After vortexing and centrifuging, collect the supernatant for analysis.

- Chromatographic Separation: Inject the supernatant onto a reverse-phase UHPLC column to separate the analyte from the matrix components and potential interferences.

- Mass Spectrometric Detection: The eluent from the LC is ionized (typically using Electrospray Ionization - ESI) and introduced into the tandem mass spectrometer.

- The first quadrupole (Q1) selects the precursor ion of the drug.

- The second quadrupole (Q2) acts as a collision cell to fragment the precursor ion.

- The third quadrupole (Q3) selects a specific product ion.

- Quantification: The intensity of the selected reaction monitoring (SRM) transition for the drug is compared to that of the internal standard. A calibration curve is constructed from spiked plasma standards to calculate the concentration of the drug in the unknown samples with high accuracy and precision [31].
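The internal-standard quantification step can be sketched as follows. The sketch assumes peak areas have already been integrated and calibrates the analyte/internal-standard area ratio against nominal concentration. It uses 1/x² weighting, a common choice in regulated bioanalysis that is an assumption here rather than something stated in the source. All numbers in the example are hypothetical.

```python
def weighted_line(x, y, w):
    """Weighted least-squares fit y = slope * x + intercept."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_is_calibration(conc_ng_ml, analyte_areas, is_areas):
    """Calibrate the analyte/IS peak-area ratio against concentration with 1/x^2 weights."""
    ratios = [a / i for a, i in zip(analyte_areas, is_areas)]
    weights = [1.0 / c ** 2 for c in conc_ng_ml]
    return weighted_line(conc_ng_ml, ratios, weights)


def back_calculate(analyte_area, is_area, slope, intercept):
    """Concentration of an unknown from its analyte/IS area ratio."""
    return (analyte_area / is_area - intercept) / slope
```

Taking the ratio to the internal standard compensates for run-to-run variation in recovery and ionization. The 1/x² weights keep the low-concentration standards from being dominated by the high ones.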

Raman Spectroscopy

Raman spectroscopy complements IR spectroscopy and is highly valuable for non-destructive, in-situ analysis, often requiring no sample preparation [32].

- Fundamental Role: It is widely used for raw material verification and polymorph identification. Its ability to analyze samples through packaging (glass, plastic) makes it ideal for rapid, on-site inspection and for preventing cross-contamination in quality control laboratories [32].

- Experimental Protocol for Handheld Raman Verification of a Raw Material:

- Method Setup: Load the validated reference spectrum for the specific raw material into the handheld Raman device's library.

- Sample Analysis: Point the handheld spectrometer's laser probe at the sample. For materials in clear packaging, the analysis can often be performed through the container. Trigger the measurement to acquire the Raman spectrum in seconds.

- Automated Verification: The instrument's software automatically compares the collected spectrum to the reference library using a pre-defined algorithm and match threshold (e.g., Hit Quality Index - HQI). The result is a simple "PASS/FAIL" output, confirming the material's identity without requiring expert spectral interpretation for routine checks [32].
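The PASS/FAIL logic can be sketched in plain Python. HQI definitions differ between vendors. This sketch assumes one common form: the squared Pearson correlation between sample and reference spectra, scaled to 0-100. Scoring negative correlations as 0 is a safeguard chosen for this sketch, and the 95 threshold is a placeholder.

```python
def hit_quality_index(sample, reference):
    """HQI (0-100): squared Pearson correlation of two spectra on the same axis."""
    n = len(sample)
    if n != len(reference) or n < 2:
        raise ValueError("spectra must share the same axis and have at least 2 points")
    ms, mr = sum(sample) / n, sum(reference) / n
    s = [v - ms for v in sample]
    r = [v - mr for v in reference]
    sr = sum(a * b for a, b in zip(s, r))
    if sr <= 0:
        return 0.0        # anti-correlated or uncorrelated spectra never match
    return 100.0 * sr * sr / (sum(a * a for a in s) * sum(b * b for b in r))


def verify_material(sample, reference, threshold=95.0):
    """Return ("PASS" or "FAIL", score) against a predefined match threshold."""
    score = hit_quality_index(sample, reference)
    return ("PASS" if score >= threshold else "FAIL"), score
```

Because the score is a correlation, it is insensitive to overall intensity and constant offsets. This matters when sampling through containers of varying thickness.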

Workflow Visualization: Spectroscopic Techniques in Drug Development

The following diagram illustrates how the core spectroscopic techniques are integrated across the various stages of pharmaceutical drug development, from discovery to quality control.

Essential Research Reagent Solutions

The successful application of spectroscopic techniques relies on a suite of specialized reagents and materials. The following table details key items essential for pharmaceutical analysis.

Table 2: Key Research Reagents and Materials for Spectroscopic Analysis

| Reagent/Material | Function | Primary Technique |
|---|---|---|
| Certified Reference Standards [28] | Provides a known substance with certified purity and composition for instrument calibration, method validation, and quantification. | UV-Vis, MS, NMR, IR, Raman |
| Deuterated Solvents (e.g., CDCl₃, DMSO-d₆) [28] | Dissolves the sample without contributing large interfering ¹H signals and supplies the deuterium signal used for field-frequency lock and shimming. | NMR |
| ATR Crystals (Diamond, ZnSe) [28] | Serves as the internal reflection element in ATR accessories, enabling direct analysis of solids and liquids with minimal preparation. | IR |
| Potassium Bromide (KBr) [28] | Used to create transparent pellets for transmission-mode IR analysis of solid samples. | IR |
| High-Purity Solvents (HPLC/UV Grade) [28] | Minimizes background interference and UV absorbance for sensitive quantitative analysis and chromatography. | UV-Vis, LC-MS |
| Quartz Cuvettes [28] | Provides optical cells transparent in the UV-Vis range for liquid sample analysis. | UV-Vis |
| Internal Standards (for qNMR) [30] | A compound of certified purity, accurately weighed into the sample, with a distinct NMR signal used for precise quantification of analytes. | NMR |
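In qNMR, the purity of an analyte is commonly calculated from its integral ratio to an internal standard of certified purity. The relation below uses the following symbols:

- I is the integrated signal area.
- N is the number of nuclei giving rise to that signal.
- M is the molar mass.
- m is the weighed mass.
- P is the purity.

Subscripts a and std denote the analyte and the internal standard.

```latex
P_{a} = \frac{I_{a}}{I_{std}} \cdot \frac{N_{std}}{N_{a}} \cdot \frac{M_{a}}{M_{std}} \cdot \frac{m_{std}}{m_{a}} \cdot P_{std}
```

The analyte signal therefore needs no analyte-specific calibration curve. Accuracy instead rests on the weighings, the certified purity of the standard, and complete relaxation between scans.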

The core spectroscopic techniques—UV-Vis, IR, NMR, MS, and Raman—collectively provide a comprehensive analytical toolkit that is fundamental to every stage of the pharmaceutical lifecycle. UV-Vis spectroscopy offers robust quantification, IR and Raman spectroscopy deliver rapid molecular fingerprinting, MS provides ultra-sensitive detection and identification, and NMR affords unparalleled structural detail. The integration of these techniques, supported by appropriate reagents and standardized protocols, enables pharmaceutical scientists to ensure the identity, strength, quality, purity, and stability of drug substances and products. As the industry advances with more complex drug modalities like biologics and personalized medicines, the evolution and synergistic application of these spectroscopic methods will continue to be a cornerstone of pharmaceutical innovation and regulatory compliance.

The pharmaceutical industry is experiencing a paradigm shift in analytical spectroscopy, moving from centralized laboratories to decentralized, on-site analysis. Portable and handheld spectroscopic devices are revolutionizing drug development and quality control by providing immediate, actionable data at the point of need. This transition from bringing samples to the spectrometer to bringing the spectrometer to the sample is transforming traditional workflows, enabling real-time decision-making, and accelerating pharmaceutical development cycles [34]. The global portable spectrometer market, valued at approximately USD 2.2 billion in 2024, is projected to reach approximately USD 4.47 billion by 2032, reflecting a compound annual growth rate (CAGR) of around 9.3% [35]. This growth is fueled by technological advancements that have dramatically increased instrument capabilities while reducing their size and weight. These advancements are driven by developments in consumer electronics, computing power, and ongoing R&D innovation [34].

Market Dynamics and Growth Trajectory

Quantitative Market Landscape

The expansion of portable spectroscopy is reflected in several key market segments. The table below summarizes the current market size and growth projections for portable spectrometers and the broader molecular spectroscopy market, in which pharmaceutical applications play a significant role.

Table 1: Portable and Molecular Spectroscopy Market Overview

| Market Segment | 2024/2025 Base Value | 2032/2034 Projected Value | CAGR | Key Drivers |
|---|---|---|---|---|
| Portable Spectrometer Market [35] | USD 2,202.30 Million (2024) | USD 4,472.52 Million (2032) | 9.30% | Demand for on-site analysis, pharmaceutical & chemical industry growth |
| Portable Handheld Spectrometer Market [36] | ~USD 1.5 Billion (2025) | - | 6.5% (through 2033) | Quality control needs, regulatory compliance, technology advancements |
| Molecular Spectroscopy Market [37] | USD 6.97 Billion (2024) | USD 9.04 Billion (2034) | 2.64% | Pharmaceutical R&D, diagnostic applications, personalized medicine |
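The CAGR figures in the table can be checked with the standard compound-growth relation, CAGR = (end / start)^(1 / years) - 1. The small helper below applies it to the table rows.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years
```

Applied to the portable spectrometer row (2024 to 2032, eight years), the relation gives about 9.26%. The molecular spectroscopy row (2024 to 2034, ten years) gives about 2.63%. Both are close to the published rates. The small differences presumably reflect the reports' own base-year conventions or rounding, which the source does not state.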

Technology and End-User Segmentation

The portable spectrometer market demonstrates distinct segmentation patterns by technology type and end-user application. Mass spectrometers currently lead in market share within the portable spectrometer segment, while Nuclear Magnetic Resonance (NMR) spectroscopy dominates the broader molecular spectroscopy market [35] [37].

Table 2: Portable Spectrometer Market Segmentation by Type and End-User (2024)

| Segment Category | Sub-Segment | Market Position (2024) | Key Applications and Drivers |
|---|---|---|---|
| By Type [35] | Mass Spectrometer | 39.27% share | High sensitivity, faster analysis, isotope differentiation |
| By Type [35] | Optical Spectrometer | Fastest growing | Quantitative metal/alloy analysis in metallurgy |
| By End-User [35] | Pharmaceutical | Highest share | Drug identity/purity testing, crystalline structure analysis |
| By End-User [35] | Chemical | Fastest growing | Purity assessment, chemical characteristics determination |
| By Technology [37] | NMR Spectroscopy | Dominating share | Drug discovery, metabolomics, non-destructive analysis |

The pharmaceutical sector represents the largest application segment for portable spectrometers, driven by increasing pharmaceutical production and the need for rapid analysis of drug identity, purity, and crystalline structures [35]. The chemical industry segment is expected to witness the fastest growth, propelled by investments in chemical manufacturing infrastructure and rising production of industrial chemicals [35].

Core Technologies and Pharmaceutical Applications

Key Spectroscopic Techniques in Pharma

Portable spectroscopic devices leverage multiple technologies, each with distinct advantages for pharmaceutical applications:

Raman Spectroscopy: Utilizes laser light to measure molecular vibrations, providing chemical fingerprints for material identification. Portable Raman systems are particularly valuable for raw material verification, monitoring chemical reactions, and counterfeit drug detection [34] [3]. Recent advancements include the use of 1064 nm excitation to reduce fluorescence interference and spatially offset Raman spectroscopy (SORS) for analyzing samples through packaging [34].

Near-Infrared (NIR) Spectroscopy: Measures overtone and combination molecular vibrations, ideal for quantitative analysis of active pharmaceutical ingredients (APIs), moisture content, and blend uniformity in solid dosage forms. NIR's non-destructive nature and minimal sample preparation make it well-suited for Process Analytical Technology (PAT) initiatives [36] [5].

X-ray Fluorescence (XRF): Provides elemental analysis capabilities critical for detecting catalyst residues, heavy metal impurities, and verifying metal-based APIs. Handheld XRF has seen significant adoption with cumulative shipments exceeding 100,000 units [36] [34].

Ultraviolet-Visible (UV-Vis) Spectroscopy: Used for concentration verification of APIs and excipients in solution. Recent portable systems enable real-time monitoring of protein chromatography purification processes [3] [38].

Emerging Technological Capabilities

The capabilities of portable spectrometers have expanded significantly, with several emerging trends enhancing their pharmaceutical utility:

Hybrid Instrumentation: Combined technologies such as Raman-NIR, Raman-XRF, and FT-IR systems provide complementary data from a single device, increasing analytical confidence and application range [34].

Enhanced Data Analytics: Integration of machine learning and artificial intelligence enables more sophisticated data processing, including identification of complex mixtures and detection of subtle spectral changes indicative of product quality issues [36] [3].

Miniaturization and Connectivity: Shrinking component sizes have enabled truly handheld devices without sacrificing performance. Cloud connectivity and mobile app integration facilitate real-time data sharing and collaborative analysis [36].

Experimental Protocols and Implementation

Raw Material Identification Protocol

Objective: To verify the identity and purity of incoming raw materials using portable Raman spectroscopy.

Materials:

- Portable Raman spectrometer with 785 nm or 1064 nm laser

- Reference spectral library of approved materials

- Sample presentation accessory (if applicable)

- Safety equipment (laser safety glasses)

Procedure:

- Instrument Calibration: Perform wavelength and intensity calibration according to manufacturer specifications using built-in standards.

- Spectral Acquisition:

- Place a representative sample of the material in the instrument's sampling area.

- Apply the laser to the sample and collect spectra with appropriate integration time (typically 1-10 seconds).

- Average multiple acquisitions to improve signal-to-noise ratio.

- Spectral Matching:

- Process spectra using preprocessing algorithms (e.g., baseline correction, normalization).

- Compare acquired spectrum against reference spectral library using correlation algorithms.

- Document match quality with statistical confidence metrics.

- Result Interpretation:

- Match values exceeding predefined thresholds (e.g., >95%) confirm material identity.

- Spectral mismatches trigger failure mode analysis and laboratory confirmation testing.
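The acquisition, preprocessing, and matching steps can be sketched in NumPy under stated assumptions. The sketch first averages replicate scans. It then removes a fluorescence-like background with an iterative modified-polynomial fit; this is one common baseline method, chosen here as an assumption, and instrument software may use another. Finally, it normalizes to unit length and ranks library entries by Pearson correlation. The 0.95 threshold mirrors the example above. The function names and the polynomial order are hypothetical.

```python
import numpy as np


def average_scans(scans):
    """Mean of replicate scans; random noise falls roughly as 1/sqrt(number of scans)."""
    return np.mean(np.asarray(scans, dtype=float), axis=0)


def modpoly_baseline(shift, intensity, order=3, n_iter=100):
    """Iterative polynomial baseline: refit, then clip the working data down to the fit."""
    x = (shift - shift.mean()) / (shift.max() - shift.min())   # rescale for conditioning
    work = np.asarray(intensity, dtype=float).copy()
    for _ in range(n_iter):                                    # n_iter must be >= 1
        fit = np.polyval(np.polyfit(x, work, order), x)
        work = np.minimum(work, fit)
    return fit


def preprocess(shift, intensity, order=3):
    """Baseline-correct, then vector-normalize a spectrum."""
    corrected = intensity - modpoly_baseline(shift, intensity, order)
    return corrected / np.linalg.norm(corrected)


def search_library(shift, sample, library, threshold=0.95):
    """Return (best_name, best_score, passed, all_scores) using Pearson correlation."""
    s = preprocess(shift, sample)
    scores = {name: float(np.corrcoef(s, preprocess(shift, ref))[0, 1])
              for name, ref in library.items()}
    best = max(scores, key=scores.get)
    return best, scores[best], scores[best] >= threshold, scores
```

Reporting every library score, not only the best one, supports the documentation step. A second candidate scoring close to the best match is itself a reason for laboratory confirmation.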

Validation Parameters: Specificity, precision, robustness, and detection limits should be established during method validation [5] [3].

Real-Time Bioprocess Monitoring Protocol

Objective: To monitor critical quality attributes during pharmaceutical manufacturing using inline NIR spectroscopy.

Materials:

- Fiber-optic coupled portable NIR spectrometer

- Inline or at-line immersion probe

- Multivariate calibration model for target analytes

- Data acquisition and analysis software

Procedure:

- System Configuration:

- Install NIR probe directly into bioreactor or flow cell for continuous monitoring.

- Establish communication between spectrometer and process control system.

- Calibration Model Application:

- Load validated partial least squares (PLS) calibration models for target analytes (e.g., glucose, lactate, product titer).

- Verify model performance with standard samples before process initiation.

- Real-Time Monitoring:

- Collect spectra at predetermined intervals (e.g., every 30-60 seconds).

- Apply calibration models to convert spectral data to concentration values.

- Monitor trends and trigger alerts when parameters deviate from established ranges.

- Data Integration:

- Feed analytical results to process control system for potential parameter adjustments.

- Document all data for batch record completeness and regulatory compliance.
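The model-application and alerting steps can be sketched in plain Python. A linear PLS model ultimately predicts a concentration as an intercept plus the dot product of a preprocessed spectrum with a regression vector. The sketch therefore stores only those numbers and an acceptable range per analyte. The class name, the analyte, the coefficients, and the limits (e.g., in g/L) are hypothetical placeholders. A real deployment would also check model diagnostics, such as spectral residuals, before trusting a prediction.

```python
from dataclasses import dataclass


@dataclass
class LinearCalibration:
    """Collapsed linear (e.g., PLS) model with an acceptable operating range."""
    analyte: str
    intercept: float
    coefficients: list
    low: float
    high: float

    def predict(self, spectrum):
        if len(spectrum) != len(self.coefficients):
            raise ValueError("spectrum length does not match the model")
        return self.intercept + sum(c * s for c, s in zip(self.coefficients, spectrum))


def monitor(timed_spectra, models):
    """Apply every model to each (time_s, spectrum) pair; return out-of-range alerts."""
    alerts = []
    for time_s, spectrum in timed_spectra:
        for model in models:
            value = model.predict(spectrum)
            if not model.low <= value <= model.high:
                alerts.append((time_s, model.analyte, value))
    return alerts
```

In a plant, the returned alerts would be passed to the process control system. All predictions, not only the alerts, would be archived for the batch record.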

Application Example: A 2024 study demonstrated inline Raman spectroscopy for real-time monitoring of product aggregation and fragmentation during clinical bioprocessing, achieving measurements every 38 seconds through automation and machine learning integration [3].

Diagram 1: Portable spectrometer implementation involves a structured, phased approach from planning through operation, with continuous improvement feeding back into requirement definition.

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of portable spectroscopy in pharmaceutical settings requires specific reagents and materials to ensure analytical accuracy and reproducibility.

Table 3: Essential Research Reagent Solutions for Portable Spectroscopy

| Item | Function | Application Examples |
|---|---|---|
| Validation Standards | Instrument performance verification and method validation | Polystyrene standards for wavelength verification in Raman; NIST-traceable reference materials for quantitative calibration |
| Spectral Libraries | Reference databases for material identification | Custom libraries of APIs, excipients, and raw materials; Commercial databases for general chemical identification |
| Sample Presentation Accessories | Standardized sampling interfaces | Quartz cuvettes for UV-Vis; Diamond ATR crystals for FT-IR; Vial holders for liquid samples |
| Calibration Transfer Sets | Maintaining consistency across multiple instruments | Well-characterized samples representing expected analyte ranges for model transfer between laboratory and portable devices |
| Cleaning Solvents | Preventing cross-contamination between samples | HPLC-grade solvents appropriate for material types analyzed (e.g., methanol, acetonitrile) |
| Quality Control Materials | Ongoing method performance verification | Stable, homogeneous materials with established reference values for daily system suitability testing |

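
The Calibration Transfer Sets entry in Table 3 refers to moving a calibration model between a laboratory instrument and a portable one. Direct standardization (DS) is one common chemometric way to do this. DS learns a linear map from the portable spectra of the transfer set to the laboratory spectra of the same samples. The sketch below is a minimal illustration of DS on synthetic data, not the procedure of any cited study. It assumes both instruments report spectra on the same wavenumber grid.

```python
import numpy as np


def fit_direct_standardization(x_portable, x_lab, rcond=1e-6):
    """Direct standardization: find F so that x_portable @ F approximates x_lab.

    Rows are transfer-set samples measured on BOTH instruments; columns are
    spectral channels, assumed to be on a shared wavenumber grid.
    """
    return np.linalg.pinv(x_portable, rcond=rcond) @ x_lab


def apply_direct_standardization(x_portable_new, transform):
    """Map new portable spectra into the laboratory instrument's response space,
    so an existing laboratory calibration model can be applied to them."""
    return x_portable_new @ transform


# Synthetic example: the portable unit has a channel-dependent gain and slight
# cross-talk between neighbouring channels (an assumed instrument difference).
rng = np.random.default_rng(0)
n_channels = 20
x_lab = rng.normal(size=(40, n_channels))                      # transfer set, lab
response = np.diag(rng.uniform(0.8, 1.2, n_channels)) + 0.05 * np.eye(n_channels, k=1)
x_portable = x_lab @ response                                   # same samples, portable
F = fit_direct_standardization(x_portable, x_lab)

new_lab = rng.normal(size=(5, n_channels))
corrected = apply_direct_standardization(new_lab @ response, F)
print(np.abs(corrected - new_lab).max())  # near zero for this noise-free toy case
```

Real transfer sets usually have fewer samples than spectral channels, which leaves DS underdetermined. In that situation, piecewise direct standardization (PDS), which fits a small local window for each channel, is often preferred.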

Advantages and Implementation Challenges

Driving Forces and Benefits

The adoption of portable spectroscopic devices in pharmaceuticals is driven by several significant advantages:

Real-Time Decision Making: Immediate analytical results at the point of need enable rapid decisions in manufacturing, quality control, and research settings, reducing delays associated with laboratory sample submission [34].

Enhanced PAT Implementation: Portable devices serve as ideal tools for Process Analytical Technology, supporting real-time release testing and continuous manufacturing through inline, online, or at-line analysis [5].

Cost Efficiency: Reduced sample transport, faster analysis cycles, and decreased laboratory workload contribute to significant operational cost savings [39].

Non-Destructive Analysis: Most spectroscopic techniques are non-destructive, allowing valuable samples to be preserved for additional testing or reference purposes [5].

Regulatory Compliance: Portable methods support compliance with evolving regulatory expectations for quality-by-design and real-time product quality assessment [5].

Implementation Challenges and Limitations

Despite the compelling benefits, several challenges must be addressed for successful implementation:

Initial Cost Barriers: While decreasing, high-performance portable spectrometers still represent significant capital investment, particularly for advanced technologies like portable mass spectrometers [36].

Technical Limitations: Portable devices may have reduced sensitivity and resolution compared to laboratory instruments, potentially limiting applications for trace analysis or complex matrices [34].

Model Development Requirements: Quantitative applications require robust calibration models developed with extensive sample sets, representing significant upfront method development investment [36].

Data Management Complexity: Distributed analytical systems generate substantial data volumes requiring sophisticated data management, integration, and integrity strategies [36].

Regulatory Acceptance: While increasing, regulatory acceptance of portable methods for definitive quality decisions may require extensive validation and comparison with established laboratory methods [35].

Diagram 2: A decision tree logic guides the appropriate use of field-portable analysis versus traditional laboratory testing based on analytical requirements and method readiness.

The future of portable spectroscopy in pharmaceuticals points toward increasingly sophisticated, connected, and intelligent analytical systems. Several emerging trends will shape further adoption:

AI-Enhanced Analytics: Integration of artificial intelligence and machine learning will enable more sophisticated spectral interpretation, anomaly detection, and predictive analytics, potentially identifying subtle quality issues before they impact product quality [7] [3].

Multi-Technology Platforms: The development of hybrid instruments combining complementary techniques (e.g., Raman-LIBS, Raman-XRF) will expand application ranges and analytical confidence [34].

Miniaturization Advancements: Continuing component miniaturization will enable even smaller form factors without compromising performance, potentially leading to smartphone-integrated spectroscopic capabilities [34].

Expanded Biopharma Applications: As portable technologies mature, applications will expand from small molecules to complex biologics, including monitoring of protein structure, aggregation, and post-translational modifications [3].

Standardization and Regulatory Alignment: Increasing industry acceptance will drive standardization of methods, validation approaches, and regulatory alignment, supporting broader implementation for quality decisions [36].

The pharmaceutical industry's adoption of portable and handheld spectroscopic devices represents a fundamental shift in analytical philosophy, moving from centralized laboratory testing to distributed, real-time quality assessment. This transition supports the industry's evolution toward continuous manufacturing, real-time release, and more agile development processes. While implementation challenges remain, the compelling benefits of immediate analytical results, enhanced process understanding, and accelerated development timelines ensure that portable spectroscopy will play an increasingly central role in pharmaceutical research, development, and manufacturing. As technologies continue to advance and validation frameworks mature, field-based analysis is poised to become an integral component of the modern pharmaceutical quality ecosystem.

Advanced Applications: From Drug Discovery to Quality Control with Next-Gen Spectroscopy

Raman spectroscopy, a non-destructive analytical technique known for its high sensitivity and molecular specificity, has become a cornerstone of pharmaceutical analysis. Its integration with artificial intelligence (AI), particularly deep learning, is now revolutionizing the field, enabling breakthroughs in drug development, quality control, and clinical diagnostics [40]. This synergy enhances the accuracy, efficiency, and application scope of Raman techniques by overcoming traditional challenges such as background noise, complex data interpretation, and manual feature extraction [40]. This technical guide explores the transformative impact of AI-powered Raman spectroscopy, with a specific focus on its dual role in pharmaceutical impurity detection and disease diagnosis, providing detailed methodologies and resources for researchers and drug development professionals.

Technical Foundations: Raman Spectroscopy and AI Integration

Raman spectroscopy probes molecular vibrations to provide a characteristic "fingerprint" of a sample's chemical composition. The core advantage of being non-destructive makes it ideal for analyzing precious pharmaceutical compounds and biological specimens [40] [41]. However, the high-dimensional, noisy, and multicollinear nature of Raman data often makes manual interpretation and traditional chemometric analysis labor-intensive and prone to error [40] [42].

Deep learning algorithms address these limitations by automatically identifying complex patterns and meaningful features from raw spectral data with minimal manual intervention [40]. Key architectures include:

- Convolutional Neural Networks (CNNs): Excel at identifying localized spectral features and shapes, such as characteristic peak patterns of specific impurities or biomarkers [42] [41].

- Transformers: Utilize attention mechanisms to capture long-range dependencies and relationships across different spectral regions, which is useful for identifying correlated features [40] [42].

- Generative Adversarial Networks (GANs): Can be employed for data augmentation to enhance model training where experimental data is scarce [40].
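
To make the CNN point concrete, consider the core operation of a 1D convolutional layer. A small kernel slides along the wavenumber axis and responds strongly wherever the local spectral shape matches it. The sketch below uses one hand-set kernel in NumPy rather than a trained network. The Gaussian band, the kernel width, and the ReLU activation are illustrative assumptions.

```python
import numpy as np


def conv1d_relu(signal, kernel, bias=0.0):
    """One channel of a 1D convolutional layer ('valid' padding, stride 1) + ReLU.

    Like deep-learning libraries, this computes a cross-correlation: the kernel
    is slid along the spectrum without being flipped.
    """
    k = len(kernel)
    out = np.array([signal[i:i + k] @ kernel for i in range(len(signal) - k + 1)])
    return np.maximum(out + bias, 0.0)


# Synthetic spectrum: constant baseline plus one Gaussian band centred at channel 120.
channels = np.arange(300)
spectrum = 0.1 + np.exp(-0.5 * ((channels - 120) / 4.0) ** 2)

# Hand-set "band detector" kernel: a zero-mean Gaussian, so a flat baseline scores ~0.
offsets = np.arange(-10, 11)
kernel = np.exp(-0.5 * (offsets / 4.0) ** 2)
kernel -= kernel.mean()

feature_map = conv1d_relu(spectrum, kernel)
# Output index i covers channels i..i+20, so its centre channel is i + 10.
band_channel = int(np.argmax(feature_map)) + len(kernel) // 2
print(band_channel)
```

In a trained CNN, many such kernels are learned from data rather than set by hand. Stacking layers then lets the network combine local band shapes into patterns characteristic of specific compounds.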

A significant challenge in deploying these "black box" models in regulated environments like pharmaceuticals is their interpretability. Researchers are addressing this by developing explainable AI (XAI) methods, such as SHAP (SHapley Additive exPlanations) and attention mechanisms, which provide insights into which spectral regions (wavenumbers) are most influential in a model's decision-making process [40] [42] [41]. This transparency is crucial for regulatory acceptance and building trust in AI-driven results [40].
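
For a linear model with independent features, SHAP values have a closed form. The contribution of wavenumber i to one prediction is w_i (x_i − E[x_i]), and the contributions sum to the prediction minus the average prediction. The NumPy sketch below uses that property to rank Raman shifts for a single spectrum. The weights, the wavenumber grid, and the background set are hypothetical. This closed form does not carry over to CNNs or transformers, which need model-specific explainers such as those in the `shap` library.

```python
import numpy as np


def linear_shap(weights, x, background):
    """Exact SHAP values for a linear model f(x) = weights @ x + b.

    Assumes independent features; `background` is a set of reference spectra
    (rows) used to estimate the expected input E[x].
    """
    return weights * (x - background.mean(axis=0))


def most_influential(shap_values, wavenumbers, k=3):
    """Raman shifts with the largest absolute contribution, most influential first."""
    order = np.argsort(-np.abs(shap_values))[:k]
    return wavenumbers[order]


# Hypothetical 8-channel model with large weights at two "impurity" bands.
wavenumbers = np.linspace(400, 1800, 8)
weights = np.array([0.0, 0.1, 0.0, 2.0, 0.0, -1.5, 0.0, 0.2])
bias = 0.3
rng = np.random.default_rng(1)
background = rng.normal(size=(50, 8))
spectrum = background.mean(axis=0) + 1.0   # every channel 1 unit above the mean

phi = linear_shap(weights, spectrum, background)
# Local accuracy: contributions sum to f(x) minus the mean prediction on the background.
gap = (weights @ spectrum + bias) - (background @ weights + bias).mean()
print(np.isclose(phi.sum(), gap), most_influential(phi, wavenumbers, k=2))
```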

Application 1: Impurity Detection and Pharmaceutical Quality Control

The detection and profiling of impurities—organic, inorganic, and residual solvents—are critical for ensuring drug safety, efficacy, and regulatory compliance [43]. AI-powered Raman spectroscopy significantly enhances this domain.

Experimental Protocol: Impurity Identification and Quantification

The following workflow details a standard methodology for using AI-powered Raman spectroscopy in impurity analysis:

Sample Preparation:

- Prepare standard solutions of the Active Pharmaceutical Ingredient (API) with known, spiked concentrations of target impurities [43] [41].

- For solid dosage forms, homogenize tablets or powders to ensure a representative sample. Analysis can often be performed non-invasively [41].
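
Spiked standards are usually prepared at impurity levels expressed relative to the API. The sketch below shows the arithmetic, assuming a w/w spiking scheme and a dilution from an impurity stock solution (C1V1 = C2V2). The API mass, the stock concentration, and the target levels are hypothetical, not values from references [41] or [43].

```python
def spike_volume_ul(api_mass_mg, level_pct_w_w, stock_mg_per_ml):
    """Volume (µL) of impurity stock that gives `level_pct_w_w` relative to the API."""
    impurity_mg = api_mass_mg * level_pct_w_w / 100.0   # target impurity mass
    return impurity_mg / stock_mg_per_ml * 1000.0        # mL -> µL


# Hypothetical design: 100 mg API per standard, impurity stock at 0.5 mg/mL.
levels_pct = [0.05, 0.10, 0.15, 0.50]
for level in levels_pct:
    print(f"{level:.2f}% w/w -> {spike_volume_ul(100.0, level, 0.5):.0f} µL of stock")
```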

Data Acquisition:

- Use a Raman spectrometer (e.g., Endress+Hauser Raman Rxn2 analyzer) with a 785 nm laser to minimize fluorescence [41].

- Collect multiple spectra from each sample to account for heterogeneity. Key parameters include a spectral resolution of 1 cm⁻¹ and a range covering the fingerprint region (e.g., 150–1150 cm⁻¹) where most molecular vibrations occur [41].

- Implement daily wavelength and intensity calibration using certified standards (e.g., cyclohexane) to ensure data reproducibility [41].
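
Two calculations sit behind the acquisition step. First, the Raman shift is the difference between the excitation and scattered wavenumbers, Δν̃ (cm⁻¹) = 10⁷/λ₀ − 10⁷/λ, with both wavelengths in nm. With a 785 nm laser, the 150–1150 cm⁻¹ window therefore falls at roughly 794–863 nm. Second, daily calibration corrects the shift axis against known reference bands. The sketch below fits a low-order polynomial from measured to reference band positions. The cyclohexane values are approximate literature positions and should be confirmed against the certified values of the standard in use. The "measured" positions are hypothetical.

```python
import numpy as np


def raman_shift_cm1(scattered_nm, excitation_nm=785.0):
    """Raman shift (cm^-1) from excitation and scattered wavelengths (nm)."""
    return 1e7 / excitation_nm - 1e7 / np.asarray(scattered_nm, dtype=float)


def scattered_nm(shift_cm1, excitation_nm=785.0):
    """Inverse conversion: wavelength (nm) at which a given Raman shift appears."""
    return 1e7 / (1e7 / excitation_nm - np.asarray(shift_cm1, dtype=float))


# Approximate cyclohexane band positions inside the 150-1150 cm^-1 window;
# confirm against the certified values supplied with your standard.
CYCLOHEXANE_REF_CM1 = np.array([384.1, 426.3, 801.3, 1028.3])


def fit_shift_correction(measured_cm1, reference_cm1=CYCLOHEXANE_REF_CM1, degree=2):
    """Polynomial that maps measured band positions onto reference positions."""
    return np.poly1d(np.polyfit(measured_cm1, reference_cm1, degree))


print(scattered_nm([150.0, 1150.0]))            # window edges in nm for a 785 nm laser
measured = CYCLOHEXANE_REF_CM1 * 1.001 + 2.0    # hypothetical miscalibrated readings
correction = fit_shift_correction(measured)
print(correction(measured) - CYCLOHEXANE_REF_CM1)  # residuals after correction
```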

Data Preprocessing:

- Apply standard preprocessing steps: dark noise subtraction, cosmic ray filtering, and intensity correction [41].

- For AI models, additional steps like spectral cropping to the fingerprint region can reduce dimensionality and focus the model on chemically informative features [41].
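
The preprocessing chain can be sketched in NumPy as three steps: dark subtraction, cosmic-ray removal, and cropping to the fingerprint region. The cosmic-ray step here uses one common approach that fits the protocol's replicate acquisitions. A spike present in only one acquisition is compared against the median of all acquisitions and replaced. The threshold, the robust scale estimate, and the synthetic data are assumptions. Instrument software may implement these steps differently.

```python
import numpy as np


def subtract_dark(raw, dark):
    """Remove the detector dark signal recorded with the laser blocked."""
    return raw - dark


def remove_cosmic_rays(replicates, threshold=8.0):
    """Replace spikes that appear in only one of several replicate acquisitions.

    replicates: array (n_acquisitions, n_channels) of the same spot, n_acquisitions >= 3.
    A point is flagged when it exceeds the replicate median by `threshold` robust
    standard deviations (median absolute residual scaled by 1.4826); flagged points
    are replaced by the median value.
    """
    median = np.median(replicates, axis=0)
    residual = replicates - median
    scale = max(1.4826 * np.median(np.abs(residual)), 1e-12)
    spikes = residual > threshold * scale          # cosmic rays are positive-only
    return np.where(spikes, median, replicates), spikes


def crop(shift_cm1, spectrum, low=150.0, high=1150.0):
    """Keep only channels inside the chosen fingerprint window."""
    mask = (shift_cm1 >= low) & (shift_cm1 <= high)
    return shift_cm1[mask], spectrum[..., mask]


# Synthetic demo: five acquisitions of one band, with a cosmic ray in acquisition 2.
rng = np.random.default_rng(2)
shift = np.linspace(100, 1200, 300)
dark = np.full(300, 0.05)
band = np.exp(-0.5 * ((shift - 800) / 10) ** 2)
raw = band + dark + rng.normal(0, 0.01, size=(5, 300))
raw[2, 40] += 5.0

cleaned, spikes = remove_cosmic_rays(subtract_dark(raw, dark))
shift_fp, mean_spectrum = crop(shift, cleaned.mean(axis=0))
print(spikes.sum(), shift_fp.min(), shift_fp.max())
```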

AI Model Training and Analysis:

- Train a 1D-CNN or Support Vector Machine (SVM) on a dataset of preprocessed spectra from pure API and impurity-containing samples [41].

- For quantification, structure the task as a regression problem to predict impurity concentration.

- Apply SHAP analysis to the trained model to identify the specific Raman shifts (wavenumbers) most predictive of impurity presence. This links the AI's decision to underlying chemical structures, providing interpretability [41].
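
For the quantification route, the sketch below substitutes closed-form ridge regression for the 1D-CNN or SVM used in [41]. Ridge is a linear model chosen for brevity. Partial least squares is the more traditional chemometric choice. The model is trained on synthetic spectra of an API with a spiked impurity band. Band positions, widths, noise level, and impurity levels are all invented for illustration.

```python
import numpy as np


def fit_ridge(X, y, alpha=1e-3):
    """Closed-form ridge regression; the intercept is left unpenalised via mean-centring."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def predict(X, w, b):
    """Predicted impurity level for each spectrum (row) in X."""
    return X @ w + b


def band(shift, centre, width):
    """Gaussian band used to build synthetic spectra."""
    return np.exp(-0.5 * ((shift - centre) / width) ** 2)


# Synthetic spectra: API bands of varying intensity plus an impurity band whose
# height is proportional to a hypothetical impurity level (% w/w).
rng = np.random.default_rng(3)
shift = np.linspace(150, 1150, 200)
api = band(shift, 600, 15) + 0.6 * band(shift, 900, 20)
impurity = band(shift, 700, 8)

level = rng.uniform(0.0, 0.5, 120)
scale = rng.uniform(0.95, 1.05, 120)
X = scale[:, None] * api + level[:, None] * impurity + rng.normal(0, 0.002, (120, 200))

w, b = fit_ridge(X[:100], level[:100])
rmse = np.sqrt(np.mean((predict(X[100:], w, b) - level[100:]) ** 2))
print(f"held-out RMSE: {rmse:.4f} % w/w")
```

With real data, the held-out spectra should come from independently prepared standards rather than replicate scans of training samples. Otherwise the error estimate will be optimistic.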

The following diagram illustrates this experimental and computational workflow:

Diagram 1: Workflow for AI-powered impurity detection.

Performance of ML Models in Pharmaceutical Classification

In a benchmark study classifying Raman spectra of 32 pharmaceutical compounds, every machine learning model listed in Table 1 exceeded 97% accuracy, supporting their suitability for quality control applications [41].

Table 1: Benchmark performance of machine learning models on Raman spectra of 32 pharmaceutical compounds [41].

| Machine Learning Model | Reported Accuracy (%) |
|---|---|
| Linear Support Vector Machine (SVM) | 99.88 |
| 1D Convolutional Neural Network (CNN) | 99.26 |
| Random Forest | 98.30 |
| XGBoost | 98.30 |
| LightGBM | 97.99 |
| k-Nearest Neighbors (k-NN) | 97.12 |

Application 2: Disease Diagnosis and Clinical Biomarker Discovery

In clinical diagnostics, AI-powered Raman spectroscopy offers a non-invasive, rapid, and highly accurate method for detecting diseases at the molecular level, often before morphological changes occur [40].

Experimental Protocol: Serum-Based Disease Diagnosis

This protocol is adapted from research on diagnosing autoimmune diseases using serum Raman spectroscopy [44].

Sample Collection and Preparation:

- Collect fresh blood samples from patients and healthy controls under fasting conditions to minimize dietary interference [44].

- Centrifuge blood samples at 4000 rpm and 4 °C to separate serum. Aliquot the serum and store it at -80 °C until analysis [44].

- For measurement, transfer a small aliquot (e.g., 10 µL) of serum onto a tinfoil-lined slide and allow it to air dry partially (approx. 13 minutes) [44].
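
Centrifugation settings transfer more reliably between laboratories when stated as relative centrifugal force (× g) rather than rpm, because the force depends on the rotor radius. The sketch below converts the 4000 rpm setting using the standard relation RCF = 1.118 × 10⁻⁵ × r × rpm², with r in cm. The 10 cm radius is a hypothetical example, since the protocol does not state the rotor used.

```python
def rpm_to_rcf(rpm, radius_cm):
    """Relative centrifugal force (x g) from rotor speed and radius in cm."""
    return 1.118e-5 * radius_cm * rpm ** 2


def rcf_to_rpm(rcf, radius_cm):
    """Rotor speed needed to reach a target RCF on a rotor of the given radius."""
    return (rcf / (1.118e-5 * radius_cm)) ** 0.5


# Hypothetical 10 cm rotor radius; the source protocol specifies only 4000 rpm.
print(f"{rpm_to_rcf(4000, 10.0):.0f} x g")   # about 1789 x g
```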

Data Acquisition:

- Use a high-resolution confocal Raman spectrometer for analysis [44].

- Collect multiple spectra per serum sample to ensure statistical robustness.

Addressing the Label Scarcity Challenge: Medical data, especially for complex conditions like autoimmune diseases, is often poorly labeled. Unsupervised Domain Adaptation (UDA) techniques can be employed to leverage knowledge from a labeled source domain (e.g., one disease dataset) to perform diagnosis on an unlabeled target domain (e.g., a new, related disease) [44].

- Framework: A Pseudo-label-based Conditional Domain Adversarial Network (CDAN-PL) can be used [44].

- Process: The model generates high-confidence pseudo-labels for the unlabeled target domain and uses adversarial training to align the feature distributions of the source and target domains. This allows for accurate diagnosis even without initial labels for the target data [44].

- Performance: This approach has achieved an average accuracy of 92.3% in homologous transfer tasks (between similar diseases) and 90.05% in non-homologous tasks, demonstrating strong generalization [44].
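
Two ingredients of the CDAN-PL framework can be sketched without a deep-learning library. First, pseudo-labels are kept only for target spectra whose top predicted class probability clears a confidence threshold. Second, the "conditional" part of CDAN, in its original formulation, feeds the domain discriminator the multilinear map of the feature vector and the classifier's probability vector (their outer product). This makes the domain alignment class-aware. The threshold of 0.9, the array shapes, and the toy numbers are assumptions. The feature extractor, classifier, and adversarial training loop of [44] are not reproduced.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def select_pseudo_labels(target_logits, threshold=0.9):
    """Indices and predicted labels of target spectra with confidence >= threshold."""
    probs = softmax(target_logits)
    keep = np.flatnonzero(probs.max(axis=1) >= threshold)
    return keep, probs[keep].argmax(axis=1)


def multilinear_map(features, probs):
    """Per-sample outer product f (x) g, flattened: the discriminator input in CDAN."""
    return np.einsum("nf,nc->nfc", features, probs).reshape(len(features), -1)


# Toy target-domain logits for three spectra and two classes (hypothetical numbers).
target_logits = np.array([[5.0, 0.0], [0.2, 0.1], [0.0, 4.0]])
keep, pseudo = select_pseudo_labels(target_logits, threshold=0.9)
print(keep, pseudo)   # spectra 0 and 2 are confident enough to receive pseudo-labels

features = np.ones((3, 4))                 # stand-in for extracted spectral features
disc_input = multilinear_map(features, softmax(target_logits))
print(disc_input.shape)
```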

The following diagram visualizes this adaptive diagnostic process:

Diagram 2: Unsupervised Domain Adaptation for disease diagnosis.

Advanced Feature Selection for Enhanced Diagnostic Interpretability

The high dimensionality of Raman data necessitates robust feature selection to improve model performance and interpretability. Explainable AI-based feature selection methods have proven highly effective [42].
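
Importance scores can come from GradCAM maps, attention weights, or SHAP values. Whatever the source, the selection step itself is simple: aggregate the per-spectrum importance over the training set and keep the highest-scoring fraction of wavenumbers. The sketch below assumes importance arrays of shape (n_spectra, n_wavenumbers) and uses the 10% fraction reported for GradCAM in Table 2. Averaging absolute importance is an assumed aggregation rule, and the cited study may aggregate differently.

```python
import numpy as np


def select_top_fraction(importance, fraction=0.10):
    """Boolean mask over wavenumbers keeping the top `fraction` by mean |importance|."""
    mean_importance = np.abs(importance).mean(axis=0)
    k = max(1, int(round(fraction * mean_importance.size)))
    top = np.argsort(mean_importance)[::-1][:k]
    mask = np.zeros(mean_importance.size, dtype=bool)
    mask[top] = True
    return mask


# Hypothetical importance maps for 5 spectra x 50 wavenumber channels.
importance = np.zeros((5, 50))
importance[:, [3, 17, 40, 41, 42]] = [5.0, 4.0, 3.0, 2.0, 1.0]
mask = select_top_fraction(importance, fraction=0.10)
print(np.flatnonzero(mask))      # channels kept for the reduced model
# X_reduced = X[:, mask] would then feed the downstream classifier.
```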

Table 2: Comparison of feature selection methods for medical Raman spectroscopy [42].

| Feature Selection Method | Basis of Selection | Reported Outcome |
|---|---|---|
| CNN-based GradCAM | Gradient-weighted Class Activation Mapping to highlight important spectral regions. | Highest average accuracy, selecting only 10% of features. |
| Transformer Attention Scores | Weights from self-attention layers identifying relevant wavenumbers. | Comparable accuracy with significant data compression. |