Resolving Inaccurate Spectrometer Results: A 2025 Troubleshooting Guide for Scientists

This guide provides researchers and drug development professionals with a comprehensive framework for diagnosing, troubleshooting, and preventing inaccurate spectrometer analysis.


Abstract

This guide provides researchers and drug development professionals with a comprehensive framework for diagnosing, troubleshooting, and preventing inaccurate spectrometer analysis. Covering foundational error sources, advanced methodological applications, step-by-step troubleshooting for common issues, and robust validation techniques, it synthesizes the latest 2025 instrumentation trends and practical solutions to ensure data integrity and instrument performance in biomedical research.

Understanding the Root Causes of Spectrometer Inaccuracy

Stray Light: Troubleshooting Guide

Q1: What is stray light and how does it affect my spectrophotometric measurements?

Stray light, often referred to as "false" light, is any detected signal composed of wavelengths outside the intended measurement bandpass [1] [2]. It is a significant source of error in spectrophotometry. Its effect is most pronounced when measuring samples with high absorbance (low transmittance) [3]. Stray light causes a negative deviation from the Beer-Lambert law, meaning that measured absorbance values will be lower than the true absorbance, leading to inaccurate quantitative results, especially at high sample concentrations [4] [3].
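The size of this negative deviation can be estimated with a short sketch. It uses the common textbook model in which a constant fraction `s` of the reference intensity reaches the detector regardless of the sample. The function name and the 0.1% stray-light level are illustrative assumptions, not values taken from the cited sources.

```python
import math

def apparent_absorbance(true_absorbance: float, stray_fraction: float) -> float:
    """Absorbance reported when a constant stray-light fraction reaches the detector.

    Model (assumed): A_obs = -log10((T + s) / (1 + s)), where T = 10**(-A_true)
    and s is the stray light expressed as a fraction of the reference intensity.
    """
    transmittance = 10.0 ** (-true_absorbance)
    return -math.log10((transmittance + stray_fraction) / (1.0 + stray_fraction))

# Illustrative stray-light level of 0.1% (hypothetical instrument).
for a_true in (0.5, 1.0, 2.0, 3.0, 4.0):
    a_obs = apparent_absorbance(a_true, stray_fraction=0.001)
    print(f"true A = {a_true:.1f}  ->  measured A = {a_obs:.3f}")
```

Under this model, a stray-light fraction of 0.1% caps the readable absorbance at about 3.0 no matter how concentrated the sample is, which is consistent with the plateau described in the symptoms for high-concentration samples.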

Q2: What are the common symptoms of a stray light problem in my data?

- Absorbance values plateau or decrease at high concentrations instead of increasing linearly [3].

- Inaccurate chromaticity values when measuring light sources [1].

- Poor resolution of sharp spectral features, such as the Sun's edge in UV measurements [1].

Q3: What are the primary causes of stray light within a spectrometer? Stray light originates from imperfections in the optical path [1] [4]:

- Scattering at optical components, especially from ruled diffraction gratings which have more mechanical irregularities compared to holographic gratings [4] [5].

- Higher diffraction orders from the grating that are not adequately filtered [1] [3].

- Inter-reflections between mirrors, the detector, grating, and housing surfaces [1].

- Internal reflections within optical components like prisms [6].

- In Digital Micro-mirror Device (DMD)-based spectrometers, stray light can be classified into wavelength-related variable stray light and wavelength-unrelated intrinsic stray light [7].

Q4: What methodologies can I use to identify and quantify stray light? A standard method involves the use of sharp-edge (long-pass) filters [1]. For example, a Schott GG475 filter blocks virtually all light below 475 nm. When a broadband halogen lamp is measured through this filter, any signal detected below 475 nm is a direct measure of the system's stray light plus noise [1]. This test is best visualized on a logarithmic scale.
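As a rough illustration of how the edge-filter reading might be turned into a number, the sketch below averages the dark-corrected signal in the blocked region and divides it by the peak signal in the passed region. The function name, the 25 nm guard band below the cutoff, and the normalization to the peak are assumptions made for this example; the cited source does not prescribe a specific formula.

```python
import numpy as np

def stray_light_ratio(wavelengths_nm, filtered_counts, dark_counts,
                      cutoff_nm=475.0, guard_nm=25.0):
    """Mean dark-corrected signal in the blocked region, relative to the peak signal."""
    wl = np.asarray(wavelengths_nm, dtype=float)
    signal = (np.asarray(filtered_counts, dtype=float)
              - np.asarray(dark_counts, dtype=float))
    blocked = wl < (cutoff_nm - guard_nm)  # stay clear of the filter's transition edge
    if not blocked.any():
        raise ValueError("no pixels fall in the blocked region")
    return signal[blocked].mean() / signal.max()

# Synthetic example: 100 counts of dark signal, 50000 counts above the cutoff,
# and 5 counts of stray light on every pixel (all values hypothetical).
wl = np.linspace(350.0, 800.0, 451)
dark = np.full_like(wl, 100.0)
counts = dark + np.where(wl >= 475.0, 50000.0, 0.0) + 5.0
ratio = stray_light_ratio(wl, counts, dark)
print(f"stray light ratio: {ratio:.1e}")
```

Because the ratio is typically several orders of magnitude below 1, plotting the spectrum on a logarithmic axis makes the blocked-region signal visible.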

Q5: What are the main strategies for reducing the impact of stray light?

- Optical Design Improvements: Use spectrometers with holographic gratings and high-quality mirror coatings to minimize scattering [4] [5]. Double-monochromator designs offer superior stray light suppression by passing light through two sets of dispersive elements [3].

- Mathematical Correction: High-end spectrometers can be characterized using a tunable laser (e.g., an Optical Parametric Oscillator, OPO) to determine their Line Spread Function (LSF) across all wavelengths [1]. This data forms a stray light correction matrix that can be applied during data processing, reducing stray light by 1-2 orders of magnitude [1].

- Optical Filtering: Some advanced spectrometers integrate automated filter wheels containing long-pass and bandpass filters. This reduces the broadband radiation entering the spectroradiometer, significantly cutting the potential for stray light generation, particularly in the UV range [1].
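The mathematical correction can be sketched in a few lines. This example follows one common formulation, in which the measured spectrum is modeled as `(I + D) @ true_spectrum` and the correction matrix is the inverse of `I + D`; the source does not state which algorithm a given instrument uses, so treat the function, the `in_band_halfwidth` parameter, and the synthetic line spread functions as assumptions.

```python
import numpy as np

def correction_matrix(lsf_columns, in_band_halfwidth=2):
    """Build C = (I + D)^-1 from measured line spread functions.

    lsf_columns[i, j] is the response of pixel i to monochromatic light
    centred on pixel j (one column per laser wavelength).
    """
    lsf = np.asarray(lsf_columns, dtype=float)
    n = lsf.shape[0]
    rows, cols = np.indices((n, n))
    in_band = np.abs(rows - cols) <= in_band_halfwidth
    in_band_sum = np.where(in_band, lsf, 0.0).sum(axis=0)
    # D holds only the out-of-band response, per unit of in-band signal.
    stray = np.where(in_band, 0.0, lsf) / in_band_sum
    return np.linalg.inv(np.eye(n) + stray)

# Synthetic instrument: ideal response plus 1e-4 of stray light on every other pixel.
n = 50
lsf = np.eye(n) + 1e-4 * (1.0 - np.eye(n))
pixels = np.arange(n)
true_spectrum = 1000.0 * np.exp(-0.5 * ((pixels - 25) / 3.0) ** 2)
measured = lsf @ true_spectrum

corrected = correction_matrix(lsf, in_band_halfwidth=0) @ measured
print(f"pixel 0: measured {measured[0]:.3f}, corrected {corrected[0]:.3f}")
```

In practice the matrix is computed once during characterization and then applied to every acquired spectrum as a single matrix multiplication.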

Table 1: Stray Light Identification and Mitigation Techniques

| Method | Description | Key Application |

|---|---|---|

| Edge Filter Test | Uses a sharp-cutoff filter to directly measure stray light in blocked spectral regions [1]. | System characterization and diagnostic. |

| Mathematical Stray Light Correction | Applies a pre-characterized correction matrix to measured data [1]. | Post-processing correction for high-accuracy measurements. |

| Double Monochromator | Uses two gratings in series to achieve very high rejection of stray light [3]. | Measuring highly absorbing or scattering samples. |

| Integrated Filter Wheels | Automatically switches internal filters to limit broadband light input [1]. | Stray light suppression in critical regions like the UV. |

[Workflow diagram: systematic approach for diagnosing and resolving stray light issues.]

Spectral Bandwidth: Troubleshooting Guide

Q1: What is spectral bandwidth and why is it critical for resolution? The Spectral Bandwidth (SBW) is the full width at half maximum (FWHM) of the triangular intensity distribution of light exiting the monochromator [3]. It is not the slit width itself, but is directly related to it via the grating's properties and focal length [3]. The SBW determines the instrument's ability to distinguish between two closely spaced spectral features (i.e., its resolution) [3].

Q2: How do I select the appropriate spectral bandwidth for my experiment? A general rule is to set the instrument's SBW to 1/10 of the natural width (FWHM) of the sample's absorption peak [3]. This ensures the recorded peak shape is not artificially broadened by the instrument.

Q3: What is the trade-off between spectral bandwidth and signal-to-noise ratio? There is a fundamental trade-off between resolution and signal-to-noise ratio (SNR) [3]:

- Narrow SBW: Provides better spectral resolution, allowing closely spaced peaks to be differentiated. However, it allows less light to reach the detector, which results in a lower SNR [3].

- Wide SBW: Allows more light to pass through, increasing the signal and thus the SNR. The downside is poorer resolution, which can cause sharp peaks to collapse and broaden [3].
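The effect of bandwidth on a recorded peak can be explored numerically. The sketch below convolves a Gaussian band with a triangular slit function whose FWHM equals the SBW. It assumes a weakly absorbing band, so that the convolution can be applied directly to absorbance, and a Gaussian band shape; both are simplifications chosen for this example.

```python
import numpy as np

def observed_peak_height(natural_fwhm_nm, sbw_nm):
    """Peak height (true height = 1.0) after broadening by a triangular slit function."""
    x = np.linspace(-30.0, 30.0, 3001)                    # 0.02 nm grid, symmetric about 0
    sigma = natural_fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    band = np.exp(-0.5 * (x / sigma) ** 2)                # true band shape
    slit = np.clip(1.0 - np.abs(x) / sbw_nm, 0.0, None)   # triangle with FWHM = sbw_nm
    slit /= slit.sum()                                    # unit area preserves band area
    return np.convolve(band, slit, mode="same").max()

h_narrow = observed_peak_height(natural_fwhm_nm=10.0, sbw_nm=1.0)   # SBW = 1/10 of peak width
h_wide = observed_peak_height(natural_fwhm_nm=10.0, sbw_nm=10.0)    # SBW = peak width
print(f"SBW 1 nm: {h_narrow:.3f}   SBW 10 nm: {h_wide:.3f}")
```

With the SBW at one tenth of the natural width, the recorded peak loses less than 1% of its height in this model, whereas an SBW equal to the natural width flattens it noticeably.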

Table 2: Impact of Spectral Bandwidth on Measurement Performance

| Bandwidth Setting | Effect on Resolution | Effect on Signal-to-Noise (SNR) | Recommended Use |

|---|---|---|---|

| Narrow (e.g., 1 nm) | High | Lower | Resolving fine spectral structure; sharp peaks. |

| Wide (e.g., 5 nm) | Low | Higher | Measuring broad, smooth spectral features; low-light situations. |

Q4: How can I check the bandwidth of my spectrometer? The most direct method is to irradiate the monochromator with an isolated, sharp emission line (e.g., from a mercury or deuterium lamp) and record the signal as a function of wavelength. The resulting profile's FWHM is the bandwidth [2]. For users without a line source, a practical check is to see if the instrument can resolve two known, closely spaced absorption bands [2].
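Extracting the FWHM from a recorded line profile is straightforward once the scan is available as arrays. The following is a minimal sketch that assumes a single, baseline-corrected peak fully contained in the scan; the function name and the synthetic triangular profile are illustrative.

```python
import numpy as np

def fwhm(wavelengths_nm, intensities):
    """Full width at half maximum of a single, baseline-corrected peak."""
    wl = np.asarray(wavelengths_nm, dtype=float)
    y = np.asarray(intensities, dtype=float)
    half = y.max() / 2.0
    above = np.flatnonzero(y >= half)
    left, right = above[0], above[-1]
    if left == 0 or right == len(y) - 1:
        raise ValueError("peak is cut off by the scan range")
    # Linearly interpolate the two half-maximum crossings between samples.
    wl_left = np.interp(half, [y[left - 1], y[left]], [wl[left - 1], wl[left]])
    wl_right = np.interp(half, [y[right + 1], y[right]], [wl[right + 1], wl[right]])
    return wl_right - wl_left

# Synthetic triangular profile centred at 546.0 nm with a 2.0 nm FWHM.
wl = np.linspace(540.0, 552.0, 241)
profile = np.clip(1.0 - np.abs(wl - 546.0) / 2.0, 0.0, None)
width = fwhm(wl, profile)
print(f"measured bandwidth: {width:.2f} nm")
```

The scan step should be several times smaller than the expected bandwidth, otherwise the interpolated crossings become unreliable.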

Noise: Troubleshooting Guide

Q1: What are the primary sources of noise in a spectroscopic detector? Noise in a detector arises from several independent sources, and the total noise is the square root of the sum of their squares [8]:

- Photon Shot Noise (n_phot): A fundamental noise source from the statistical variation in the arrival rate of photons. It is proportional to the square root of the signal [8] [5].

- Dark Noise (n_dark): Noise from the thermally generated current in the detector. It is temperature-dependent and increases with integration time [8] [5].

- Read Noise (n_read): A fixed noise introduced by the electronics during the conversion of the charge to a digital signal. It is independent of signal strength and integration time [8].

- Stray Light: While often considered a systematic error, unwanted stray light acts as a background signal that contributes to the overall noise level [5].

Q2: How is the Signal-to-Noise Ratio (SNR) calculated and what does it mean? SNR is the ratio of the average signal power to the average noise power [5]. A high SNR (>>1) means the signal is clear and distinguishable from noise, leading to reliable data. An SNR near 1 means the signal is buried in noise and the measurement is unreliable [5]. The overall SNR is derived from the individual noise components [8].
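The quadrature sum of the noise terms and the resulting SNR can be written out as a short calculation. This sketch works in electrons for a single pixel and reports the amplitude ratio signal / total noise, which is the form usually quoted for detectors. The function name, the parameter names, and the example values are assumptions for illustration.

```python
import math

def snr(signal_e, dark_rate_e_per_s, integration_s, read_noise_e):
    """Single-pixel SNR with all quantities expressed in electrons."""
    n_phot = math.sqrt(signal_e)                            # photon shot noise
    n_dark = math.sqrt(dark_rate_e_per_s * integration_s)   # shot noise of the dark current
    n_total = math.sqrt(n_phot**2 + n_dark**2 + read_noise_e**2)
    return signal_e / n_total

# Hypothetical detector: 20 e- read noise, 50 e-/s dark current, 1 s integration.
for signal in (100, 10_000, 1_000_000):
    print(f"signal {signal:>9} e-  ->  SNR {snr(signal, 50.0, 1.0, 20.0):.1f}")
```

Changing one input at a time shows which term dominates: at low signal the read noise sets the result, while at high signal the SNR approaches the square root of the signal.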

Q3: Under what conditions is each type of noise dominant?

- Shot Noise Limited: This is the ideal scenario for high-SNR measurements. It occurs when the signal is strong and much larger than the dark current. The SNR increases with the square root of the number of photons [8].

- Read Noise Limited: This occurs with very weak signals and/or short integration times, where the fixed read noise is the dominant factor. The SNR increases linearly with the signal [8].

- Dark Noise Limited: This is common in measurements with low signal levels and non-cooled detectors, where the thermally generated dark current becomes significant [8].

Q4: What practical steps can I take to improve my SNR?

- Maximize Signal Throughput: Ensure your optical system is clean and well-aligned. Increase integration time, but be aware this also increases dark current [8] [5].

- Cool the Detector: Using a Thermo-Electric Cooler (TEC) dramatically reduces dark current and is especially effective for long integration times [5].

- Use Holographic Gratings: These generate less stray light than ruled gratings, reducing optical noise [5].

- Average Multiple Scans: Averaging sequential spectra can improve SNR by a factor of the square root of the number of scans.

- Optimize Gain Settings: Configure the detector's electronics for the best balance between signal amplification and noise [8].
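The square-root gain from averaging can be checked with a small simulation. The sketch assumes purely random, uncorrelated noise from scan to scan; averaging does not remove drift or other systematic errors. Signal level, noise level, and trial count are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
true_signal = 1000.0   # counts (hypothetical)
noise_sd = 20.0        # random noise per single scan, in counts (hypothetical)

def averaged_snr(n_scans, n_trials=2000):
    """Estimate the SNR of an n_scans average from repeated simulated trials."""
    scans = true_signal + noise_sd * rng.standard_normal((n_trials, n_scans))
    averaged = scans.mean(axis=1)
    return true_signal / averaged.std(ddof=1)

snr_1 = averaged_snr(1)
snr_16 = averaged_snr(16)
print(f"improvement from averaging 16 scans: {snr_16 / snr_1:.2f}x")
```

Averaging 16 scans yields an improvement close to 4x, so each further doubling of SNR costs four times the acquisition time.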

Table 3: Detector Performance Comparison (Representative Data) [8]

| Detector Model | Technology | Pixel Size (µm) | Read Noise (counts) | Maximum SNR |

|---|---|---|---|---|

| Hamamatsu S10420 | CCD | 14 x 896 | 16 | 475 |

| Hamamatsu S11156-01 | CCD | 14 x 1000 | 21 | 390 |

| Hamamatsu S11639 | CMOS | 14 x 200 | 26 | 360 |

| Sony ILX511B | CCD | 14 x 200 | 53 | 215 |

[Figure: relationship between signal level, noise sources, and the resulting SNR.]

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 4: Key Reagents and Tools for Spectrometer Characterization and Troubleshooting

| Item | Function / Application |

|---|---|

| Sharp-Cutoff Edge Filters (e.g., Schott GG475, OG515) | Diagnostic tools for directly measuring and quantifying stray light in a spectrometer [1]. |

| Holmium Oxide Solution or Glass Filter | A wavelength accuracy standard with well-defined sharp absorption bands for verifying the wavelength scale [2]. |

| Neutral Density Filters | Used to test photometric linearity and dynamic range across various absorbance levels. |

| Stray Light Correction Matrix | A pre-measured characterization of the spectrometer's stray light properties, enabling mathematical correction of acquired data [1]. |

| Thermo-Electric Cooler (TEC) | A device integrated into detectors to reduce thermal dark noise, crucial for low-light measurements and long integration times [5]. |

| Holographic Grating | An optical component that produces less stray light than a ruled grating, improving SNR and measurement accuracy, especially in UV applications [4] [5]. |

FAQs: Resolving Inaccurate Spectrophotometric Analysis Results

Q1: How does cuvette selection impact the accuracy of my UV-Vis measurements?

The choice of cuvette is critical because different materials have distinct optical properties that affect how light passes through them. Using the wrong type of cuvette can lead to significant absorption of the incident light, resulting in inaccurate readings.

- Glass Cuvettes: Suitable for measurements in the visible light range (typically ~340-1000 nm). They are not suitable for ultraviolet (UV) measurements as they absorb UV light.

- Quartz Cuvettes: Essential for measurements in the ultraviolet range (typically ~190-400 nm) and also transmit visible light. They must be used for any UV or UV-Vis analysis [9].

- Plastic Cuvettes: Generally for visible light measurements and are disposable. They are not suitable for UV light and can be easily scratched, which scatters light [9].

Q2: What are the common signs of a dirty cuvette, and how should I clean it?

A dirty cuvette can cause a variety of problems, including unstable readings, drifting baselines, and inaccurate absorbance values. Signs include visible residue on the optical windows, inconsistent readings between replicates, and an inability to zero the instrument properly [9].

A standard cleaning protocol is as follows:

- Rinse immediately after use with an appropriate solvent (e.g., distilled water, ethanol).

- Wash with a mild laboratory detergent and a soft bottle brush if needed.

- Rinse thoroughly multiple times with distilled water to remove all traces of detergent.

- Final rinse with a volatile solvent like acetone or ethanol can help with quick drying and prevent water spots.

- Air-dry upside down on a clean, lint-free tissue [10].

Always handle cuvettes by the frosted or ribbed sides to avoid getting fingerprints on the clear optical windows [9].

Q3: How can improper optical alignment in a spectrometer affect my results?

Misalignment in the spectrometer's optics, such as a misaligned lens in a probe, can prevent the instrument from collecting the full intensity of light from your sample. Since spectrophotometers measure light intensity, this results in highly inaccurate absorbance or transmittance readings [11]. Symptoms include consistently low signal, unexpected baseline shifts, and results that vary significantly between tests of the same sample.

Troubleshooting Guides

Problem: Unstable or Drifting Readings

| Possible Cause | Recommended Solution |

|---|---|

| Insufficient Warm-Up | Allow the instrument lamp to warm up for at least 15-30 minutes before use to stabilize [9]. |

| Air Bubbles in Sample | Gently tap the cuvette to dislodge bubbles before placing it in the instrument [9]. |

| Dirty Cuvette | Clean the cuvette following the protocol above and wipe with a lint-free cloth [9]. |

| Contaminated Optics | If internal lenses or windows are dirty, the instrument may require professional servicing [11] [12]. |

Problem: Inconsistent Replicate Measurements

| Possible Cause | Recommended Solution |

|---|---|

| Inconsistent Cuvette Orientation | Always place the same cuvette into the holder in the same orientation [9]. |

| Sample Degradation | If the sample is light-sensitive, perform readings quickly after preparation to minimize photobleaching [9]. |

| Cuvette Not Matched | For the highest precision, use the exact same cuvette for both the blank and sample measurements [9]. |

Problem: Negative Absorbance Readings

| Possible Cause | Recommended Solution |

|---|---|

| "Dirtier" Blank | The blank solution absorbed more light than the sample. Ensure the blank cuvette is as clean as the sample cuvette and use the same cuvette for both if possible [9]. |

| Optically Mismatched Cuvettes | If using different cuvettes for blank and sample, ensure they are an optically matched pair [9]. |

Experimental Protocols

Protocol 1: Testing Cuvette Cleanliness

This method, derived from a patent for automated analysis, outlines a functional test for cuvette cleanliness using optical radiation [10].

- Fill the cuvette with a "reference" liquid, typically pure water or alcohol.

- Direct a beam of optical radiation (wavelengths from UV to IR) at the wall portion containing the reference liquid.

- Investigate the radiation that has passed through the cuvette and the liquid, either by direct transmission or by measuring scattered light.

- Determine cleanliness by analyzing the investigated radiation. A deviation from the expected signal for the pure reference liquid indicates the presence of contamination [10].

Protocol 2: Wavelength Calibration for a Spectrophotometer

Regular wavelength calibration ensures the instrument accurately identifies and measures the desired light wavelengths.

- Obtain a standard with known, sharp emission lines (e.g., a mercury or neon lamp) [13].

- Scan the standard and measure the observed wavelength of its emission lines.

- Compare results to the known, accepted wavelengths of the standard.

- Align the instrument's wavelength scale to correct any deviations found. The instrument's software typically performs this alignment based on the measured data [2] [13].
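The comparison and alignment steps above amount to fitting a correction curve through pairs of observed and reference wavelengths. The sketch below uses four well-known mercury emission lines as the reference and a first-order polynomial as the correction; the observed readings are invented for illustration, and instrument software may use a different model.

```python
import numpy as np

# Accepted wavelengths of four mercury emission lines (nm, in air).
reference_nm = np.array([253.652, 435.833, 546.074, 576.960])
# Hypothetical readings from an instrument that needs recalibration.
observed_nm = np.array([254.10, 436.20, 546.40, 577.25])

# Fit reference = f(observed); a straight line is an assumption of this sketch.
coeffs = np.polyfit(observed_nm, reference_nm, deg=1)

def correct_wavelength(reading_nm):
    """Map an instrument reading onto the calibrated wavelength scale."""
    return np.polyval(coeffs, reading_nm)

residuals = correct_wavelength(observed_nm) - reference_nm
print("residuals after correction (nm):", np.round(residuals, 3))
```

Residuals that remain large after the fit suggest either a misidentified line or a nonlinearity that a first-order correction cannot capture.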

Research Reagent and Material Solutions

| Item | Function/Best Practice |

|---|---|

| Quartz Cuvettes | Essential for accurate measurements in the ultraviolet (UV) range, as they do not absorb UV light [9]. |

| Lint-Free Wipes | For cleaning cuvette optical surfaces without leaving scratches or fibers that scatter light [9]. |

| Certified Wavelength Standards | Reference materials with known, precise spectral features, such as the absorption bands of holmium oxide solution or the emission lines of a mercury lamp, used to verify the accuracy of the spectrometer's wavelength scale [2] [13]. |

| Neutral Density Filters | Filters with known absorbance values used for photometric calibration to verify the instrument's accuracy in measuring light intensity [13]. |

| High-Purity Solvents | Use the same high-purity solvent for the blank as is used to prepare the sample to ensure an accurate baseline correction [9] [14]. |

Logical Workflow for Diagnosing Optical Component Issues

[Workflow diagram: systematic approach to troubleshooting problems related to cuvettes and alignment, connecting the FAQs, guides, and protocols above.]

Within the context of spectrometer output research, inaccurate analytical results often originate long before the measurement is taken. The integrity of any spectral analysis is fundamentally dependent on the quality of the sample introduced into the instrument. This guide addresses the three pillars of reliable sample preparation—contamination, homogeneity, and degradation—by providing targeted troubleshooting and methodologies to resolve these common yet critical issues, thereby ensuring the accuracy and reproducibility of your research data.

Troubleshooting Common Sample Preparation Errors

The following tables summarize the primary errors, their impact on spectrometry, and recommended solutions.

Table 1: Contamination Errors and Solutions

| Error Source | Impact on Spectrometric Analysis | Corrective & Preventive Actions |

|---|---|---|

| Keratin (from skin, hair) [15] | Obscures target protein peaks in MS; high background in IR around 3300 cm⁻¹ (O-H/N-H stretch) [16]. | Use gloves; work in laminar flow hoods or low-turbulence environments; wear non-fleece lab coats [15]. |

| Water Contamination | Strong, broad IR absorption bands at 3200-3500 cm⁻¹, masking sample O-H/N-H signals [16]. | Use anhydrous solvents and dry KBr; ensure complete sample desiccation [16]. |

| Residual Solvents & Polymers | Overwhelming MS spectra with solvent (e.g., acetone) or polymer peaks; leachates from non-low-bind plastics [15]. | Use HPLC-grade reagents and low-bind tubes; avoid autoclaved tips in organic solvents [15]. |

| Cross-Contamination from Equipment | Appearance of unexpected peaks from previous samples or cleaning agents [15]. | Thoroughly clean equipment; use dedicated tools; implement "waste plates" during manual prep to track discards [17]. |

Table 2: Homogeneity and Degradation Errors and Solutions

| Error Source | Impact on Spectrometric Analysis | Corrective & Preventive Actions |

|---|---|---|

| Inadequate Grinding (KBr Pellets) | Light scattering (Christiansen effect) causes distorted, sloping baselines in IR, obscuring subtle peaks [16]. | Grind sample and KBr to a fine, uniform powder to ensure a transparent pellet [16]. |

| Incorrect Sample Concentration/Thickness | Too thick: Saturated, flat-topped peaks at 0% transmittance in IR. Too thin: Weak signals and poor signal-to-noise ratio [16]. | For liquids, use capillary-thin films between plates. For solids, optimize sample-to-matrix ratio [16]. |

| Sample Degradation | Altered proteome profiles in MS; poor library complexity in NGS; general loss of spectral integrity [18]. | Process fresh samples immediately; use protease/phosphatase inhibitors; store at -80°C with snap-freezing [18]. |

| Improper Cell Lysis | Incomplete protein extraction leads to biased representation in MS and low yield [18]. | Match lysis method to cell type (e.g., gentle detergents for mammalian cells, harsher methods for bacteria) [18]. |

Key Experimental Protocols for Error Mitigation

Protocol for Preparing Robust KBr Pellets for IR Spectroscopy

This protocol is designed to minimize light scattering and baseline distortion by ensuring optimal sample homogeneity.

- Principle: A solid sample is uniformly dispersed in a potassium bromide (KBr) matrix and pressed into a transparent pellet for transmission analysis [16].

- Materials:

  - Anhydrous, spectroscopy-grade KBr [16]

  - Hydraulic press

  - Mortar and pestle

  - Desiccator

- Step-by-Step Methodology:

  - Grinding: Grind approximately 1 mg of your dry solid sample with 100-200 mg of dry KBr. Grind until the mixture has the consistency of a fine, flour-like powder and is completely homogeneous [16].

  - Pellet Formation: Transfer the mixture into a die and place it under a hydraulic press. Apply significant pressure (typically several tons) for one to two minutes to form a transparent pellet [16].

  - Storage: If not used immediately, store the pellet in a desiccator to prevent absorption of atmospheric moisture [16].

- Troubleshooting: A cloudy or opaque pellet indicates incomplete grinding or moisture contamination, which will lead to light scattering and a sloping baseline. Repeat the grinding step with drier materials [16].

Protocol for Paucicellular Sample Preparation for Mass Spectrometry

This protocol maximizes protein/peptide recovery and minimizes contamination when working with low cell numbers (e.g., 300-1000 cells), a common scenario in clinical and developmental research [18].

- Principle: To maintain cell viability, prevent protein degradation, and achieve efficient lysis and digestion while minimizing sample loss across multiple processing steps [18].

- Materials:

- Step-by-Step Methodology:

  - Cell Collection & Washing:

    - Collect cells in a chilled, protein-containing medium if long sorting times are involved to maintain viability [18].

    - Wash cells in cold PBS (pH 7.4) by centrifuging at 300-500 g for 5 minutes. Caution: The pellet may be loose and invisible. Carefully aspirate the supernatant to avoid discarding cells. Perform a maximum of 3 wash rounds [18].

  - Cell Lysis:

    - Use a chemical lysis method with a detergent-based buffer suited to your cell type (e.g., gentle non-ionic detergents for mammalian cells). Avoid harsh mechanical methods to prevent cross-contamination and protein denaturation [18].

    - Keep samples at 4°C throughout to slow metabolic activity and prevent proteolysis [18].

  - Digestion and Peptide Recovery:

- Troubleshooting: Low protein identification rates can stem from apoptosis during sorting (optimize collection medium), sample loss during washing (exercise extreme caution with pellets), or keratin contamination (improve technique and workspace).

Workflow and Decision Pathways

[Figures: Sample Preparation Workflow; Troubleshooting Decision Tree.]

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Materials for Error-Free Sample Preparation

| Item | Function & Rationale |

|---|---|

| Low-Bind Tubes & Tips | Minimizes adsorption of proteins and peptides to plastic surfaces, critical for preventing sample loss in MS and NGS workflows [15]. |

| HPLC-Grade Solvents | High-purity reagents (water, acetonitrile, methanol) prevent introduction of small-molecule contaminants and metal ions that cause adducts in MS [15]. |

| Anhydrous KBr | Essential for preparing clear IR pellets; hygroscopic KBr must be dry to avoid strong, broad water absorption bands obscuring the sample spectrum [16]. |

| Protease/Phosphatase Inhibitors | Added during cell lysis to prevent protein degradation and preserve the native proteome state, especially critical for paucicellular samples [18]. |

| Certified Reference Materials (CRMs) | Used for regular wavelength and photometric calibration of spectrophotometers to correct for instrumental drift and ensure measurement accuracy [19]. |

Frequently Asked Questions (FAQs)

Q1: My IR spectrum shows a huge, broad peak around 3300 cm⁻¹. What is this, and how do I fix it? This is almost certainly caused by water contamination [16]. Water is a strong IR absorber and can be introduced via moisture in your KBr, solvents, or from the atmosphere. Ensure all materials are dry, prepare pellets quickly, and store them in a desiccator.

Q2: I am consistently identifying keratins in my mass spectrometry runs. Where could they be coming from? Keratins from skin, hair, and clothing are the most common protein contaminants [15]. Beyond standard glove use, ensure you work in a clean environment (e.g., laminar flow hood), avoid wearing wool or fleece in the lab, and keep all sample tubes and tip boxes closed when not in use.

Q3: My NGS library yield is unexpectedly low, even though my input DNA seemed fine. What are the main culprits? Low yield often stems from suboptimal purification or quantification. Common causes include using the wrong bead-to-sample ratio during cleanup, inaccurate pipetting that leads to sample loss, and relying on UV absorbance (NanoDrop), which overestimates concentration because contaminants also absorb. Always use fluorometric quantification (e.g., Qubit) for nucleic acids and validate with an electropherogram [17].

Q4: How can I prevent sample degradation when working with limited cell numbers? To maintain cell viability and protein integrity: process samples fresh or snap-freeze pellets at -80°C, keep cells at 4°C during preparation to slow metabolism, use an appropriate cell viability medium during sorting, and include protease inhibitors in your lysis buffer [18].

For researchers in drug development and analytical sciences, the integrity of spectrometer data is paramount. Inaccurate analysis results can derail experiments, invalidate findings, and lead to costly setbacks. A primary source of such inaccuracies is the interplay between environmental conditions, instrument operation, and the inherent drift of calibration over time. This guide details the critical roles of temperature, warm-up time, and calibration drift, providing targeted troubleshooting and FAQs to help you maintain peak instrument performance and ensure the reliability of your spectroscopic data.

Frequently Asked Questions (FAQs)

1. How does ambient temperature specifically cause calibration drift?

Temperature fluctuations directly impact the physical components of a spectrometer. Virtually all scintillators, including NaI(Tl), are temperature-dependent [20]. An ambient temperature change of just a few degrees Celsius can be sufficient to shift peak positions away from their calibrated energies [20]. For High Purity Germanium (HPGe) spectrometry systems, temperature variations are a documented factor leading to energy calibration drift over time [21].

2. What is the minimum warm-up time required for a stable baseline?

A sufficient warm-up period is non-negotiable for stable readings. While specific needs vary by instrument, a general rule is to allow the spectrometer to warm up for at least 15 to 30 minutes before taking measurements [9]. Some protocols, especially those for UV-Vis spectrophotometers, recommend a longer warm-up of 30 to 45 minutes to ensure the light source and electronics have fully stabilized [22] [23]. This allows the lamp's output to stabilize, which is crucial for both a steady baseline and consistent photometric readings [9].

3. Can calibration drift be quantified?

Yes, studies have quantified calibration drift in specific systems. For instance, analysis of a laboratory HPGe detector demonstrated an energy calibration drift ranging from 0.014 keV/day to 0.041 keV/day, with the magnitude depending on the energy level [21]. The study further showed that at 1,332 keV, one day after calibration, up to half of the total error in energy calibration could be attributed to this drift [21].
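A drift rate of this kind can be estimated for any instrument by tracking the centroid of a reference peak over several days and fitting a straight line. The sketch below does this for the 1,332 keV line; the daily centroid values and the 0.1 keV tolerance are invented for illustration and are not taken from the cited study.

```python
import numpy as np

days = np.array([0, 1, 2, 3, 4, 5, 6], dtype=float)
# Hypothetical daily centroids of the 1332 keV reference peak (keV).
centroid_kev = np.array([1332.49, 1332.53, 1332.57, 1332.62,
                         1332.65, 1332.70, 1332.73])

# Least-squares straight line: the slope is the drift rate in keV/day.
drift_kev_per_day, intercept_kev = np.polyfit(days, centroid_kev, deg=1)

tolerance_kev = 0.1    # hypothetical acceptance limit for peak position
days_to_limit = tolerance_kev / abs(drift_kev_per_day)
print(f"drift: {drift_kev_per_day:.3f} keV/day, "
      f"recalibrate within about {days_to_limit:.1f} days")
```

Dividing the acceptance limit by the fitted slope gives a data-driven recalibration interval instead of a fixed schedule.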

4. How often should I check my spectrometer's calibration?

The frequency depends on usage, environmental stability, and application criticality.

- Best Practice: A calibration check before each experiment is recommended for serious research [20].

- High-Use Environments: For instruments in constant use, such as in quality control, daily or start-of-shift blank verification may be necessary [22].

- Routine Schedule: Full photometric and wavelength checks should be performed weekly or monthly, with an annual certified (e.g., ISO/IEC 17025) calibration for traceability [22].

Troubleshooting Guides

Problem 1: Unstable or Drifting Readings During an Experiment

| Symptom | Possible Cause | Solution |

|---|---|---|

| Absorbance or intensity values fluctuate over time. | Insufficient warm-up time. [9] | Let the instrument warm up for at least 30 minutes before use. [22] [9] |

| Readings are inconsistent between replicates. | Temperature fluctuations in the lab. [20] [24] | Place the spectrometer on a stable bench away from drafts, heating vents, and other sources of temperature change. [9] |

| | Air bubbles in the cuvette. [9] | Gently tap the cuvette to dislodge bubbles before measurement. [9] |

| | Sample is evaporating or reacting. [9] | Keep the cuvette covered and minimize the time between measurements. [9] |

Experimental Protocol for Diagnosis:

- Turn on the instrument and note the exact time.

- Prepare a stable reference standard (e.g., a holmium oxide filter for wavelength or a NIST-traceable photometric filter).

- Measure the absorbance or intensity at a specific wavelength every 5 minutes for 60 minutes.

- Plot the measured values against time. The point at which the values plateau (typically within ±0.001 AU) indicates the required warm-up time for your specific instrument and environment.
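The plateau in the final step can also be located programmatically. This sketch takes the last reading as the plateau value and reports the first time point after which every reading stays within ±0.001 AU of it. The function name and the synthetic exponential settling curve are assumptions; if the instrument is still drifting at 60 minutes, the last reading is not a valid reference and the run should be extended.

```python
import numpy as np

def warm_up_minutes(times_min, readings_au, tolerance_au=0.001):
    """First time after which all readings stay within tolerance of the final value."""
    t = np.asarray(times_min, dtype=float)
    a = np.asarray(readings_au, dtype=float)
    outside = np.flatnonzero(np.abs(a - a[-1]) > tolerance_au)
    if outside.size == 0:
        return t[0]                 # stable from the first reading
    return t[outside[-1] + 1]       # first reading after the last excursion

# Synthetic run: one reading every 5 minutes for 60 minutes, settling toward 0.500 AU.
times = np.arange(0, 65, 5)
readings = 0.500 + 0.020 * np.exp(-times / 8.0)
required = warm_up_minutes(times, readings)
print(f"required warm-up: {required:.0f} min")
```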

Problem 2: Inaccurate Quantitative Analysis Results

| Symptom | Possible Cause | Solution |

|---|---|---|

| Analysis of a known standard returns incorrect values. | Calibration drift due to temperature. [20] | Recalibrate the instrument using traceable standards. Implement a drift monitoring program. [24] |

| | Dirty optical windows. [11] | Clean the windows in front of the fiber optic and in the direct light pipe according to the manufacturer's instructions. [11] |

| | Contaminated argon gas (for OES). [11] | Ensure argon gas supply is pure and check for white, milky burns, which indicate contamination. [11] |

| | Use of incorrect cuvettes (e.g., glass for UV). [9] | Use quartz cuvettes for measurements in the ultraviolet range (typically below 340 nm). [9] |

Experimental Protocol for Verification:

- Photometric Accuracy Check: Use a NIST-traceable neutral density filter or a standard like potassium dichromate [22] [23].

- Procedure:

- Warm up the spectrophotometer for 45 minutes [23].

- Select the wavelengths specified in your manual (e.g., 235, 257, 313, 350 nm for potassium dichromate).

- Perform a blank measurement with the appropriate solvent.

- Measure the standard and record the absorbance values.

- Compare your results to the certified values on the standard's certificate. Deviations should be within the manufacturer's specified tolerances [22] [23].

The following table summarizes documented drift rates and tolerances from the literature.

Table 1: Documented Calibration Drift and Tolerances

| Instrument Type | Documented Drift/Tolerance | Key Environmental/Optical Factor | Citation |

|---|---|---|---|

| High Purity Germanium (HPGe) Spectrometer | 0.014 to 0.041 keV/day (dependent on energy) | Ambient temperature, line voltage, electronic variation [21] | [21] |

| General Spectrometer (Theoretical) | Accuracy on the order of 0.1 cm⁻¹ achievable post-calibration | Diffraction angle, spectrometer included angle [25] | [25] |

| NIST-Traceable Photometric Standard | Typical uncertainty of ±0.0023 AU | Handling (fingerprints), cleanliness of optics [23] | [23] |

Workflow Diagram

The diagram below illustrates the logical relationship between environmental factors, instrument status, and the resulting data integrity issues, along with the recommended corrective actions.

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table lists key materials and reagents required for effective spectrometer calibration and maintenance.

Table 2: Essential Materials for Spectrometer Calibration and Maintenance

| Item | Function/Benefit | Example Use Case |

|---|---|---|

| NIST-Traceable Calibration Standards (Filters, Holmium Oxide, etc.) | Provides an absolute reference for verifying photometric and wavelength accuracy, ensuring traceability [22] [23]. | Periodic performance qualification (PQ) and after major maintenance. |

| Certified Gamma Calibration Sources (e.g., Cs-137, Co-60) | Calibrates gamma spectrometers prior to acquisition; checks for linearity and drift [20]. | Energy calibration of HPGe and NaI detectors for radionuclide identification. |

| Polystyrene Film | A known standard for calibrating Infrared (IR) spectrophotometers to verify peak presence and intensity [26]. | Routine calibration check of an FT-IR instrument. |

| Ausmon Drift Monitors | Used to determine the stability of an XRF spectrometer and support long-term drift correction [24]. | Monitoring for drift in XRF instruments used in cement or mining analysis. |

| High-Purity Argon Gas | Used as a purge gas in optical emission spectrometers (OES) to create a clear path for low wavelengths [11]. | Routine analysis of metals to prevent loss of carbon, phosphorus, and sulfur signals. |

| Quartz Cuvettes | Allow light transmission in the UV range (below ~340 nm), where glass or plastic cuvettes absorb light [9]. | UV-Vis analysis of proteins or nucleic acids at 280 nm or 260 nm, respectively. |


Advanced Techniques and Best Practices for Reliable Analysis

Troubleshooting Guides

Guide 1: Resolving Inaccurate Absorbance Readings

Problem: Absorbance readings are unstable, imprecise, or do not match expected values for standard solutions.

Solutions:

- Check Cuvette and Sample Preparation: Ensure cuvettes are clean, free of scratches, and matched for path length. Use high-purity solvents and ensure samples are homogeneous and free of air bubbles, which can scatter light and cause errors [27].

- Verify Instrument Calibration: Perform photometric calibration using a potassium dichromate solution as a reference standard. The measured values of A(1%, 1cm) should fall within accepted limits at specific wavelengths (e.g., 124.5 at 235 nm, 144.0 at 257 nm) [28].

- Investigate Stray Light: Stray light can cause significant errors, especially at high absorbances. Test for stray light using a 1.2% potassium chloride solution, which should have an absorbance greater than 2 at 200 nm [28]. Reduce stray light by ensuring the sample compartment is closed and optical components are clean [27].

- Confirm Wavelength Accuracy: Use holmium oxide or didymium filters to verify that the instrument's wavelength scale is accurate. An inaccurate wavelength will lead to measuring absorbance at the wrong point on the spectrum [27] [28].

Guide 2: Troubleshooting Poor Spectral Resolution

Problem: Inability to distinguish between closely spaced absorption peaks; spectral bands appear broad and overlapped.

Solutions:

- Optimize Slit Width: The slit width directly controls the spectral bandpass. A narrower slit width improves resolution but reduces light intensity. A wider slit increases signal but blurs fine details [29]. Find the optimal balance for your sample.

- Test Resolution Power: Validate the instrument's resolution using a 0.02% v/v solution of toluene in hexane. The ratio of the absorbance at the maximum (~269 nm) to the minimum (~266 nm) should be not less than 1.5 [28].

- Check for System Deficiencies: Poor resolution can also stem from a degraded light source, misaligned optics, or a malfunctioning monochromator. Regular maintenance is essential [27].

Guide 3: Optimizing Signal-to-Noise Ratio (SNR)

Problem: The absorbance signal is weak and noisy, making it difficult to detect low concentrations or small spectral features.

Solutions:

- Adjust Slit Width Strategically: The slit width has a profound effect on SNR. Widening the slit increases light intensity. In photon-noise-limited systems (e.g., with photomultiplier tubes), SNR is directly proportional to slit width. In detector-noise-limited systems, SNR is proportional to the square of the slit width [30].

- Understand the Trade-off: Recognize the inherent compromise between resolution and SNR. For trace analysis where detection limit is critical, a wider slit may be beneficial even if it slightly reduces resolution [30].

- Ensure Proper Light Throughput: Check that the light path is not obstructed. Clean the windows in front of the fiber optic and the light pipe, as dirt can drastically reduce light intensity and cause drift [11].

- Use a Blank Correctly: Always run a proper blank (solvent or reference) for baseline correction to account for background noise [27].
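The slit-width scaling described in the first solution above can be expressed as a small helper. This is an idealized sketch that only encodes the two proportionalities stated in the text (SNR proportional to slit width when photon noise dominates, and to its square when detector noise dominates). It ignores the effect of the absorption band shape, so simulated or measured gains can differ. The function name `relative_snr` is hypothetical.

```python
def relative_snr(slit_ratio, noise_regime):
    """Idealized SNR gain when the slit width is multiplied by slit_ratio.

    noise_regime: "photon"   -> SNR scales linearly with slit width
                  "detector" -> SNR scales with the square of slit width
    """
    if slit_ratio <= 0:
        raise ValueError("slit_ratio must be positive")
    if noise_regime == "photon":
        return slit_ratio
    if noise_regime == "detector":
        return slit_ratio ** 2
    raise ValueError("noise_regime must be 'photon' or 'detector'")


# Doubling the slit width under each noise regime.
print(relative_snr(2.0, "photon"))
print(relative_snr(2.0, "detector"))
```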

Frequently Asked Questions (FAQs)

FAQ 1: What is the optimal slit width for my absorption spectroscopy experiment?

The optimal slit width depends on your analytical goal and the characteristics of your sample [30].

- For recording accurate absorption spectra: Use a sufficiently small slit width so that the spectral bandpass is less than one-tenth of the width of the narrowest absorption band in your spectrum. This minimizes polychromaticity deviations from the Beer-Lambert Law [30].

- For quantitative trace analysis: Where a high signal-to-noise ratio is critical, a wider slit width can be used. Simulation results show that increasing the spectral bandpass can increase the photon SNR by a factor of 5 and the detector SNR by a factor of 30 [30]. The optimum for photon SNR often occurs when the spectral bandpass equals the absorber width [30].
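To see why a wide bandpass lowers the measured absorbance, the following sketch averages the transmitted light over a slit function centred on an absorption peak. It is an illustrative model, not the simulation cited in [30]: it assumes a Gaussian absorption band, a triangular slit function, and a flat source spectrum, and the function name `observed_absorbance` is hypothetical.

```python
import math


def observed_absorbance(peak_absorbance, band_fwhm, bandpass_fwhm, n=2001):
    """Absorbance measured at the centre of a Gaussian band through a
    triangular slit function whose FWHM equals bandpass_fwhm."""
    sigma = band_fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    half_base = bandpass_fwhm  # a triangle's half-base equals its FWHM
    transmitted = 0.0
    incident = 0.0
    for i in range(n):
        x = -half_base + 2.0 * half_base * i / (n - 1)
        weight = 1.0 - abs(x) / half_base          # slit function
        a = peak_absorbance * math.exp(-x * x / (2.0 * sigma * sigma))
        transmitted += weight * 10.0 ** (-a)       # light passing the sample
        incident += weight                          # light passing the blank
    return -math.log10(transmitted / incident)


# True peak absorbance 1.0; bandpass expressed relative to the band width.
for ratio in (0.1, 0.5, 1.0):
    print(ratio, round(observed_absorbance(1.0, 1.0, ratio), 3))
```

In this model the measured value falls further below the true peak absorbance as the relative bandpass grows, which is the polychromaticity deviation the one-tenth guideline is meant to limit.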

FAQ 2: How does slit width affect my measurements beyond just resolution?

Slit width influences your measurements in two key ways:

- Light Throughput: It controls the amount of light entering the spectrometer. A wider slit allows more light, increasing the signal [30] [29].

- Spectral Bandpass: It determines the range of wavelengths simultaneously measured. A wider slit increases the bandpass, which can lead to deviations from the Beer-Lambert Law if it is too large relative to the width of the absorber's absorption band [30]. Because both throughput and bandpass grow with slit width, the light level incident on the sample increases with the square of the slit width [30].

FAQ 3: What is the recommended absorbance range for accurate quantitative analysis?

While spectrophotometers can technically measure very high absorbances, the accuracy of quantitative analysis decreases as absorbance increases due to instrumental deviations like stray light and polychromatic effects. For highly accurate work, it is generally recommended to work within an absorbance range of 0.1 to 1.0, or up to 2.0 only if the instrument's calibration curve has been validated for linearity over that range [30]. Always use calibration standards that bracket the expected concentration of your unknown samples.

FAQ 4: How often should I calibrate my spectrophotometer, and what does calibration involve?

Calibration frequency depends on use and required accuracy, but a monthly schedule is common [28]. Key calibration procedures include:

- Wavelength Accuracy: Using holmium oxide filters or the instrument's built-in mercury or deuterium emission lines to verify the wavelength scale [27] [28].

- Photometric Accuracy: Using potassium dichromate solution to verify the accuracy of absorbance readings [28].

- Stray Light Check: Using potassium chloride solution to ensure stray light is below an acceptable threshold [28] [13].

- Resolution Check: Using a toluene in hexane solution to confirm the instrument can resolve fine spectral features [28].

Data Presentation

Table 1: Effect of Relative Spectral Bandpass on Signal-to-Noise Ratio and Linearity

This table summarizes the simulated trade-offs when increasing the slit width (and thus spectral bandpass) relative to the absorption peak width, at an absorbance of 1.0 [30].

| Relative Spectral Bandpass (SB/Absorber Width) | Photon SNR (Relative Increase) | Detector SNR (Relative Increase) | Analytical Curve Linearity (Average Prediction Error) |

|---|---|---|---|

| 0.1 | Baseline | Baseline | ~0.1% error (Excellent) |

| 0.5 | 5x | 30x | ~1% error (Good) |

| 1.0 | 9x | 140x | ~3% error (Use with caution) |

Table 2: Spectrophotometer Calibration Standards and Acceptance Criteria

This table outlines key parameters, standards, and acceptance criteria for calibrating a UV-Visible spectrophotometer [28].

| Parameter | Standard Used | Experimental Procedure | Acceptance Criteria |

|---|---|---|---|

| Absorbance | Potassium Dichromate in 0.005M H₂SO₄ | Measure absorbance at 235, 257, 313, and 350 nm. Calculate A(1%, 1cm). | A(1%, 1cm) must be within specified limits (e.g., 142.8 - 145.7 at 257 nm) [28]. |

| Resolution | 0.02% v/v Toluene in Hexane | Scan spectrum from 260-420 nm. Measure absorbance at 269 nm and 266 nm. | Ratio of Abs (269 nm) / Abs (266 nm) ≥ 1.5 [28]. |

| Stray Light | 1.2% w/v Potassium Chloride | Measure absorbance with water as a blank at 200 nm (±2 nm). | Absorbance > 2 [28]. |

| Wavelength | Holmium Oxide Filter / Inbuilt Test | Measure absorption peaks or run instrument's self-test. | e.g., Observed peak at 656.1 ± 0.3 nm [28]. |

Experimental Protocols

Protocol 1: Control of Absorbance Using Potassium Dichromate

Purpose: To verify the photometric accuracy of the UV-Visible spectrophotometer [28].

Methodology:

- Standard Preparation: Dry potassium dichromate at 130°C to constant weight. Accurately weigh between 57.0 mg and 63.0 mg. Transfer to a 1000 ml volumetric flask, dissolve, and make up to volume with 0.005M sulfuric acid [28].

- Measurement: Using the solvent (0.005M H₂SO₄) as a blank, measure the absorbance of the prepared potassium dichromate solution at 235, 257, 313, and 350 nm [28].

- Calculation: For each wavelength, calculate the specific absorbance using the formula:

- A(1%, 1cm) = (Measured Absorbance × 10000) / (Weight of potassium dichromate in mg) [28].

Validation: The calculated A(1%, 1cm) values must fall within the pharmacopeia-specified limits (e.g., 144.0 with limits of 142.8 to 145.7 at 257 nm) [28].
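The calculation and limit check in this protocol can be scripted as follows. This is a minimal sketch: the function names are hypothetical, the example reading (0.864 AU for 60.0 mg) is invented for illustration, and only the 257 nm limits quoted above are used. Limits for the other wavelengths should be taken from the applicable pharmacopeia.

```python
def specific_absorbance(measured_absorbance, weight_mg):
    """A(1%, 1cm) for a standard of weight_mg dissolved in 1000 ml."""
    return measured_absorbance * 10000.0 / weight_mg


def within_limits(value, low, high):
    """True when value lies inside the inclusive acceptance range."""
    return low <= value <= high


# Hypothetical reading at 257 nm for 60.0 mg of potassium dichromate.
a_spec = specific_absorbance(0.864, 60.0)
print(round(a_spec, 1), within_limits(a_spec, 142.8, 145.7))
```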

Protocol 2: Determining the Limit of Stray Light

Purpose: To ensure stray light does not exceed levels that would cause significant photometric errors [28].

Methodology:

- Standard Preparation: Prepare a 1.2% w/v solution of potassium chloride (KCl) in water [28].

- Measurement: Using water as a blank, measure the absorbance of the KCl solution at 198.0, 199.0, 200.0, 201.0, and 202.0 nm [28].

- Analysis: Record the absorbance values. The high absorbance of this solution at these wavelengths means any measured light is primarily stray light.

Validation: The measured absorbance at each wavelength must be greater than 2. A value lower than this indicates an unacceptable level of stray light [28].
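A short script can apply this acceptance criterion to all five readings at once. The sketch below assumes the readings are stored in a dictionary keyed by wavelength; the function name `stray_light_failures` and the example values are hypothetical.

```python
def stray_light_failures(absorbance_by_wavelength, min_absorbance=2.0):
    """Return the wavelengths (with readings) that do not exceed
    min_absorbance, i.e. the readings that fail the stray light test."""
    return {wl: a for wl, a in absorbance_by_wavelength.items()
            if a <= min_absorbance}


# Hypothetical KCl readings (nm -> AU) measured against a water blank.
kcl_readings = {198.0: 2.9, 199.0: 2.7, 200.0: 2.4, 201.0: 2.1, 202.0: 1.8}
failures = stray_light_failures(kcl_readings)
print("PASS" if not failures else f"FAIL at {failures}")
```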


The Scientist's Toolkit: Key Research Reagent Solutions

Table 4: Essential Calibration and Validation Materials for Spectrophotometry

| Reagent / Material | Function |

|---|---|

| Potassium Dichromate | A primary standard for verifying the photometric accuracy and linearity of absorbance readings across key wavelengths [28]. |

| Holmium Oxide Filter | Used for wavelength calibration and verification of the instrument's wavelength scale accuracy [27]. |

| Potassium Chloride (KCl) | Aqueous solution used to test for stray light at the lower end of the UV spectrum (around 200 nm) [28]. |

| Toluene in Hexane | Used to assess the resolution power of the instrument by evaluating its ability to distinguish closely spaced absorption peaks [28]. |

| Stable Isotope-Labeled Internal Standards | (For LC-MS/MS) Used to compensate for matrix effects and ionization efficiency variations, improving quantitative accuracy [31]. |

| Certified Reference Materials (CRMs) | Materials with certified absorbance values used to validate the overall accuracy and reliability of the spectrophotometric method [27] [13]. |


Proper Use of Blanks, Reference Standards, and Solvents

FAQs on Resolving Inaccurate Spectrometer Analysis

1. Why is my blank sample showing a high signal, and how can I fix it? A high signal in your blank run, often called a "carryover" or "contaminated blank," indicates that unwanted substances are being detected, which can obscure your actual sample data. To resolve this:

- Check for System Contamination: Flush the system with clean, appropriate solvents to remove any residual sample or contaminants from previous runs [32].

- Verify Solvent Purity: Ensure the solvents used for preparing the blank are of high, instrument-grade quality. Trace impurities in solvents are a common cause of high background signal [16].

- Inspect and Clean Components: For techniques like IR spectroscopy, ensure optical components (e.g., salt plates, ATR crystals) are clean before acquiring a background scan [16]. A contaminated background will create inverted peaks in your final sample spectrum.

- Review Sample Preparation: Use clean labware and ensure all materials are free from contaminants like plasticizers or detergents.

2. My reference standard values are drifting. What are the likely causes and solutions? Inaccurate or drifting mass values from reference standards often point to issues with calibration or instrument stability [32].

- Recalibrate the Instrument: Regularly calibrate the mass axis of your spectrometer using a certified calibration standard appropriate for your mass range.

- Check Standard Integrity: Verify that your reference standard has been prepared correctly and has not degraded or expired. Improper storage can also lead to degradation.

- Monitor Environmental Conditions: Ensure the instrument room has stable temperature and humidity, as fluctuations can affect instrument performance and lead to calibration drift [33] [19].

- Inspect the Ionization Source: In mass spectrometry, a contaminated or misaligned ionization source can lead to unstable signals and inaccurate mass readings. Regular inspection and cleaning are recommended [34].

3. How can residual solvents in my sample lead to analytical errors? Residual solvents are a common source of error, particularly in pharmaceutical analysis and IR spectroscopy.

- Spectral Interference: In IR spectroscopy, strong solvent peaks (e.g., from acetone or ethanol) can overwhelm the peaks from your actual sample, leading to incorrect interpretations [16].

- Toxicity and Compliance: Regulatory guidelines like ICH Q3C and USP <467> classify residual solvents (e.g., Class 1 and 2) and set strict concentration limits (see Table 2). Exceeding these limits can render a pharmaceutical product non-compliant [35].

- Ion Suppression/Enhancement: In mass spectrometry, residual solvents can cause ion suppression or enhancement, leading to inaccurate quantification of the target analyte.

Troubleshooting Guide: A Systematic Workflow

When facing inaccurate results, follow this logical troubleshooting pathway to diagnose and resolve the issue.

Research Reagent Solutions: Essential Materials and Their Functions

Proper selection and use of reagents are fundamental to achieving accurate and reliable results. The table below details key materials used in spectroscopic analysis.

Table 1: Essential Research Reagents and Materials

| Item | Function | Key Considerations |

|---|---|---|

| Headspace Grade Solvents (e.g., Water, DMSO, DMF) | Used for trace-level residual solvent analysis to minimize background interference [35]. | Low volatile organic impurity content is critical for meeting pharmacopeial standards like USP <467> [35]. |

| Certified Reference Standards | Calibrating the instrument and quantifying analytes to ensure measurement accuracy [32]. | Must be traceable, stored correctly, and used before expiration to prevent calibration drift. |

| Deuterated Solvents | Used as the locking solvent in NMR spectroscopy to provide a stable frequency lock. | High isotopic purity is required. The choice of solvent should not interfere with the sample peaks. |

| Anhydrous Salts & Materials (e.g., KBr) | Used for preparing solid samples in techniques like IR spectroscopy to form transparent pellets [16]. | Must be kept dry to prevent water contamination, which creates a broad, interfering peak around 3200-3500 cm⁻¹ [16]. |

| Certified Calibration Tiles | Used to calibrate the photometric scale of color spectrophotometers [33]. | White, black, and green standards must be kept free of debris and damage to ensure flawless precision. |

Table 2: Residual Solvent Classes and Limits (Based on ICH Q3C [35])

| Solvent Class | Description | Examples (Concentration Limit) |

|---|---|---|

| Class 1 | Solvents to be avoided (known human carcinogens, strong environmental hazards). | Benzene (2 ppm), Carbon Tetrachloride (4 ppm) |

| Class 2 | Solvents to be limited (non-genotoxic animal carcinogens, or other irreversible toxicities). | Chloroform (60 ppm), Dichloromethane (600 ppm), Toluene (890 ppm) |

| Class 3 | Solvents with low toxic potential (PDE ≥ 50 mg/day). | Acetone, Ethanol, Ethyl Acetate |

Experimental Protocols for Accurate Analysis

Protocol 1: Establishing a Reliable Baseline with Proper Blanks This protocol is critical for identifying and minimizing background interference.

- Solvent Blank: Use the same high-purity solvent that your sample is dissolved in. This blank corrects for impurities in the solvent itself.

- Method Blank: Prepare a blank that undergoes the exact same sample preparation procedure as your real samples (e.g., same extraction, dilution, and derivatization steps). This identifies contamination introduced during preparation.

- Acquisition: Run the appropriate blank immediately before and after your sample sequence.

- Data Analysis: Inspect the blank chromatogram or spectrum. Any significant peaks in the blank that appear in your samples indicate potential contamination and must be investigated before proceeding.

Protocol 2: Calibration and Quality Control with Reference Standards This ensures the accuracy and traceability of your quantitative results.

- Preparation: Reconstitute or dilute your certified reference material according to the certificate of analysis, using the correct solvent.

- Calibration Curve: Prepare a series of standards at a minimum of five concentration levels across the expected range of your samples.

- Analysis: Run the calibration standards and a solvent blank at the beginning of the sequence. Include a mid-level calibration verification standard periodically throughout the sequence to monitor for drift.

- Acceptance Criteria: The calibration curve should have a correlation coefficient (R²) of ≥ 0.99. The back-calculated concentration of the verification standard should be within ±15% of the theoretical value.
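The acceptance criteria above can be evaluated with an ordinary least-squares fit. The sketch below uses only the standard library. The function names, the five-level calibration data, and the verification reading are all hypothetical, and an unweighted linear model is assumed, which may not suit every assay.

```python
def linear_fit(x, y):
    """Unweighted least-squares line; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


def back_calculate(signal, slope, intercept):
    """Concentration predicted by the calibration line for a signal."""
    return (signal - intercept) / slope


def verification_passes(measured_conc, nominal_conc, tolerance=0.15):
    """True when the back-calculated value is within +/- tolerance."""
    return abs(measured_conc - nominal_conc) <= tolerance * nominal_conc


# Hypothetical five-level calibration (concentration vs. response).
conc = [1.0, 2.0, 5.0, 10.0, 20.0]
resp = [0.051, 0.099, 0.252, 0.498, 1.003]
slope, intercept, r2 = linear_fit(conc, resp)

# Hypothetical mid-level verification standard, nominal 5.0 units.
check = back_calculate(0.250, slope, intercept)
print(r2 >= 0.99, verification_passes(check, 5.0))
```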

Protocol 3: Mitigating Residual Solvent Interference Follow this to prevent solvents from compromising your analysis [16] [35].

- Selection: Choose a solvent with low spectral interference or toxicity for your target analyte (refer to Table 2).

- Drying: For solid samples, ensure complete removal of the process solvent. Use a vacuum dryer or other appropriate equipment to evaporate solvents to levels below regulatory limits [35].

- Verification: For techniques like IR spectroscopy, ensure the sample is completely dry. In GC-MS for residual solvent analysis, use headspace-grade solvents to avoid introducing new contaminants [35].

Leveraging AI and Machine Learning for Enhanced Spectral Interpretation

Spectrophotometric analysis is a cornerstone technique in scientific research and drug development, yet it is inherently susceptible to measurement inaccuracies. A revealing comparative test highlighted this challenge, showing that measurements of the same solution across different laboratories yielded absorbance values with coefficients of variation as high as 22% [2]. These inaccuracies stem from a complex interplay of instrumental limitations, sample-related issues, and environmental factors [19]. The emergence of Artificial Intelligence (AI) and Machine Learning (ML) offers a transformative pathway to overcome these traditional limitations, enabling researchers to move from simple data collection to enhanced, intelligent interpretation [36]. This technical support center guide provides actionable troubleshooting methodologies and FAQs, framed within the thesis that integrating AI with robust experimental practice is key to resolving inaccurate spectrometer output.

Troubleshooting Guides: Identifying and Resolving Common Spectrometer Issues

Fundamental Instrumental Errors and Mitigation

Instrumental problems are a primary source of error. The following table summarizes common issues and their solutions.

| Error Type | Symptoms | Root Cause | Mitigation Strategies |

|---|---|---|---|

| Wavelength Calibration Error [2] [19] | Incorrect readings at specific wavelengths; shifts in known peak positions. | Misalignment of the monochromator or light source; mechanical wear. | Regular calibration with certified reference materials (CRMs) or emission lines (e.g., Deuterium) [2]. |

| Stray Light Interference [2] [37] | Absorbance readings are unstable or nonlinear, especially at high values (e.g., >1.0 AU). | Unwanted light outside the intended bandwidth reaches the detector. | Use proper optical filters; ensure regular maintenance and cleaning of optical components [37]. |

| Light Source Instability [37] | Measurement drift over time; inconsistent signal intensity. | Aging or failing deuterium or tungsten lamps; inconsistent power supply. | Regular monitoring and scheduled replacement of lamps; ensure stable power connection [37]. |

| Photomultiplier Tube (PMT) Sensitivity [19] | Inconsistent signal detection; noisy baseline. | Variations in sensitivity across the PMT cathode; improper beam positioning. | Regular calibration of the PMT using standard samples with known properties [19]. |

Experimental Protocol: Wavelength Accuracy Check

- Objective: Verify the accuracy of the spectrometer's wavelength scale.

- Materials: Holmium oxide solution or holmium glass filter (certified standard).

- Method:

- Place the standard in the sample holder.

- Scan the absorption spectrum across the recommended range (e.g., 240-650 nm).

- Record the wavelengths of the characteristic absorption peaks.

- Analysis: Compare the measured peak wavelengths to the certified values. Deviations greater than the instrument's specification (e.g., ±1 nm) indicate a need for professional recalibration [2].
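The comparison in the analysis step can be automated as sketched below. The peak positions are placeholder values chosen only to illustrate the check, so substitute the certified wavelengths from your own standard's certificate. The function name `out_of_tolerance` and the ±1 nm default are assumptions based on the example specification above.

```python
def out_of_tolerance(measured_nm, certified_nm, tolerance_nm=1.0):
    """Return (certified, measured) pairs whose absolute deviation
    exceeds tolerance_nm."""
    return [(c, m) for m, c in zip(measured_nm, certified_nm)
            if abs(m - c) > tolerance_nm]


# Placeholder values for illustration only; use your certificate's values.
certified = [241.1, 287.2, 361.3, 536.4]
measured = [241.3, 287.0, 362.6, 536.9]

bad_peaks = out_of_tolerance(measured, certified)
print("Recalibration needed:" if bad_peaks else "Within tolerance", bad_peaks)
```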

Sample-Related and Procedural Errors

Sample preparation is a critical and often overlooked source of error.

| Error Type | Symptoms | Root Cause | Mitigation Strategies |

|---|---|---|---|

| Spectral Interference [37] | Complex, overlapping peaks; difficulty isolating the analyte signal. | Multiple compounds in the sample absorb light at similar wavelengths. | Employ selective extraction; use spectral deconvolution algorithms or choose an alternative wavelength [37]. |

| Matrix Effects [37] | Inaccurate calibration; signal suppression or enhancement. | Sample matrix components alter the analyte's absorbance properties. | Use matrix-matching for calibration standards; implement sample pre-treatment (e.g., solid-phase extraction) [37]. |

| Photodegradation [37] | Absorbance decreases over time during measurement. | The analyte undergoes a chemical change upon exposure to the light source. | Minimize exposure to light; use amber glassware; shorten analysis times [37]. |

| Inner-Filter Effect [38] | Apparent decrease in emission quantum yield in fluorescence; distorted bandshape. | Re-absorption of emitted radiation by the sample itself. | Ensure sample absorbance is below 0.1 at the excitation wavelength for fluorescence measurements [38]. |

Experimental Protocol: Stray Light Assessment

- Objective: Qualitatively assess the level of stray light in the instrument.

- Materials: A high-purity, concentrated solution with a sharp cut-off wavelength (e.g., potassium chloride for UV regions).

- Method:

- Fill a cuvette with the solution and place it in the spectrometer.

- Measure the absorbance at a wavelength well below the cut-off where the sample should absorb all light (e.g., 200 nm for KCl).

- Analysis: A measured absorbance well below the very high value expected for an effectively opaque solution (e.g., 3 AU) indicates stray light, because stray light that reaches the detector is registered as transmitted light [2].
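The effect described in the analysis step can be illustrated with a simple stray light model. The sketch assumes that a constant fraction `s` of the incident intensity reaches the detector as stray light in both the blank and the sample measurement and is not absorbed by the sample, so the apparent transmittance is (T + s) / (1 + s). The function name and the 0.1% stray light level are hypothetical.

```python
import math


def apparent_absorbance(true_absorbance, stray_fraction):
    """Absorbance the instrument reports when a fraction of unabsorbed
    stray light is added to both the sample and the blank signal."""
    true_t = 10.0 ** (-true_absorbance)
    apparent_t = (true_t + stray_fraction) / (1.0 + stray_fraction)
    return -math.log10(apparent_t)


# With 0.1% stray light, low absorbances are nearly unaffected while
# high absorbances flatten toward a ceiling near 3 AU.
for a_true in (0.5, 1.0, 2.0, 3.0, 4.0):
    print(a_true, round(apparent_absorbance(a_true, 0.001), 3))
```

Under this model the reported absorbance can never exceed -log10(s / (1 + s)), which is why a strongly absorbing cut-off solution reveals the stray light level of the instrument.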

AI and Machine Learning in Spectral Interpretation: FAQs

FAQ 1: How can AI directly help me interpret complex spectra?

AI and ML models, particularly deep learning frameworks, are trained on vast datasets of spectral information. They can deconvolute overlapping signals, identify subtle patterns invisible to the human eye, and directly predict molecular structures or local chemical environments from spectral data [36]. For instance, one AI model for interpreting 31P-NMR spectra achieved a 77.69% Top-5 accuracy in predicting the local environment around a phosphorus atom, outperforming expert chemists by 25% in assignment tasks [39].

FAQ 2: My data is noisy. Can AI help with this?

Yes. A primary application of ML is in the preprocessing of spectral data. ML-powered search engines and analysis frameworks integrate sophisticated algorithms to filter noise, perform baseline correction, and accurately detect peaks in high-dimensional data, such as from mass spectrometry, leading to more reliable classification and biomarker screening [40] [41].

FAQ 3: I have a large archive of old spectra. Is this data useful for AI?

Absolutely. This is a powerful application of ML. Machine learning can be used to create search engines that "re-interrogate" tera-scale archives of existing spectral data (e.g., HRMS) to discover new reactions or compounds that were overlooked in initial manual analyses. This approach, termed "experimentation in the past," provides a cost-efficient and green alternative to new experiments [41].

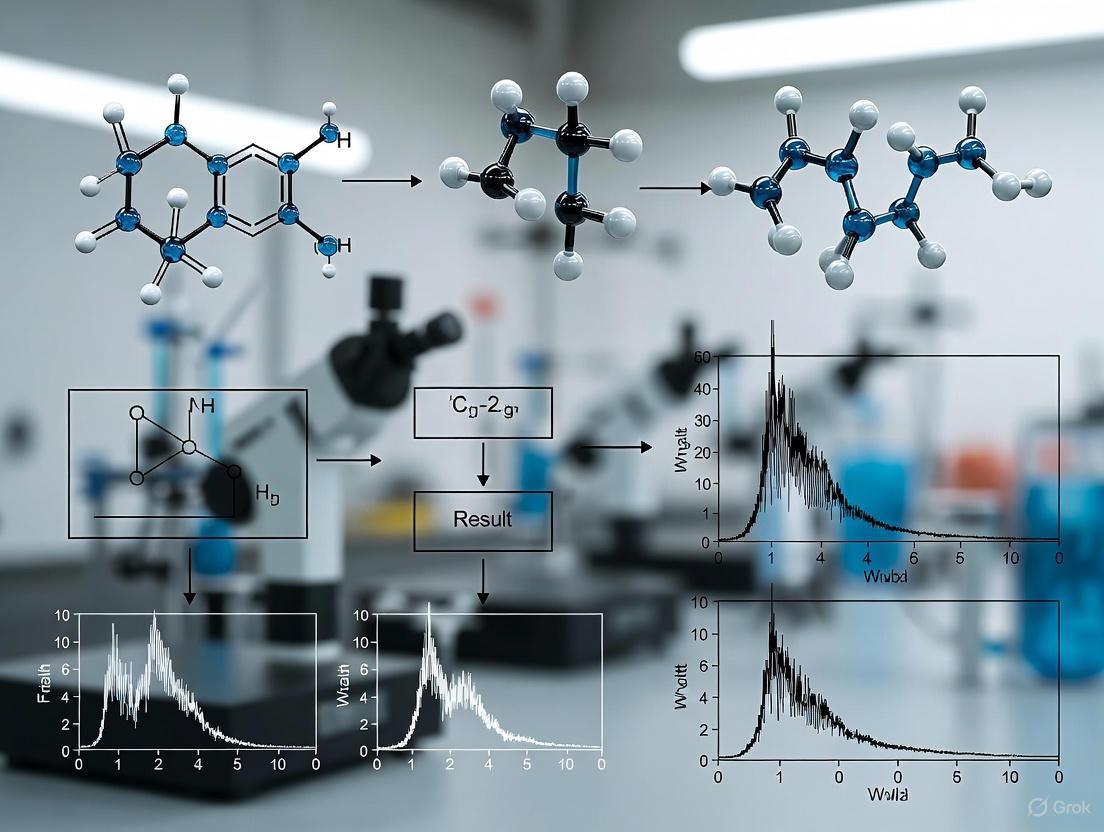

FAQ 4: What is the basic workflow for an ML-powered spectral analysis?

The following diagram illustrates a generalized workflow for machine learning-enhanced spectral interpretation, integrating concepts from cited research on MS and NMR [39] [40] [41]:

FAQ 5: Will AI replace the need for fundamental troubleshooting and calibration?

No. AI models are powerful supplements, not replacements, for sound instrument maintenance. The principle of "garbage in, garbage out" still applies. AI models trained on poor-quality, uncalibrated data will produce unreliable predictions. Regular calibration and sample preparation best practices remain the foundational step for ensuring accurate analysis, with AI acting as a powerful tool on top of this foundation [19] [36].

The Scientist's Toolkit: Essential Research Reagents and Materials

The following table details key materials required for maintaining spectrometer accuracy and conducting AI-enhanced studies.

| Item | Function | Application Notes |

|---|---|---|

| Certified Reference Materials (CRMs) [19] | Calibrating wavelength and photometric scales; verifying instrument performance. | Use holmium oxide solution for UV-Vis wavelength checks. Use neutral density filters for photometric linearity. |

| High-Purity Solvents [37] | Serving as the blank solvent; dissolving samples without introducing interference. | Ensure solvent is spectrophotometric grade and free from contaminants that absorb in your wavelength range. |

| Quartz Cuvettes (UV-VIS) [42] | Holding liquid samples for measurement in UV and visible light ranges. | Ensure pathlength is correct and cuvettes are clean. Not all plastic cuvettes are suitable for UV light. |

| Stray Light Filters [2] [37] | Blocking specific wavelengths to assess and mitigate stray light interference. | Useful for diagnostic tests and improving measurement accuracy at high absorbance values. |

| Curated Spectral Datasets [39] [36] | Training and validating AI/ML models for spectral prediction and interpretation. | These can be proprietary, published by researchers, or generated synthetically to augment real data. |


Troubleshooting Guides

Guide 1: Resolving Inaccurate Concentration Analysis in mAbs

Problem: Reported protein concentration or aggregation levels from the A-TEEM spectrometer do not match expected values or results from orthogonal methods.

Solution: This issue often stems from sample preparation errors, instrument calibration drift, or interference from sample matrix components.

Step 1: Verify Sample Integrity and Preparation

- Check the buffer composition against the method protocol. Even small changes in pH or ionic strength can affect fluorescence and absorbance.

- Ensure the monoclonal antibody (mAb) sample has been properly stored and is not subjected to multiple freeze-thaw cycles, which can induce aggregation.

- Confirm that the sample is within the ideal concentration range for the instrument's detection limits. Prepare a dilution series to test for linearity.

Step 2: Perform Instrument Calibration and Qualification

- Use certified reference materials (CRMs) to verify the accuracy of the absorbance and fluorescence wavelength scales [43].

- Run a control sample of a known, stable mAb to monitor the spectrometer's performance. A deviation in the control sample's results indicates potential instrument drift [43].

- If deviations persist after standard calibration, consider performing a Type Standardization. This process fine-tunes the calibration using a sample similar in composition to your unknown material to correct for matrix effects [43].

Step 3: Investigate and Mitigate Matrix Effects

- Compare the A-TEEM results of the mAb in its standard buffer to the results in the experimental buffer. A significant shift suggests matrix interference.

- If using an intrinsic fluorescence method, try to eliminate or reduce the concentration of excipients that absorb or fluoresce in the same spectral region as tryptophan and tyrosine residues.

Guide 2: Addressing High Noise or Poor Signal-to-Noise Ratio in EEMs

Problem: The resulting Excitation-Emission Matrix (EEM) is noisy, making it difficult to distinguish spectral features or perform accurate data analysis.

Solution: High noise typically results from low signal strength, light scattering, or environmental factors.

Step 1: Optimize Signal Strength

- For low concentration samples: Increase the integration time to collect more photons. Use a higher sample concentration if possible.

- For high concentration samples: If the signal is saturated, it can create artifacts and noise. Dilute the sample to bring it within the instrument's optimal dynamic range.

Step 2: Minimize Scattering Effects

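- Centrifuge or filter samples to remove particulate matter, which increases elastic (Rayleigh and Tyndall) light scattering. Raman scattering originates from the solvent itself and is addressed by blank subtraction or software correction rather than by filtration.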

- Ensure the cuvette is clean and free of scratches or fingerprints.

- Use the instrument software's scattering correction algorithms during data processing.
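
To illustrate what a scattering correction does, the sketch below masks the first- and second-order Rayleigh bands in an EEM, where emission wavelength is close to the excitation wavelength or to twice that value. It is a simplified stand-in, not the algorithm used by any particular instrument software: the 10 nm half-width is an assumed value, and real packages usually interpolate across the masked region instead of leaving gaps.

```python
def mask_rayleigh(eem, ex_nm, em_nm, half_width_nm=10.0):
    """Return a copy of the EEM with Rayleigh scatter bands set to None.

    eem[i][j] is the intensity at excitation ex_nm[i] and emission em_nm[j].
    """
    masked = []
    for i, ex in enumerate(ex_nm):
        row = []
        for j, em in enumerate(em_nm):
            first_order = abs(em - ex) <= half_width_nm
            second_order = abs(em - 2 * ex) <= half_width_nm
            row.append(None if (first_order or second_order) else eem[i][j])
        masked.append(row)
    return masked


# Tiny illustrative grid with uniform intensity.
ex_nm = [250, 280]
em_nm = [270, 280, 290, 350, 500]
eem = [[1.0] * len(em_nm) for _ in ex_nm]

for ex, row in zip(ex_nm, mask_rayleigh(eem, ex_nm, em_nm)):
    print(ex, row)
```

Masked cells should be excluded from, or interpolated before, any downstream multivariate analysis.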

Step 3: Control the Environment

- Allow the spectrometer lamp to warm up for the manufacturer's recommended time to achieve stable output.

- Ensure the instrument is located in a stable environment away from vibrations and significant temperature fluctuations.

Frequently Asked Questions (FAQs)

FAQ 1: Why is a Type Standardization sometimes necessary for my A-TEEM analyzer even after a standard calibration with CRMs?

Deviations can occur even after calibration with certified reference materials (CRMs) for several reasons. Complex biological matrices can deviate strongly from the calibration matrix. Furthermore, many CRMs are manufactured synthetically and may not perfectly correspond to the composition or physical structure of your actual mAb sample. Type Standardization acts as a fine-tuning step, applied just before analyzing one or more samples of a similar type (e.g., a specific mAb class), to correct for these subtle, matrix-specific inaccuracies [43].
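
As a rough illustration of the idea, a Type Standardization can be thought of as a correction derived from a well-characterized sample of the same type. The sketch below assumes the simplest possible form, a single-point multiplicative factor; actual vendor implementations may use different or more elaborate corrections, and the function names and numbers here are hypothetical.

```python
def type_correction_factor(reference_value, measured_reference):
    """Ratio of the accepted value to the instrument reading for the type standard."""
    if measured_reference <= 0:
        raise ValueError("measured reference value must be positive")
    return reference_value / measured_reference


def apply_type_correction(measured_values, factor):
    """Scale readings from similar samples by the type-specific factor."""
    return [value * factor for value in measured_values]


# Illustrative numbers: a type standard accepted at 10.0 mg/mL reads 9.6 mg/mL.
factor = type_correction_factor(reference_value=10.0, measured_reference=9.6)
corrected = apply_type_correction([9.5, 9.7], factor)
print(f"factor={factor:.4f}, corrected={[round(v, 3) for v in corrected]}")
```

Because the factor is matrix-specific, it should only be applied to samples closely matching the type standard.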

FAQ 2: What are the ALCOA principles, and why are they critical for data integrity in spectrometer-based research?

ALCOA is a framework defining the key characteristics of data integrity for regulatory compliance. It stands for:

- Attributable: Data must clearly show who acquired it and when.

- Legible: Data must be permanent and readable.

- Contemporaneous: Data must be recorded at the time the work is performed.

- Original: The first or source capture of the data must be preserved.

- Accurate: Data must be free from errors [44].

Adherence to ALCOA principles is non-negotiable in biopharmaceutical quality control. For A-TEEM data, this means ensuring electronic records have secure audit trails, proper user management, and that data backup procedures are in place to protect the original spectral files [44].
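
One small piece of this can be sketched in code: recording who acquired a spectral file and when, together with a checksum that later reveals any change to the original. This is only an illustration using Python's standard library. The field names, operator, and instrument identifier are hypothetical, and a script like this does not replace a validated system with a secure audit trail.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_acquisition_record(raw_bytes, operator, instrument_id):
    """Build a metadata record for one raw spectral file."""
    return {
        "operator": operator,                                    # Attributable
        "instrument_id": instrument_id,
        "acquired_utc": datetime.now(timezone.utc).isoformat(),  # Contemporaneous
        "sha256": hashlib.sha256(raw_bytes).hexdigest(),         # Original
    }


def is_unmodified(raw_bytes, record):
    """True if the file content still matches the recorded checksum."""
    return hashlib.sha256(raw_bytes).hexdigest() == record["sha256"]


# Illustrative file content and identifiers.
raw = b"ex_nm,em_nm,intensity\n280,350,1234.5\n"
record = make_acquisition_record(raw, operator="jdoe", instrument_id="ATEEM-01")
print(json.dumps(record, indent=2))
print("unmodified:", is_unmodified(raw, record))
```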

FAQ 3: Our brand guidelines use specific colors. How can I ensure the diagrams in my reports are accessible to all colleagues, including those with color vision deficiency (CVD)?

Designing for accessibility is crucial for clear scientific communication. The best practice is to use a combination of high-contrast colors and supplemental design elements. Avoid problematic color combinations like red/green, green/brown, and blue/purple, which are difficult for individuals with the most common types of CVD to distinguish [45] [46]. Instead, use a palette with strong contrasting colors, such as blue and orange, which are generally discernible. Furthermore, do not rely on color alone. Use patterns, textures, and symbols (like different shapes or line styles) in your charts and diagrams to convey information, ensuring that the message is perceivable even without color [45] [46].

Protocol: A-TEEM for Characterizing Monoclonal Antibody Aggregation

Objective: To utilize Absorbance-Transmission and Fluorescence Excitation-Emission Matrix (A-TEEM) spectroscopy to detect and quantify aggregation levels in a formulated monoclonal antibody sample.

Materials:

- Purified monoclonal antibody (mAb) sample.

- Formulation buffer (e.g., Histidine buffer).

- Certified Reference Material (CRM) for spectrometer validation.

- A-TEEM spectrometer.

- Quartz cuvette (appropriate pathlength).

- Centrifugal filters or centrifuge.

Methodology:

- Instrument Warm-up and Standardization: Power on the A-TEEM spectrometer and allow the xenon flash lamp to stabilize for the recommended time (typically 15-30 minutes). Perform a wavelength and intensity calibration using the CRM as per the manufacturer's instructions [43].

- Sample Preparation:

  - Dilute the mAb sample to a concentration within the linear range for both absorbance and fluorescence (e.g., 0.1-0.5 mg/mL) using the formulation buffer.

  - To remove insoluble particulates and large pre-existing aggregates that would scatter light, centrifuge the diluted sample at ~10,000-15,000 x g for 5-10 minutes.

- Data Acquisition:

  - Place the formulation buffer in the cuvette and acquire a blank EEM.

  - Load the clarified mAb sample and acquire the A-TEEM data. Standard parameters might include an excitation range of 240-300 nm and an emission range of 300-450 nm to capture the intrinsic fluorescence of tryptophan and tyrosine residues.

- Data Analysis:

  - Subtract the blank EEM from the sample EEM.

  - Use the unique fluorescence signature (or "fingerprint") of the native mAb as the reference for comparison. Aggregates will often exhibit a shift in the fluorescence peak maximum and/or a change in the overall EEM landscape due to altered microenvironments of fluorophores.

  - Apply multivariate analysis or the manufacturer's software algorithms to deconvolute the spectra and quantify the relative contribution of the aggregated species.
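
The first two data analysis steps can be sketched as follows: subtract the blank EEM, then locate the peak maximum and compare it with the native reference. The 3 x 3 grids are made-up numbers chosen to show a 10 nm red-shift, and the helper names are hypothetical. Real analyses work on full-resolution EEMs and typically rely on multivariate methods instead of a single peak position.

```python
def subtract_blank(sample, blank):
    """Element-wise subtraction of the blank EEM from the sample EEM."""
    return [[s - b for s, b in zip(s_row, b_row)]
            for s_row, b_row in zip(sample, blank)]


def peak_position(eem, ex_nm, em_nm):
    """Return (excitation, emission, intensity) at the EEM maximum."""
    best = None
    for i, row in enumerate(eem):
        for j, value in enumerate(row):
            if best is None or value > best[2]:
                best = (ex_nm[i], em_nm[j], value)
    return best


# Illustrative grids: rows are excitation, columns are emission wavelengths.
ex_nm = [270, 280, 290]
em_nm = [330, 340, 350]
blank = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
native = [[5, 9, 6], [8, 21, 12], [4, 10, 7]]
stressed = [[5, 8, 7], [7, 15, 19], [4, 9, 11]]

native_peak = peak_position(subtract_blank(native, blank), ex_nm, em_nm)
stressed_peak = peak_position(subtract_blank(stressed, blank), ex_nm, em_nm)
shift_nm = stressed_peak[1] - native_peak[1]
print("native peak:", native_peak)
print("stressed peak:", stressed_peak)
print("emission shift (nm):", shift_nm)
```

A positive emission shift relative to the native reference is consistent with the red-shift described for aggregated or unfolded species, but it should be confirmed with an orthogonal method.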

Data Presentation

Table 1: Common A-TEEM Spectral Features for mAb Quality Attributes

| Quality Attribute | Absorbance Signature | Fluorescence EEM Signature | Key Interpretation |

|---|---|---|---|

| Native Monomer | Peak at ~280 nm | Distinct peak (e.g., Ex/Em 280/350 nm) | Characteristic of folded, intact mAb structure. |

| Soluble Aggregates | Increased scattering at lower wavelengths | Peak shift to longer wavelengths (red-shift); Altered peak shape | Indicates protein unfolding and non-covalent clustering. |

| Chemical Degradation (e.g., Oxidation) | Possible subtle shift in A280 profile | Change in peak intensity; Emergence of new peaks | Reflects modification of aromatic residues (Trp, Tyr). |

| Fragment Formation | Unchanged (if no mass loss) | Altered spectral profile or reduced intensity | Suggests cleavage of the mAb, potentially losing fluorophores. |

Table 2: Research Reagent Solutions for mAb A-TEEM Analysis

| Reagent / Material | Function | Key Considerations |

|---|---|---|

| Certified Reference Materials (CRMs) | Calibrate spectrometer wavelength and intensity scales to ensure analytical accuracy [43]. | Must be traceable to national standards. Essential for data credibility and troubleshooting deviations. |

| Formulation Buffers | Provide a stable, consistent chemical environment for the mAb, mimicking drug product conditions. | Buffer composition (pH, salts) can significantly affect spectral results; use high-purity reagents. |

| Quartz Cuvettes | Hold liquid sample for analysis in the spectrometer. | Must be of high optical quality and clean to avoid light scattering and absorption artifacts. |

| Centrifugal Filters | Clarify samples by removing large aggregates and particulates prior to analysis. | Prevents scattering interference and protects the instrument's flow cell or cuvette. |

Workflow and Pathway Visualizations

[Figure: A-TEEM mAb Analysis Workflow]

[Figure: mAb Spectral Signature Pathway]

[Figure: Data Integrity (ALCOA) Workflow]

A Step-by-Step Diagnostic and Resolution Protocol

Troubleshooting Guide: Unstable or Drifting Readings

| Symptom | Possible Causes | Recommended Solutions | Experimental Verification Protocol |

|---|---|---|---|

| Readings are unstable or drift over time | 1. Instrument lamp not stabilized [9]. 2. Sample concentration too high (absorbance > 1.5 AU) [9]. 3. Air bubbles in the sample [9]. 4. Improper sample mixing [9]. 5. Environmental vibrations or temperature fluctuations [9]. | 1. Allow lamp to warm up for 15-30 minutes before use [9]. 2. Dilute sample to bring absorbance into optimal range (0.1-1.0 AU) [9]. 3. Gently tap cuvette to dislodge bubbles [9]. 4. Mix sample thoroughly before measurement [9]. 5. Place instrument on a stable, level surface away from drafts [9]. | Protocol for RSD Validation: 1. Prepare a standard solution of known concentration (e.g., 0.1 M Copper Sulfate) [47]. 2. After 30-minute lamp warm-up, take 10 consecutive absorbance readings [9]. 3. Calculate the Relative Standard Deviation (RSD). 4. RSD < 1% indicates acceptable stability. |

| Instrument fails to "Zero" (baseline) | 1. Sample compartment lid not closed [9]. 2. High humidity affecting internal components [9]. | 1. Ensure compartment lid is securely shut [9]. 2. Allow instrument to acclimate; replace desiccant packs if present [9]. | Protocol: Verify dark current reading with compartment closed and no cuvette. |

| Negative Absorbance Values | 1. Blank solution is "dirtier" than the sample [9]. 2. Using different cuvettes for blank and sample [9]. 3. Sample absorbance is near instrument noise level [9]. | 1. Re-clean cuvette and prepare a fresh blank [9]. 2. Use the exact same cuvette for both blank and sample measurements [9]. 3. Concentrate sample if possible [9]. | Protocol: Use an optically matched pair of cuvettes and confirm blank solution purity via HPLC. |
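
The RSD validation protocol in the table above reduces to a short calculation: RSD (%) = 100 x standard deviation / mean of the replicate readings. The sketch below uses the sample standard deviation and made-up absorbance values; the function name is illustrative, and the 1% limit is the acceptance criterion quoted in the table.

```python
from statistics import mean, stdev


def relative_std_dev_percent(readings):
    """Relative standard deviation in percent (sample standard deviation)."""
    return 100.0 * stdev(readings) / abs(mean(readings))


# Ten consecutive absorbance readings (illustrative values).
stable_readings = [0.501, 0.502, 0.500, 0.499, 0.501,
                   0.500, 0.502, 0.500, 0.499, 0.501]
drifting_readings = [0.50, 0.51, 0.52, 0.53, 0.54,
                     0.55, 0.56, 0.57, 0.58, 0.59]

for name, readings in [("stable", stable_readings), ("drifting", drifting_readings)]:
    rsd = relative_std_dev_percent(readings)
    verdict = "acceptable" if rsd < 1.0 else "investigate"
    print(f"{name}: RSD = {rsd:.2f}% -> {verdict}")
```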

Troubleshooting Guide: Blank Screen & Calibration Failures

| Symptom | Possible Causes | Recommended Solutions | Underlying Principle |

|---|---|---|---|

| Blank screen or failure to calibrate | 1. Insufficient light reaching the detector [47]. 2. Weak or burned-out light source [47]. 3. Incorrect cuvette type blocking light [47]. | 1. Check and clear the light path; ensure cuvette is correctly inserted [47]. 2. Switch to uncalibrated mode to check light source spectrum; replace if output is flat [47]. 3. Use quartz cuvettes for UV measurements [47]. | Calibration requires a sufficient difference between light and dark signals. A weak source or blocked path prevents this [47]. |

| Cannot set to 100% Transmittance (fails to blank) | 1. Light source is near end of life [9]. 2. Optics are dirty or misaligned [9]. | 1. Check lamp usage hours; replace if necessary [9]. 2. Instrument likely requires professional servicing [9]. | |

| "Absorbance too high" errors or noisy data | Sample is too concentrated [47]. | Dilute samples so absorbance falls between 0.1 and 1.0 AU [47]. | Excessive absorbance reduces the light reaching the detector, lowering the signal-to-noise ratio [47]. |
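
The principle behind the dilution advice follows from the definition A = -log10(T): at 1.0 AU only 10% of the light reaches the detector, and at 2.0 AU only 1%. The sketch below converts absorbance to percent transmittance and estimates a fold-dilution to reach a target absorbance. The target of 0.5 AU and the function names are assumptions, and the estimate presumes Beer-Lambert linearity, so a reading taken above the linear range will tend to underestimate the dilution required.

```python
def percent_transmittance(absorbance):
    """Percent of incident light transmitted, from A = -log10(T)."""
    return 100.0 * 10 ** (-absorbance)


def dilution_factor(measured_absorbance, target_absorbance=0.5):
    """Estimated fold-dilution to reach the target absorbance (never below 1)."""
    if target_absorbance <= 0:
        raise ValueError("target absorbance must be positive")
    return max(1.0, measured_absorbance / target_absorbance)


for a in (0.1, 1.0, 2.0):
    print(f"A = {a:.1f} AU -> {percent_transmittance(a):.1f}% T")

print("fold-dilution for a 2.0 AU reading:", dilution_factor(2.0))
```

After diluting, re-measure and confirm the reading falls inside the 0.1 to 1.0 AU window before applying the dilution factor to the result.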

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function & Rationale |

|---|---|

| Quartz Cuvettes | Essential for ultraviolet (UV) range measurements (typically below 340 nm) because quartz transmits UV light, whereas standard plastic and glass absorb strongly in this range [9] [47]. |

| Optically Matched Cuvettes | A matched pair ensures nearly identical light paths, critical for preventing errors like negative absorbance when using different cuvettes for blank and sample [9]. |

| Lint-Free Wipes | For cleaning cuvette optical surfaces without introducing scratches or fibers that can scatter light and cause inaccurate readings [9]. |

| High-Purity Solvents | Used for preparing blanks and samples. Impurities can absorb light and lead to significant measurement errors, especially in the UV region [47] [48]. |

| Holmium Oxide Filter | A wavelength accuracy standard used to validate the spectrophotometer's wavelength scale, ensuring spectral data reliability [2]. |

| Neutral Density Filters | Solid filters used for checking the photometric linearity of the instrument across different absorbance values [2]. |
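
A wavelength accuracy check with a holmium oxide filter amounts to comparing measured peak positions against the certified ones. The sketch below shows that comparison. The peak values are illustrative placeholders and must be replaced with those on the certificate supplied with your filter, and the ±1.0 nm tolerance is an assumed acceptance limit, not one stated in the source.

```python
def wavelength_errors(certified_nm, measured_nm):
    """Signed error (measured minus certified) for each reference peak."""
    return [m - c for c, m in zip(certified_nm, measured_nm)]


def passes_wavelength_check(certified_nm, measured_nm, tolerance_nm=1.0):
    """True if every peak lies within the tolerance of its certified value."""
    errors = wavelength_errors(certified_nm, measured_nm)
    return all(abs(error) <= tolerance_nm for error in errors)


# Placeholder values only; use the certificate values for your own filter.
certified_nm = [279.4, 360.9, 536.2]
measured_ok = [279.6, 360.7, 536.6]
measured_shifted = [281.4, 362.9, 538.2]

print("errors (nm):", [round(e, 2) for e in wavelength_errors(certified_nm, measured_ok)])
print("within tolerance:", passes_wavelength_check(certified_nm, measured_ok))
print("shifted scale within tolerance:", passes_wavelength_check(certified_nm, measured_shifted))
```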

Systematic Troubleshooting Workflow

The following diagram outlines a logical pathway for diagnosing and resolving common spectrophotometer issues.

Frequently Asked Questions (FAQs)

Q1: My readings are consistently unstable, even with a blank solvent. I've already allowed the lamp to warm up. What is the next step?

A1: Environmental interference is a likely culprit. Ensure the spectrophotometer is on a sturdy, level bench away from sources of vibration (e.g., centrifuges, heavy foot traffic) and strong drafts. Temperature fluctuations can also cause drift. If the problem persists, the lamp may be failing and require replacement [9] [49].

Q2: Why is it critical to use the same cuvette for both the blank and the sample measurement?

A2: Even slight differences in the optical properties, thickness, or cleanliness between two cuvettes can introduce significant error. Using the same cuvette ensures that the instrument is referencing the exact same light path for both the blank and the sample, eliminating this variable and preventing artifacts like negative absorbance values [9].