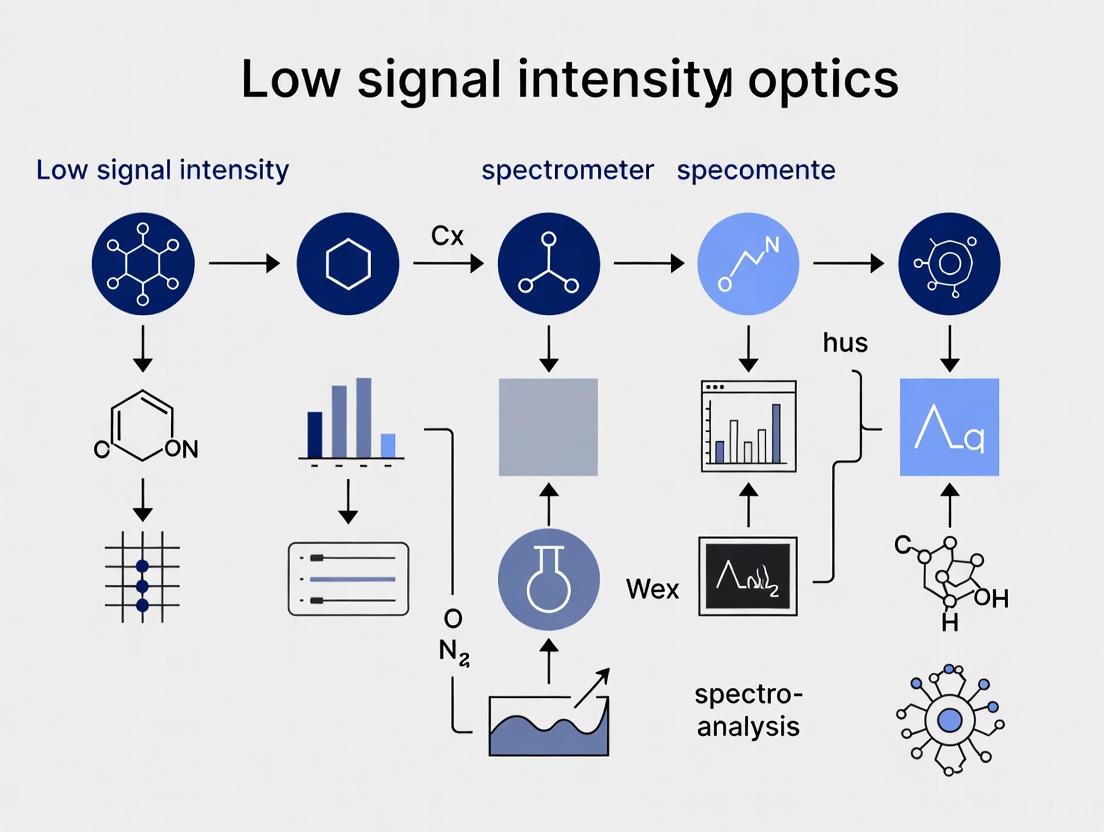

Restoring Spectrometer Performance: A Comprehensive Guide to Diagnosing and Fixing Low Signal Intensity

Abstract

This article provides a systematic framework for researchers, scientists, and drug development professionals to address the pervasive challenge of low signal intensity in spectrometer optics. Covering foundational principles, methodological optimizations, step-by-step troubleshooting, and validation techniques, the guide synthesizes current best practices to restore instrument sensitivity, ensure data integrity, and maintain robust analytical performance in critical applications from trace analysis to quality control.

Understanding Spectrometer Sensitivity: Core Principles and Component Roles

In optical spectrometry, the Signal-to-Noise Ratio (SNR) is the fundamental metric for quantifying the sensitivity of an instrument. It expresses the level of the desired signal relative to the unwanted background noise of the sensor. A high SNR is critical for distinguishing weak spectral features from system noise, directly impacting the accuracy and detection limits of your measurements [1].

The performance of your spectrometer is not defined by signal strength alone. The overall sensitivity is determined by a combination of factors including optical design, light source intensity, collection efficiency, and detector technology. Therefore, the SNR provides a standardized, holistic measure to properly compare system performance under controlled conditions [2].

Core Principles of SNR Calculation

Standard SNR Formulas

The method for calculating SNR can vary depending on your instrument type and detector. The table below summarizes the two most common methodologies.

Table: Common SNR Calculation Methods in Spectrometry

| Method Name | Formula | Application | Key Components |

|---|---|---|---|

| FSD (First Standard Deviation) or SQRT Method [2] | \( SNR = \frac{\text{Peak Signal} - \text{Background Signal}}{\sqrt{\text{Background Signal}}} \) | Ideal for photon counting detectors. Assumes noise follows Poisson statistics. | Peak Signal (e.g., Raman peak intensity); Background Signal (from a region with no signal, e.g., 450 nm). |

| RMS (Root Mean Square) Method [2] | \( SNR = \frac{\text{Peak Signal} - \text{Background Signal}}{\sigma_{\rho}} \), where \( \sigma_{\rho} = \sqrt{\frac{\sum_{i=1}^{n}(S_i - \bar{S})^2}{n}} \) | Best for analog detectors. More generalized approach. | Peak Signal; Background Signal; \( \sigma_{\rho} \): standard deviation of background intensity from multiple time-based measurements. |
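The two formulas in the table can be sketched in Python as follows. This is a minimal illustration rather than a vendor routine: the function names `snr_fsd` and `snr_rms` are hypothetical, and the RMS version assumes the background signal in the numerator is the mean of the repeated background readings.

```python
import math


def snr_fsd(peak, background):
    """FSD (square-root) method: noise is estimated as sqrt(background)."""
    if background <= 0:
        raise ValueError("background must be positive for the FSD method")
    return (peak - background) / math.sqrt(background)


def snr_rms(peak, background_readings):
    """RMS method: noise is the standard deviation (divide-by-n form)
    of repeated background readings taken over time."""
    n = len(background_readings)
    if n == 0:
        raise ValueError("at least one background reading is required")
    mean_bg = sum(background_readings) / n  # assumption: used as the background signal
    sigma = math.sqrt(sum((s - mean_bg) ** 2 for s in background_readings) / n)
    if sigma == 0:
        raise ValueError("background readings show no variation")
    return (peak - mean_bg) / sigma


# Illustrative numbers only, not measured data.
print(snr_fsd(10100, 100))
print(snr_rms(1100, [98, 102, 98, 102]))
```

The FSD function needs only two numbers, which is why it suits photon-counting detectors; the RMS function needs a series of background readings but makes no assumption about the noise statistics.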

Defining Detection Limits

The Limit of Detection (LOD) for an analyte is conventionally defined as the lowest concentration at which the SNR is greater than or equal to 3. This standard, set by organizations like the International Union of Pure and Applied Chemistry (IUPAC), provides confidence that an observed spectral feature is genuine signal and not random noise [3].
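As a rough illustration of the SNR ≥ 3 criterion, the sketch below extrapolates an LOD from a single measurement. It assumes the SNR scales linearly with concentration in the range of interest, which should be verified with a calibration series; the function name `estimate_lod` is hypothetical.

```python
def estimate_lod(concentration, snr, threshold=3.0):
    """Extrapolate the concentration at which SNR would equal `threshold`.

    Assumption: SNR is proportional to concentration (linear response).
    """
    if concentration <= 0 or snr <= 0:
        raise ValueError("concentration and snr must be positive")
    return threshold * concentration / snr


# Illustrative: a 10 ppm standard measured with SNR = 60.
print(estimate_lod(10.0, 60.0))  # same units as the input concentration
```

An estimate obtained this way is only a starting point; confirming it requires measuring a sample near the predicted concentration.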

Troubleshooting Guide: Resolving Low SNR Issues

Use this structured workflow to systematically diagnose and resolve common issues that lead to poor SNR in your spectroscopic experiments.

Detailed Troubleshooting Steps

- Check Light Source & Path: A weak or failing light source is a primary cause of low signal. Check the lamp output in uncalibrated mode; a flat graph in certain regions indicates a faulty source that needs replacement [4] [5]. Ensure the optical path is completely clear by inspecting and cleaning any windows or lenses in the system [6].

- Inspect Sample & Cuvette: Sample preparation is critical. Overly concentrated samples can lead to absorbance values above 1.0, resulting in noisy, unreliable data. Dilute samples to keep absorbance between 0.1 and 1.0 for optimal results [4]. Use the correct cuvette material (e.g., quartz for UV measurements, as standard plastic blocks UV light) and ensure it is clean, scratch-free, and properly aligned in the holder [4] [5].

- Verify Instrument Configuration:

- Slit Width: Wider slits allow more light to reach the detector, significantly boosting the signal. Doubling the slit width from 5 nm to 10 nm can increase the SNR by a factor of more than 3, though this trades off with spectral resolution [2].

- Integration Time: Increasing the time the detector collects light at each wavelength step will strengthen the signal. A longer integration time generally improves SNR, but can also lead to increased detector noise if the system is not cooled [2] [7].

- Measure Noise Sources:

- Dark Noise: This is inherent thermal noise from the spectrometer's electronics, present even in complete darkness. It can be reduced by cooling the detector and using signal averaging techniques [1] [7].

- Electronic Noise: Ensure the instrument is properly grounded and isolated from sources of electromagnetic interference (EMI) [1].
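For the sample check in the list above, the following sketch estimates how much to dilute a sample whose absorbance is too high. It assumes absorbance is proportional to concentration (Beer-Lambert behavior); the function names and the default target of 0.5 are illustrative choices, not values from the cited sources.

```python
def in_recommended_range(absorbance, low=0.1, high=1.0):
    """True when the absorbance lies in the recommended 0.1 to 1.0 window."""
    return low <= absorbance <= high


def dilution_factor(measured_absorbance, target_absorbance=0.5):
    """Fold-dilution needed to bring absorbance down to the target.

    Assumption: absorbance scales linearly with concentration.
    Returns 1.0 (no dilution) when the sample is already at or below target.
    """
    if measured_absorbance <= 0 or target_absorbance <= 0:
        raise ValueError("absorbance values must be positive")
    return max(1.0, measured_absorbance / target_absorbance)


print(in_recommended_range(1.8))  # outside the window
print(dilution_factor(2.0))       # fold-dilution toward the 0.5 target
```

Because readings well above 1.0 are themselves noisy, treat the computed factor as a first guess and re-measure after diluting.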

Experimental Protocol: The Water Raman SNR Test

The water Raman test is an industry-standard method for determining the relative sensitivity of a fluorometer. It uses a stable, universally available sample—ultrapure water—to provide a robust comparison between instruments [2].

Materials and Equipment

Table: Research Reagent Solutions for Water Raman Test

| Item | Function / Specification |

|---|---|

| Ultrapure Water | Stable, non-fluorescent sample that produces a weak Raman signal, ideal for sensitivity testing [2]. |

| Spectrofluorometer | Must be equipped with a UV-capable light source and detector. A PMT like the Hamamatsu R928P is common [2]. |

| Quartz Cuvettes | Required for UV transmission at the 350 nm excitation wavelength. Plastic cuvettes are not suitable [4]. |

| 5 nm Bandpass Slits | Standard slit size for this test. Using different slit widths will invalidate cross-instrument comparisons [2]. |

Step-by-Step Methodology

Instrument Setup:

- Configure the excitation wavelength to 350 nm.

- Set the emission scan range from 365 nm to 450 nm.

- Set the excitation and emission slit widths to 5 nm.

- Set the integration time to 1 second per wavelength step.

- Ensure no optical filters are in the path unless automatically deployed and documented [2].

Data Acquisition:

- Fill a quartz cuvette with ultrapure water and place it in the sample compartment.

- Run an emission scan to acquire the Raman spectrum of water. The peak will be observed at approximately 397 nm [2].

SNR Calculation (FSD Method):

- Peak Signal (S): Record the intensity value at the Raman peak (397 nm).

- Background Signal (D): Record the average intensity in a region with no Raman signal, typically at 450 nm.

- Apply the FSD formula: \( SNR = \frac{S - D}{\sqrt{D}} \) [2].

The workflow below summarizes the key steps and parameters for this standardized test.

Advanced SNR Enhancement Techniques

Signal Averaging

Averaging multiple spectral scans is one of the most effective ways to improve SNR. The SNR increases with the square root of the number of averaged scans. For example, averaging 100 scans will improve the SNR by a factor of 10 [7]. Modern spectrometers may offer High Speed Averaging Mode (HSAM), which performs this averaging in hardware, dramatically boosting the SNR per unit time for time-critical applications [7].
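The square-root relationship makes it easy to estimate how many scans a target SNR requires. The sketch below assumes the noise is random and uncorrelated between scans; the function names are hypothetical.

```python
import math


def averaging_gain(n_scans):
    """SNR improvement factor from averaging n_scans spectra."""
    if n_scans < 1:
        raise ValueError("n_scans must be at least 1")
    return math.sqrt(n_scans)


def scans_needed(current_snr, target_snr):
    """Smallest number of averaged scans expected to reach target_snr."""
    if current_snr <= 0 or target_snr <= 0:
        raise ValueError("SNR values must be positive")
    if target_snr <= current_snr:
        return 1
    return math.ceil((target_snr / current_snr) ** 2)


print(averaging_gain(100))    # factor gained from 100 scans
print(scans_needed(50, 150))  # scans to triple a single-scan SNR of 50
```

Since the cost grows with the square of the desired gain, tripling the SNR takes nine times the acquisition time, which is why averaging is usually combined with the optical fixes described earlier.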

Multi-Pixel Analysis

For Raman spectroscopy, moving from a single-pixel SNR calculation (using only the intensity of the center pixel of a band) to a multi-pixel method (using the band area or a fitted function) can significantly improve the reported SNR and lower the detection limit. Research on the SHERLOC instrument on the Mars Perseverance rover showed that multi-pixel methods reported a 1.2 to 2-fold larger SNR for the same Raman feature, allowing previously sub-threshold signals to be confidently detected [3].

Frequently Asked Questions (FAQs)

Q1: My spectrometer's datasheet lists an SNR of 1000:1, but I can't achieve this in practice. Why? Datasheet values are often measured under ideal conditions, such as a "dark measurement" (SNR dark), which uses the theoretical maximum signal (65535 for a 16-bit ADC) and the measured dark noise. In real-world measurements (SNR light), your signal strength will be lower, resulting in a lower, more realistic SNR [1].

Q2: Why do my results vary between measurements on the same sample? Temporal instability in the light source and detector can cause drift. For quantitative data, acquire all measurements on the same day and check a replicate sample at the beginning and end of your session. Also, ensure consistent sample presentation and cuvette positioning [8].

Q3: The vacuum pump on my OES spectrometer is making noise. Could this affect SNR? Yes, critically. A malfunctioning vacuum pump allows atmosphere into the optic chamber, which absorbs low-wavelength UV light. This causes a loss of intensity and incorrect values for elements like Carbon, Phosphorus, and Sulfur, severely degrading SNR and data accuracy [6].

Q4: How does detector cooling help improve SNR? Cooling the detector, typically a photomultiplier tube (PMT) or CCD, reduces dark shot noise. This is the thermal noise generated by the detector itself. By lowering the dark noise, the overall SNR is improved, which is especially important for weak signal detection and long integration times [2] [1].

FAQs: Understanding the Core Trade-off

1. What is the fundamental sensitivity-resolution trade-off in a spectrometer? The trade-off exists because higher spectral resolution typically requires narrower entrance slits or finer optical dispersion. This physically blocks more incoming light, reducing the total light throughput (luminosity) to the detector. Consequently, any gain in resolution comes at the cost of sensitivity, and vice versa. This relationship is often described by the resolution-luminosity product (E = RL), which remains constant for a given sensing area [9].

2. Are there spectrometer designs that overcome this trade-off? Yes, innovative optical designs are breaking this traditional compromise. Technologies like the High Throughput Virtual Slit (HTVS) can provide a 10-15x increase in optical throughput without degrading resolution by replacing the physical slit with beam reformatting technology [10]. Similarly, modern metasurface-based spectrometers using guided-mode resonance or quasi-Bound States in the Continuum (qBIC) can enhance photon collection, with some designs showing sensitivity more than ten times greater than conventional grating spectrometers while maintaining high resolution [11] [9].

3. For my application, when should I prioritize resolution over sensitivity? Prioritize resolution when your analysis depends on distinguishing closely spaced spectral peaks or identifying subtle spectral features. This is critical for techniques like:

- Raman spectroscopy: for better library matching and sample identification [10].

- Laser-Induced Breakdown Spectroscopy (LIBS) and Optical Emission Spectroscopy: for resolving atomic emission lines [12].

- Optical Coherence Tomography (OCT): where resolution directly impacts image clarity and depth sensitivity [13].

4. For my application, when should I prioritize sensitivity over resolution? Prioritize sensitivity when measuring weak signals or when speed is essential. This is common in:

- Fluorescence spectroscopy: where signal levels can be low [12].

- Low-light applications: such as measuring low-concentration analytes or working with light-sensitive samples [10] [9].

- High-speed process monitoring: where short integration times are necessary [10].

Troubleshooting Guides

Problem 1: Poor Signal-to-Noise Ratio (SNR) in High-Resolution Mode

Symptoms: Noisy, hard-to-interpret spectra with low peak intensities when the spectrometer is configured for high resolution.

Diagnosis and Solutions:

| Step | Action | Rationale & Additional Tips |

|---|---|---|

| 1 | Verify if your application truly requires the highest resolution setting. | If measuring broad spectral features, reducing resolution can dramatically boost signal. Consult your instrument manual for application-specific settings [12] [14]. |

| 2 | Increase the integration time to allow more light to be collected. | This is a direct way to improve SNR but reduces measurement speed. Be mindful of potential sample degradation with prolonged laser exposure [10]. |

| 3 | Evaluate your light source. Consider using a higher-power source or ensuring optimal illumination of the sample. | A brighter source directly increases signal. However, with high-throughput spectrometer designs, you may achieve good SNR even with lower-power sources, minimizing sample photodegradation [10]. |

| 4 | Check for and clean optical components. Dirty entrance slits, mirrors, or gratings can significantly reduce throughput [14]. | Use a soft cloth, lens paper, or cotton swabs with appropriate solvents. Regularly check and replace worn-out or damaged components [14]. |

| 5 | Utilize signal averaging. If repeated acquisitions are practical, collect and average several spectra of the same sample. | Averaging multiple scans can improve SNR by reducing random noise [10]. |

Problem 2: Loss of Spectral Peaks or Signal Intensity

Symptoms: Expected spectral peaks are missing, very weak, or disappear entirely during analysis.

Diagnosis and Solutions:

| Step | Action | Rationale & Additional Tips |

|---|---|---|

| 1 | Check the fundamental setup. Confirm the light source is on and stable, all fibers are connected, and the detector is active. | A stable spray in ESI-MS, for example, confirms the source is functioning [15]. |

| 2 | Inspect for optical blockages. Verify that the entrance slit and beam path are not obstructed. | Dust, dirt, or fingerprints on optical surfaces can severely interfere with light transmission [14]. |

| 3 | Investigate fluidic system issues (if applicable). For LC/MS systems, ensure pumps are properly primed and free of air bubbles. | A loss of prime in a pump can halt chromatography, preventing analytes from reaching the detector [15]. |

| 4 | Rule out detector saturation. If the signal is too strong, it can cause saturation and distort peaks, sometimes making them appear missing. | Reduce integration time or source power to check if peaks become visible [14]. |

| 5 | Ensure proper spectrometer alignment. Use a reference source (e.g., a mercury argon lamp) to verify peak positions and alignment [14]. | Perform routine alignment checks and use software tools or manual adjustments to correct any deviations [14]. |

Problem 3: Inability to Resolve Fine Spectral Features

Symptoms: Inability to distinguish between two closely spaced spectral peaks, leading to blurred or merged data.

Diagnosis and Solutions:

| Step | Action | Rationale & Additional Tips |

|---|---|---|

| 1 | Theoretically calculate the required resolution. Ensure your spectrometer's specified resolution is sufficient to resolve the peak separation in your sample. | Resolution (R) is defined as R=λ/Δλ, where Δλ is the minimum separable wavelength difference [12]. |

| 2 | Understand how your manufacturer specifies resolution. Prefer instruments with resolution specified by measured FWHM (Full Width at Half Maximum) rather than theoretical pixel resolution [12]. | "Pixel resolution" (range divided by pixel count) often significantly overstates achievable performance. Ask for measured FWHM data from a reference source [12]. |

| 3 | Use a narrower entrance slit if your instrument allows it. | A narrower slit improves resolution but directly reduces light throughput, re-engaging the trade-off [10] [12]. |

| 4 | Consider advanced spectrometer designs. | For critical applications, technologies like VPH (Volume Phase Holographic) gratings and HTVS are engineered to deliver high resolution without the full penalty of light loss [10]. |
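Step 1 of the table above can be worked through numerically. The sketch below computes the resolving power R = λ/Δλ needed for two peaks and compares a measured FWHM against their separation. The rule that the FWHM should not exceed the peak separation is a simplifying assumption for illustration, and the function names are hypothetical.

```python
def required_resolving_power(lambda1_nm, lambda2_nm):
    """R = lambda / delta_lambda, using the mean wavelength of the two peaks."""
    delta = abs(lambda1_nm - lambda2_nm)
    if delta == 0:
        raise ValueError("the two wavelengths must differ")
    return ((lambda1_nm + lambda2_nm) / 2.0) / delta


def can_resolve(fwhm_nm, lambda1_nm, lambda2_nm):
    """Rough check (assumption): peaks are separable if FWHM <= their spacing."""
    return fwhm_nm <= abs(lambda1_nm - lambda2_nm)


# Illustrative: two emission lines 1 nm apart near 500 nm.
print(required_resolving_power(500.0, 501.0))
print(can_resolve(0.8, 500.0, 501.0))
print(can_resolve(1.5, 500.0, 501.0))
```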

Protocol: Evaluating Spectrometer Throughput vs. Resolution Using a Standard Sample

This methodology is adapted from application notes on characterizing high-throughput spectrometers [10].

1. Objective: To quantitatively measure the signal intensity and resolution of a spectrometer at different configuration settings.

2. Materials and Reagents:

- Spectrometer system (e.g., Apex 785 with HTVS or equivalent) [10].

- Stable light source or standard sample (e.g., Paracetamol/Acetaminophen for Raman) [10].

- 100 mW, 785 nm laser diode (for Raman) [10].

- Raman fiber optic probe [10].

- Computer with spectral acquisition software.

3. Procedure:

   1. System Setup: Initialize the spectrometer and laser according to manufacturer guidelines. Ensure the environment is stable.

   2. Baseline Acquisition: Collect a dark spectrum (with the laser off or source blocked) to account for detector noise.

   3. High-Resolution Mode: Configure the spectrometer for its highest resolution setting (e.g., smallest virtual or physical slit). Acquire a spectrum of the standard sample, recording the integration time and the signal intensity of a key peak (e.g., a prominent Raman peak of paracetamol acquired at ~750 ms integration time) [10].

   4. Throughput Mode: Reconfigure the spectrometer for maximum throughput or sensitivity (e.g., widest slit setting). Acquire a spectrum of the same sample, adjusting the integration time to avoid detector saturation. Record the new integration time and the signal intensity of the same key peak.

   5. Data Analysis: Measure the Full Width at Half Maximum (FWHM) of an isolated, sharp peak in both spectra to determine resolution. Compare the signal intensities (normalized for integration time) to assess the gain in throughput.
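The data analysis step of this procedure can be sketched as follows. This is a minimal illustration, assuming a dark-subtracted spectrum with a single isolated peak and ascending x values; `fwhm` and `throughput_gain` are hypothetical helper names, and linear interpolation is one simple choice among several ways to locate the half-maximum points.

```python
def fwhm(x, y):
    """FWHM of one isolated peak via linear interpolation at half maximum."""
    peak_idx = max(range(len(y)), key=lambda i: y[i])
    if y[peak_idx] <= 0:
        raise ValueError("spectrum has no positive peak")
    half = y[peak_idx] / 2.0

    # Walk left from the peak until the signal is at or below half maximum.
    i = peak_idx
    while i > 0 and y[i] > half:
        i -= 1
    if y[i] > half:
        raise ValueError("peak does not fall to half maximum on the left")
    left = x[i] + (half - y[i]) * (x[i + 1] - x[i]) / (y[i + 1] - y[i])

    # Walk right from the peak in the same way.
    j = peak_idx
    while j < len(y) - 1 and y[j] > half:
        j += 1
    if y[j] > half:
        raise ValueError("peak does not fall to half maximum on the right")
    right = x[j - 1] + (y[j - 1] - half) * (x[j] - x[j - 1]) / (y[j - 1] - y[j])

    return right - left


def throughput_gain(high_res_counts, high_res_time_s, throughput_counts, throughput_time_s):
    """Ratio of peak intensities after normalizing each by its integration time."""
    return (throughput_counts / throughput_time_s) / (high_res_counts / high_res_time_s)


# Illustrative values only, not measured data.
print(fwhm([0, 1, 2, 3, 4], [0, 0, 10, 0, 0]))
print(throughput_gain(1000, 1.0, 4000, 0.5))
```

Running `fwhm` on the same peak in both spectra gives the resolution in each mode, while `throughput_gain` gives the intensity ratio on an equal-time basis.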

4. Expected Outcome: A direct comparison of the signal strength and resolution between the two operational modes, illustrating the practical trade-off. A system with advanced design like HTVS will show a less severe drop in signal when in high-resolution mode [10].

Quantitative Comparison of Spectrometer Technologies

The table below summarizes key performance metrics from the cited research, providing a reference for what different technologies can achieve.

| Technology / Instrument | Reported Resolution | Reported Sensitivity / Throughput Gain | Key Application Context |

|---|---|---|---|

| HTVS (Apex 785) [10] | High spectral density over 3800 cm⁻¹ Raman shift | 10-15x higher throughput than conventional slit spectrometers | Raman spectroscopy; enables short integration times (e.g., 750 ms for paracetamol) [10] |

| GMRF-based Spectrometer [11] | 0.8 nm (over 370-810 nm) | >10x sensitivity vs. conventional grating spectrometers (in fluorescence assay) | Fluorescence spectroscopy; chemical/biological detection [11] |

| qBIC Metasurface Spectrometer [9] | Tunable, high resolution via geometric parameters | Light throughput increases with resolution; >10x higher luminosity vs. bandpass systems | Ultralow-intensity fluorescence and astrophotonic spectroscopy [9] |

| Conventional Slit Spectrometer [10] [12] | Improves with narrower slit | Throughput drops by 75-95% with narrow slits for high resolution [10] | General purpose; exemplifies the classic trade-off |

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in Experiment |

|---|---|

| Paracetamol (Acetaminophen) | A standard reference material with a well-characterized Raman spectrum used for benchmarking spectrometer performance, particularly in validating resolution and throughput [10]. |

| Mercury Argon (Hg-Ar) Calibration Source | Provides sharp, atomic emission lines at known wavelengths. Essential for the accurate wavelength calibration of the spectrometer and for empirically measuring its resolution (FWHM) [12]. |

| Volume Phase Holographic (VPH) Grating | A high-efficiency diffraction grating used in advanced spectrometers to maximize light throughput and resolution simultaneously, often blazed for a specific laser wavelength (e.g., 830 nm for 785 nm excitation) [10]. |

| Quasi-BIC Metasurface Encoder | A nanoscale photonic filter made from materials like TiO₂ or Si₃N₄. It acts as a "bandstop" filter array in computational spectrometers, enabling high sensitivity and resolution by efficiently encoding spectral information without a physical slit [11] [9]. |

| NIR-enhanced Back-thinned CCD | A detector type that is highly sensitive in the near-infrared region. Its use is critical for low-light applications like Raman spectroscopy to ensure weak signals are captured effectively [10]. |

Technology Decision Workflow

Signal Pathway in Advanced Spectrometers

Troubleshooting Guides

Guide 1: Resolving Low Signal-to-Noise Ratio (SNR)

Problem: My spectrometer measurements are noisy, making it difficult to distinguish the signal from the baseline noise.

Solution: A low SNR can be addressed by optimizing several components and settings related to light throughput and signal processing.

1. Verify and Adjust Slit Width: The slit width controls the amount of light entering the spectrometer.

- Action: Increase the slit width. A wider slit allows more light to reach the detector, thereby increasing the signal. Be aware that this may slightly reduce spectral resolution [16].

- Experimental Protocol: Prepare a standard sample and acquire spectra at various slit widths (e.g., 35 µm, 50 µm, 100 µm, 150 µm). Measure the SNR at a key absorption or emission peak for each setting to find the optimal balance between signal intensity and resolution [16].

2. Optimize Detector Settings: Modern detectors have configurable parameters that directly impact noise.

- Action:

- Data Rate: Set the data acquisition rate (Hz) to collect 25-50 data points across the narrowest peak in your chromatogram. Rates that are too high can increase noise, while rates that are too low poorly define peaks [16].

- Filter Time Constant: Apply a noise filter (e.g., "slow" time constant) to reduce high-frequency baseline noise [16].

- Absorbance Compensation: If your detector supports it, enable absorbance compensation using a wavelength range where your analyte does not absorb. This subtracts non-wavelength dependent noise [16].

- Experimental Protocol: Using a stable light source or a low-concentration standard, systematically adjust one parameter at a time (data rate, filter, compensation) while keeping others constant. Measure the noise in a flat region of the spectrum and the peak height to calculate the improvement in SNR [16] [17].

3. Increase Signal Averaging: Signal averaging reduces random noise over multiple acquisitions.

- Action: Increase the number of spectral scans that are averaged for a single measurement. The SNR improves with the square root of the number of scans averaged (e.g., averaging 100 scans increases SNR 10-fold) [17].

4. Check Optical Fiber and Light Source:

- Action: Use a larger-diameter optical fiber to capture more light from the source. Ensure your light source is stable and provides sufficient output power for your application [17].
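The data-rate guidance in step 2 (25-50 points across the narrowest peak) reduces to simple arithmetic once the peak width is known. The sketch below assumes the peak width is measured in seconds at the base of the peak; the function names and the 10-second example width are illustrative.

```python
def points_across_peak(data_rate_hz, peak_width_s):
    """Number of data points sampled across a peak of the given width."""
    return data_rate_hz * peak_width_s


def data_rate_range(peak_width_s, min_points=25, max_points=50):
    """Lowest and highest data rates (Hz) that give 25-50 points per peak."""
    if peak_width_s <= 0:
        raise ValueError("peak width must be positive")
    return (min_points / peak_width_s, max_points / peak_width_s)


# Illustrative: a hypothetical narrowest peak that is 10 s wide.
print(data_rate_range(10.0))
print(points_across_peak(10, 3.0))
```

Detectors usually offer only discrete data rates, so in practice you would pick the nearest available setting inside the computed range.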

The relationships between these components and settings for improving SNR are summarized in the following workflow:

Guide 2: Addressing Poor Spectral Resolution

Problem: My spectra show broad, poorly separated peaks, which limits my ability to distinguish between similar analytes.

Solution: Poor resolution is often a trade-off with sensitivity and can be improved by adjusting the following components.

1. Decrease Slit Width: A narrower slit provides better spectral resolution by reducing the range of wavelengths that can enter the optical system.

- Action: Use the smallest slit width that still provides an acceptable signal level. This will sharpen peaks but will also reduce the total light intensity [16].

2. Leverage the Diffraction Grating: The diffraction grating is the core component for dispersing light. Its properties and the overall optical design set the fundamental resolution limit.

- Action: Ensure your spectrometer has a grating with sufficient grooves per millimeter and an optical design that minimizes aberrations for a high spectral-range-to-resolution ratio [18] [19].

- Background: A diffraction grating works by creating interference patterns from multiple slits. The condition for constructive interference (a bright fringe) is given by \(d\sin\theta = m\lambda\), where \(d\) is the grating spacing, \(\theta\) is the angle, \(m\) is the order, and \(\lambda\) is the wavelength. Adding more slits (grating lines) makes the bright fringes sharper and more defined, which translates to higher resolution in the detected spectrum [19].

3. Optimize Detector Resolution Setting: Some array detectors have a software-based "resolution" setting that averages data from adjacent pixels.

- Action: Lower this resolution setting (e.g., to 1 nm or 2 nm) to minimize pixel binning, which can smear spectral features. Note that this may increase high-frequency noise [16].
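The grating condition d sin θ = mλ from step 2 can be evaluated numerically to see how groove density spreads wavelengths in angle. The sketch below assumes normal incidence, as the quoted form of the equation does; the function name `diffraction_angle_deg` is hypothetical.

```python
import math


def diffraction_angle_deg(grooves_per_mm, wavelength_nm, order=1):
    """Diffraction angle in degrees from d*sin(theta) = m*lambda (normal incidence)."""
    d_nm = 1e6 / grooves_per_mm  # groove spacing in nanometres
    sin_theta = order * wavelength_nm / d_nm
    if abs(sin_theta) > 1:
        raise ValueError("this order does not propagate for the given grating")
    return math.degrees(math.asin(sin_theta))


# Illustrative: angular separation of two wavelengths on a 1000 grooves/mm grating.
a1 = diffraction_angle_deg(1000, 500.0)
a2 = diffraction_angle_deg(1000, 510.0)
print(a1, a2, a2 - a1)
```

Repeating the calculation with a higher groove density shows a larger angular separation for the same wavelength pair, which is the mechanism behind the resolution gain described above.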

The interplay between components that control resolution is illustrated below:

Frequently Asked Questions (FAQs)

FAQ 1: What is the most critical parameter to adjust first when my signal intensity is too low? Start by evaluating the slit width. It has a direct and significant impact on the amount of light entering the system. If you are currently using a narrow slit, slightly widening it will often provide the most immediate gain in signal intensity [16].

FAQ 2: How does the diffraction grating influence my spectrometer's performance? The diffraction grating is fundamental as it determines the wavelength range and the inherent resolution capability of your instrument. A high-quality grating with a suitable groove density will provide better separation of closely spaced wavelengths (higher resolution). Advanced designs also aim for high optical efficiency (>50%) to maximize signal throughput [18] [19].

FAQ 3: I've optimized the optics, but my signal is still noisy. What detector-related factors should I investigate? Review your detector's acquisition settings. Key parameters include:

- Integration Time: Increase the exposure time of the detector to the light to collect more signal.

- Signal Averaging: Use this feature to average multiple scans and reduce random noise.

- Thermal Cooling: For very low-light applications (e.g., Raman spectroscopy), use a thermoelectrically cooled (TE-cooled) detector to significantly reduce thermal (dark) noise [17].

FAQ 4: Is a higher Signal-to-Noise Ratio (SNR) always better? How is it quantified? A higher SNR is generally desirable as it provides a clearer, more reliable measurement. It is quantitatively defined as SNR = (Signal Intensity - Dark Signal) / Noise Standard Deviation. Spectrometer manufacturers typically report the maximum possible SNR obtained at detector saturation. For example, high-sensitivity spectrometers can achieve SNRs of 1000:1 or higher [17].

This table summarizes the experimental optimization of an Alliance iS HPLC PDA Detector for analyzing ibuprofen impurities, showing how deviation from default settings can enhance performance.

| Parameter | Default Setting | Optimized Setting | Effect on Signal-to-Noise (S/N) | Key Trade-off |

|---|---|---|---|---|

| Data Rate | 10 Hz | 2 Hz | S/N met criteria (25) with 31 points/peak | Lower rates may poorly define very narrow peaks |

| Filter Time Constant | Normal | Slow | Highest S/N achieved | Slower filters can broaden peaks |

| Slit Width | 50 µm | 50 µm (No change) | Minimal S/N variation observed | Larger widths increase S/N but reduce resolution |

| Resolution | 4 nm | 4 nm (No change) | Minimal S/N variation observed | Higher values reduce noise but decrease spectral resolution |

| Absorbance Compensation | Off | On (310-410 nm) | 1.5x S/N increase | Requires a wavelength range with no analyte absorption |

| Overall Method | All defaults | All optimizations | 7x S/N increase | Demonstrates cumulative benefit of optimization |

This table provides a comparison of different classes of spectrometers, helping in the selection of an appropriate detector based on application needs.

| Spectrometer Model | Detector Type | Dynamic Range | Signal-to-Noise Ratio (SNR) | Example Applications |

|---|---|---|---|---|

| Flame (T-model) | Linear CCD | 1300:1 | 300:1 | Basic laboratory measurements |

| Ocean HDX | Back-thinned CCD | 12000:1 | 400:1 | Plasma analysis, low-light absorbance |

| QE Pro | TE-cooled CCD | 85000:1 | 1000:1 | Fluorescence, DNA analysis, Raman spectroscopy |

| Maya2000 Pro | Back-thinned CCD | 15000:1 | 450:1 | Low-light fluorescence, gas analysis |

| STS | CMOS | 4600:1 | 1500:1 | Laser analysis, device integration |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Materials for Spectroscopic Method Development

This list details common consumables and standards used in developing and optimizing spectroscopic methods, as referenced in the cited application notes.

| Item | Function / Application |

|---|---|

| XBridge BEH C18 Column | A standard reversed-phase HPLC column used for separating mixtures of organic compounds, such as in the USP ibuprofen impurities method [16]. |

| Ibuprofen Standard (from MilliporeSigma) | A high-purity chemical used as a reference standard to prepare system suitability solutions for method validation and optimization [16]. |

| LCGC Certified Clear Glass Vials | Certified vials with preslit PTFE/silicone septa ensure sample integrity and prevent contamination or evaporation during automated HPLC analysis [16]. |

| Chloroacetic Acid Buffer | Used in mobile phase preparation to control pH (e.g., pH 3.0), which is critical for achieving stable separation of ionizable analytes like ibuprofen [16]. |

| Mobile Phase Solvents (Acetonitrile, Water, Methanol) | High-purity solvents are essential for creating the mobile phase (e.g., 40:60 water:acetonitrile) and for needle wash/seal wash solutions to maintain system cleanliness [16]. |

How Dirty Ion Optics and Component Wear Cause Signal Degradation

In mass spectrometry, maintaining optimal signal intensity is fundamental for achieving reliable, sensitive, and accurate results. Signal degradation is a common challenge, often traced back to the physical state of the instrument's core components. Contaminated ion optics and general component wear are two primary culprits behind decreasing sensitivity, rising noise, and unstable performance. This guide details the mechanisms of this degradation and provides researchers with clear, actionable protocols for troubleshooting and resolution.

The Degradation Mechanisms: Ion Optics and Component Wear

The Consequences of Dirty Ion Optics

Ion optics are a series of lenses and electrodes housed within the vacuum system that guide and focus the ion beam from the ionization source to the mass analyzer. When these components become contaminated, several issues arise:

- Ion Burn and Deposits: A visible sign of contamination is "ion burn," a dark, sometimes iridescent deposit on metal surfaces like ion exit apertures and the front ends of quadrupole rods [20]. This burn results from the deposition and subsequent ion- or electron-induced polymerization of non-volatile sample residues [20]. These deposits can build up to the point of flaking off, causing further instability.

- Disrupted Electric Fields: Ion optics operate by creating precisely controlled electric fields. An insulating layer of organic dirt on a lens surface alters these field gradients, defocusing the ion beam and reducing the number of ions that successfully reach the detector [20].

- Signal Artifacts: On quadrupole mass filters, asymmetric ion burn on the rods can distort the electric fields, leading to skewed peak shapes, often visible as a "lift off" on one side of the peak [20].

The Impact of General Component Wear and Tear

Beyond the ion optics, other components degrade with use, directly impacting instrument sensitivity and stability.

- Nebulizer Blockage: The nebulizer is critical for creating a fine aerosol. Microscopic particles from samples can build up at the tip, leading to an erratic spray pattern, reduced sample intake, and significant signal loss [21].

- Interface Cone Corrosion: The sampler and skimmer cones interface the atmospheric-pressure plasma with the high-vacuum mass spectrometer. These cones are exposed to the full force of the plasma and sample stream, making them susceptible to blockage and corrosion from high-matrix or corrosive solutions, which impedes ion transfer [22].

- Peristaltic Pump Tubing Wear: The constant pressure from pump rollers gradually stretches polymer-based pump tubing, changing its internal diameter and causing fluctuations in the sample delivery rate to the nebulizer. This results in poor signal stability and degraded precision [21].

The table below summarizes the key components, their failure modes, and the observed symptoms.

Table 1: Troubleshooting Common Causes of Signal Degradation

| Component | Type of Degradation | Primary Symptoms |

|---|---|---|

| Ion Optics | Contamination from ion burn and polymerized deposits [20] | Decreasing sensitivity; need for progressively higher lens voltages; unstable signal; skewed peak shapes [20] [22] |

| Nebulizer | Blockage from particulates or dissolved solids [21] | Erratic spray pattern; reduced and fluctuating signal; increased background noise |

| Interface Cones (Sampler/Skimmer) | Blockage or corrosion of orifice [22] | Gradual loss of sensitivity; poor stability; requires more frequent calibration |

| Peristaltic Pump Tubing | Wear and stretching from rollers [21] | Poor short-term stability (precision); drifting signal intensity |

Experimental Protocols for Diagnosis and Resolution

Protocol 1: Diagnosing Ion Optics Contamination

This procedure helps confirm whether dirty ion optics are the cause of signal loss.

- Symptom Check: Monitor instrument tuning logs. A consistent need to increase ion lens voltages over time to maintain sensitivity is a strong indicator of accumulating contamination [22].

- Visual Inspection (During Scheduled Maintenance): Following manufacturer guidelines and after safely venting the system, inspect the ion optics. Look for visible discoloration, powdery residues, or "ion burn" [20].

- Cleaning Procedure:

  - Frequency: Typically every 3-6 months, depending on sample workload and matrix [22].

  - Method: Use abrasive papers or fine polishing compounds as recommended by the manufacturer, followed by rinsing with water and a suitable organic solvent (e.g., methanol or isopropanol) [22].

  - Critical Note: Ensure components are thoroughly dry before reinstallation to prevent introducing solvent or water vapor into the mass spectrometer vacuum system. Always wear gloves to avoid contamination [22].
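The symptom check in this protocol (a steady rise in tuned lens voltage) can be turned into a simple trend test. The sketch below fits a least-squares line to tuning-log entries and flags a sustained upward drift. The log values, the function names, and the 0.02 V/day limit are all hypothetical placeholders; a real threshold would have to come from your own instrument history.

```python
def linear_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def lens_voltage_trend(days, volts, slope_limit=0.02):
    """Return (slope in V/day, flag) for the magnitude of the tuned lens voltage.

    slope_limit is an assumed, illustrative threshold, not a vendor value.
    """
    slope = linear_slope(days, [abs(v) for v in volts])
    return slope, slope > slope_limit


# Hypothetical tuning log: days since the last cleaning and the tuned lens voltage (V)
log_days = [0, 14, 28, 42, 56, 70]
log_volts = [6.0, 6.4, 7.1, 7.9, 8.8, 9.6]

slope, flagged = lens_voltage_trend(log_days, log_volts)
print(f"drift = {slope:.3f} V/day, inspect ion optics: {flagged}")
```

A flag from a check like this is only a prompt to inspect the optics at the next scheduled maintenance; it does not replace the visual inspection step.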

Protocol 2: Diagnosing and Resolving Sample Introduction Issues

This protocol addresses common issues in the sample path upstream of the mass spectrometer.

- Nebulizer Blockage:

  - Diagnosis: Visually inspect the aerosol by aspirating water. A blocked nebulizer will produce an erratic spray with large droplets [21]. A digital thermoelectric flow meter placed in the sample line can precisely detect flow rate drops [21].

  - Cleaning: Immerse the nebulizer in a weak acid (e.g., 10% nitric acid) or detergent in an ultrasonic bath. Never use a wire to clear the orifice, as this can cause permanent damage [21]. Commercial nebulizer-cleaning devices that use pressurized cleanser are also effective and safe [21].

- Pump Tubing Wear:

  - Prevention & Resolution: Manually stretch new tubing before installation. Release roller pressure when the instrument is not in use. With a high sample workload, change tubing daily or every other day. It is a consumable item and should be replaced at the first sign of wear [21].

The following workflow diagram illustrates the logical process for diagnosing the root cause of signal loss.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Maintenance Items for Signal Integrity

| Item | Function |

|---|---|

| Weak Nitric Acid (2-10%) | Standard cleaning solution for metal components like interface cones and ion optics to dissolve inorganic deposits [22]. |

| High-Purity Solvents (e.g., Methanol, Isopropanol) | Used for final rinsing of cleaned components to remove organic residues and ensure rapid drying [22]. |

| Abrasive Polishing Compound | For gently polishing ion lenses and interface cones to remove stubborn contaminants without damaging surfaces [22]. |

| Polymer-based Pump Tubing | A consumable for peristaltic pumps; a fresh supply is necessary to maintain stable sample flow [21]. |

| Digital Thermoelectric Flow Meter | A diagnostic tool to verify and monitor sample uptake rate, identifying blockages or pump tubing issues [21]. |

| Ultrasonic Bath | Used to enhance the cleaning efficiency of nebulizers and other small parts by agitating them in a cleaning solution [21]. |


Frequently Asked Questions (FAQs)

Q1: How often should I clean the ion optics and interface cones? The frequency depends on your sample workload and matrix complexity. For labs running samples high in dissolved solids, or a large number of samples, weekly inspection of the interface cones is advised [22]. Ion optics typically require inspection and possible cleaning every 3 to 6 months [22]. A key sign that cleaning is due is the need to keep increasing lens voltages to maintain sensitivity.

Q2: Can I use a wire to unblock a clogged nebulizer tip? No. This is a critical rule. Using a wire or needle can easily scratch and permanently damage the precise orifice of the nebulizer, altering its performance characteristics irreversibly. The recommended methods are immersion in an appropriate acid or solvent, often aided by an ultrasonic bath, or using a manufacturer-recommended nebulizer-cleaning device [21].

Q3: What is the most overlooked maintenance task that causes signal instability? Peristaltic pump tubing. It is often treated as a permanent component rather than a consumable. The constant pressure from rollers stretches the tubing over time, changing the internal diameter and causing fluctuations in sample flow to the nebulizer. This directly leads to poor signal stability (precision). Tubing should be replaced frequently, even daily for high-throughput labs, and pressure should be released when the instrument is not in use [21].

Q4: Are there any visual signs that my instrument components need cleaning? Yes. Upon disassembly during maintenance, look for:

- Ion Burn: A darkish, iridescent smudge on metal surfaces like the filament reflector, ion exit apertures, and the front end of quadrupole rods [20].

- Cone Orifice Condition: Use a magnifying glass (10-20x) to inspect the sampler and skimmer cone orifices for irregular shapes or visible deposits [22].

- Nebulizer Spray: An erratic aerosol pattern with large droplets when aspirating water indicates a partial blockage [21].

FAQs: Addressing Common Environmental Issues

Q1: Why is my spectral baseline unstable or drifting? An unstable or drifting baseline appears as a continuous upward or downward trend in the spectral signal, which introduces errors in peak integration and quantitative measurements. This is often caused by environmental factors.

- Temperature Fluctuations: The instrument needs time to reach thermal equilibrium. If it was recently powered on, allow at least 1 hour for the temperature to stabilize before use [23]. Subtle influences such as air conditioning cycles can also disturb optical components [24].

- Acoustic Noise and Vibration: Internal or external vibrations can cause misalignment. Solution: Lower the purge flow rate to minimize acoustic noise inside the instrument until the baseline stabilizes [23]. Ensure the instrument is placed on a stable surface away from vibrating equipment [24].

- Humidity: High humidity can cause fogging on optical components. Solution: Check the instrument's humidity indicator. If it is pink, replace the desiccant and the indicator [23].

Q2: My system scans normally, but the signal intensity is very low. What should I check? Low signal intensity can be caused by several factors, including environmental and alignment issues.

- Misalignment: Vibrations or knocks can misalign the optical path. Solution: Perform an instrument alignment procedure [23]. Ensure any sampling accessories are installed and aligned correctly according to the manufacturer's instructions [23].

- Fogged Windows: Check the sample compartment windows. If they are fogged, they need to be replaced, as this scatters or blocks light [23].

- Detector Temperature: For instruments with cooled detectors (e.g., MCT detectors), ensure the detector has been properly cooled before use. If the detector dewar was recently filled, allow the detector at least 15 minutes to cool [23].

Q3: How do I minimize fluorescence interference in Raman spectroscopy, which can be exacerbated by background light? Fluorescence can swamp the weaker Raman signal, creating a high, sloping background.

- Laser Wavelength Selection: Using a longer wavelength (e.g., 785 nm or 1064 nm) laser excitation can significantly reduce fluorescence, as the laser energy is less likely to excite fluorescent transitions in the sample [25].

- Photobleaching: Employ photobleaching protocols by exposing the sample to the laser for a period before data acquisition to diminish fluorescent components [24].

Q4: My spectral data is very noisy. Could this be from environmental vibrations? Yes, excessive spectral noise appears as random fluctuations that reduce the signal-to-noise ratio. Mechanical vibrations from adjacent equipment or building infrastructure are a common source [24]. Ensure the spectrometer is on a vibration-damping optical table or a stable, heavy bench, away from sources of vibration like pumps, chillers, or heavy foot traffic.

Troubleshooting Protocols and Diagnostic Tables

Baseline Instability Diagnostic Protocol

- Record a fresh blank spectrum under identical conditions to your sample measurement [24].

- Analyze the blank:

  - If the blank exhibits similar baseline drift, the problem is instrumental or environmental [24]. Proceed to check temperature stability, purge gas flow, and look for sources of vibration.

  - If the blank is stable, the source is sample-related (e.g., contamination, matrix effects) [24]. Re-prepare your sample.
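The decision logic of this protocol can be sketched in a few lines. The example estimates baseline drift as a least-squares slope and then applies the blank-versus-sample rule described above. The readings, the function names, and the drift limit of 0.0005 units per minute are hypothetical and serve only to illustrate the branching.

```python
def drift_rate(times_min, baseline):
    """Least-squares slope of a baseline trace (signal units per minute)."""
    n = len(times_min)
    mean_t = sum(times_min) / n
    mean_b = sum(baseline) / n
    num = sum((t - mean_t) * (b - mean_b) for t, b in zip(times_min, baseline))
    den = sum((t - mean_t) ** 2 for t in times_min)
    return num / den


def diagnose_baseline(blank_drift, sample_drift, limit):
    """Apply the blank-first rule: a drifting blank points to the instrument or environment."""
    if abs(blank_drift) > limit:
        return "instrument or environment"
    if abs(sample_drift) > limit:
        return "sample"
    return "stable"


# Hypothetical baseline readings taken once per minute
times = [0, 1, 2, 3, 4, 5]
blank = [0.0010, 0.0011, 0.0009, 0.0010, 0.0011, 0.0010]
sample = [0.0010, 0.0030, 0.0052, 0.0069, 0.0091, 0.0110]
limit = 0.0005  # assumed acceptable drift per minute

verdict = diagnose_baseline(drift_rate(times, blank), drift_rate(times, sample), limit)
print(verdict)
```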

Quantitative Impact of Environmental Factors

| Environmental Factor | Primary Effect on Signal | Secondary Symptoms | Recommended Corrective Action |

|---|---|---|---|

| Temperature Fluctuations | Baseline drift and instability [23] [24] | Shifting peak positions over time | Allow instrument to warm up for 1 hour; stabilize room temperature [23]. |

| Mechanical Vibrations | Increased high-frequency noise; signal loss [24] | Unstable baseline; failed alignment [23] | Use vibration-damping table; relocate instrument away from vibration sources [24]. |

| High Humidity | Reduced light transmission; fogged optics [23] | General signal attenuation; low intensity | Check and replace desiccant; ensure sample compartment seals are intact [23]. |

| Stray Light | Reduced signal intensity | Low signal intensity | Use appropriate filters; ensure the sample compartment is closed properly. |

Experimental Workflow for Isolating Environmental Noise

The following diagram outlines a systematic workflow for diagnosing and resolving issues related to temperature, vibrations, and background light.

The Scientist's Toolkit: Essential Research Reagents and Materials

| Item | Function | Application Note |

|---|---|---|

| Desiccant | Controls humidity within the instrument sample compartment to prevent fogging on optical components and water vapor absorption [23]. | Check the humidity indicator; replace desiccant if indicator is pink [23]. |

| Certified Reference Standards | Verifies instrument calibration and performance, ensuring wavelength accuracy and photometric linearity [26] [24]. | Use for regular performance verification (PV) as part of a quality control protocol [23]. |

| Stable Blank Solvent | Serves as a reference for baseline correction and diagnosing the source of spectral anomalies (instrument vs. sample) [24]. | Use a high-purity solvent that does not absorb in the spectral region of interest. |

| Vibration-Damping Table | Isolates the spectrometer from floor-borne vibrations, which cause noise and baseline instability [24]. | Essential for laboratories in buildings with noticeable vibration or for high-resolution measurements. |

| Purge Gas (e.g., Dry N₂) | Removes atmospheric water vapor and CO₂ from the optical path to minimize their absorption bands in FTIR spectra [23] [24]. | Check purge gas flow rates and ensure sample compartment seals are tight [24]. |

| Alignment Tools & Standards | Allows for precise realignment of the optical path, which is crucial after instrument relocation or severe vibration events [23]. | Follow the manufacturer's specific alignment protocol [23]. |


Proactive Optimization Methods for Enhanced Signal Acquisition

Sample Preparation Best Practices to Maximize Signal Output

Troubleshooting Guides

Why is my spectrometer signal intensity low, and how can I improve it?

Low signal intensity is a common challenge in spectrometer optics research. The cause can often be traced to the sample itself, the sample preparation process, or the instrument's components. Follow this diagnostic guide to systematically identify and resolve the issue.

Table: Troubleshooting Low Signal Intensity

| Problem Area | Specific Issue to Check | Corrective Action |

|---|---|---|

| Sample & Preparation | Improper sample concentration or volume | Concentrate the sample if it's too dilute. Ensure the sample volume is sufficient and covers the measurement path [27]. |

| | Matrix effects or interfering substances | Use sample pretreatment to remove interferences. For LC-MS, techniques like liquid-liquid extraction or solid-phase extraction can minimize signal suppression [28]. |

| | Suboptimal substrate or enhancement | For techniques like SERS, use a reliable signal-enhancing substrate. Aggregation of metal nanoparticles can amplify signals [29]. |

| Instrument Hardware | Aging or misaligned light source | Inspect and replace the lamp (e.g., deuterium or tungsten-halogen for UV-VIS) per the manufacturer's intervals [30]. |

| | Dirty optics or sample holders | Clean cuvettes and optical components regularly with approved solutions and a soft, lint-free cloth [30]. |

| | MCT detector not cooled | For FTIR spectrometers, ensure the MCT detector has been properly cooled before use [23]. |

Diagram: Diagnostic Pathway for Low Signal Intensity

My LC-MS peaks have disappeared. What should I do?

A complete loss of signal in LC-MS, while alarming, often points to a single, catastrophic failure in the system. This guide helps you quickly isolate the problem.

- Isolate the Problem Area: Start by removing sample preparation from the equation. Make a fresh standard and perform a direct injection. If the signal returns, the issue lies with your sample preparation. If there is still no signal, the problem is with the LC or MS system [15].

- Check the MS "Engine": Verify the three key components for a stable Electrospray Ionization (ESI) spray:

  - Spark (Voltages): Ensure all voltages for the spray and optics are applied.

  - Air (Gas): Confirm that nitrogen or other nebulizing/drying gases are flowing.

  - Fuel (Mobile Phase): Verify that mobile phase reservoirs are full and flowing [15].

- Visual Spray Check: Use a flashlight to look at the tip of the ESI needle. A visible, stable spray indicates the source is functioning [15].

- Investigate the LC System: If the MS seems functional, the LC is the likely culprit.

  - Pump Prime: A common issue is an air pocket in the pumps, particularly the organic phase pump. Manually prime the pumps to dislodge any trapped air, as an auto-purge may not be sufficient [15].

  - Check for Blockages: Inspect lines and the column for potential blockages.

How can I enhance signals for hard-to-ionize elements in ICP-MS?

For elements like Arsenic (As) and Selenium (Se) in ICP-MS, you can leverage carbon-enhanced plasma to achieve more complete ionization. The mechanism is an ionization enhancement where carbon ions in the plasma facilitate the ionization of analytes with ionization potentials lower than carbon (11.26 eV) [31].

Table: Carbon Enhancement Methods for ICP-MS

| Method | Principle | Protocol / Implementation | Performance & Economics |

|---|---|---|---|

| Carbon Dioxide (CO2) Addition | CO2 is mixed directly with the argon plasma gas [31]. | Deliver CO2 directly into the total argon flow using the instrument's spare gas control line and a mass flow controller. A ballast tank is used for gas storage [31]. | Signal Enhancement: Optimal between 5-9% CO2 in Ar, peaking at ~8% [31]. Economics: Higher upfront cost for equipment, but significant long-term savings over 20 years compared to chemical additives [31]. |

| Organic Solvent Addition (e.g., Acetic Acid) | The organic solvent is introduced with the sample diluent or via the internal standard line [31]. | The solvent is mixed with the sample stream using a tee-piece before introduction to the plasma [31]. | A traditional method, but ongoing reagent costs can be high for a commercial lab [31]. |

Frequently Asked Questions (FAQs)

My baseline is unstable. What environmental factors should I check?

An unstable baseline is frequently caused by environmental conditions. Take the following steps:

- Purge and Stabilize: If the instrument cover was recently opened, allow the instrument to purge for 10-15 minutes after closing it.

- Control Temperature: If the instrument was recently turned on, allow at least one hour for the temperature to stabilize.

- Check Humidity: High humidity can cause instability. Check the instrument's humidity indicator and replace the desiccant if necessary [23].

- Detector Cooling: If using a cooled detector (like MCT), ensure it has been properly cooled and allowed to stabilize for at least 15 minutes after filling [23].

What sample preparation techniques can reduce matrix effects in LC-MS?

Matrix effects, where co-eluted compounds suppress or enhance the analyte signal, are a major cause of sensitivity loss in LC-MS, particularly with ESI [28]. Several sample preparation strategies can mitigate this:

- Solid-Phase Extraction (SPE): This technique selectively extracts the target analyte from potential interfering matrix components, significantly cleaning up the sample [28].

- Liquid-Liquid Extraction: A traditional but effective method for separating analytes based on solubility.

- Dilution: For relatively clean samples with high analyte concentrations, simple filtration and dilution can reduce the concentration of interferences [28].

- Alternative Ionization: If the analytes are thermally stable and of moderate polarity, switching to Atmospheric Pressure Chemical Ionization (APCI) can reduce matrix effects, as ions are produced through gas-phase reactions instead of in the liquid droplet [28].

How can I improve the sensitivity of a paper-based colorimetric assay?

The sensitivity of colorimetric lateral flow assays (LFA) can be significantly enhanced by signal-amplification strategies that intensify the color output:

- Metallic Nanoshells: A common and effective method is to deposit a layer of another metal (e.g., silver or copper) onto the surface of gold nanoparticle (AuNP) labels. This "size enlargement" creates a more visible spot. For example, a copper nanoshell on an AuNP core can change the particle shape to a polyhedron, dramatically amplifying the signal [29].

- Particle Aggregation: Designing the assay to trigger the aggregation of AuNPs causes a distinct color change from red to blue. This assembly increases the number of markers in the test zone, improving intensity [29].

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Materials for Signal Enhancement

| Reagent / Material | Function / Application |

|---|---|

| Certified Reference Standards | Essential for regular calibration of spectrometers to ensure measurement accuracy and traceability [30]. |

| High-Purity Solvents & Acids | Used for sample digestion, dilution, and as mobile phases to minimize background contamination and noise. |

| Solid-Phase Extraction (SPE) Cartridges | Used for sample clean-up to remove interfering matrix components, thereby reducing signal suppression and enhancing the signal-to-noise ratio [28]. |

| Functionalized Nanoparticles (e.g., AuNPs) | Act as labels or substrates in techniques like SERS and LFA. Their unique optical properties are harnessed for signal amplification [29]. |

| Molecularly Imprinted Polymers (MIPs) | Synthetic polymers with specific cavities for a target molecule. Used in sample preparation to selectively capture and pre-concentrate analytes, improving selectivity and signal [27]. |

| Carbon Dioxide (CO2) Gas | A cost-effective source of carbon for enhancing the plasma ionization of hard-to-ionize elements (e.g., As, Se) in ICP-MS [31]. |


Low signal intensity is a frequent challenge in spectroscopic analysis, often leading to poor data quality, extended acquisition times, and unreliable results in critical applications like drug development. This technical guide provides targeted, practical solutions to this problem, focusing on the strategic selection and configuration of two core components: diffraction gratings and entrance slits. By optimizing these elements, researchers can significantly enhance spectrometer performance, ensuring data integrity and accelerating research outcomes.

FAQ: Optimizing Your Spectrometer Configuration

How does groove density on a diffraction grating affect my measurement?

The groove density of a diffraction grating, measured in grooves per millimeter (gr/mm), directly determines the trade-off between the spectral resolution and the spectral range of your measurement [32].

- Higher Groove Density (e.g., 1800 gr/mm, 2400 gr/mm): Provides higher spectral resolution, spreading the light over a larger area of the detector. This is essential for resolving fine spectral details, such as distinguishing closely spaced peaks in the analysis of materials like MoS2 or carbon nanotubes [32] [33].

- Lower Groove Density (e.g., 150 gr/mm, 300 gr/mm): Provides a wider spectral range in a single acquisition. This is beneficial for surveying broad spectral features or when measuring photoluminescence (PL) [32].

The table below summarizes how to select groove density based on your analytical goal:

Table 1: Selecting Grating Groove Density for Specific Assays

| Analytical Goal | Recommended Groove Density | Key Benefit | Common Applications |

|---|---|---|---|

| High-Resolution Analysis | 1800 gr/mm or higher | Resolves closely spaced peaks | Polymorph discrimination; stress/strain analysis in semiconductors [32] |

| Broad Spectral Range | 300 gr/mm or lower | Captures wide Raman/PL spectrum in a single shot | Photoluminescence measurements; initial sample screening [32] |

| Balanced Performance | 600 - 1200 gr/mm | Good compromise between range and resolution | General-purpose Raman spectroscopy with visible lasers [32] |
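The range-versus-resolution trade-off can be made concrete with the standard reciprocal linear dispersion relation, dλ/dx ≈ d·cos(β)/(m·f), where d is the groove spacing, β the diffraction angle, m the order, and f the focal length. The sketch below is a rough estimate only: the 300 mm focal length and 26.6 mm detector width are hypothetical, and the diffraction angle is fixed at 0° (cos β ≈ 1), whereas in a real instrument it changes with wavelength and groove density.

```python
import math


def reciprocal_linear_dispersion(grooves_per_mm, focal_length_mm,
                                 diffraction_angle_deg=0.0, order=1):
    """Approximate dispersion at the detector plane in nm per mm."""
    groove_spacing_nm = 1e6 / grooves_per_mm  # 1 mm = 1e6 nm
    cos_beta = math.cos(math.radians(diffraction_angle_deg))
    return groove_spacing_nm * cos_beta / (order * focal_length_mm)


def spectral_coverage_nm(grooves_per_mm, focal_length_mm, detector_width_mm):
    """Wavelength span captured in a single acquisition."""
    return reciprocal_linear_dispersion(grooves_per_mm, focal_length_mm) * detector_width_mm


FOCAL_LENGTH_MM = 300.0    # assumed spectrograph focal length
DETECTOR_WIDTH_MM = 26.6   # assumed detector width

for density in (300, 600, 1200, 1800):
    rld = reciprocal_linear_dispersion(density, FOCAL_LENGTH_MM)
    span = spectral_coverage_nm(density, FOCAL_LENGTH_MM, DETECTOR_WIDTH_MM)
    print(f"{density:>5} gr/mm: {rld:6.2f} nm/mm, about {span:6.1f} nm per acquisition")
```

Under these assumptions, going from 300 gr/mm to 1800 gr/mm narrows the single-shot coverage by a factor of six while packing each nanometer across six times as much detector width.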

What is the blaze wavelength and why is it critical for signal intensity?

The blaze wavelength is the specific wavelength at which a diffraction grating delivers its maximum diffraction efficiency. Matching the blaze wavelength to your laser's excitation wavelength is crucial for maximizing signal intensity [32].

Gratings are optimized for different spectral regions. With a 785 nm laser, a grating blazed at 550 nm delivers only about 52% efficiency, whereas a grating blazed at 750 nm can exceed 71% for the same laser. This difference of roughly 19 percentage points (about 37% more diffracted light in relative terms) directly affects signal strength and required acquisition times [32]. For UV lasers (e.g., 325 nm), a UV-blazed grating (e.g., blaze 300 nm) is necessary, while NIR lasers (e.g., 1064 nm) require an NIR-optimized grating (e.g., blaze 750 nm) [32].
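As a quick worked check of the efficiency figures quoted above, the snippet below compares the two gratings. It assumes, as a simplification, that collected signal scales linearly with grating efficiency and that everything else in the optical path is unchanged; the function name is illustrative.

```python
def compare_grating_efficiency(eff_mismatched, eff_matched):
    """Compare two diffraction efficiencies given as fractions (0 to 1)."""
    return {
        # absolute gap in percentage points
        "percentage_point_gap": (eff_matched - eff_mismatched) * 100.0,
        # how many times more light the matched grating delivers
        "relative_gain": eff_matched / eff_mismatched,
        # exposure-time multiplier needed to collect the same signal with the matched grating
        "exposure_scale": eff_mismatched / eff_matched,
    }


result = compare_grating_efficiency(0.52, 0.71)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

In this simplified picture, the matched grating collects the same number of counts in roughly three quarters of the exposure time.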

How do I choose the correct slit width for my experiment?

The entrance slit width controls the amount of light entering the spectrometer and the spectral bandpass. Optimizing it is a key balance between signal throughput and spectral resolution [33] [34].

- Wider Slits: Allow more light to enter, boosting signal intensity. This is ideal for weak scatterers or when high resolution is not the primary concern. However, wider slits degrade spectral resolution [33].

- Narrower Slits: Provide higher spectral resolution by reducing the spectral bandpass. The drawback is a significant reduction in light throughput, which can be detrimental for low-signal samples [33] [34].

A best practice is to start with the largest slit width your resolution requirements allow [33]. Furthermore, the slit width should be imaged onto at least three pixels on the detector to satisfy the Nyquist criterion for optimum resolution and light throughput [34].
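The two rules of thumb above (bandpass grows with slit width, and the slit image should span at least three pixels) can be checked numerically. This sketch assumes a slit-limited instrument with unit magnification; the 2.0 nm/mm dispersion and 14 µm pixel pitch are hypothetical values, and the helper names are illustrative.

```python
def spectral_bandpass_nm(slit_width_um, dispersion_nm_per_mm):
    """Slit-limited bandpass: reciprocal linear dispersion times slit width."""
    return dispersion_nm_per_mm * slit_width_um / 1000.0


def pixels_across_slit_image(slit_width_um, pixel_pitch_um, magnification=1.0):
    """Number of detector pixels covered by the image of the entrance slit."""
    return slit_width_um * magnification / pixel_pitch_um


def meets_sampling_rule(slit_width_um, pixel_pitch_um, min_pixels=3.0, magnification=1.0):
    """True when the slit image spans at least min_pixels pixels."""
    return pixels_across_slit_image(slit_width_um, pixel_pitch_um, magnification) >= min_pixels


DISPERSION_NM_PER_MM = 2.0  # assumed
PIXEL_PITCH_UM = 14.0       # assumed

for slit in (25, 50, 100):
    bandpass = spectral_bandpass_nm(slit, DISPERSION_NM_PER_MM)
    pixels = pixels_across_slit_image(slit, PIXEL_PITCH_UM)
    ok = meets_sampling_rule(slit, PIXEL_PITCH_UM)
    print(f"{slit:>4} um slit: bandpass {bandpass:.3f} nm, {pixels:.2f} pixels, rule met: {ok}")
```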

What is the best strategy for exposure time and signal averaging?

For weak Raman scatterers, using a small number of long exposures is more effective at reducing noise than using many short exposures. This is because each readout of the detector introduces "read noise," and fewer readouts result in a cleaner signal [33].

- For quiet samples (low fluorescence): Prioritize longer exposure times over a larger number of exposures [33].

- For fluorescent samples: The improvement from longer exposures is less pronounced due to dominant shot noise from the fluorescence background. In this case, the specific combination of exposure time and number of scans has a smaller effect [33].
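A simple noise model illustrates both cases. The sketch below assumes shot noise on the signal and background plus a fixed read noise added once per readout; the count rates and the 10-electron read noise are hypothetical, and dark current is ignored.

```python
import math


def snr(signal_rate, background_rate, read_noise, n_exposures, exposure_s):
    """SNR of co-added exposures with shot noise and per-readout read noise."""
    total_time = n_exposures * exposure_s
    signal = signal_rate * total_time
    variance = (signal                           # shot noise of the Raman signal
                + background_rate * total_time   # shot noise of the background
                + n_exposures * read_noise ** 2) # read noise, once per readout
    return signal / math.sqrt(variance)


READ_NOISE = 10.0   # electrons rms per readout (assumed)
SIGNAL_RATE = 5.0   # electrons per second from a weak scatterer (assumed)

# Same 60 s total time, split two ways, with and without fluorescence background
for label, background in (("quiet sample", 0.0), ("fluorescent sample", 5000.0)):
    few_long = snr(SIGNAL_RATE, background, READ_NOISE, 2, 30.0)
    many_short = snr(SIGNAL_RATE, background, READ_NOISE, 60, 1.0)
    print(f"{label}: 2 x 30 s -> {few_long:.2f}, 60 x 1 s -> {many_short:.2f}")
```

With these numbers, the quiet sample gains several-fold from fewer, longer exposures, while the fluorescent sample barely changes because background shot noise dominates in both cases.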

Troubleshooting Guide: Low Signal Intensity

Problem: My Raman signal is too weak for reliable analysis.

Follow this systematic workflow to diagnose and resolve the issue of low signal intensity in your spectrometer.

Actionable Protocols:

Check Laser Power and Focus:

- Protocol: In your instrument software, set the laser to its maximum power. If the sample is sensitive (e.g., dark-colored or biological), start with low power and incrementally increase it while monitoring for damage. Use the instrument's autofocus function or manually adjust the Z-position while observing the live spectral signal to find the point of maximum intensity [33].

Verify Grating Configuration:

- Protocol: Consult your spectrometer's manual to confirm the installed grating's specifications. In the software, select a grating with a blaze wavelength matched to your laser and a groove density that suits your need for either wide spectral range (lower gr/mm) or high resolution (higher gr/mm) [32].

Optimize Slit Width:

- Protocol: Start your experiment with the widest available slit (e.g., 50-100 μm). Collect a spectrum. If the spectral resolution is insufficient to resolve your peaks of interest, progressively narrow the slit until the required resolution is achieved, accepting the consequent signal reduction [33].

Adjust Acquisition Parameters:

- Protocol: For a total measurement time of 60 seconds, try acquiring 2 exposures of 30 seconds each instead of 60 exposures of 1 second. Compare the signal-to-noise ratio of the results [33].

Inspect Sample and Optics:

- Protocol: Regularly clean the exterior windows of the spectrometer and microscope objectives according to the manufacturer's instructions. For sampling probes, ensure the lens is correctly aligned to collect the maximum amount of light [6].

The Scientist's Toolkit: Key Components for Spectrometer Optimization

Table 2: Essential Materials and Components for Optimizing Signal Intensity

| Item | Function | Considerations for Selection |

|---|---|---|

| High-Efficiency Diffraction Gratings | Disperses light onto the detector; its efficiency dictates signal strength. | Select blaze wavelength to match your primary laser. Choose groove density based on required resolution vs. range [32]. |

| Variable Entrance Slits | Controls light throughput and spectral resolution. | A wider slit increases signal but decreases resolution. Ensure the slit width is adjustable [33] [34]. |

| Laser Source | Provides the excitation light for techniques like Raman. | High-brightness lasers allow for tighter focus and improved scatter yield. Fine power control is essential for sensitive samples [33]. |

| Calibration Standards | Verifies and maintains wavelength and intensity accuracy. | Use standards like silicon or l-cystine for Raman. l-Cystine has sharp peaks ideal for testing spectral resolution [33]. |

| Cleaning Materials | Maintains optical clarity and throughput. | Use appropriate solvents and lint-free wipes to clean optical windows and lenses without damaging coatings [6]. |


Advanced Concepts: The Signal-to-Noise Trade-Off in Slit Width Selection

The relationship between slit width and signal-to-noise ratio (SNR) is not always linear and involves a complex balance of noise sources [35].

- Very Narrow Slits: Severely limit light throughput. The signal is weak, and the SNR is poor because of dominant photon noise and detector noise.

- Very Wide Slits: Allow more light, but also admit a larger amount of continuum background emission (e.g., from fluorescence or plasma). The photon noise of this background increases with the square root of the background intensity. Furthermore, flicker noise from source turbulence increases directly with background intensity. This can cause the SNR to decrease at larger slit widths [35].

Therefore, an optimum slit width exists that maximizes the SNR for a given experiment. This optimum can be found empirically by measuring a standard and plotting the SNR against slit width [35].
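The existence of an optimum can be illustrated with a toy model. The sketch assumes the line signal grows linearly with slit width, the continuum background grows with its square, and noise combines shot, flicker, and detector terms in quadrature. Every coefficient here is hypothetical; in practice the curve should be measured on a standard as described above.

```python
import math


def snr_vs_slit(width_um, line_gain=100.0, continuum_gain=2.0,
                flicker_fraction=0.01, detector_noise=50.0):
    """Toy SNR model for a line signal on a continuum background."""
    signal = line_gain * width_um                # assumed linear in slit width
    background = continuum_gain * width_um ** 2  # assumed quadratic in slit width
    variance = (signal + background                        # shot noise
                + (flicker_fraction * background) ** 2     # flicker noise
                + detector_noise ** 2)                     # detector noise
    return signal / math.sqrt(variance)


def best_slit(widths_um):
    """Return the scanned width with the highest modeled SNR."""
    return max(widths_um, key=snr_vs_slit)


widths = list(range(5, 205, 5))  # 5 to 200 um in 5 um steps
optimum = best_slit(widths)
print(f"modeled optimum slit width: {optimum} um, SNR {snr_vs_slit(optimum):.1f}")
print(f"SNR at 5 um: {snr_vs_slit(5):.1f}, at 200 um: {snr_vs_slit(200):.1f}")
```

The model is detector-noise limited at narrow widths and flicker-noise limited at wide widths, which is what produces the interior maximum.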

FAQs: Signal Averaging Fundamentals and Implementation

Q1: What is signal averaging and how does it improve my spectrometer's Signal-to-Noise Ratio (SNR)?

Signal averaging is a technique that improves SNR by combining multiple spectral scans. Because your signal is coherent (repeats) and noise is random, averaging reinforces the signal while noise tends to cancel out. The SNR improves with the square root of the number of scans averaged. For example, an SNR of 300:1 can be improved to 3000:1 by averaging 100 scans [7].
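The square-root rule can be checked with a small simulation. The sketch below generates synthetic scans (a Gaussian peak plus independent Gaussian noise, with a fixed random seed), averages 100 of them, and compares the residual noise with that of a single scan. The peak shape, noise level, and helper names are illustrative assumptions; real spectra with drift or correlated noise will improve less than this ideal case.

```python
import math
import random
import statistics


def noisy_scan(true_signal, noise_sd, rng):
    """One simulated scan: the true spectrum plus independent Gaussian noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in true_signal]


def average_scans(scans):
    """Point-by-point mean of several scans."""
    n = len(scans)
    return [sum(column) / n for column in zip(*scans)]


def residual_noise(spectrum, true_signal):
    """Standard deviation of the difference from the known true spectrum."""
    return statistics.pstdev([m - t for m, t in zip(spectrum, true_signal)])


rng = random.Random(0)
true_signal = [100.0 * math.exp(-((i - 250) / 20.0) ** 2 / 2.0) for i in range(500)]
noise_sd = 5.0
n_scans = 100

single = noisy_scan(true_signal, noise_sd, rng)
averaged = average_scans([noisy_scan(true_signal, noise_sd, rng) for _ in range(n_scans)])

improvement = residual_noise(single, true_signal) / residual_noise(averaged, true_signal)
print(f"noise reduction from averaging {n_scans} scans: {improvement:.1f}x "
      f"(ideal: {math.sqrt(n_scans):.0f}x)")
```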

Q2: What is the difference between traditional software averaging and hardware-accelerated averaging, like High Speed Averaging Mode (HSAM)?

Traditional software averaging occurs on your host computer after data is transferred from the spectrometer. In contrast, hardware-accelerated averaging performs the averaging directly within the spectrometer's firmware before sending the final averaged spectrum to the computer. This method is significantly faster, allowing for many more averages to be collected in the same amount of time, which yields a far superior SNR per unit time. This is critical for time-sensitive applications [7].

Q3: My signal is very weak and buried in noise. Are there advanced processing methods beyond simple averaging?

Yes, for very low SNR scenarios (e.g., SNR ~1), advanced wavelet transform-based denoising methods can be highly effective. One such method, Noise Elimination and Reduction via Denoising (NERD), can improve SNR by up to 3 orders of magnitude. It works by transforming the noisy signal into the wavelet domain, where it can intelligently separate noise coefficients from signal coefficients, even when they are of comparable magnitude, and then reconstruct a clean signal [36].
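To show the general idea of wavelet-domain denoising, here is a minimal sketch using a Haar transform with soft thresholding and the common universal threshold. This is a generic textbook approach chosen for illustration, not the NERD algorithm from [36], and it makes no claim to match that method's performance. The test signal, noise level, and function names are assumptions, and the input length must be a power of two.

```python
import math
import random
import statistics

SQRT2 = math.sqrt(2.0)


def haar_forward(values):
    """Full Haar decomposition; details[0] holds the finest-scale coefficients."""
    approx = list(values)
    details = []
    while len(approx) > 1:
        pairs = list(zip(approx[0::2], approx[1::2]))
        details.append([(a - b) / SQRT2 for a, b in pairs])
        approx = [(a + b) / SQRT2 for a, b in pairs]
    return approx, details


def haar_inverse(approx, details):
    """Rebuild the signal, starting from the coarsest level."""
    current = list(approx)
    for level in reversed(details):
        rebuilt = []
        for a, d in zip(current, level):
            rebuilt.extend(((a + d) / SQRT2, (a - d) / SQRT2))
        current = rebuilt
    return current


def soft_threshold(coefficient, threshold):
    """Shrink a coefficient toward zero by the threshold amount."""
    return math.copysign(max(abs(coefficient) - threshold, 0.0), coefficient)


def denoise(values):
    """Haar soft-threshold denoising with a noise estimate from the finest level."""
    approx, details = haar_forward(values)
    sigma = statistics.median([abs(c) for c in details[0]]) / 0.6745
    threshold = sigma * math.sqrt(2.0 * math.log(len(values)))
    shrunk = [[soft_threshold(c, threshold) for c in level] for level in details]
    return haar_inverse(approx, shrunk)


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


rng = random.Random(0)
clean = [math.exp(-((i - 128) / 20.0) ** 2 / 2.0) for i in range(256)]
noisy = [c + rng.gauss(0.0, 1.0) for c in clean]  # peak height comparable to noise
denoised = denoise(noisy)
print(f"RMSE before: {rmse(noisy, clean):.3f}, after: {rmse(denoised, clean):.3f}")
```

Dedicated wavelet libraries offer smoother wavelets and level-dependent thresholds, which usually preserve peak shapes better than the Haar basis used here.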

Q4: When should I use a boxcar averager instead of spectral scanning averaging?

A boxcar averager is particularly suitable for processing low-duty-cycle, repetitive signals, such as pulsed lasers or triggered events. It applies a time-domain gate window to the input signal, integrating only the portion of the signal where your data resides and ignoring the noisy intervals between pulses. This effectively rejects noise contributions that occur outside the signal period [37].

Troubleshooting Guide: Common Issues with Signal Averaging

This guide helps you diagnose and resolve common problems encountered when implementing averaging techniques.

| Problem | Possible Causes | Recommended Solutions |
|---|---|---|
| Inconsistent results between averaged runs | Sample degradation or reaction over time; unstable light source intensity; environmental fluctuations (temperature, vibrations) [38] [39]. | Ensure sample stability (e.g., protect from light); allow light source to warm up for 15-30 minutes; perform averaging sequentially on a stable sample to isolate instrument effects [39]. |
| Poor SNR even after extensive averaging | Signal intensity is too low; high baseline noise; inappropriate averaging technique for signal type [7] [36]. | Optimize signal strength (light source, fiber diameter, integration time); use hardware acceleration (e.g., HSAM) for more averages; consider advanced denoising (e.g., wavelet NERD) for very weak signals [7] [36]. |
| Signal distortion or loss of fine features | Over-averaging on a drifting signal; phase misalignment in complex averaging; boxcar gate misaligned with pulse signal [40] [37]. | Check system stability for drift; for complex phasor averaging, ensure accurate phase alignment prior to averaging; recalibrate boxcar trigger delay and gate width [40] [37]. |
| Averaging process is too slow for real-time needs | Using software-based averaging instead of hardware-accelerated averaging; communication latency between spectrometer and PC [7]. | Utilize hardware-accelerated modes like High Speed Averaging Mode (HSAM) available in spectrometers such as the Ocean SR2, which can perform averages much faster within the device firmware [7]. |

Experimental Protocols for SNR Enhancement

Protocol 1: Implementing High-Speed Hardware Averaging

This protocol details the steps to utilize High Speed Averaging Mode (HSAM) on compatible Ocean Optics spectrometers [7].

Required Materials:

- Ocean Optics spectrometer with HSAM capability (e.g., Ocean SR, ST, or HR Series).

- OceanDirect Software Developers Kit (SDK).

- OceanView spectroscopy software or custom software using the API.

Methodology:

- System Setup: Ensure your spectrometer is connected and recognized by the software. Configure your light source and optical setup for your experiment.

- Software Trigger Configuration: Set the spectrometer's acquisition mode to use a Software Trigger, as this is the only triggering mode that supports HSAM [7].

- Set Integration Time: Define the integration period (exposure time) for a single scan via the software configuration.

- Activate HSAM: Set the acquisition mode to High Speed Averaging Mode through the OceanDirect API. Define the number of averages (n) to be performed internally by the spectrometer.

- Initiate Acquisition: Send a software trigger command via the API. The spectrometer will then internally generate n integration cycles.

- Data Retrieval: After the last integration cycle, the spectrometer will output a single averaged spectrum, which is then transferred to your computer for analysis.

Protocol 2: Wavelet Denoising for Weak Signal Extraction (NERD)

This protocol is based on the NERD methodology for retrieving very weak signals from noisy data, as demonstrated in ESR spectroscopy [36].

Required Materials:

- A noisy spectroscopic data set (e.g., magnetic field, wavelength, or time domain).

- Computational software (e.g., MATLAB, Python with PyWavelets) capable of performing discrete wavelet transforms.

Methodology:

- Signal Transformation: Perform a Discrete Wavelet Transform (DWT) on your noisy input signal. This decomposes the signal into different frequency sub-bands (detail components) and a lower-frequency residual (approximation component) [36].

- Noise Thresholding: Apply a threshold to the wavelet coefficients to eliminate those predominantly representing noise. Standard methods (e.g., universal threshold) can be used, but this step may also remove weak signal coefficients.

- Signal Identification & Windowing (Key Step): Identify the signal location "windows" in the lowest-frequency detail components where the signal is clearest. Within these defined windows, restore the coefficients in the higher-frequency detail components (e.g., levels 3, 4, and 5) that were eliminated during thresholding, as they are likely to contain masked signal information [36].

- Signal Reconstruction: Perform an Inverse Discrete Wavelet Transform (IDWT) on the modified set of coefficients (the thresholded coefficients plus the restored coefficients within the signal windows) to reconstruct the denoised signal in the original domain.
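The four steps above can be sketched in a few lines. This is a simplified illustration of the idea, not the published NERD implementation from [36]. To stay dependency-free it uses a hand-written Haar transform where a library such as PyWavelets would normally be used. The universal threshold with a median-based noise estimate, the choice of `restore_levels`, and all function names are assumptions made for the example.

```python
import math
import random
import statistics

SQRT2 = math.sqrt(2.0)

def haar_dwt(signal, levels):
    """Return (approximation, details); details[0] is the finest (highest-frequency) level."""
    approx, details = list(signal), []
    for _ in range(levels):
        pairs = list(zip(approx[0::2], approx[1::2]))
        details.append([(a - b) / SQRT2 for a, b in pairs])
        approx = [(a + b) / SQRT2 for a, b in pairs]
    return approx, details

def haar_idwt(approx, details):
    """Invert haar_dwt, rebuilding from the coarsest level to the finest."""
    approx = list(approx)
    for detail in reversed(details):
        rebuilt = []
        for a, d in zip(approx, detail):
            rebuilt.extend([(a + d) / SQRT2, (a - d) / SQRT2])
        approx = rebuilt
    return approx

def denoise(signal, levels=5, restore_levels=(3, 4)):
    """Threshold detail coefficients, then restore them inside signal windows."""
    approx, details = haar_dwt(signal, levels)
    # Noise level estimated from the finest detail band (median absolute deviation).
    sigma = statistics.median(abs(d) for d in details[0]) / 0.6745
    threshold = sigma * math.sqrt(2.0 * math.log(len(signal)))  # universal threshold
    kept = [[d if abs(d) > threshold else 0.0 for d in level] for level in details]
    # Signal windows: positions that survive in the lowest-frequency detail band.
    windows = [i for i, d in enumerate(kept[-1]) if d != 0.0]
    for level in restore_levels:
        span = 2 ** (levels - level)  # finer coefficients covered by one coarse one
        for i in windows:
            for j in range(i * span, (i + 1) * span):
                kept[level - 1][j] = details[level - 1][j]
    return haar_idwt(approx, kept)

def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Synthetic test case: one Gaussian peak buried in unit-variance noise.
rng = random.Random(1)
clean = [5.0 * math.exp(-((i - 512) ** 2) / (2 * 20.0 ** 2)) for i in range(1024)]
noisy = [c + rng.gauss(0.0, 1.0) for c in clean]
denoised = denoise(noisy)
print(f"RMSE noisy: {rmse(noisy, clean):.3f}, denoised: {rmse(denoised, clean):.3f}")
```

The signal length must be divisible by 2 to the power of `levels`. A smoother wavelet than Haar would usually give a less blocky reconstruction.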

Protocol 3: Boxcar Averaging for Pulsed Signals

This protocol outlines the configuration of a boxcar averager to enhance the SNR of repetitive, pulsed signals [37].

Required Materials:

- A boxcar averager instrument (e.g., implemented on a platform like Moku:Pro) or equivalent software.

- Pulsed signal source and a trigger signal synchronized with the pulses.

Methodology:

- Trigger Adjustment: Feed the trigger signal to the boxcar averager. Adjust the trigger level to a value between the noise floor and the peak of the trigger signal to ensure stable triggering [37].

- Gate Synchronization: Adjust the trigger delay parameter to align the boxcar integration window precisely with the arrival of the pulse signal in time.

- Gate Width Optimization: Set the gate width to encompass the majority of the pulse signal. Note that the optimal SNR may not require capturing the entire pulse; sometimes, excluding sections where signal power is low compared to noise power can improve results [37].

- Averaging and Gain: Select the number of averaging cycles. A higher number improves SNR but reduces speed. Finally, adjust the gain stage to prevent output saturation or to minimize quantization errors.
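A software equivalent of the gating and averaging steps might look like the sketch below. It assumes the pulse train has already been digitized into a list of samples and that the trigger positions are known as sample indices. The function and variable names are hypothetical and do not correspond to any instrument's API.

```python
import random

def boxcar_average(trace, trigger_indices, delay, gate_width):
    """Average the gated portion of the trace over all triggers.

    delay and gate_width are in samples, measured from each trigger index.
    """
    gated_means = []
    for t in trigger_indices:
        gate = trace[t + delay : t + delay + gate_width]
        if len(gate) == gate_width:          # skip gates that run off the end
            gated_means.append(sum(gate) / gate_width)
    if not gated_means:
        raise ValueError("no complete gate windows found")
    return sum(gated_means) / len(gated_means)

# Synthetic low-duty-cycle pulse train: 20-sample pulses every 500 samples.
rng = random.Random(2)
period, n_pulses = 500, 200
pulse_start, pulse_len, amplitude, noise_sd = 100, 20, 1.0, 1.0
trace = []
for _ in range(n_pulses):
    for k in range(period):
        in_pulse = pulse_start <= k < pulse_start + pulse_len
        trace.append((amplitude if in_pulse else 0.0) + rng.gauss(0.0, noise_sd))
triggers = [p * period for p in range(n_pulses)]

aligned = boxcar_average(trace, triggers, delay=pulse_start, gate_width=pulse_len)
misaligned = boxcar_average(trace, triggers, delay=300, gate_width=pulse_len)
print(f"aligned gate: {aligned:.3f}, misaligned gate: {misaligned:.3f}")
```

The aligned gate recovers a value near the pulse amplitude, while the misaligned gate returns only averaged noise, which is why the trigger delay is set before the number of averages is increased.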

Workflow and Signaling Pathways

Diagram 1: High-Speed Averaging Mode (HSAM) Workflow

This diagram illustrates the internal process of hardware-accelerated averaging within the spectrometer [7].

Diagram 2: Wavelet Denoising (NERD) Logic

This flowchart outlines the decision process for the advanced wavelet denoising method [36].

Table 1: SNR Performance of Different Averaging Techniques

This table compares the theoretical and practical performance of various signal enhancement methods.

| Technique | Core Principle | SNR Improvement Formula | Key Advantage | Best For |
|---|---|---|---|---|
| Time Averaging [7] | Averaging multiple spectral scans in software. | SNR_new = SNR_original × √N | Simple to implement; universal application. | General purpose; most spectroscopic applications. |
| Spatial (Boxcar) Averaging [7] | Averaging signal across adjacent pixels. | SNR_new = SNR_original × √M | Reduces noise within a single scan. | Smoothing spectral features; reducing high-frequency pixel noise. |
| High Speed Averaging Mode (HSAM) [7] | Hardware-accelerated averaging in spectrometer firmware. | ~3x per second improvement (vs. single scan) due to vastly higher scan rate. | Speed; superior SNR per unit time. | Real-time or high-throughput applications. |
| Complex Phasor Averaging [40] | Averaging complex-valued signals (phase & magnitude). | Higher SNR than magnitude averaging after noise-bias correction and phase alignment. | Better noise floor reduction. | Systems with stable phase information (e.g., OCT). |
| Wavelet Denoising (NERD) [36] | Noise/Signal separation in wavelet domain. | Up to ~1000x (3 orders of magnitude) SNR improvement demonstrated. | Can recover signals at very low SNR (~1). | Extremely weak signals; extracting fine features from noise. |
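The spatial (boxcar) row can be made concrete with a short sketch. It assumes M is the number of adjacent pixels averaged, here 2 × `half_width` + 1, and that pixel noise is uncorrelated. The names and values are illustrative only.

```python
import random
import statistics

def boxcar_smooth(spectrum, half_width):
    """Replace each pixel by the mean of itself and half_width neighbours on each side."""
    n = len(spectrum)
    smoothed = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        smoothed.append(sum(spectrum[lo:hi]) / (hi - lo))  # window truncated at the edges
    return smoothed

rng = random.Random(3)
raw = [100.0 + rng.gauss(0.0, 1.0) for _ in range(5000)]   # flat spectrum plus noise
smoothed = boxcar_smooth(raw, half_width=4)                 # M = 9 pixels

noise_reduction = statistics.stdev(raw) / statistics.stdev(smoothed)
print(f"noise reduced by a factor of {noise_reduction:.2f} (sqrt(9) = 3)")
```

Unlike time averaging, this gain comes at the cost of spectral resolution, since features narrower than the averaging window are broadened.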

The Scientist's Toolkit: Essential Research Reagents & Materials

This table lists key hardware, software, and consumables essential for experiments focused on boosting SNR.

| Item | Function in Experiment |
|---|---|
| Spectrometer with HSAM (e.g., Ocean SR2) [7] | Provides hardware-accelerated averaging for fast, high-quality SNR improvement. |
| OceanDirect SDK [7] | API allowing custom control of spectrometer functions, including HSAM and triggering. |
| Stable Light Source & Optical Fibers | Provides consistent, high-intensity illumination to maximize the initial signal. |
| Quartz Cuvettes [39] | Essential for UV range measurements; standard glass/plastic cuvettes absorb UV light. |
| Lint-free Wipes | For proper cleaning of cuvette optical surfaces to prevent scattering and inaccurate blanks [39]. |
| Boxcar Averager Instrument | Specialized hardware for gating and averaging low-duty-cycle pulsed signals [37]. |
| Wavelet Analysis Software | Computational tool for implementing advanced denoising algorithms like NERD [36]. |
| Stable Temperature Controller [41] | Maintains spectrometer temperature, reducing thermal drift that can cause signal instability. |


Leveraging Optical Fibers and Accessories to Minimize Signal Loss

Troubleshooting Guide: Common FAQs

FAQ: The signal intensity in my spectrometer setup is very low. What are the most common causes?

Low signal intensity is frequently caused by issues at the connection points or along the fiber path. The most prevalent causes include:

- Contaminated Connectors: Dust, dirt, or oils on the fiber end-face can dramatically block and scatter light [42] [43].

- Physical Bending Loss: Sharp bends or kinks in the fiber cable can cause light to escape from the core [42] [44] [45].

- Poorly Mated Connectors: Mating incompatible connector types (e.g., UPC with APC) or connectors with minor misalignments leads to high insertion loss and poor (low) return loss [42] [44].

- Signal Power Level Issues: The incoming optical signal may be outside the optimal operating range of your detector—either too weak or so strong that it saturates the receiver [42].

- Aging or Degraded Components: Over time, the sensitivity of optical receivers can degrade, especially in harsh environmental conditions [42].

FAQ: How can I enhance a weak optical signal from a sample in a spectroscopic experiment?

Beyond ensuring your fiber setup is optimal, you can employ specialized techniques to enhance the signal at the sample level:

- Use a Lens System: Place a large-diameter, small f-number lens between your light source (e.g., a plasma) and the collection fiber to focus more photons into the fiber's core, thereby increasing signal strength [46].

- Investigate Signal-Enhanced Substrates: For techniques like Raman spectroscopy, using samples on substrates with metal nanoparticles (Surface Enhanced Raman Spectroscopy or SERS) can increase the Raman signal strength by many orders of magnitude [47].

- Optimize Fiber Core Diameter: For collecting light from a divergent source, switching to a fiber with a larger core aperture diameter (e.g., 200-400 µm instead of 10 µm) can capture a greater amount of light [46].

FAQ: What is an acceptable level of signal loss (dB) for a typical single-mode fiber system?

Acceptable loss depends on the application, but for single-mode fibers, a loss of 0.2 to 0.5 dB/km is considered standard [45]. The total end-to-end loss of your link must fall within the system's "optical budget," which accounts for loss from the fiber itself, every connector, and every splice [42] [44].
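A loss budget of this kind reduces to simple addition in dB. The sketch below assumes illustrative per-connector and per-splice losses and hypothetical transmitter and receiver figures. None of these numbers come from the cited sources, so substitute the values from your component datasheets.

```python
def link_loss_db(length_km, fiber_db_per_km, n_connectors, connector_db,
                 n_splices, splice_db):
    """Total end-to-end loss: fiber attenuation plus every connector and splice."""
    return (length_km * fiber_db_per_km
            + n_connectors * connector_db
            + n_splices * splice_db)

def margin_db(source_power_dbm, detector_sensitivity_dbm, total_loss_db):
    """Remaining headroom: optical budget minus total link loss."""
    optical_budget = source_power_dbm - detector_sensitivity_dbm
    return optical_budget - total_loss_db

# Illustrative numbers only (assumptions, not from the source).
loss = link_loss_db(length_km=2.0, fiber_db_per_km=0.35,
                    n_connectors=2, connector_db=0.5,
                    n_splices=1, splice_db=0.1)
margin = margin_db(source_power_dbm=-3.0, detector_sensitivity_dbm=-20.0,
                   total_loss_db=loss)
print(f"link loss: {loss:.2f} dB, margin: {margin:.2f} dB")
```

A positive margin means the link fits within the budget. For short laboratory patch cords the connector terms usually dominate over the per-kilometer fiber attenuation.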

FAQ: What is the difference between Insertion Loss and Return Loss, and why does it matter?

- Insertion Loss (IL) is the measure of light lost between two points, for example, as it passes through a connector or splice. It is primarily caused by misalignment, contamination, or poor mating [44]. You want Insertion Loss to be as low as possible.

- Return Loss (RL) is the measure of light reflected back toward the source due to a refractive index mismatch at a connection point [44]. High back reflection can disrupt laser sources. You want Return Loss to be as high as possible (e.g., >50 dB). Using Angled Physical Contact (APC) connectors is the best way to minimize back reflection [42] [44].
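Both quantities are power ratios expressed in decibels, which is why one should be small and the other large. The sketch below uses the standard dB definitions; the power readings are made-up example values.

```python
import math

def insertion_loss_db(power_in, power_out):
    """IL = 10*log10(P_in / P_out); lower is better."""
    return 10.0 * math.log10(power_in / power_out)

def return_loss_db(power_in, power_reflected):
    """RL = 10*log10(P_in / P_reflected); higher is better."""
    return 10.0 * math.log10(power_in / power_reflected)

# Example readings in mW (illustrative values).
il = insertion_loss_db(power_in=1.0, power_out=0.9)
rl = return_loss_db(power_in=1.0, power_reflected=1e-5)
print(f"insertion loss: {il:.2f} dB, return loss: {rl:.1f} dB")
```

Here 90% transmission corresponds to roughly 0.46 dB of insertion loss, and a reflection of one part in 100,000 corresponds to a return loss of 50 dB.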

Quantitative Data for System Design

The following tables summarize key performance metrics and limits to guide your experimental setup and component selection.

Table 1: Typical Attenuation Standards for Optical Fibers

| Fiber Type | Core Size | Typical Attenuation | Best For |

|---|---|---|---|