Spectroscopic Method Validation in 2025: A Guide to Parameters, Regulations, and Advanced Applications for Drug Development

Abstract

This article provides a comprehensive guide for researchers, scientists, and drug development professionals on validating spectroscopic methods in line with 2025 regulatory trends, including ICH Q2(R2) and Q14. It covers foundational principles, from accuracy and precision to specificity, and explores their application across modern techniques like NIR, MIR, and Raman spectroscopy. The content details troubleshooting common issues, optimizing methods using Quality-by-Design (QbD) and Artificial Intelligence (AI), and offers a practical framework for risk-based validation and cross-technique comparison to ensure regulatory compliance and analytical excellence.

Core Principles and Regulatory Landscape of Spectroscopic Method Validation

In the field of analytical science, particularly for spectroscopic techniques, the reliability of any method hinges on its properly validated performance characteristics. For researchers, scientists, and drug development professionals, demonstrating that an analytical procedure is fit for purpose is a regulatory and scientific necessity. This guide provides a detailed comparison of four fundamental validation parameters—Accuracy, Precision, Specificity, and Linearity—by framing them within the context of spectroscopic analysis. We will explore their definitions, methodologies for determination, and performance across different spectroscopic techniques, supported by experimental data and standardized protocols.

Parameter Definitions and Core Concepts

The following table summarizes the core objectives and foundational concepts for each key validation parameter.

Table 1: Core Definitions of Key Validation Parameters

| Parameter | Core Definition | Primary Objective in Validation |

|---|---|---|

| Accuracy | The closeness of agreement between a test result and the accepted true value [1] [2]. | To ensure the method provides results that are unbiased and close to the true analyte concentration [3]. |

| Precision | The closeness of agreement (degree of scatter) between a series of measurements obtained from multiple sampling of the same homogeneous sample [1]. | To quantify the random error and ensure the method produces reproducible results under specified conditions [4]. |

| Specificity | The ability to assess unequivocally the analyte in the presence of components that may be expected to be present [5] [3]. | To demonstrate that the method can accurately measure the analyte despite potential interferents like impurities, degradants, or matrix components [1]. |

| Linearity | The ability of the method to obtain test results that are directly proportional to the concentration of the analyte in a given range [5] [1]. | To establish that the method provides an accurate and precise proportional response across the intended working range [3]. |

Experimental Protocols for Determination

A robust validation requires carefully designed experiments. Below are standard protocols for determining each parameter, applicable to spectroscopic techniques such as UV-Vis, FT-IR, and Raman spectroscopy.

Accuracy

The most common protocol for determining accuracy is the spike recovery method [2].

- Protocol: A known amount of a pure reference standard of the analyte is added (spiked) into a known volume of the sample matrix. The spiked sample is then analyzed using the method under validation. Recovery is calculated as the measured amount expressed as a percentage of the theoretically added amount [1] [2].

- Standard Design: Accuracy should be established across the specified range of the procedure. According to ICH guidelines, this is typically demonstrated using a minimum of 9 determinations over a minimum of 3 concentration levels (e.g., 80%, 100%, and 120% of the test concentration) with 3 replicates each [1] [3].

- Data Interpretation: The mean recovery percentage is calculated. Acceptance criteria vary by industry and analyte but are often set within 98-102% for the drug substance at the target level [1].
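The recovery calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not a validated implementation: the spiked amounts, replicate results, and the 98-102% window are hypothetical example values, and `percent_recovery` and `recovery_summary` are names introduced here.

```python
from statistics import mean

def percent_recovery(measured, added):
    """Recovery (%) = measured amount / theoretically added amount * 100."""
    if added <= 0:
        raise ValueError("added amount must be positive")
    return 100.0 * measured / added

def recovery_summary(data, low=98.0, high=102.0):
    """Mean recovery per spike level and a pass/fail flag for each level.

    `data` maps the added amount to its replicate measured amounts.
    The default window (98-102 %) is an assumed acceptance criterion.
    """
    summary = {}
    for added, replicates in data.items():
        level_mean = mean(percent_recovery(m, added) for m in replicates)
        summary[added] = (level_mean, low <= level_mean <= high)
    return summary

# Hypothetical 3 levels x 3 replicates design (amounts in mg).
spike_data = {
    8.0: [7.95, 8.02, 7.98],      # 80 % of the test concentration
    10.0: [10.05, 9.97, 10.01],   # 100 %
    12.0: [11.90, 12.08, 12.03],  # 120 %
}

for added, (level_mean, ok) in recovery_summary(spike_data).items():
    print(f"{added:5.1f} mg added: mean recovery {level_mean:6.2f} % -> {'pass' if ok else 'fail'}")
```

Reporting recovery per level, rather than only one pooled mean, makes a concentration-dependent bias easier to spot.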

Precision

Precision is investigated at multiple levels, with repeatability being the most fundamental.

- Protocol (Repeatability): Multiple samplings are taken from a single, homogeneous sample solution. Each sample is processed and analyzed independently using the same method, by the same analyst, on the same instrument, over a short time interval [1].

- Standard Design: A minimum of 6 determinations at 100% of the test concentration or a minimum of 9 determinations covering the specified range (e.g., 3 concentrations/3 replicates each) is required [1].

- Data Interpretation: Precision is expressed statistically as the standard deviation (SD) and the relative standard deviation (RSD%) of the measured results. A lower RSD indicates higher precision [1].
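As a small worked example of the statistics named above, the following sketch computes the sample standard deviation and RSD% for six replicate determinations. The replicate values are hypothetical, and `rsd_percent` is a helper name introduced for illustration.

```python
from statistics import mean, stdev

def rsd_percent(values):
    """Relative standard deviation (%) = sample SD / |mean| * 100."""
    if len(values) < 2:
        raise ValueError("at least two determinations are needed")
    m = mean(values)
    if m == 0:
        raise ValueError("RSD is undefined for a zero mean")
    return 100.0 * stdev(values) / abs(m)

# Hypothetical repeatability data: six determinations at 100 % of the
# test concentration, expressed as % of label claim.
replicates = [100.2, 99.8, 100.1, 99.9, 100.0, 100.0]

print(f"SD  = {stdev(replicates):.3f}")
print(f"RSD = {rsd_percent(replicates):.3f} %")
```

`statistics.stdev` uses the n-1 (sample) denominator, which is the usual choice for a small set of replicates.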

Specificity

For identity tests or assays in spectroscopy, specificity is demonstrated by showing that the analyte signal is unique.

- Protocol: The spectra of the following are compared: a) the pure analyte, b) a placebo or sample matrix without the analyte, and c) a sample containing the analyte plus potential interferents (impurities, degradants, excipients) [5] [6].

- Application in Spectroscopy: In techniques like Raman spectroscopy, the method's specificity is confirmed if the analyte's characteristic spectral peak(s) (e.g., a key vibration band) can be clearly identified and are unobscured by peaks from the placebo or other components [6]. For quantitative methods, it must be shown that interferents do not contribute to the quantitative measurement of the analyte.

- Data Interpretation: The method is considered specific if the signal from the analyte is resolved and unaffected by the presence of other components.
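One simple way to put a number on the comparison above is to express the placebo signal inside the analyte's band window as a percentage of the analyte signal. The sketch below assumes spectra stored as lists of (position, intensity) pairs; the spectra, the 855-865 window, and the function names are all hypothetical, and any acceptance limit would have to be justified for the specific method.

```python
def band_max(spectrum, lo, hi):
    """Largest intensity within [lo, hi]; spectrum is a list of (x, intensity)."""
    in_window = [y for x, y in spectrum if lo <= x <= hi]
    if not in_window:
        raise ValueError("no data points inside the band window")
    return max(in_window)

def interference_percent(analyte, placebo, lo, hi):
    """Placebo response in the analyte band as a percentage of the analyte response."""
    return 100.0 * band_max(placebo, lo, hi) / band_max(analyte, lo, hi)

# Hypothetical baseline-corrected spectra (arbitrary intensity units).
analyte_spectrum = [(850, 0.02), (855, 0.40), (860, 0.95), (865, 0.38), (870, 0.03)]
placebo_spectrum = [(850, 0.010), (855, 0.012), (860, 0.009), (865, 0.011), (870, 0.010)]

pct = interference_percent(analyte_spectrum, placebo_spectrum, 855, 865)
print(f"Placebo contributes {pct:.2f} % of the analyte band intensity")
```

A single-band check like this only suits well-resolved peaks; overlapping bands generally call for multivariate (chemometric) evaluation instead.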

Linearity

Linearity is established by preparing and analyzing a series of standard solutions at different concentration levels.

- Protocol: A series of standard solutions is prepared, typically from a single stock solution by serial dilution or from separate weighings. The solutions are analyzed, and the instrumental response (e.g., absorbance in UV-Vis, band intensity or area in FT-IR and Raman) is recorded for each [1] [3].

- Standard Design: A minimum of 5 concentration levels is recommended to demonstrate linearity [1] [3]. The range should bracket the expected concentration of samples, for example, from 80% to 120% of the test concentration for an assay [3].

- Data Interpretation: The response is plotted against the concentration, and a regression line is calculated using the least-squares method. The coefficient of determination (R²), y-intercept, and slope of the regression line are reported. An R² value > 0.995 is often expected for a strong linear relationship, though > 0.95 may be acceptable in some contexts [1]. Because a high R² alone does not prove linearity, visual inspection of the plot is also critical [5].
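The regression step can be illustrated with an ordinary least-squares fit written out explicitly. The concentrations and absorbances below are hypothetical, and `linear_fit` is a name introduced here; in practice a statistics package would normally do this, but the arithmetic is the same.

```python
from statistics import mean

def linear_fit(x, y):
    """Ordinary least-squares line y = intercept + slope * x, plus R^2."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired points")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    syy = sum((yi - my) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r_squared = 1.0 - ss_res / syy
    return slope, intercept, r_squared

# Hypothetical five-level calibration (80-120 % of a 10 mg/mL target).
conc = [8.0, 9.0, 10.0, 11.0, 12.0]               # mg/mL
absorbance = [0.402, 0.449, 0.501, 0.552, 0.598]  # absorbance units

slope, intercept, r2 = linear_fit(conc, absorbance)
print(f"slope = {slope:.4f}, intercept = {intercept:.4f}, R^2 = {r2:.5f}")
```

Printing or plotting the residuals alongside R² helps reveal curvature that the summary statistic can hide.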

Comparative Performance in Spectroscopic Techniques

Different analytical techniques present unique advantages and challenges for meeting validation parameters. The following table compares these aspects for common spectroscopic methods.

Table 2: Comparison of Validation Parameter Performance Across Spectroscopic Techniques

| Technique | Accuracy & Precision | Specificity | Linearity | Key Considerations & Supporting Data |

|---|---|---|---|---|

| FT-IR Spectroscopy | High precision due to Fellgett's and Jacquinot's advantages [7]. Accuracy can be high with proper calibration. | High for molecular structures due to unique "fingerprint" regions [7]. | Demonstrable over defined ranges; requires careful baseline correction [7]. | ✓ Application Example: Quantification of protein secondary structure showed >90% reproducibility [7]. ✓ Pitfall: Overlapping spectral bands in complex mixtures can challenge specificity without chemometrics. |

| Raman Spectroscopy | Can achieve high precision and accuracy, as demonstrated in pharmaceutical validation studies [6]. | High; can analyze aqueous solutions directly (water is a weak scatterer) and offers high chemical specificity [6]. | Linearity validated for paracetamol in the range of 7.0-13.0 mg/mL with a high correlation coefficient [6]. | ✓ Application Example: Paracetamol determination in a liquid product showed no significant difference from HPLC reference methods in t- and F-tests [6]. ✓ Advantage: Minimal sample preparation reduces errors and improves precision. |

| UV-Vis Spectroscopy | Generally high precision. Accuracy can be compromised in complex matrices without separation. | Low to Moderate; measures chromophores, so any compound with similar absorption can interfere. | Generally excellent linearity over a wide range, obeying the Beer-Lambert law. | ✓ Pitfall: Lacks inherent specificity for mixtures, often requiring separation techniques or derivative spectroscopy to resolve overlaps. |
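The t- and F-test comparison mentioned for Raman versus an HPLC reference method can be sketched as follows. The two result sets are hypothetical, the function names are introduced here, and the critical values are tabulated figures for these sample sizes (two-sided, α = 0.05) that would need to be looked up again for any other design.

```python
from math import sqrt
from statistics import mean, variance

def f_statistic(a, b):
    """Ratio of the larger sample variance to the smaller one (always >= 1)."""
    va, vb = variance(a), variance(b)
    if min(va, vb) == 0:
        raise ValueError("F is undefined when a sample variance is zero")
    return max(va, vb) / min(va, vb)

def pooled_t_statistic(a, b):
    """Two-sample t statistic assuming equal variances (pooled estimate)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))

# Hypothetical assay results (mg/mL) for the same batch by both methods.
raman = [10.02, 9.98, 10.05, 9.97, 10.01]
hplc = [10.00, 10.03, 9.99, 10.02, 9.98]

T_CRIT = 2.306  # tabulated two-sided t, alpha = 0.05, 8 degrees of freedom
F_CRIT = 9.60   # tabulated F, upper 2.5 % point, (4, 4) degrees of freedom

t_value = pooled_t_statistic(raman, hplc)
f_value = f_statistic(raman, hplc)
no_significant_difference = abs(t_value) < T_CRIT and f_value < F_CRIT
print(f"t = {t_value:.3f}, F = {f_value:.3f}, equivalent: {no_significant_difference}")
```

The F-test compares the spread of the two methods (precision), while the t-test compares their means (bias relative to the reference).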

Logical Workflow for Method Validation

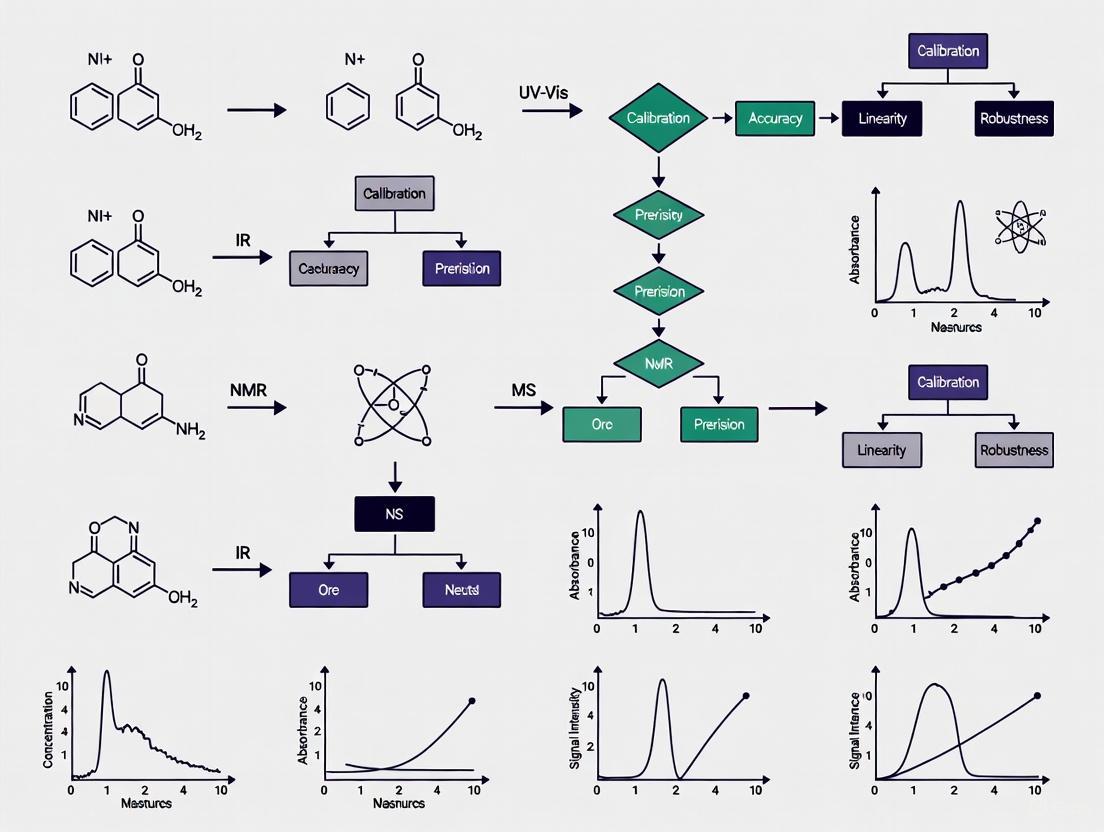

The process of validating a spectroscopic method follows a logical sequence, where earlier parameters often form the foundation for subsequent ones. The following diagram visualizes this workflow and the core objectives of each parameter.

The Scientist's Toolkit: Essential Research Reagent Solutions

The following reagents and materials are fundamental for conducting the validation experiments described in this guide.

Table 3: Essential Research Reagents and Materials for Validation Studies

| Item | Function in Validation | Critical Application Note |

|---|---|---|

| Certified Reference Standard | Serves as the benchmark for identity, calibration (linearity), and accuracy (recovery) experiments [2]. | The purity of the standard must be well-characterized and certified, as inaccuracies here propagate through all quantitative results [2]. |

| Placebo Matrix | Used in specificity testing to demonstrate that the signal is from the analyte and not from excipients or the sample matrix [6]. | For a drug product, this is a mixture of all non-active ingredients. It is critical for proving the method's selectivity. |

| Sample Matrix for Spiking | The actual material (e.g., drug substance, placebo, biological fluid) used in spike recovery experiments to determine accuracy [1] [2]. | The matrix should be as representative as possible of the real test samples to accurately assess matrix effects. |

| High-Purity Solvents & Reagents | Used for preparing blanks, standard solutions, and sample dilutions. | Impurities can contribute to baseline noise, affect linearity, and lead to inaccurate quantification, especially at low analyte levels. |

| Standardized Validation Protocols | Documents (e.g., ICH Q2(R2)) providing the formal framework, experimental designs, and acceptance criteria for validation [1] [3]. | Ensures the validation study meets regulatory standards and scientific rigor, facilitating reproducibility and reliability. |

The rigorous validation of analytical methods is a cornerstone of reliable scientific research and drug development. As demonstrated, Accuracy, Precision, Specificity, and Linearity are distinct yet interconnected parameters that collectively define the performance of a spectroscopic technique. While FT-IR and Raman spectroscopy often exhibit high inherent specificity due to their molecular "fingerprinting" capabilities, all techniques require systematic evaluation through standardized protocols. The choice of technique and the stringency of validation must always align with the method's intended purpose. By adhering to the detailed experimental protocols and comparative insights provided in this guide, scientists can ensure their spectroscopic methods generate data that is trustworthy, reproducible, and fit for regulatory submission.

In the pharmaceutical industry, demonstrating that analytical methods are reliable and fit for their intended purpose is a fundamental regulatory requirement. The regulatory framework for analytical procedures has recently evolved with the introduction of new and revised guidelines. The International Council for Harmonisation (ICH) finalized two key documents: ICH Q2(R2) on the validation of analytical procedures and ICH Q14 on analytical procedure development. These guidelines, adopted in late 2023, represent a significant shift towards a more holistic, science- and risk-based lifecycle approach [8].

Alongside these ICH guidelines, regional regulatory bodies like the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) have their own expectations and guidance documents. Understanding the synergies and differences between the ICH, FDA, and EMA frameworks is crucial for researchers, scientists, and drug development professionals to ensure regulatory compliance and robust analytical practices. This guide provides a comparative analysis of these frameworks, placing them in the context of modern spectroscopic techniques and method validation.

Comparative Analysis of ICH Q2(R2), Q14, FDA, and EMA Guidelines

The following table summarizes the core focus, lifecycle view, and key tools or concepts endorsed by each regulatory guideline and agency concerning analytical procedures.

| Aspect | ICH Q2(R2) | ICH Q14 | FDA | EMA |

|---|---|---|---|---|

| Core Focus | Validation of analytical procedures; definitions and methodology for validation tests [9]. | Science- and risk-based approaches for analytical procedure development and lifecycle management [10]. | Enforcement of validation standards; has released specific guidance on topics like Bioanalytical Method Validation (BMV) for Biomarkers [11]. | Adherence to GMP; detailed requirements outlined in Annex 15; has a reflection paper on method transfer and 3Rs (Replacement, Reduction, Refinement) [12]. |

| Lifecycle Approach | Integrated with Q14; emphasizes that validation is a lifecycle activity [8]. | Explicitly outlines an Analytical Procedure Lifecycle from development through post-approval changes [10] [8]. | Supports a lifecycle model, evident in process validation guidance (3 stages) and the adoption of ICH Q12/Q14 principles for post-approval changes [13] [10]. | Supports a lifecycle approach, emphasizing ongoing process verification and product quality reviews [13]. |

| Key Tools/Concepts | Validation parameters (Accuracy, Precision, Specificity, etc.); application to multivariate or complex procedures [14]. | Analytical Target Profile (ATP); Enhanced Approach; Established Conditions (ECs); Change Management Protocols [10] [8]. | Recommends ICH M10 as a starting point for biomarker BMV, despite its stated non-applicability; encourages a Context of Use (COU)-driven strategy [11]. | Encourages use of a Validation Master Plan (VMP); more flexible on batch numbers for validation, relying on scientific justification [13]. |

Experimental Protocols for Analytical Procedure Validation

Protocol for Validation Based on ICH Q2(R2)

A robust validation protocol for a spectroscopic technique must demonstrate that the method meets predefined criteria for its intended use, as outlined in ICH Q2(R2). The following workflow details the key experiments and their logical sequence.

Figure 1. Sequential Workflow for Analytical Method Validation based on ICH Q2(R2).

Step-by-Step Methodology:

- Define the Analytical Target Profile (ATP): Before experimentation, draft an ATP. This is a foundational concept from ICH Q14 that precedes validation. The ATP is a predefined objective that articulates the procedure's intended purpose, specifying the analyte(s), the matrix, and the required performance criteria (e.g., target precision and accuracy) that the method must demonstrate upon validation [8].

- Specificity/Selectivity: Using a spectrophotometer or spectrometer, analyze a blank sample (e.g., placebo or sample matrix) and a sample spiked with the target analyte. The method should be able to unequivocally assess the analyte in the presence of components like impurities, degradation products, or matrix interferences that may be expected to be present [9].

- Linearity and Range: Prepare a minimum of 5 concentrations of the analyte across the specified range (e.g., 50-150% of the target concentration). Analyze each concentration in triplicate. Plot signal response (e.g., absorbance, peak area) versus concentration and calculate the correlation coefficient, y-intercept, and slope of the regression line using statistical software. The range is the interval between the upper and lower concentration levels for which linearity, accuracy, and precision have been demonstrated [9].

- Accuracy: Typically assessed using a spike/recovery experiment. Analyze samples of the matrix spiked with known quantities of the analyte at multiple levels (e.g., 50%, 100%, 150%) within the range. The mean value of the recovered analyte is compared to the true added value, and the percentage recovery is calculated [9].

- Precision:

  - Repeatability: Measure a homogeneous sample at 100% of the test concentration at least 6 times. Calculate the relative standard deviation (RSD) of the measurements.

  - Intermediate Precision: Perform the analysis on different days, with different analysts, or using different instruments. The combined RSD from the repeatability and intermediate precision studies demonstrates the method's reliability within a single laboratory [9].

- Detection Limit (LOD) & Quantitation Limit (LOQ): Estimate these limits either from the signal-to-noise ratio or from the standard deviation of the response and the slope of the calibration curve. With the signal-to-noise approach, a ratio of 3:1 is generally accepted for LOD and 10:1 for LOQ [9].

- Robustness: Introduce small, deliberate variations in method parameters (e.g., wavelength, temperature, sample preparation time) to evaluate the method's capacity to remain unaffected by these changes. An experimental design (e.g., Design of Experiments, DoE) is often employed for this purpose.
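For the detection and quantitation limit step in the list above, the approach based on the standard deviation of the response (σ) and the calibration slope (S) uses the ICH formulas LOD = 3.3σ/S and LOQ = 10σ/S. A minimal sketch follows; the σ and slope values are hypothetical, and the function names are introduced here for illustration.

```python
def detection_limit(sigma, slope):
    """LOD = 3.3 * sigma / slope (sigma: SD of the response, slope: calibration slope)."""
    if slope <= 0 or sigma < 0:
        raise ValueError("slope must be positive and sigma non-negative")
    return 3.3 * sigma / slope

def quantitation_limit(sigma, slope):
    """LOQ = 10 * sigma / slope."""
    if slope <= 0 or sigma < 0:
        raise ValueError("slope must be positive and sigma non-negative")
    return 10.0 * sigma / slope

# Hypothetical values: SD of blank responses in absorbance units and a
# calibration slope in absorbance units per mg/mL.
sigma_blank = 0.002
calibration_slope = 0.05

print(f"LOD = {detection_limit(sigma_blank, calibration_slope):.3f} mg/mL")
print(f"LOQ = {quantitation_limit(sigma_blank, calibration_slope):.3f} mg/mL")
```

Here σ could come from replicate blank measurements or from the residual standard deviation of the regression line; whichever source is used should be stated in the validation report.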

Protocol for Managing Post-Approval Changes under ICH Q14

ICH Q14 provides a framework for managing changes to analytical procedures throughout their lifecycle. The following diagram outlines the science- and risk-based process for implementing a post-approval change.

Figure 2. ICH Q14 Workflow for Managing Post-Approval Changes to Analytical Procedures.

Step-by-Step Methodology:

- Risk Assessment: Initiate the process by conducting a risk assessment to evaluate the potential impact of the proposed change. Factors considered include the complexity of the test, the extent of the modification, and the procedure's relevance to product quality and Critical Quality Attributes (CQAs). This assessment classifies the change as high-, medium-, or low-risk [10].

- Bridging Studies: Design and execute bridging studies to directly compare the performance of the new or modified analytical procedure against the existing one. These studies use representative samples and are tailored to the nature of the change. The goal is to build a scientific understanding of the revised procedure [10].

- Confirm Performance Meets ATP: Conduct appropriate validation studies to confirm that the modified procedure continues to meet the performance criteria defined in the Analytical Target Profile (ATP). This ensures the method remains fit for its intended purpose. Update the procedure description, including parameters for system suitability and the analytical control strategy if needed [10].

- Assess Regulatory Impact: Determine the regulatory reporting category for the change (e.g., prior approval, notification, or do-and-report). This assessment is guided by tools like Established Conditions (ECs) and Post-Approval Change Management Protocols (PACMPs), which may have been agreed upon with regulatory authorities beforehand to streamline the process [10].

- Implement Change: After completing the necessary regulatory actions for each region, the change can be implemented in the Quality Control laboratory [10].
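The "Confirm Performance Meets ATP" step above amounts to comparing validation results for the modified procedure against predefined criteria. The sketch below shows one possible way to encode that check; the `AnalyticalTargetProfile` class, its fields, and the numeric limits are hypothetical and are not taken from ICH Q14, which leaves the specific criteria to the applicant.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AnalyticalTargetProfile:
    """Hypothetical ATP holding two performance criteria."""
    recovery_low: float     # lowest acceptable mean recovery, %
    recovery_high: float    # highest acceptable mean recovery, %
    max_rsd_percent: float  # highest acceptable RSD, %

def atp_failures(atp, mean_recovery, rsd_percent):
    """Return the list of criteria that the results fail (empty list = ATP met)."""
    failures = []
    if not atp.recovery_low <= mean_recovery <= atp.recovery_high:
        failures.append("accuracy")
    if rsd_percent > atp.max_rsd_percent:
        failures.append("precision")
    return failures

# Assumed criteria for an assay method; real limits come from the ATP itself.
assay_atp = AnalyticalTargetProfile(recovery_low=98.0, recovery_high=102.0,
                                    max_rsd_percent=2.0)

print(atp_failures(assay_atp, mean_recovery=99.6, rsd_percent=0.8))  # criteria met
print(atp_failures(assay_atp, mean_recovery=97.0, rsd_percent=2.5))  # both criteria fail
```

Keeping the ATP as an immutable object separate from the results mirrors the idea that the target is fixed before the bridging and validation data are generated.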

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and reagents essential for conducting validation experiments for spectroscopic techniques.

| Item Name | Function & Role in Validation |

|---|---|

| High-Purity Reference Standard | Serves as the benchmark for identifying and quantifying the analyte. Its purity is critical for accurate calibration, and for determining linearity, accuracy, and limits of detection [9]. |

| Placebo Matrix | Contains all components of the sample except the active analyte. It is used in specificity/selectivity experiments to prove the method does not generate interfering signals from excipients or the sample matrix itself [9]. |

| Forced Degradation Samples | Samples of the drug substance or product stressed under conditions (e.g., heat, light, acid/base) to generate degradants. Analysis of these samples is key to demonstrating the method's specificity and stability-indicating properties [9]. |

| Surrogate Matrix | Used in biomarker bioanalysis when a true blank matrix is unavailable. It allows for the preparation of calibration standards and is crucial for demonstrating accuracy and precision via spike/recovery experiments [11]. |

| System Suitability Standards | A prepared reference solution used to verify that the entire analytical system (instrument, reagents, accessories) is performing adequately at the time of testing. It is a routine control for precision and reproducibility [10]. |

The regulatory framework for analytical procedures is converging towards an integrated lifecycle approach, as championed by ICH Q2(R2) and Q14. While regional agencies like the FDA and EMA align with these harmonized principles, nuances remain in their implementation focus and existing guidance documents. For spectroscopic techniques, a successful validation strategy begins with a well-defined ATP and employs a risk-based approach to both initial validation and subsequent lifecycle management. Mastering this framework enables scientists to develop more robust and reliable methods, streamline post-approval changes, and ultimately ensure the consistent quality and safety of pharmaceutical products.

The Role of Data Integrity and ALCOA+ Principles in Spectroscopy

In the field of spectroscopic analysis, where data forms the fundamental basis for critical decisions in drug development and material characterization, data integrity is not merely a regulatory requirement but a scientific necessity. The ALCOA+ framework provides a structured set of principles—Attributable, Legible, Contemporaneous, Original, Accurate, Complete, Consistent, Enduring, and Available—that ensure the reliability and trustworthiness of spectroscopic data throughout its entire lifecycle [15] [16]. For researchers and scientists working with spectroscopic techniques, adhering to these principles is paramount for producing valid, reproducible results that withstand regulatory scrutiny [17] [18].

The connection between data integrity and spectroscopy has intensified with the increasing adoption of electronic data systems and automated spectroscopic platforms. Regulatory agencies including the FDA, EMA, and WHO explicitly expect implementation of ALCOA+ principles in Good Manufacturing Practice (GMP) environments where spectroscopic data supports product quality assessments [16]. This article explores the practical application of ALCOA+ in spectroscopy, comparing implementation across software platforms and providing methodological guidance for researchers seeking to validate their spectroscopic methods within this rigorous framework.

Understanding ALCOA+ Principles in Spectroscopic Context

The ALCOA+ framework forms the cornerstone of modern data integrity practices in regulated scientific environments, with each principle addressing specific aspects of data reliability essential for spectroscopic analysis [15] [16].

Core ALCOA Principles

- Attributable: Data must be traceable to the person who generated it, the specific instrument used, and the time of creation [15] [18]. In spectroscopy, this means every spectrum or measurement must be linked to the analyst, the spectrometer, and the exact date and time of acquisition [18].

- Legible: Data must remain readable and accessible throughout its required retention period [15] [16]. For spectroscopic data, this involves ensuring that spectral files remain interpretable by software systems despite technology upgrades and that human-readable forms are preserved [18].

- Contemporaneous: Documentation must occur at the time the activity is performed [15] [16]. In spectroscopic workflows, this prohibits back-dating entries or documenting measurements after the analysis has been completed [18] [16].

- Original: The first recorded observation or a "verified true copy" must be preserved [15] [16]. For spectroscopy, this means retaining the raw spectral data before any processing or transformation, maintaining its authentic form [18].

- Accurate: Data must be free from errors, with any modifications documented and justified [15] [16]. In spectroscopic analysis, this requires that instruments are properly calibrated and that any data processing does not distort the original signal [18].

Extended ALCOA+ Principles

- Complete: All spectroscopic data must be included, with no omissions [15] [16]. This encompasses the entire dataset from multiple analyses, including failed runs or outliers that might otherwise be excluded [16].

- Consistent: Data should follow a standardized sequence of events with documented date and time stamps [15] [16]. In spectroscopy, this means applying uniform procedures across all analyses and maintaining chronological records of all activities [18] [16].

- Enduring: Data must be preserved for the required retention period, typically the product's shelf life plus several years in pharmaceutical applications [15] [16].

- Available: Data must be accessible for review, inspection, and copying throughout the retention period [15] [16]. For spectroscopic data, this means ensuring that spectra and associated metadata can be retrieved long after the initial analysis [18].

Table 1: ALCOA+ Principles and Their Implementation in Spectroscopy

| Principle | Definition | Spectroscopy Implementation Examples |

|---|---|---|

| Attributable | Data traceable to source | Secure user logins, instrument identifiers, electronic signatures [15] [18] |

| Legible | Permanently readable | Export to PDF/CSV formats, maintained backups, non-erasable formats [18] |

| Contemporaneous | Recorded in real-time | Automated time-stamping, immediate database storage [18] |

| Original | First recording or certified copy | Raw spectral data preservation, audit trails [18] |

| Accurate | Error-free with documented edits | Validation checks, documented calibrations, change control [18] |

| Complete | All data included with no omissions | Protected storage, prevention of data deletion, sequence integrity [15] [16] |

| Consistent | Chronological with protected sequence | Consistent workflows, time-stamped entries, standardized procedures [18] [16] |

| Enduring | Long-term preservation | Durable storage media, regular backups, migration plans [15] |

| Available | Accessible when needed | Searchable databases, retrieval systems, organized archives [15] [18] |
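To make the Attributable, Contemporaneous, and Original rows of the table more concrete, the sketch below builds an immutable metadata record for a spectrum at acquisition time. It is an illustration only: the `SpectrumRecord` structure, its field names, and the example identifiers are hypothetical and do not describe any particular vendor's software.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class SpectrumRecord:
    analyst_id: str     # Attributable: who acquired the data
    instrument_id: str  # Attributable: which spectrometer was used
    acquired_at: str    # Contemporaneous: UTC timestamp set at creation
    raw_sha256: str     # Original: fingerprint of the unprocessed data

def make_record(analyst_id, instrument_id, raw_bytes):
    """Create a record at acquisition time; the timestamp is taken from the system clock."""
    if not analyst_id or not instrument_id:
        raise ValueError("analyst and instrument identifiers are required")
    return SpectrumRecord(
        analyst_id=analyst_id,
        instrument_id=instrument_id,
        acquired_at=datetime.now(timezone.utc).isoformat(),
        raw_sha256=hashlib.sha256(raw_bytes).hexdigest(),
    )

# Hypothetical identifiers and raw data payload.
record = make_record("analyst01", "NIR-0042", b"raw spectral data bytes")
print(record)
```

In a regulated system the timestamp would come from a controlled, synchronized clock and the record would be written to an audit-trailed database; the in-memory object here only shows which pieces of information belong together.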

Implementing ALCOA+ in Spectroscopic Workflows: A Comparative Analysis

Successful implementation of ALCOA+ principles in spectroscopy requires both technical solutions and organizational culture. The following comparative analysis examines how different software platforms address these requirements and how they integrate into broader spectroscopic workflows.

Software Solutions for ALCOA+ Compliance in Spectroscopy

Specialized software plays a crucial role in enabling ALCOA+ compliance for spectroscopic systems by providing technical controls that enforce data integrity principles [18].

Table 2: Comparison of Software Features Supporting ALCOA+ in Spectroscopy

| Software Platform | ALCOA+ Features | Spectroscopy Applications | Compliance Standards |

|---|---|---|---|

| Vision Air | Secure user authentication, automated timestamping, SQL database storage, audit trails, two-level signing for configuration changes [18] | NIR spectroscopy, quantitative analysis, method development [18] | FDA 21 CFR Part 11, GMP/GLP [18] |

| AuditSafe | Secure logins for attribution, export of human-readable audit trails, project timestamping, validation capabilities [15] | Data collection and analysis in life sciences, pharmaceutical production [15] | FDA guidelines, global regulatory standards [15] |

| Thermo Fisher Spectroscopy Solutions | OQ (Operational Qualification) automation, audit trails, access controls, electronic signatures [17] | FTIR spectroscopy, pharmacopoeia testing, identity and purity algorithms [17] | 21 CFR Part 11, pharmacopoeia standards [17] |

| SpectraFit | Output file-locking system, collection of input data/results/initial model in single file, open-source validation [19] | XAS spectral analysis, peak fitting, quantitative composition analysis [19] | Transparency and reproducibility standards [19] |

Workflow Integration of ALCOA+ Principles

The following diagram illustrates how ALCOA+ principles integrate into a typical spectroscopic analysis workflow, from sample preparation to final reporting:

Experimental Protocol for Validating ALCOA+ Implementation in Spectroscopy

To systematically assess the implementation of ALCOA+ principles in spectroscopic systems, the following experimental protocol can be employed:

Objective: Verify and validate the proper implementation of ALCOA+ principles in a spectroscopic analysis system.

Materials and Equipment:

- Spectrometer with associated data acquisition software

- Certified reference materials for system suitability testing

- Secure database or electronic notebook system

- Access to system audit trails and metadata

Methodology:

- System Configuration and Security Assessment

  - Create and verify unique user accounts for all analysts

  - Configure instrument identifiers and method-specific parameters

  - Validate electronic signature capabilities if applicable

  - Verify access controls to prevent unauthorized data modification

- Data Generation and Recording Process

  - Analyze a series of certified reference materials

  - Document all analyses in real-time during acquisition

  - Intentionally introduce and correct errors to test documentation procedures

  - Generate data across multiple sessions and analysts

- Data Processing and Modification Tracking

  - Apply data processing techniques (baseline correction, smoothing, etc.)

  - Make authorized modifications to processing parameters

  - Document all processing steps and parameter changes

  - Generate reports at various processing stages

- Data Storage, Retrieval and Archive Testing

  - Store completed analyses in primary and backup systems

  - Retrieve data after specified time intervals (24 hours, 1 week, 1 month)

  - Verify data integrity through checksum validation

  - Generate and verify certified true copies of original data

Acceptance Criteria:

- All data must be traceable to specific analyst and instrument

- No data points should be lost or unaccounted for in the final report

- All modifications must be documented with timestamp and justification

- Data must be retrievable and readable after specified storage periods
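The checksum step in the storage and retrieval test can be sketched as follows. This is a minimal illustration, not a prescribed implementation: the choice of SHA-256 and the function names `sha256_of_file` and `verify_copy` are assumptions, since the protocol only asks for "checksum validation" without naming an algorithm.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(original: Path, retrieved: Path) -> bool:
    """True when the retrieved file is byte-identical to the original."""
    return sha256_of_file(original) == sha256_of_file(retrieved)
```

In practice the digest of the original file would be recorded at acquisition time and compared again after each retrieval interval (24 hours, 1 week, 1 month), so that any silent change in the stored data is detected.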

Advanced Applications: AI and Machine Learning in Spectroscopic Data Integrity

The emergence of artificial intelligence (AI) and machine learning (ML) in spectroscopic analysis introduces both opportunities and challenges for data integrity [20]. These advanced computational methods can enhance the reliability and interpretation of spectroscopic data when properly implemented within the ALCOA+ framework.

AI-Enhanced Spectral Analysis: Modern spectroscopic techniques generate high-dimensional data that creates pressing needs for automated and intelligent analysis beyond traditional expert-based workflows [20]. Machine learning approaches, collectively termed Spectroscopy Machine Learning (SpectraML), are being applied to both forward tasks (molecule-to-spectrum prediction) and inverse tasks (spectrum-to-molecule inference) while maintaining data integrity standards [20].

Data Quality Requirements for AI Applications: The implementation of AI in spectroscopic analysis heightens the importance of ALCOA+ principles, particularly:

- Complete datasets for training and validation

- Consistent data formatting and preprocessing

- Original data preservation before feature extraction

- Accurate labeling and annotation of training data

Open-source tools like SpectraFit demonstrate how AI-assisted analysis can be integrated with data integrity safeguards through features like output file-locking systems that collect input data, results data, and the initial fitting model in a single file to promote transparency and reproducibility [19].

Implementing robust data integrity practices in spectroscopic research requires both technical solutions and methodological approaches. The following toolkit outlines essential resources for maintaining ALCOA+ compliance:

Table 3: Research Reagent Solutions for ALCOA+-Compliant Spectroscopy

| Tool Category | Specific Solutions | Function in ALCOA+ Implementation |

|---|---|---|

| Spectroscopy Software | Vision Air, AuditSafe, Thermo Fisher OQ Packages, SpectraFit [15] [17] [18] | Provide technical controls for user attribution, audit trails, data protection, and automated compliance [15] [18] |

| Data Management Systems | SQL Databases, Electronic Lab Notebooks (ELNs), Laboratory Information Management Systems (LIMS) [18] | Ensure data endurance, availability, and completeness through secure storage and retrieval mechanisms [18] |

| Quality Assurance Tools | Automated Backup Systems, Checksum Validators, Audit Trail Reviewers [18] [16] | Verify data consistency, accuracy, and completeness throughout data lifecycle [18] [16] |

| Reference Materials | Certified Reference Materials, System Suitability Standards [17] | Establish accuracy and reliability of spectroscopic measurements through instrument qualification [17] |

| Documentation Systems | Electronic Signatures, Version Control Systems, Template Libraries [18] [16] | Support attributable, contemporaneous, and consistent documentation practices [18] [16] |

The implementation of ALCOA+ principles in spectroscopy represents a fundamental requirement rather than an optional enhancement for researchers and drug development professionals. As regulatory scrutiny of electronic data intensifies, the integration of these data integrity principles into spectroscopic method validation provides both compliance benefits and scientific advantages through enhanced data reliability and reproducibility [17] [18] [16].

The comparative analysis presented demonstrates that while software solutions vary in their specific implementation approaches, the core ALCOA+ requirements remain consistent across platforms and spectroscopic techniques. Successful adoption requires a holistic approach combining technical solutions with personnel training, organizational culture, and robust quality systems [16]. As spectroscopic technologies continue to evolve with increasing incorporation of AI and automation, the foundational principles of ALCOA+ will remain essential for ensuring the trustworthiness of spectroscopic data in research and regulated environments.

Establishing Linearity, Range, and Robustness for Spectroscopic Assays

In pharmaceutical development and analytical research, the reliability of spectroscopic data is paramount. Establishing method validity is a formal prerequisite for generating results that meet regulatory standards and support critical decisions in drug development. This guide focuses on three interconnected validation parameters—linearity, range, and robustness—which collectively ensure that an analytical method performs as intended in a reliable and reproducible manner.

Linearity defines the ability of a method to obtain results that are directly proportional to the concentration of the analyte within a given range [21]. The range is the interval between the upper and lower concentration levels of analyte for which demonstrated linearity, precision, and accuracy are achieved [21]. Robustness, on the other hand, evaluates the capacity of a method to remain unaffected by small, deliberate variations in procedural parameters, indicating its reliability during normal use [22]. This guide objectively compares the performance of different spectroscopic techniques against these critical validation parameters, providing a framework for scientists to select and optimize the most appropriate assay for their specific needs.

Conceptual Foundations and Definitions

A clear understanding of terminology is crucial for proper method validation. The linear range, or linear dynamic range, is specifically defined as the concentration interval over which the analytical signal is directly proportional to the concentration of the analyte [21]. This differs from the broader term dynamic range, which may encompass concentrations where a response is observed, but the relationship may be non-linear. Finally, the working range is the span of concentrations where the method delivers results with an acceptable level of uncertainty, and it can be wider than the strictly linear range [21].

For a method to be considered linear, it must demonstrate this proportional relationship, typically confirmed through a series of calibration standards. A well-established linear range should adequately cover the intended application, often spanning from 50-150% or 0-150% of the expected target analyte concentration [21]. It is vital to recognize that linearity can be technique- and compound-dependent. For instance, the linear range for LC-MS instruments is often fairly narrow, but can be extended using strategies such as isotopically labeled internal standards (ILIS), sample dilution, or instrumental modifications like lowering the flow rate in an ESI source to reduce charge competition [21].

Experimental Protocols for Validation

Protocol for Establishing Linearity and Range

The following detailed protocol is applicable to various spectroscopic techniques, including UV-Vis, to generate a calibration model.

1. Preparation of Standard Solutions:

- Prepare a stock solution of the analyte reference standard with high accuracy.

- Serially dilute the stock solution to obtain a minimum of five to six concentration levels. The range should cover 50-150% or 0-150% of the expected sample concentration [21] [23].

2. Instrumental Analysis:

- Analyze each standard solution in triplicate to account for instrumental variability.

- Maintain consistent instrumental parameters (e.g., slit width, path length, integration time) throughout the analysis.

- For UV-Vis, measure the absorbance at the wavelength of maximum absorption (λmax) to maximize sensitivity and minimize errors [24].

3. Data Analysis and Assessment:

- Plot the mean analytical response (e.g., absorbance, peak area) against the concentration of the standard solutions.

- Calculate the regression line using the least-squares method (y = mx + c), where y is the response, m is the slope, x is the concentration, and c is the y-intercept.

- Determine the coefficient of determination (R²), sum of squared residuals, and y-intercept to evaluate linearity. A high R² value (e.g., >0.999 in a validated UV method for deferiprone) indicates strong linearity [23].

- The range is validated by demonstrating that the method meets acceptable criteria for linearity, precision, and accuracy across the specified concentration interval.
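The data analysis step above can be sketched with an ordinary least-squares fit in plain Python. The function name `fit_calibration` and the absorbance values are hypothetical and serve only to illustrate the calculation of slope, intercept, R², and the residual sum of squares.

```python
from statistics import mean


def fit_calibration(conc, resp):
    """Ordinary least-squares fit of resp = m*conc + c; returns key metrics."""
    x_bar, y_bar = mean(conc), mean(resp)
    sxx = sum((x - x_bar) ** 2 for x in conc)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(conc, resp))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residuals = [y - (slope * x + intercept) for x, y in zip(conc, resp)]
    ss_res = sum(r ** 2 for r in residuals)      # residual sum of squares
    ss_tot = sum((y - y_bar) ** 2 for y in resp)  # total sum of squares
    return {
        "slope": slope,
        "intercept": intercept,
        "r_squared": 1 - ss_res / ss_tot,
        "ss_res": ss_res,
    }


# Hypothetical mean absorbances for six standards (illustrative values only).
concentrations = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]  # µg/mL
absorbances = [0.101, 0.199, 0.302, 0.398, 0.501, 0.600]
result = fit_calibration(concentrations, absorbances)
print(result)
```

A high R² alone does not prove linearity, so it is worth inspecting the individual residuals for curvature or trends as well as checking the summary statistics.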

Protocol for Assessing Robustness

Robustness testing evaluates the method's resilience to deliberate, small changes in operational parameters.

1. Experimental Design:

- Identify critical method parameters that could influence the results. These are technique-specific; Table 2 lists typical candidates for each technique.

- Use a structured approach, such as a full factorial or Plackett-Burman design, to efficiently test the impact of varying these parameters simultaneously.

2. Execution and Analysis:

- Perform the assay under the varied conditions while analyzing a system suitability sample or reference standard at a fixed concentration.

- Monitor the effect of these variations on critical performance attributes, such as retention time, signal intensity, spectral shape, and quantitative result (assay value).

- The method is considered robust if the quantitative results remain within predefined acceptance criteria (e.g., ±1-2% of the value obtained under standard conditions) and performance attributes show minimal deviation despite the introduced variations.
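A full factorial robustness design and the acceptance check described above could be set up along these lines. The factor names, levels, and the 2% tolerance are hypothetical placeholders chosen for illustration, not values taken from a validated method.

```python
from itertools import product

# Hypothetical factors and levels (low, nominal, high) for a UV-Vis assay.
factors = {
    "wavelength_nm": (277, 278, 279),
    "solvent_pH": (6.8, 7.0, 7.2),
    "temperature_C": (23, 25, 27),
}


def full_factorial(levels_by_factor):
    """Return every combination of factor levels as a list of dicts."""
    names = list(levels_by_factor)
    return [dict(zip(names, combo)) for combo in product(*levels_by_factor.values())]


def within_tolerance(result, nominal, tolerance_pct=2.0):
    """True when result deviates from nominal by at most tolerance_pct percent."""
    return abs(result - nominal) / nominal * 100.0 <= tolerance_pct


runs = full_factorial(factors)
print(len(runs))  # 3 levels ** 3 factors = 27 runs
```

The run count grows exponentially with the number of factors, which is why screening designs such as Plackett-Burman are often preferred once more than a handful of parameters are under test.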

Comparative Performance Data of Spectroscopic Techniques

The following tables synthesize experimental data and characteristics from various spectroscopic methods, highlighting their performance in terms of linearity, range, and robustness.

Table 1: Comparative Linearity and Range of Spectroscopic Techniques

| Technique | Typical Linear Range (Order of Magnitude) | Example Correlation Coefficient (R²) | Key Factors Influencing Linearity |

|---|---|---|---|

| UV-Vis Spectroscopy | 1-2 (e.g., 2-12 μg/mL for Deferiprone [23]) | ≥ 0.999 [23] | Deviations from Beer-Lambert law at high concentration, stray light, instrumental noise. |

| Fluorescence Spectroscopy | 3-4 | > 0.99 (assay dependent) | Inner-filter effect, self-quenching, photobleaching, concentration saturation. |

| LC-MS | 2 (can be extended with ILIS) | > 0.99 (assay dependent) | Charge competition in the ion source (especially ESI), detector saturation. [21] |

| NIR Spectroscopy | Requires multivariate calibration (PLS, etc.) | Model dependent (e.g., R² > 0.95 for robust models) | Scattering effects, complex baseline offsets, weak and overlapping absorption bands. [25] |

Table 2: Robustness Considerations Across Spectroscopic Techniques

| Technique | Critical Parameters to Test for Robustness | Common Vulnerabilities & Mitigation Strategies |

|---|---|---|

| UV-Vis Spectroscopy | Wavelength accuracy, pH of solvent, temperature, source lamp aging. | Vulnerability: Solvent/ matrix effects. Mitigation: Use matched solvent/blanks and standardize sample preparation. [24] [23] |

| Fluorescence Spectroscopy | Excitation/Emission bandwidths, temperature, solvent viscosity, sample turbidity, quenchers. | Vulnerability: Inner-filter effects, photo-bleaching. Mitigation: Use narrow cuvettes, dilute samples, and minimize exposure. [22] |

| Vibrational Spectroscopy (IR, Raman) | Laser power/flux, sampling depth/pressure, calibration model stability. | Vulnerability: Fluorescence background (Raman), water vapor (IR). Mitigation: Use 1064 nm lasers (Raman), purge optics with dry air (IR). [26] [25] |

| Hyphenated Techniques (e.g., LC-MS) | Mobile phase composition/buffer concentration, flow rate, ion source temperature, interface parameters. | Vulnerability: Ion suppression, column degradation. Mitigation: Use stable isotope internal standards, guard columns. [21] |

Advanced Topics: Managing Nonlinearity and Enhancing Robustness

Addressing Nonlinear Effects

Real-world spectroscopic data often deviates from ideal linear behavior due to chemical, physical, and instrumental factors [25]. Identifying and managing these nonlinearities is essential for accurate quantification, especially when using multivariate calibration models.

Common sources of nonlinearity include:

- Chemical Effects: Spectral band saturation at high concentrations and molecular interactions like hydrogen bonding.

- Physical Effects: Light scattering and path length variations in diffuse reflectance measurements.

- Instrumental Effects: Detector nonlinearity, stray light, and wavelength misalignments [25].

When linear models like classical Partial Least Squares (PLS) are insufficient, several advanced calibration methods can be employed:

- Polynomial Regression: A simple extension that includes higher-order terms, but it can overfit high-dimensional spectral data.

- Kernel Partial Least Squares (K-PLS): Maps data into a higher-dimensional feature space where linear relations can be applied, effectively capturing complex nonlinearities.

- Artificial Neural Networks (ANNs): Highly flexible models suitable for large, high-dimensional datasets like hyperspectral images, though they require significant data and can be prone to overfitting [25].

Data Preprocessing for Robust Models

Robust analytical methods rely on high-quality data. Spectral preprocessing is a critical step to mitigate unwanted variance and enhance the reliability of the analytical signal, particularly for robustness. A systematic preprocessing pipeline includes:

- Cosmic Ray/Spike Removal: Identifies and removes sharp, spurious signals.

- Baseline Correction: Suppresses low-frequency drifts caused by instrumental or sample matrix effects.

- Scattering Correction: Corrects for multiplicative scattering effects (e.g., using Multiplicative Scatter Correction).

- Normalization: Minimizes systematic errors from sample-to-sample variations.

- Smoothing and Filtering: Reduces high-frequency random noise [27].

Implementing these steps before regression or model building significantly improves the accuracy, precision, and transferability of spectroscopic methods.
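The pipeline above might look as follows in a deliberately simplified form. Each function is a stand-in chosen for brevity, and these choices are assumptions: a 3-point median filter for spike removal, an end-point straight line for the baseline, standard normal variate (SNV) in place of both scatter correction and normalization, and a moving average for smoothing. Production methods would typically use more refined algorithms.

```python
from statistics import mean, median, stdev


def remove_spikes(y):
    """3-point median filter; the first and last points are kept unchanged."""
    return [y[0]] + [median(y[i - 1:i + 2]) for i in range(1, len(y) - 1)] + [y[-1]]


def subtract_linear_baseline(y):
    """Subtract the straight line joining the first and last points."""
    step = (y[-1] - y[0]) / (len(y) - 1)
    return [v - (y[0] + step * i) for i, v in enumerate(y)]


def snv(y):
    """Standard normal variate: centre and scale a single spectrum."""
    mu, sd = mean(y), stdev(y)  # raises ZeroDivisionError below if sd == 0
    return [(v - mu) / sd for v in y]


def moving_average(y, window=3):
    """Centred moving average; edges use only the neighbours that exist."""
    half = window // 2
    return [mean(y[max(0, i - half):i + half + 1]) for i in range(len(y))]


def preprocess(y):
    """Apply the steps in the order given in the text."""
    return moving_average(snv(subtract_linear_baseline(remove_spikes(y))))
```

Because every step changes the signal, the chosen sequence and its parameters (filter width, window size) become part of the method and should be fixed before validation begins.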

The Scientist's Toolkit: Essential Reagents and Materials

Table 3: Key Research Reagent Solutions for Spectroscopic Assay Validation

| Item | Function in Validation | Application Notes |

|---|---|---|

| Analytical Reference Standard | Serves as the primary material for preparing calibration standards to establish linearity and range. | Must be of high and well-defined purity (e.g., pharmacopeial grade). Its concentration is the basis for all quantitative measurements. |

| Isotopically Labeled Internal Standard (ILIS) | Compensates for analyte loss during preparation and signal variation in the instrument, widening the linear dynamic range in techniques like LC-MS. | Used in mass spectrometry; should be structurally analogous to the analyte but distinguishable by mass. [21] |

| High-Purity Solvents | Dissolve analytes and standards without introducing interfering spectral signals or contaminants. | UV-Vis grade solvents are essential for low UV absorbance. Water purity is critical (e.g., from a system like Milli-Q). [26] |

| Buffer Solutions | Control the pH of the sample matrix, which can critically affect spectral shape, intensity, and stability, thereby testing robustness. | Required for analytes with ionizable groups. Buffer type and concentration should be specified and controlled. |

| Certified Reference Materials (CRMs) | Provide an independent, matrix-matched control to verify method accuracy and precision across the validated range. | Used for final method verification; traceable to international standards. |


Workflow and Decision Pathways

The following diagram illustrates the logical workflow for establishing and troubleshooting the linearity and range of a spectroscopic assay.

Assay Linearity Establishment Workflow

The rigorous establishment of linearity, range, and robustness is non-negotiable for developing trustworthy spectroscopic methods in drug development and analytical research. As demonstrated, the performance of different spectroscopic techniques varies significantly, with factors like dynamic range, susceptibility to matrix effects, and optimal calibration strategies being highly technique-specific.

The field continues to evolve, with future advancements pointing toward wider adoption of hybrid physical-statistical models that integrate fundamental spectroscopic theory with machine learning for greater interpretability and generalization. Furthermore, the development of transferable nonlinear models that maintain accuracy across different instruments and the application of Explainable AI (XAI) to complex models like neural networks will be crucial for meeting regulatory demands and enhancing scientist trust in predictive outcomes [25]. By adhering to structured experimental protocols and leveraging advanced data processing tools, scientists can ensure their spectroscopic assays are not only valid but also robust and fit-for-purpose in the modern laboratory.

Implementing Validation for Modern Spectroscopic Techniques

Validation Strategies for UV-Vis, NIR, and MIR Spectroscopy

Method validation is a critical process that establishes documented evidence providing a high degree of assurance that a specific spectroscopic technique will consistently produce results meeting predetermined analytical method requirements. For researchers and drug development professionals, implementing robust validation strategies for ultraviolet-visible (UV-Vis), near-infrared (NIR), and mid-infrared (MIR) spectroscopy ensures data integrity, regulatory compliance, and reliable decision-making throughout the product development lifecycle. Each technique possesses unique characteristics dictated by its underlying physical principles (electronic transitions for UV-Vis, vibrational overtones and combination bands for NIR, and fundamental molecular vibrations for MIR), which directly influence the appropriate validation approach. This guide systematically compares validation parameters across these spectroscopic techniques, providing experimental protocols and performance data to support method development in regulated environments.

Fundamental Principles and Instrumentation

Technical Basis of Spectroscopic Techniques

The validation of any spectroscopic method must begin with understanding its fundamental principles and how they influence performance characteristics. UV-Vis-NIR spectroscopy measures the absorption of electromagnetic radiation in the 175-3300 nm range, primarily resulting from electronic transitions in molecules. These transitions occur when valence electrons are excited to higher energy states, with UV-Vis regions (175-800 nm) covering π→π* and n→π* transitions in organic molecules, while NIR (800-3300 nm) encompasses weaker overtones and combination bands of fundamental molecular vibrations [28] [29]. In contrast, MIR spectroscopy, particularly Fourier-transform infrared (FT-IR), probes fundamental molecular vibrations in the 4000-400 cm⁻¹ range (approximately 2500-25000 nm), providing detailed molecular fingerprint information through absorption of IR light by molecules undergoing vibrational transitions between quantized energy states [7].
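Since MIR ranges are quoted in wavenumbers and UV-Vis-NIR ranges in wavelengths, a small conversion helper makes the two scales easy to compare. The relation used is λ (nm) = 10⁷ / ν̃ (cm⁻¹); the function names are illustrative.

```python
def wavenumber_to_wavelength_nm(wavenumber_cm1: float) -> float:
    """Convert a wavenumber in cm^-1 to a wavelength in nm."""
    return 1.0e7 / wavenumber_cm1


def wavelength_nm_to_wavenumber(wavelength_nm: float) -> float:
    """Convert a wavelength in nm to a wavenumber in cm^-1."""
    return 1.0e7 / wavelength_nm


# The MIR limits quoted in the text, expressed as wavelengths.
for nu in (4000, 400):
    print(nu, "cm^-1 =", wavenumber_to_wavelength_nm(nu), "nm")
```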

Instrumentation Comparisons

The core instrumentation differences between these techniques significantly impact validation strategies. UV-Vis-NIR instruments typically employ dispersive designs with monochromators containing diffraction gratings that separate wavelengths spatially, while modern FT-IR and FT-NIR instruments utilize interferometers with moving mirrors to create interferograms that are mathematically transformed into spectra using Fourier transformation [30] [7]. Key performance advantages of FT instruments include Fellgett's (multiplex) advantage for improved signal-to-noise ratio through simultaneous measurement of all wavelengths, Jacquinot's (throughput) advantage for higher energy throughput with fewer optical slits, and Connes' advantage for superior wavelength calibration precision derived from an internal laser reference [7].

Figure 1: Comprehensive Workflow for Spectroscopic Method Validation

Critical Validation Parameters Comparison

Performance Characteristics Across Techniques

The validation of spectroscopic methods requires demonstration of several key performance parameters that vary significantly across UV-Vis, NIR, and MIR techniques due to their different physical principles and instrumentation. Specificity, the ability to measure analyte response in the presence of potential interferents, is typically highest in MIR spectroscopy due to its detailed molecular fingerprinting capabilities, followed by NIR with its complex overtone patterns, while UV-Vis may suffer from spectral overlaps in complex mixtures [7] [29]. Linear dynamic range is generally widest in UV-Vis spectroscopy (typically 2-4 absorbance units), while NIR and MIR exhibit narrower linear ranges due to deviations from Beer-Lambert law at higher concentrations, particularly for fundamental vibrations in MIR [29].

Table 1: Comparison of Key Validation Parameters Across Spectroscopic Techniques

| Validation Parameter | UV-Vis Spectroscopy | NIR Spectroscopy | MIR Spectroscopy (FT-IR) |

|---|---|---|---|

| Typical Wavelength Range | 175-800 nm [31] [29] | 800-3300 nm [31] [29] | 2500-25000 nm (4000-400 cm⁻¹) [7] |

| Primary Transitions | Electronic transitions [28] | Overtone/combination vibrations [28] | Fundamental molecular vibrations [7] |

| Specificity | Moderate (potential spectral overlaps) [29] | High (complex overtone patterns) [30] | Very high (molecular fingerprint region) [7] |

| Linear Dynamic Range | 2-4 AU (wide) [29] | 1-3 AU (moderate) [30] | 0.5-2 AU (narrower) [7] |

| Typical LOD (Absorbance) | 0.001-0.01 AU [31] | 0.005-0.05 AU [30] | 0.01-0.1 AU [7] |

| Precision (RSD) | 0.1-1% [31] | 0.5-2% [30] | 0.3-1.5% [7] |

| Sample Preparation Needs | Minimal (dilution) [29] | Minimal to moderate [30] | Variable (ATR, KBr pellets, etc.) [7] |

| Primary Regulatory Applications | Quantitative analysis in dissolution, content uniformity [31] | Raw material ID, polymorph screening, process monitoring [26] | Compound identification, polymorph characterization [7] |

Accuracy, Precision, and Robustness

Accuracy and precision validation approaches differ substantially across techniques. UV-Vis methods typically demonstrate excellent accuracy (98-102% recovery) and precision (RSD 0.1-1%) for quantitative analysis of single components in simple matrices, validated against certified reference materials [31] [29]. For NIR methods, accuracy validation must account for the multivariate nature of the technique, with typical RMSEP (Root Mean Square Error of Prediction) values of 0.1-0.5% for major components in pharmaceuticals when validated against primary reference methods, with precision RSD ranging from 0.5-2% depending on the sampling technique [30]. MIR accuracy varies significantly with sampling technique, with ATR-FTIR typically showing 95-105% recovery for quantitative analysis when proper calibration models are used, and precision RSD of 0.3-1.5% [7].
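For the multivariate case, RMSEP is simply the root mean square of the differences between model predictions and reference-method values on an independent validation set. A minimal sketch, with `rmsep` as an illustrative function name:

```python
from math import sqrt


def rmsep(predicted, reference):
    """Root mean square error of prediction for paired values."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    squared = [(p - r) ** 2 for p, r in zip(predicted, reference)]
    return sqrt(sum(squared) / len(squared))
```

The result carries the same units as the reference values, so an RMSEP reported in % w/w can be compared directly with the content specification.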

Robustness testing evaluates method resilience to deliberate variations in method parameters. For UV-Vis, this includes testing wavelength accuracy (±1 nm), bandwidth, and sampling pathlength variations [31]. NIR method robustness must evaluate spectral pretreatment variations, temperature effects, and sample presentation consistency due to light scattering effects [30]. MIR robustness testing focuses on ATR crystal pressure consistency, sample homogeneity, and environmental humidity control due to water vapor interference [7].

Experimental Protocols for Validation

Specificity and Selectivity Protocols

Specificity validation experimentally demonstrates that the analytical method can unequivocally assess the analyte in the presence of potential interferents. For UV-Vis methods, specificity is established by comparing analyte spectra with placebo mixtures, stressed samples, and related compounds, requiring baseline separation of analyte peak from interfering peaks [29]. A typical protocol involves preparing solutions of analyte, placebo, and synthetic mixtures, scanning from 200-400 nm or wider range as needed, and demonstrating that placebo components show no interference at the analyte λmax [31].

For NIR specificity validation, the multivariate nature requires different approaches. Using a minimum of 20-30 representative samples spanning expected variability, collect spectra in appropriate mode (diffuse reflectance for solids, transmission for liquids). Apply chemometric tools like PCA (Principal Component Analysis) to demonstrate clustering of acceptable materials and separation from unacceptable materials, with statistical distance metrics such as Mahalanobis distance establishing classification boundaries [32].

MIR specificity validation in FT-IR leverages the fingerprint region (1500-500 cm⁻¹) where molecules show unique absorption patterns. Using ATR or transmission sampling, collect spectra of reference standards, potential contaminants, and degraded samples. Specificity is confirmed when the analyte spectrum shows unique absorption bands not present in interferents, or through spectral library matching with match scores exceeding predefined thresholds (typically >0.95 for pure compound identification) [7].
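One common way to express a library match score is the Pearson correlation between the sample and reference spectra on a shared wavenumber axis. The sketch below assumes that definition; commercial search algorithms differ in their preprocessing and scoring, so the 0.95 threshold should be interpreted against the specific software in use.

```python
from math import sqrt
from statistics import mean


def match_score(sample, reference):
    """Pearson correlation between two spectra sampled on the same axis."""
    s_bar, r_bar = mean(sample), mean(reference)
    num = sum((s - s_bar) * (r - r_bar) for s, r in zip(sample, reference))
    den = sqrt(
        sum((s - s_bar) ** 2 for s in sample)
        * sum((r - r_bar) ** 2 for r in reference)
    )
    return num / den


def passes_identity(sample, reference, threshold=0.95):
    """True when the match score exceeds the predefined threshold."""
    return match_score(sample, reference) > threshold
```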

Linearity and Range Determination

Linearity establishes that the analytical method produces results directly proportional to analyte concentration within a specified range. For UV-Vis validation, prepare a minimum of 5 concentrations spanning the expected range (typically 50-150% of target concentration) in triplicate. Plot absorbance versus concentration and calculate correlation coefficient (r > 0.999), y-intercept (not significantly different from zero), and residual sum of squares [29]. A typical UV-Vis linearity experiment for drug substance assay might use concentrations of 50, 75, 100, 125, and 150 μg/mL with 1 cm pathlength, expecting r² ≥ 0.998 [31].

NIR linearity validation follows different principles due to frequent use of multivariate calibration. Prepare 20-30 samples with concentration variation spanning expected range using appropriate experimental design. Develop PLS (Partial Least Squares) or PCR (Principal Component Regression) models with full cross-validation, reporting RMSECV (Root Mean Square Error of Cross-Validation) and R² for the calibration model. For a pharmaceutical blend analysis, RMSECV values <0.5% for API concentration typically demonstrate acceptable linearity [32].

MIR linearity using ATR-FTIR requires special consideration of the Beer-Lambert law limitations at higher concentrations due to reflection/absorption complexities. Prepare standard mixtures with 5-7 concentration levels in appropriate matrix, ensuring uniform contact with ATR crystal. Use peak height or area of specific vibrational bands, expecting linear r² > 0.995 for quantitative applications. Pathlength correction factors may be necessary for accurate quantification [7].

Precision and Accuracy Experiments

Precision validation demonstrates the degree of agreement among individual test results under prescribed conditions, while accuracy establishes agreement between test results and accepted reference values.

Table 2: Experimental Protocols for Precision and Accuracy Validation

| Validation Type | UV-Vis Protocol | NIR Protocol | MIR (FT-IR) Protocol |

|---|---|---|---|

| Repeatability (Intra-day) | 6 determinations at 100% concentration, RSD ≤ 1% [31] | 10 spectra of single sample, repositioning between scans, RSD ≤ 2% [30] | 10 measurements of single preparation with repositioning, RSD ≤ 1.5% [7] |

| Intermediate Precision (Inter-day) | 6 determinations each on 2 different days, by 2 analysts, with different instruments; RSD ≤ 2% [31] | 3 preparations each on 3 days, different operators, instrument; compare RMSEP [32] | 3 preparations analyzed over 3 days with different sample positioning; RSD ≤ 2.5% [7] |

| Accuracy Recovery | 9 determinations over 3 concentration levels (80%, 100%, 120%) with 98-102% recovery [29] | 20-30 validation set samples spanning concentration range, RMSEP < 0.5% of range [32] | Standard addition method with 3 spike levels, 95-105% recovery [7] |

| Sample Preparation | Dilution in appropriate solvent, minimal preparation [29] | Representative sampling, consistent presentation geometry [30] | Uniform contact with ATR crystal or consistent pellet preparation [7] |
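The two statistics used throughout Table 2, relative standard deviation and percent recovery, can be computed as shown below. The function names are illustrative, and the sample standard deviation (n − 1 denominator) is assumed for the RSD.

```python
from statistics import mean, stdev


def rsd_percent(values):
    """Relative standard deviation in percent, using the sample SD (n - 1)."""
    return stdev(values) / mean(values) * 100.0


def recovery_percent(measured, nominal):
    """Measured amount expressed as a percentage of the nominal amount."""
    return measured / nominal * 100.0
```

For a repeatability study, `rsd_percent` would be applied to the six determinations at 100% concentration, while `recovery_percent` would be evaluated for each of the nine accuracy determinations and compared with the 98-102% window.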

Detection and Quantitation Limits

Experimental Determination Approaches

Limit of detection (LOD) and quantitation (LOQ) establish the lowest levels of analyte that can be reliably detected or quantified, with approaches varying significantly across techniques. For UV-Vis, LOD and LOQ are typically determined based on signal-to-noise ratio (S/N) of 3:1 for LOD and 10:1 for LOQ, or using standard deviation of the response and slope of the calibration curve (LOD = 3.3σ/S, LOQ = 10σ/S) [31]. A typical UV-Vis method might achieve LOD of 0.001-0.01 AU, corresponding to low μg/mL concentrations for compounds with high molar absorptivity [29].
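The calibration-based formulas translate directly into code. In this sketch σ is the standard deviation of the response and S is the calibration slope, as in the text; the numeric inputs are hypothetical.

```python
def lod_loq(sigma, slope):
    """Return (LOD, LOQ) as 3.3*sigma/S and 10*sigma/S."""
    return 3.3 * sigma / slope, 10.0 * sigma / slope


# Hypothetical inputs: sigma in AU, slope in AU per µg/mL.
lod, loq = lod_loq(sigma=0.002, slope=0.05)
print(f"LOD = {lod:.3f} µg/mL, LOQ = {loq:.3f} µg/mL")
```

The resulting limits are in concentration units because the slope converts response back to concentration; estimates obtained this way are usually confirmed by analyzing samples prepared near the calculated levels.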

NIR spectroscopy, dealing with weak overtone bands, generally has higher detection limits. LOD/LOQ determination requires multivariate approaches, typically based on the RMSEP of cross-validated calibration models. The LOD is often estimated as 3 times the standard error of the residual variance in the response, while LOQ is estimated as 10 times this value. Practical LOQ values for major components in pharmaceuticals typically range from 0.1-0.5% w/w using diffuse reflectance NIR [30].

MIR FT-IR detection limits depend strongly on sampling technique. For ATR-FTIR, LOD is typically determined by analyzing progressively diluted samples until the characteristic absorption bands become indistinguishable from background noise (S/N < 3). Using modern FT-IR instruments with high-sensitivity detectors, LOD values for organic compounds typically range from 0.1-1% with ATR sampling, potentially reaching ppm levels with transmission cells or specialized techniques like photoacoustic detection [7].

Advanced Validation Considerations

Data Processing and Chemometrics

Modern spectroscopic validation, particularly for NIR and MIR, increasingly relies on sophisticated data processing and chemometrics, requiring validation of both instrumental and mathematical procedures. Spectral preprocessing techniques must be validated for their intended purpose, including derivatives (Savitzky-Golay) for resolution enhancement, standard normal variate (SNV) and multiplicative scatter correction (MSC) for scatter effects, and normalization for pathlength variations [27]. Each preprocessing method introduces specific artifacts that impact validation parameters; for example, derivative operations improve specificity but may degrade signal-to-noise ratio, requiring optimization of derivative order and window size [27].

Multivariate calibration models require comprehensive validation including determination of optimal number of latent variables to avoid overfitting, outlier detection methods (leverage, residuals, and influence measures), and rigorous external validation with independent sample sets. For NIR methods, the ratio of performance to deviation (RPD), calculated as the ratio of standard deviation of reference data to SECV (Standard Error of Cross-Validation), should exceed 3 for acceptable quantitative screening applications and 5 for quality control purposes [32]. Model transferability between instruments must be validated using techniques like direct standardization or piecewise direct standardization when methods are deployed across multiple systems [30].
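The RPD criterion can be evaluated with a few lines of code. This sketch follows the definition in the text (standard deviation of the reference data divided by SECV) and the two thresholds quoted there; the category labels and function names are illustrative.

```python
from statistics import stdev


def rpd(reference_values, secv):
    """Ratio of performance to deviation: SD of reference data over SECV."""
    return stdev(reference_values) / secv


def rpd_category(value):
    """Classify an RPD value against the thresholds of 3 and 5."""
    if value > 5:
        return "quality control"
    if value > 3:
        return "screening"
    return "below screening threshold"
```

Because RPD depends on the spread of the reference data, a calibration set with an artificially wide concentration range can inflate it, so the value is best read alongside RMSECV rather than on its own.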

Regulatory and Compendial Requirements

Validation strategies must align with regulatory expectations from FDA, EMA, and compendial requirements (USP, Ph. Eur.). USP general chapters <857> (UV-Vis Spectroscopy), <1856> (NIR Spectroscopy), and <851> (Spectrophotometry and Light-Scattering) provide specific validation guidance [31]. For NIR methods, the FDA's Process Analytical Technology (PAT) guidance encourages rigorous multivariate validation approaches, including real-time release testing applications [26]. Regulatory submissions should include comprehensive validation data packages that demonstrate all relevant validation parameters with appropriate statistical analysis. They should also describe ongoing monitoring procedures for method maintenance, including periodic performance qualification and model updating strategies for handling raw material and process changes [31] [7].

Figure 2: Advanced Validation Workflow Incorporating Data Processing

Essential Research Reagent Solutions

Successful validation of spectroscopic methods requires appropriate reference materials and reagents with documented purity and traceability. The selection of suitable materials forms the foundation for accurate method characterization and should be carefully considered during validation planning.

Table 3: Essential Research Reagents and Materials for Spectroscopic Validation

| Reagent/Material | Technical Function | Validation Application | Technical Specifications |
|---|---|---|---|
| Certified Reference Materials (CRMs) | Primary calibration standards with documented purity and traceability [31] | Accuracy determination, method calibration | Purity ≥ 99.5%, uncertainty ≤ 0.5%, traceable to national standards |
| Holmium Oxide Filter | Wavelength accuracy verification [31] | UV-Vis/NIR wavelength calibration | Characteristic peaks at 241.5, 287.5, 361.5, 486.0, and 536.5 nm with ±0.5 nm tolerance |
| Polystyrene Standard | Wavelength and resolution validation [30] | NIR/FT-IR performance qualification | Characteristic peaks at 906.0, 1028.3, 1601.0, 2929.8 cm⁻¹ with ±1.0 cm⁻¹ tolerance |
| NIST Traceable Neutral Density Filters | Photometric accuracy verification [31] | Absorbance/transmittance accuracy validation | Specific absorbance values at multiple wavelengths with ±0.01 AU uncertainty |
| Spectroscopic Solvents (HPLC Grade) | Sample preparation and dilution [29] | Sample and standard preparation | UV-Vis transparency with cutoff below 200 nm, low fluorescent impurities |
| ATR Cleaning Solutions | Crystal surface maintenance [7] | FT-IR/ATR sampling reproducibility | Isopropanol/water mixtures, non-abrasive, residue-free, compatible with crystal material |
| Background Reference Materials | Spectral background correction [7] | Daily instrument qualification | Spectralon for NIR, KBr pellets for MIR, appropriate solvent for UV-Vis |
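
As an example of how the tolerances in Table 3 translate into a pass/fail check, the sketch below compares measured holmium oxide peak positions against the tabulated values. The peak list and the ±0.5 nm tolerance are taken from the table; the measured values and function names are hypothetical, and a real qualification would use the certified values supplied with the specific filter.

```python
HOLMIUM_PEAKS_NM = (241.5, 287.5, 361.5, 486.0, 536.5)  # nominal values from Table 3
TOLERANCE_NM = 0.5                                      # tolerance from Table 3

def check_wavelength_accuracy(measured_nm, certified_nm=HOLMIUM_PEAKS_NM, tol=TOLERANCE_NM):
    """Return (certified, deviation, within_tolerance) for each peak, in order."""
    results = []
    for cert, meas in zip(certified_nm, measured_nm):
        deviation = meas - cert
        results.append((cert, round(deviation, 3), abs(deviation) <= tol))
    return results

def passes(results):
    """The instrument passes only if every peak is within tolerance."""
    return all(ok for _, _, ok in results)

# Hypothetical peak positions located on the instrument under test
measured = [241.6, 287.3, 361.9, 486.2, 536.1]

report = check_wavelength_accuracy(measured)
for cert, dev, ok in report:
    print(f"{cert:6.1f} nm  deviation {dev:+.3f} nm  {'PASS' if ok else 'FAIL'}")
print("Overall:", "PASS" if passes(report) else "FAIL")
```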

Validation strategies for UV-Vis, NIR, and MIR spectroscopy must be tailored to each technique's fundamental principles, instrumentation, and application requirements. UV-Vis methods excel in quantitative applications with straightforward validation approaches based on univariate calibration, while NIR and MIR techniques require sophisticated multivariate validation strategies incorporating chemometric model validation. Contemporary validation approaches must address both instrumental performance and data processing algorithms, particularly for NIR and MIR methods deployed in PAT environments. Successful validation requires thorough understanding of regulatory expectations, appropriate statistical approaches, and scientifically sound experimental designs that challenge method capabilities under realistic conditions. As spectroscopic technologies continue evolving with miniaturization, increased automation, and enhanced computational power, validation approaches must similarly advance to ensure data integrity while facilitating innovation in pharmaceutical development and manufacturing.

Multi-attribute methods (MAM) represent a paradigm shift in the analytical characterization of complex products, from biopharmaceuticals to natural products. For biologics, MAM is a liquid chromatography-mass spectrometry (LC-MS)-based peptide mapping method that enables direct identification, monitoring, and quantification of multiple product quality attributes (PQAs) at the amino acid level in a single, streamlined workflow [33] [34]. This approach provides a more informative and efficient alternative to conventional chromatographic and electrophoretic assays that typically monitor only one or a few attributes separately [35].

The core innovation of MAM lies in its two-phase workflow: an initial discovery phase to identify quality attributes for monitoring and create a targeted library, followed by a monitoring phase that uses this library for routine analysis while employing differential analysis for new peak detection (NPD) [33]. This dual capability allows for both targeted quantification of specific attributes and untargeted detection of impurities or modifications [34]. As regulatory agencies increasingly emphasize Quality by Design (QbD) principles, MAM has gained prominence for its ability to provide comprehensive product understanding throughout the development lifecycle [36] [35].

MAM for Biopharmaceuticals Characterization

Core Principles and Workflow

The MAM workflow for biopharmaceuticals involves several critical steps designed to ensure comprehensive characterization of therapeutic proteins such as monoclonal antibodies (mAbs). The process begins with proteolytic digestion of the protein sample using enzymes like trypsin to generate peptides, followed by reversed-phase chromatographic separation and high-resolution LC-MS analysis [33] [37]. This workflow enables primary sequence verification, detection and quantitation of post-translational modifications (PTMs), and identification of impurities [33].

A key differentiator of MAM from traditional peptide mapping is its data analysis approach, which includes both targeted attribute quantification (TAQ) of specific critical quality attributes (CQAs) and new peak detection (NPD) through differential analysis between test samples and reference standards [34] [35]. The NPD function is particularly valuable for detecting unexpected product variants or impurities that might not be included in targeted monitoring [34].
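
Targeted attribute quantification is commonly reported as the relative abundance of each modified peptide form. The sketch below assumes the usual convention of dividing the peak area of one form by the summed area of all forms of the same peptide; the source does not specify the formula, and the function name and peak areas are hypothetical.

```python
def relative_abundance(peak_areas):
    """Percent of each peptide form relative to the summed area of all forms of that peptide."""
    total = sum(peak_areas.values())
    if total <= 0:
        raise ValueError("summed peak area must be positive")
    return {form: 100.0 * area / total for form, area in peak_areas.items()}

# Hypothetical extracted-ion peak areas for one tryptic peptide
areas = {"unmodified": 9.5e6, "deamidated": 4.0e5, "oxidized": 1.0e5}

for form, pct in relative_abundance(areas).items():
    print(f"{form:>11}: {pct:.1f}%")
```

This calculation treats all forms as having equal ionization efficiency, which is an approximation that should be assessed when the method is qualified.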

Experimental Protocol for MAM Implementation

Implementing a robust MAM requires careful optimization of each step in the workflow:

Sample Preparation: The protein therapeutic must be digested into peptides using highly specific proteases. Trypsin is most commonly used as it produces peptides in the optimal size range (∼4–45 amino acid residues) for mass spectrometric analysis [36]. This critical step requires 100% sequence coverage, high reproducibility, and minimal process-induced modifications (e.g., deamidation) [36]. Protocols typically take 90–120 minutes [33]. Use of immobilized trypsin kits (e.g., SMART Digest Kits) can enhance reproducibility and compatibility with automation [36].

Chromatographic Separation: Peptides are separated using reversed-phase ultra-high-pressure liquid chromatography (UHPLC) systems, which provide exceptional robustness, high gradient precision, and improved reproducibility [36]. Columns with 1.5 µm solid core particles (e.g., Accucore, Hypersil GOLD) deliver sharp peaks, maximal peak capacities, and remarkably low retention time variations essential for reliable batch-to-batch analysis [36].

Mass Spectrometric Detection: Separated peptides are analyzed using high-resolution accurate mass (HRAM) MS instrumentation [36] [37]. The high mass accuracy and resolution enable confident peptide identification and modification monitoring without the need for full chromatographic separation of all species [36].

Data Processing: Specialized software is used for automated peptide identification, relative quantification of targeted PTMs, and new peak detection [37]. For NPD, appropriate detection thresholds must be established to balance sensitivity against false positives [35].
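
The role of NPD thresholds can be shown with a simplified differential comparison between a test sample and a reference standard. This is only a sketch: the m/z tolerance, retention-time tolerance, intensity floor, and fold-change cutoff are hypothetical placeholders rather than recommended values, and commercial MAM software applies more elaborate alignment and filtering. It flags new or increased features only, not missing or decreased ones.

```python
def is_same_feature(a, b, ppm_tol=10.0, rt_tol_min=0.2):
    """Two features match if m/z agrees within ppm_tol and retention time within rt_tol_min."""
    ppm = abs(a["mz"] - b["mz"]) / b["mz"] * 1e6
    return ppm <= ppm_tol and abs(a["rt"] - b["rt"]) <= rt_tol_min

def new_peaks(test, reference, min_intensity=1e5, fold_change=5.0):
    """Flag test features that are absent from, or strongly increased over, the reference."""
    flagged = []
    for feat in test:
        if feat["intensity"] < min_intensity:
            continue  # below the detection threshold: ignored to limit false positives
        matches = [r for r in reference if is_same_feature(feat, r)]
        if not matches:
            flagged.append((feat, "absent in reference"))
        elif feat["intensity"] >= fold_change * max(m["intensity"] for m in matches):
            flagged.append((feat, "increased vs reference"))
    return flagged

# Hypothetical feature lists (m/z, retention time in minutes, intensity)
reference = [
    {"mz": 500.2500, "rt": 12.10, "intensity": 2.0e6},
    {"mz": 650.3200, "rt": 20.50, "intensity": 1.5e5},
]
test = [
    {"mz": 500.2510, "rt": 12.12, "intensity": 2.1e6},  # matches, unchanged
    {"mz": 650.3205, "rt": 20.48, "intensity": 9.0e5},  # matches, six-fold higher
    {"mz": 720.4100, "rt": 25.00, "intensity": 3.0e5},  # no counterpart in reference
    {"mz": 810.1000, "rt": 30.00, "intensity": 4.0e4},  # below intensity floor
]

for feat, reason in new_peaks(test, reference):
    print(f"m/z {feat['mz']:.4f} at {feat['rt']:.2f} min: {reason}")
```

Lowering `min_intensity` or `fold_change` increases sensitivity to trace variants at the cost of more false positives, which is the trade-off the threshold-setting exercise has to resolve.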

Table 1: Key Research Reagent Solutions for MAM Workflows

| Reagent/Equipment | Function | Examples/Characteristics |
|---|---|---|
| Proteolytic Enzymes | Protein digestion into peptides | Trypsin (most common), Lys-C, Glu-C, AspN; immobilized formats enhance reproducibility |
| UHPLC System | Peptide separation | High-pressure capability (>1000 bar), high gradient precision, minimal carryover |
| HRAM Mass Spectrometer | Peptide detection & identification | High resolution (>30,000) and mass accuracy (<5 ppm); Q-TOF commonly used |
| Chromatography Columns | Peptide separation | C18 stationary phase with 1.5–2 µm particles; provides sharp peaks and stable retention |
| Data Analysis Software | Attribute quantification & NPD | Targeted processing of attribute lists; differential analysis for impurity detection |
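
The mass accuracy and resolution figures in Table 1 follow from two standard definitions, sketched below: mass error in ppm, and resolving power as m/z divided by the peak width at half maximum (FWHM). The function names and the example m/z and FWHM values are hypothetical; the 5 ppm and 30,000 limits are the ones listed in the table.

```python
def mass_error_ppm(observed_mz, theoretical_mz):
    """Signed mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6

def resolving_power(mz, fwhm):
    """Resolving power defined as m/z divided by the peak width at half maximum."""
    return mz / fwhm

def meets_table_spec(observed_mz, theoretical_mz, fwhm, max_ppm=5.0, min_resolution=30_000):
    """Check one peak against the mass accuracy and resolution limits listed in Table 1."""
    accurate = abs(mass_error_ppm(observed_mz, theoretical_mz)) < max_ppm
    resolved = resolving_power(observed_mz, fwhm) > min_resolution
    return accurate and resolved

# Hypothetical peptide ion: theoretical and observed m/z, and measured peak width
theoretical, observed, fwhm = 785.8421, 785.8445, 0.020

print(f"Mass error: {mass_error_ppm(observed, theoretical):+.2f} ppm")
print(f"Resolving power: {resolving_power(observed, fwhm):,.0f}")
print("Meets Table 1 limits:", meets_table_spec(observed, theoretical, fwhm))
```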

Application Scope and Replaceable Methods

MAM has the potential to replace multiple conventional analytical methods used in quality control of biopharmaceuticals, providing site-specific information with greater specificity and often superior sensitivity [34] [35]. The following table summarizes the conventional methods that can be consolidated through MAM implementation:

Table 2: Conventional QC Methods and the Capability of MAM to Replace Them

| Conventional Method | Attributes Monitored | MAM Capability |
|---|---|---|
| Ion-Exchange Chromatography (IEC) | Charge variants (deamidation, oxidation, C-terminal lysine) | Yes – site-specific quantification [34] |
| Hydrophilic Interaction LC (HILIC) | Glycosylation profiles | Yes – site-specific glycan identification [35] [37] |
| Reduced CE-SDS | Fragments, cleaved variants | Potential – depending on fragment sequence [34] |
| Peptide Mapping (LC-UV) | Identity, sequence variant | Yes – with enhanced specificity [34] |
| ELISA | Host Cell Proteins (HCPs) | Potential – though challenging for low-level HCPs [34] |

MAM for Natural Products

Characterization Challenges and Analytical Solutions

Natural products present unique characterization challenges due to their inherent complexity, variability in composition based on source and extraction methods, and the presence of multiple active constituents [38] [39]. Unlike biologics with defined amino acid sequences, natural products such as botanicals, herbal remedies, and dietary supplements are complex mixtures where insufficient assessment of identity and chemical composition hinders reproducible research [38].

The principles of multi-attribute approaches are increasingly being applied to natural products research to address these challenges. For natural products, the focus shifts to characterizing multiple marker compounds or biologically active components rather than specific amino acid modifications [39]. This requires rigorous method validation and the use of matrix-based reference materials to ensure analytical measurements are accurate, precise, and sensitive [38].

Emerging Technologies and Applications

Recent advances in analytical technologies are enabling more comprehensive characterization of natural products:

Multi-Attribute Raman Spectroscopy (MARS): This novel approach combines Raman spectroscopy with multivariate data analysis to measure multiple product quality attributes without sample preparation [40]. MARS allows for high-throughput, nondestructive analysis of formulated products and has demonstrated capability for monitoring both protein purity-related and formulation-related attributes through generic multi-product models [40].

Integrated (Q)STR and In Vitro Approaches: For toxicity assessment, quantitative structure-toxicity relationship ((Q)STR) models integrated with in vitro assays provide a comprehensive approach to predict the toxicity of natural product components [39]. This methodology has been applied to predict acute toxicity using LD50 data from natural product databases, helping prioritize compounds for further development [39].

Single-Cell Multiomics and Network Pharmacology: Advanced technologies including single-cell multiomics and network pharmacology are being deployed to elucidate the mechanisms of action of natural products, particularly those used in traditional Chinese medicine [41]. These approaches help identify molecular targets and understand complex interactions between multiple active components.

Experimental Framework for Natural Product MAM

A robust analytical framework for natural products should include:

Comprehensive Characterization: Initial thorough characterization of the natural product using LC-MS, NMR, and other orthogonal techniques to identify major and minor constituents [38].

Reference Material Development: Establishment of well-characterized reference materials that represent the chemical complexity of the natural product [38].

Method Validation: Rigorous validation of analytical methods demonstrating they are reproducible and appropriate for the specific sample matrix (plant material, phytochemical extract, etc.) [38].

Multi-Attribute Monitoring: Implementation of monitoring protocols for multiple critical constituents that correlate with product quality, efficacy, and safety [39].

Comparative Performance Analysis

MAM Platform Comparisons

Different analytical platforms offer distinct advantages for multi-attribute analysis depending on the application requirements:

Table 3: Comparison of Multi-Attribute Method Platforms

| Platform/Technique | Attributes Monitored | Sample Preparation | Throughput | Key Applications |