Validation of Spectroscopic Methods for Pharmaceutical Analysis: A Guide to Regulatory Compliance, Advanced Applications, and Future Trends

Abstract

This article provides a comprehensive guide for researchers, scientists, and drug development professionals on the validation of spectroscopic methods in pharmaceutical analysis. It covers the foundational principles of major techniques like UV-Vis, IR, NMR, and Raman spectroscopy, detailing their specific applications in quality control, process monitoring, and stability testing. The content explores methodological implementation, including sample preparation and data interpretation, and addresses common troubleshooting and optimization challenges. A core focus is placed on the validation paradigms of ICH Q2(R1) and the recently adopted Q2(R2) and Q14 guidelines, ensuring regulatory compliance. Finally, the article examines emerging trends such as the integration of artificial intelligence, real-time release testing, and the analysis of complex biopharmaceuticals, offering a forward-looking perspective on the field.

Core Principles and Regulatory Landscape of Spectroscopic Analysis

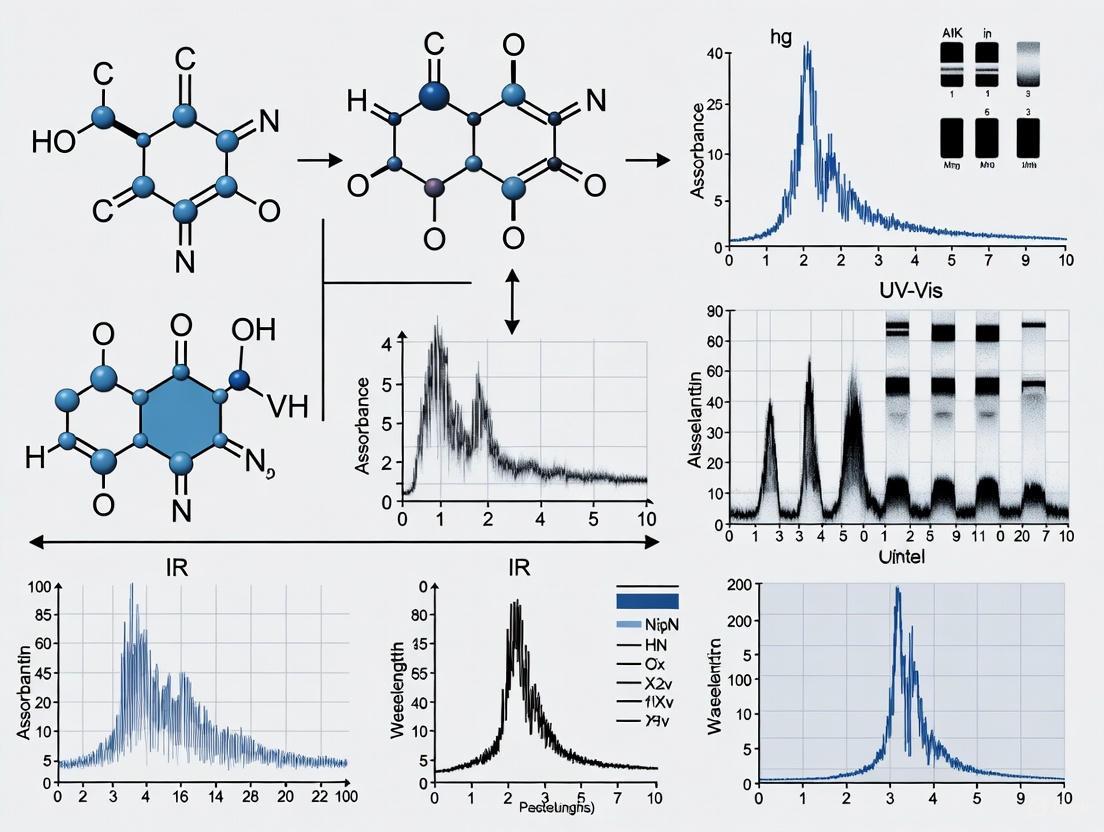

Spectroscopic analytical techniques are pivotal in the pharmaceutical and biopharmaceutical industries, providing essential tools for the qualitative and quantitative characterization of in-process materials and finished products. In the context of drug development, process monitoring, and quality control, techniques such as Ultraviolet-Visible (UV-Vis), Infrared (IR), Nuclear Magnetic Resonance (NMR), and Raman spectroscopy offer complementary information about the molecular structure, identity, and environment of active pharmaceutical ingredients (APIs) and excipients. The selection of an appropriate spectroscopic method depends on the specific analytical question, whether it involves identifying functional groups, determining conformational subtleties, or quantifying analyte concentration in complex mixtures. This guide provides an objective comparison of these four key techniques, supported by experimental data and contextualized within the rigorous demands of pharmaceutical method validation, to aid researchers and scientists in making informed decisions for their analytical strategies.

The table below summarizes the core attributes, primary applications, and key performance metrics of UV-Vis, IR, NMR, and Raman spectroscopy, providing a foundation for their comparison.

Table 1: Core Attributes and Pharmaceutical Applications of Key Spectroscopic Techniques

| Technique | Fundamental Principle | Key Measurable Parameters | Typical Pharmaceutical Applications | Key Performance Metrics |
|---|---|---|---|---|
| UV-Vis | Electronic transitions in molecules | Absorbance (A), Absorption Wavelength (λmax), Extinction Coefficient (ϵ) | Concentration measurement of analytes; Reaction monitoring; Dissolution testing [1] | High sensitivity for chromophores; Linear range for quantification; Good for kinetics |
| IR | Molecular vibrations | Wavenumber (cm⁻¹), Transmittance (T%), Absorbance (A) | Identification of chemical bonds/functional groups; Polymorph screening; Raw material ID [2] | Strong specificity for functional groups; Effective for fingerprinting |
| NMR | Interaction of nuclear spins with magnetic fields | Chemical Shift (δ, ppm), Scalar Coupling (J, Hz), Relaxation Times (T1, T2) | Molecular structure and conformational analysis; Protein-ligand interactions; Impurity profiling [1] | High information content on structure; Quantitative without an analyte-specific calibration curve (qNMR still requires a reference standard of known purity) |
| Raman | Inelastic scattering of light | Raman Shift (cm⁻¹), Intensity (Counts) | Molecular imaging and fingerprinting; Polymorph identification; Process monitoring [1] | Minimal sample prep; Suitable for aqueous solutions; Spatial resolution for imaging |

Detailed Technique Comparison: Performance and Data

Quantitative Performance and Validation Data

Beyond their basic principles, the practical utility of these techniques in a regulated environment depends on their quantitative performance, sensitivity, and robustness. The following table consolidates key validation data and comparative attributes from experimental studies.

Table 2: Experimental Performance and Validation Metrics for Spectroscopic Techniques

| Technique | Reported Quantitative Performance | Comparative Sensitivity & Specificity | Key Advantages for Validation | Noted Limitations |
|---|---|---|---|---|
| UV-Vis | Linear correlations between computational and experimental λmax (R² not specified) [3]. Provides rapid quantification of nanoplastic concentrations consistent with mass-based techniques in terms of order of magnitude [4]. | High sensitivity for chromophores. Lower specificity as it probes broad electronic transitions. | Rapid, non-destructive; High-throughput capability; Easily integrated into PAT [1]. | Generally limited to molecules with chromophores; Susceptible to interference from absorbing impurities. |
| IR | Machine learning model for structure elucidation achieved 44.4% top-1 accuracy on experimental spectra [2]. Hit Quality Index (HQI) for database matching can exceed 90 for pure compounds [5]. | High specificity for functional groups and overall molecular fingerprint. Sensitivity is lower than UV-Vis. | Extensive, searchable spectral libraries; Strong fingerprinting capability; Universal applicability for organic molecules [2] [5]. | Water absorption can complicate sample prep; Difficulties with low-concentration analytes. |
| NMR | Good linear correlation between experimental and DFT-calculated ¹H NMR chemical shifts (mean deviation of 0.3 ppm reported) [6]. High accuracy (up to 95.9%) for geographical origin discrimination of walnuts using SVM classifiers [7]. | Exceptional specificity for chemical environment and connectivity. Inherently low sensitivity, requiring more sample or time. | Non-destructive; Provides definitive structural information; Can probe higher-order structure of biologics [1]. | High instrument cost; Requires expert knowledge for data interpretation; Low sensitivity. |
| Raman | Q² values >0.8 for models predicting 27 components in cell culture media [1]. Real-time monitoring of product aggregation every 38 seconds with high accuracy [1]. | High spatial resolution for imaging. Specificity is high in the fingerprint region. Fluorescence can interfere. | Minimal sample preparation; Suitable for in-line PAT and real-time release; Non-destructive and water-compatible [1]. | Susceptible to fluorescence; inherently weak signal. |

Experimental Protocols and Methodologies

To ensure the reliability and reproducibility of spectroscopic methods, standardized protocols are essential. Below are detailed methodologies for key experiments cited in this guide, which can serve as templates for pharmaceutical validation studies.

1. Protocol for UV-Vis Method Validation and Comparison with Computational Data

- Objective: To validate UV-Vis absorption maxima (λmax) and extinction coefficients (ϵ) against computationally derived data for a set of organic compounds.

- Materials: Corpus of scientific documents (e.g., 402,034 articles), text-mining toolkit (ChemDataExtractor), high-performance computing (HPC) resource, validated chemical compounds [3].

- Method Details:

- Data Extraction: Use a tailored text-mining toolkit (e.g., ChemDataExtractor v1.3) to automatically extract experimental {compound, λmax, ϵ} paired data from a large corpus of HTML and XML scientific articles. Execute this process on a parallelized HPC resource for efficiency [3].

- Computational Calculation: Execute high-throughput electronic-structure calculations using density functional theory (DFT) and its time-dependent variant (TD-DFT) to predict the cognate computational attributes (λmax and oscillator strength, f) for the subset of validated compounds [3].

- Data Correlation: Statistically analyze the paired experimental and computational data sets to determine correlation coefficients for λmax and the relationship between experimental ϵ and computational f [3].
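The correlation step can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline used in [3]: it assumes the text-mined and TD-DFT values have already been paired by compound, and the function names and λmax values below are hypothetical. The same `pearson_r` helper could be applied to the paired experimental ϵ and computed f values.

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired series of equal length >= 2")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def mean_abs_deviation(x, y):
    """Mean absolute difference between paired values (same units as the input)."""
    return fmean(abs(a - b) for a, b in zip(x, y))


# Hypothetical paired lambda_max values in nm: experimental vs. TD-DFT-predicted
exp_lmax = [254.0, 280.0, 310.0, 345.0, 400.0]
calc_lmax = [248.0, 271.0, 305.0, 352.0, 389.0]

print(f"r = {pearson_r(exp_lmax, calc_lmax):.3f}")
print(f"mean |deviation| = {mean_abs_deviation(exp_lmax, calc_lmax):.1f} nm")
```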

2. Protocol for NMR-Based Discrimination with Chemometrics

- Objective: To discriminate the geographical origin of botanical samples (e.g., walnuts) using different types of 1D and 2D NMR spectra combined with multivariate statistics.

- Materials: 128 authentic walnut samples, deuterated methanol and acetonitrile (1:1) for extraction, NMR spectrometer [7].

- Method Details:

- Sample Preparation: Extract each walnut sample with a deuterated methanol/acetonitrile (1:1) solvent mixture to obtain a mid-polar extract containing a wide range of metabolites [7].

- Data Acquisition: For each extract, acquire three types of NMR spectra: 1D ¹H NOESY (with water suppression), 1D ¹H PSYCHE (pure shift), and 2D ¹H-¹³C ASAP-HSQC. The use of accelerated 2D methods like ASAP-HSQC is critical for high-throughput analysis [7].

- Data Analysis: Employ multivariate statistical analysis. Use Principal Component Analysis (PCA) for an unsupervised overview of the data. Then, build classification models using a Support Vector Machine (SVM) classifier with a repeated nested cross-validation procedure to avoid overfitting. Compare the accuracy of models derived from the different NMR experiment types [7].
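The key property of repeated nested cross-validation is that the outer test fold never influences hyperparameter selection. The sketch below shows that structure in dependency-free Python. It is an illustration under stated assumptions, not the analysis in [7]: a k-nearest-neighbour classifier (tuning k) is swapped in for the SVM so the example stays short, and the two-class feature vectors are synthetic stand-ins for binned NMR spectra.

```python
import random
from collections import Counter
from math import dist


def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training samples closest to x (Euclidean)."""
    order = sorted(range(len(train_X)), key=lambda i: dist(train_X[i], x))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]


def accuracy(X, y, fit_idx, test_idx, k):
    """Fraction of test_idx samples classified correctly by k-NN fitted on fit_idx."""
    fit_X = [X[i] for i in fit_idx]
    fit_y = [y[i] for i in fit_idx]
    hits = sum(knn_predict(fit_X, fit_y, X[i], k) == y[i] for i in test_idx)
    return hits / len(test_idx)


def split(indices, n_folds, rng):
    """Shuffle indices and deal them into n_folds roughly equal folds."""
    shuffled = list(indices)
    rng.shuffle(shuffled)
    return [shuffled[f::n_folds] for f in range(n_folds)]


def nested_cv(X, y, k_grid, outer=5, inner=3, repeats=3, seed=0):
    """Repeated nested CV: inner folds choose k, outer folds score that choice."""
    rng = random.Random(seed)
    scores = []
    for _ in range(repeats):
        for test_idx in split(range(len(X)), outer, rng):
            held_out = set(test_idx)
            train_idx = [i for i in range(len(X)) if i not in held_out]
            # Inner folds are drawn only from the outer training set.
            inner_folds = split(train_idx, inner, rng)

            def inner_score(k):
                total = 0.0
                for val_idx in inner_folds:
                    val_set = set(val_idx)
                    fit_idx = [i for i in train_idx if i not in val_set]
                    total += accuracy(X, y, fit_idx, val_idx, k)
                return total / len(inner_folds)

            best_k = max(k_grid, key=inner_score)
            scores.append(accuracy(X, y, train_idx, test_idx, best_k))
    return sum(scores) / len(scores)


# Hypothetical two-class data standing in for NMR-derived feature vectors
rng = random.Random(1)
X = [[rng.gauss(0.0, 0.3), rng.gauss(0.0, 0.3)] for _ in range(20)]
X += [[rng.gauss(3.0, 0.3), rng.gauss(3.0, 0.3)] for _ in range(20)]
y = ["origin A"] * 20 + ["origin B"] * 20
print(f"nested-CV accuracy: {nested_cv(X, y, k_grid=[1, 3, 5]):.3f}")
```

With scikit-learn, the same structure is commonly expressed by wrapping a `GridSearchCV` over an `SVC` inside `cross_val_score`, drawing a fresh fold split for each repeat.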

3. Protocol for FT-IR Spectral Database Matching and Quality Assessment

- Objective: To identify an unknown material by matching its FT-IR spectrum against a reference database and to assess the quality of the match.

- Materials: FT-IR spectrometer, unknown sample (pure compound or mixture), commercial reference spectral database (e.g., Aldrich/ICHEM complete ATR FT-IR library with >36,000 compounds) [5].

- Method Details:

- Spectral Acquisition: Acquire a high-quality FT-IR spectrum of the unknown sample using an appropriate sampling technique (e.g., ATR).

- Database Search: Search the reference database using the unknown spectrum and a suitable algorithm (e.g., Euclidean distance or first derivative Euclidean distance). The software will return a ranked list of hits with a Hit Quality Index (HQI) [5].

- Match Quality Assessment: Do not rely solely on the top HQI value. Critically evaluate the search results by:

- Calculating the gap (difference in HQI between successive hits, e.g., HQI₁ - HQI₂).

- Calculating the gap percentage: (HQI₁ - HQI₂) / (HQI₁ - HQI₁₀₀) [5].

- Visually comparing the unknown spectrum with the top several reference spectra. A large gap after the first hit suggests a unique match, while a small gap suggests a cluster of structurally similar compounds [5].
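Both gap metrics can be computed directly from a ranked hit list. This sketch assumes that the second term of the gap-percentage denominator is the HQI of the 100th-ranked hit and that the search software exports hits sorted by descending HQI; the function name and the hit list are hypothetical, and the ratio is multiplied by 100 to express it as a percentage.

```python
def match_gap_metrics(hqi_ranked):
    """Return (gap, gap_percentage) for a hit list sorted by descending HQI.

    gap            = HQI_1 - HQI_2
    gap_percentage = 100 * (HQI_1 - HQI_2) / (HQI_1 - HQI_100)
    """
    if len(hqi_ranked) < 100:
        raise ValueError("the gap percentage needs at least 100 ranked hits")
    if any(a < b for a, b in zip(hqi_ranked, hqi_ranked[1:])):
        raise ValueError("hits must be sorted by descending HQI")
    gap = hqi_ranked[0] - hqi_ranked[1]
    spread = hqi_ranked[0] - hqi_ranked[99]
    # A flat hit list (spread == 0) carries no discriminating information.
    gap_pct = 100.0 * gap / spread if spread > 0 else 0.0
    return gap, gap_pct


# Hypothetical hit list: one strong match followed by a tail of weaker hits
hits = [95.0, 78.0] + [70.0 - 0.2 * i for i in range(98)]
gap, gap_pct = match_gap_metrics(hits)
print(f"gap = {gap:.1f}, gap percentage = {gap_pct:.1f}%")
```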

4. Protocol for In-line Raman Spectroscopy for Real-time Bioprocess Monitoring

- Objective: To monitor product quality attributes (e.g., aggregation and fragmentation) in real-time during clinical bioprocessing.

- Materials: Bioreactor, in-line Raman spectrometer probe, automation and machine learning software platform [1].

- Method Details:

- System Integration: Integrate an in-line Raman probe directly into the bioreactor and connect it to a robotic system that automates calibration and data collection [1].

- Data Acquisition & Modeling: Collect Raman spectra automatically at high frequency (e.g., every 38 seconds). Use machine learning models (e.g., chemometric regression models) built from calibration data to convert spectral data into real-time estimates of critical quality attributes like aggregation and fragmentation [1].

- Process Control: Use the real-time predictions to enhance process understanding and enable active control of the bioprocess, ensuring consistent product quality and facilitating real-time release [1].
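Once a calibration model exists, converting each incoming spectrum into a CQA estimate is a simple projection for linear chemometric models (PLS predictions, for example, reduce to an intercept plus a weighted sum of spectral intensities). The sketch below assumes such a linear model has already been built and validated; the class name, coefficients, three-point "spectra", and upper limit are hypothetical, and spectral preprocessing (baseline correction, normalization) as well as acquisition timing are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearCalibrationModel:
    """Hypothetical pre-built linear model: prediction = intercept + sum(b_i * x_i)."""
    intercept: float
    coefficients: tuple

    def predict(self, spectrum):
        if len(spectrum) != len(self.coefficients):
            raise ValueError("spectrum length must match the model's coefficients")
        return self.intercept + sum(b * x for b, x in zip(self.coefficients, spectrum))


def monitor(spectra, model, upper_limit):
    """Yield (index, predicted value, within limit?) for each incoming spectrum."""
    for index, spectrum in enumerate(spectra):
        predicted = model.predict(spectrum)
        yield index, predicted, predicted <= upper_limit


# Hypothetical model predicting % aggregate from three preprocessed intensities
model = LinearCalibrationModel(intercept=0.5, coefficients=(0.02, -0.01, 0.03))
incoming = [[10.0, 20.0, 5.0], [30.0, 10.0, 40.0]]
for index, predicted, ok in monitor(incoming, model, upper_limit=2.0):
    status = "within limit" if ok else "ALERT: above limit"
    print(f"spectrum {index}: predicted aggregate {predicted:.2f}% ({status})")
```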

Workflow Visualization for Method Selection and Validation

The following diagram outlines a logical decision pathway for selecting and validating a spectroscopic technique based on the analytical objective, leveraging the comparative data presented in this guide.

Essential Research Reagent Solutions

The successful implementation of spectroscopic methods relies on specific reagents and materials. The following table details key solutions used in the experimental protocols cited herein.

Table 3: Essential Research Reagents and Materials for Spectroscopic Analysis

| Item Name | Function / Application | Specific Example from Research |
|---|---|---|
| Deuterated Solvents | Minimizes interfering solvent ¹H signals and provides the deuterium signal used to lock (and typically shim) the magnetic field in NMR spectroscopy. | Deuterated cyclohexane (C6D12) for studying azo dye photoisomers; Deuterated methanol (CD3OD) and DMSO (DMSO-d6) for curcumin analysis [6] [8]. |
| Reference Spectral Databases | Enables identification of unknown compounds by matching their acquired spectrum against a curated library of known reference spectra. | Aldrich/ICHEM complete ATR FT-IR library (36,639 compounds); EPA-NIST Vapor Phase library (5,228 compounds) for FT-IR identification [5]. |
| Text-Mining & Cheminformatics Toolkits | Automates the large-scale extraction of structured experimental data from the vast corpus of scientific literature to create validation datasets. | ChemDataExtractor toolkit, used to auto-generate a database of 18,309 UV/vis absorption records from 402,034 documents [3]. |
| Process Analytical Technology (PAT) Probes | Allows for non-invasive, in-line, real-time monitoring of critical process parameters (CPPs) and critical quality attributes (CQAs) during manufacturing. | In-line Raman probes integrated into bioreactors for real-time monitoring of product aggregation and fragmentation every 38 seconds [1]. |
| Force Fields for Computational Spectroscopy | Enables the simulation of spectroscopic properties, such as IR spectra, by modeling molecular vibrations and dynamics, serving as a pretraining base for machine learning models. | Class II Polymer Consistent Force Field (PCFF), used to simulate 634,585 IR spectra via molecular dynamics for machine learning model training [2]. |

For researchers validating spectroscopic methods in pharmaceutical analysis, navigating the interplay between procedural guidelines and data integrity principles is fundamental. This guide examines the evolving standards of ICH Q2(R1), Q2(R2), and Q14, and their critical relationship with the ALCOA+ framework for data integrity.

Analytical Procedure Lifecycle and Data Integrity: An Integrated Workflow

The diagram below illustrates how ICH guidelines and ALCOA+ principles integrate throughout the analytical procedure lifecycle.

Comparative Analysis of ICH Guidelines

The following table summarizes the key characteristics and evolution of the relevant ICH guidelines.

| Guideline | Full Title | Scope & Objective | Key Updates & Features | Status & Implementation |
|---|---|---|---|---|
| ICH Q2(R1) [9] | Validation of Analytical Procedures: Text and Methodology | Provides a standard for validating analytical procedures to demonstrate they are suitable for their intended purpose [9]. | Combined the original Q2A (Text) and Q2B (Methodology); defines classic validation characteristics such as accuracy, precision, and specificity [9]. | Adopted by ICH in November 2005 (the cited document is dated September 2021); represents the previous standard [9]. |
| ICH Q2(R2) [10] [11] | Validation of Analytical Procedures | Expands on Q2(R1) for application to more complex procedures; provides guidance on deriving and evaluating validation tests [10] [11]. | New/updated sections: validation during the analytical procedure lifecycle; considerations for multivariate procedures; enhanced guidance on specificity for stability-indicating methods [10]. | Finalized (ICH Step 4, November 2023); implemented together with ICH Q14 to modernize and harmonize approaches [10]. |
| ICH Q14 [10] | Analytical Procedure Development | Describes scientific, risk-based approaches for developing and maintaining analytical procedures over their lifecycle [10]. | Key features: establishes an Analytical Procedure Development (APD) plan; promotes a lifecycle approach linked to the product lifecycle; encourages more robust procedures and enhanced regulatory communication [10]. | Finalized (ICH Step 4, November 2023); provides the development foundation that ICH Q2(R2) validates [10]. |

Experimental Protocol: Validation of a Spectroscopic Assay Method

For a UV-Vis spectrophotometric assay for drug substance potency, the following validation protocol aligns with ICH Q2(R2) and data integrity principles.

1. Accuracy (Recovery Study):

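- Procedure: Prepare a standard solution of the drug substance at 100% of the test concentration (e.g., 10 µg/mL) in triplicate. Spike the placebo with known quantities of the drug substance at 80%, 100%, and 120% of the test concentration, in triplicate at each level (nine determinations, the minimum recommended by ICH). Measure the absorbance of all samples and calculate the percent recovery at each level.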

- Data Integrity (ALCOA+): All sample weights and preparations must be Attributable (who prepared), Contemporaneous (recorded immediately), and Original (raw data file from the spectrometer) [12] [13]. The calibration curve used must be Traceable [12].

2. Precision:

- Repeatability: Measure six independent preparations of the 100% test concentration sample and calculate the %RSD of the measured potency.

- Data Integrity (ALCOA+): The sequence of measurements must be Consistent with the run timeline, and the audit trail must be Complete, capturing every measurement without gaps [12] [14].

3. Specificity/Selectivity:

- Procedure: Compare the spectra and absorbance of the drug substance sample against a placebo and a known degradation product (e.g., from a forced degradation study). Demonstrate that neither the placebo nor the degradation product interferes at the analytical wavelength, so that the analyte can be quantified accurately.

- Data Integrity (ALCOA+): The original, unprocessed spectra for all samples must be Enduring and Available for review during regulatory inspection [12] [14].
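The accuracy and repeatability calculations can be sketched as follows. This is a minimal example, assuming a linear calibration A = slope × c + intercept established beforehand, negligible placebo absorbance at the analytical wavelength, and three replicate preparations at each of the 80%, 100%, and 120% levels; all numbers and function names are hypothetical, and acceptance limits belong in the validation protocol rather than in this code.

```python
from statistics import fmean, stdev


def concentration_from_absorbance(absorbance, slope, intercept=0.0):
    """Invert a linear calibration A = slope * c + intercept to get c."""
    return (absorbance - intercept) / slope


def recovery_by_level(measurements, slope, intercept=0.0):
    """Mean % recovery (found / added * 100) for each spike level.

    `measurements` maps the added concentration (ug/mL) to the absorbances
    of the replicate spiked-placebo preparations at that level.
    """
    if len(measurements) < 3 or sum(len(v) for v in measurements.values()) < 9:
        raise ValueError("expected >= 3 levels and >= 9 determinations in total")
    return {
        added: fmean(100.0 * concentration_from_absorbance(a, slope, intercept) / added
                     for a in absorbances)
        for added, absorbances in measurements.items()
    }


def percent_rsd(values):
    """%RSD = 100 * sample standard deviation (n - 1) / mean."""
    if len(values) < 2:
        raise ValueError("need at least two results")
    return 100.0 * stdev(values) / fmean(values)


# Hypothetical calibration (A = 0.041 * c + 0.002) and spiked-placebo absorbances
# at 80/100/120 % of a 10 ug/mL test concentration; not experimental data.
spikes = {
    8.0: [0.330, 0.331, 0.329],
    10.0: [0.412, 0.410, 0.414],
    12.0: [0.494, 0.495, 0.491],
}
for level, rec in recovery_by_level(spikes, slope=0.041, intercept=0.002).items():
    print(f"{level:>5.1f} ug/mL spike: mean recovery {rec:.1f}%")

# Six hypothetical potency results (% of label claim) for repeatability
potency = [99.2, 100.4, 99.8, 100.9, 99.5, 100.1]
print(f"repeatability %RSD = {percent_rsd(potency):.2f}%")
```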

The ALCOA+ Framework for Data Integrity

ALCOA+ is a set of principles ensuring data is reliable and trustworthy throughout its lifecycle [13] [14]. The framework has evolved to ALCOA++, which some sources describe as including further principles like Traceability [12].

Core Principles and Implementation

| Principle | Core Question | Practical Application in Spectroscopy |
|---|---|---|
| Attributable [12] [13] | Who generated the data and when? | Use unique login IDs for spectrometer software; audit trails automatically link data to the user [12]. |
| Legible [12] [13] | Is the data readable? | Ensure electronic data files are secure and readable throughout the retention period; avoid proprietary formats that become obsolete [12] [14]. |
| Contemporaneous [12] [13] | Was the data recorded at the time of the activity? | Use spectrometers with integrated, network-synchronized clocks (NTP) to timestamp data at the moment of acquisition [12] [14]. |
| Original [12] [13] | Where is the source data? | Preserve the first capture of the raw spectral data file. Any printed copy is not the original record [12]. |
| Accurate [12] [13] | Is the data error-free? | Ensure instruments are calibrated and qualified. Any changes to data must not obscure the original record and must be justified [12] [14]. |
| Complete [12] [13] | Is all data including repeats present? | All data must be retained, including invalidated runs. The audit trail must be enabled and reviewed to ensure no data is deleted [12]. |
| Consistent [12] [13] | Is the sequence of events logical? | Date and time stamps should follow a logical sequence, and procedures should be standardized to prevent contradictions [12]. |
| Enduring [12] [13] | Is the data secured for the long term? | Archive electronic data in a stable, non-rewritable format with regular backups for the required retention period [12] [14]. |
| Available [12] [13] | Can the data be found and accessed? | Implement a data management system with indexing and search capabilities to retrieve data for review and inspection over its lifetime [12] [14]. |
| Traceable [12] | Can changes be fully tracked? | An audit trail should document who, what, when, and why for any change, allowing full reconstruction of the data's history [12]. |
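The audit-trail expectations in the table (who, what, when, why, with no silent edits) can be illustrated with an append-only log in which each entry stores the hash of its predecessor, so that altering an earlier entry breaks the chain. Hash chaining is an assumption chosen for this sketch, not a description of how any particular CDS implements its audit trail, and the class, field, and sample names are hypothetical; a compliant system also needs validated access control, a trusted time source, and protected storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class AuditEntry:
    user_id: str        # Attributable: unique login of whoever acted
    action: str         # what was done (e.g. acquire, reprocess, invalidate)
    reason: str         # why it was done
    timestamp_utc: str  # Contemporaneous: captured when the entry is recorded
    previous_hash: str  # link to the prior entry (supports Traceable/Complete)

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """Append-only, hash-chained audit log (illustrative, not a validated system)."""

    def __init__(self):
        self._entries = []

    def record(self, user_id, action, reason):
        if not user_id or not reason:
            raise ValueError("every entry needs a user ID and a reason")
        previous = self._entries[-1].digest() if self._entries else GENESIS
        entry = AuditEntry(user_id, action, reason,
                           datetime.now(timezone.utc).isoformat(), previous)
        self._entries.append(entry)
        return entry

    def verify(self):
        """True if every entry still points at the hash of its predecessor.

        Edits to the newest entry are only detectable if its hash is also
        stored somewhere outside this log.
        """
        previous = GENESIS
        for entry in self._entries:
            if entry.previous_hash != previous:
                return False
            previous = entry.digest()
        return True

    @property
    def entries(self):
        return tuple(self._entries)


trail = AuditTrail()
trail.record("analyst01", "acquire spectrum S-001", "routine assay")
trail.record("analyst02", "reprocess S-001 with new baseline", "baseline drift noted")
print(f"{len(trail.entries)} entries, chain intact: {trail.verify()}")
```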

Essential Research Reagent Solutions for Compliant Analysis

The table below details key materials and systems required for implementing validated and integrity-compliant analytical methods.

| Item / Solution | Critical Function in Validation & Analysis |
|---|---|
| Validated Chromatographic Data System (CDS) | Manages data from analytical instruments, enforcing user access controls, generating secure audit trails, and ensuring data is Attributable and Traceable [12]. |
| Certified Reference Standards | Provides the Accurate and definitive measurement standard for quantifying the analyte, essential for establishing method accuracy, linearity, and range [10]. |
| System Suitability Test (SST) Solutions | Verifies that the entire analytical system (instrument, reagents, column, analyst) is performing adequately before and during a validation run or sample analysis. |
| Stable & Qualified Reagents/Solvents | Ensures Consistent and reliable method performance. Use of unqualified reagents can introduce variability, invalidating precision and accuracy data. |
| Audit Trail Review Software | Technology-assisted tools that help in performing risk-based, ongoing reviews of audit trails for critical data, as expected by regulators [12]. |

The modern regulatory framework for spectroscopic method validation is a cohesive system. ICH Q14 provides the roadmap for development, ICH Q2(R2) offers the updated criteria for proving fitness-for-purpose, and the ALCOA+ principles form the non-negotiable foundation for data integrity throughout the process. Success in regulatory submissions depends on the simultaneous application of all three components, ensuring that methods are not only scientifically sound but also generate data that is fundamentally reliable, trustworthy, and defensible.

Strategic Importance of Analytical Excellence in QbD and RTRT

In modern pharmaceutical development, analytical excellence has evolved from a supportive function to a core strategic pillar enabling robust Quality by Design (QbD) and reliable Real-Time Release Testing (RTRT). The paradigm shift from traditional end-product testing to proactive, science-based quality assurance is fundamentally dependent on advanced analytical capabilities [15]. This transformation is driven by regulatory frameworks like ICH Q8-Q11 for QbD and supported by the recently adopted ICH Q14 and Q2(R2) guidelines for analytical procedure lifecycle management [16] [17].

Analytical methods provide the critical data streams necessary to define Critical Quality Attributes (CQAs), establish design spaces, and implement control strategies [15]. Within QbD frameworks, analytical excellence ensures that process understanding is based on accurate, reliable data, enabling manufacturers to build quality into products rather than testing for it retrospectively [15] [18]. For RTRT, where traditional batch release tests are replaced by process data and PAT, analytical methods must deliver real-time, actionable information with exceptional reliability [16] [18]. This article examines the instrumental role of analytical excellence in successful QbD and RTRT implementation through comparative analysis of spectroscopic techniques and their validation frameworks.

Analytical Quality by Design: Building Robustness into Analytical Methods

Analytical Quality by Design (AQbD) applies the principles of QbD to analytical method development, creating a systematic framework for building robustness and reliability into analytical procedures [17]. Where traditional method development often relied on empirical, trial-and-error approaches, AQbD employs science- and risk-based methodologies to ensure methods remain fit-for-purpose throughout their lifecycle [17] [19].

The foundation of AQbD is the Analytical Target Profile (ATP), described by one industry expert as "a clear and measurable statement of the intended purpose and required performance characteristics of each analytical method" [17]. The ATP guides systematic method development and establishes the Method Operable Design Region (MODR), the multidimensional combination of method parameters that have been demonstrated to provide suitable quality assurance [17].

This approach transforms analytical method lifecycle management. Under traditional models, method changes required costly revalidation, but with AQbD and the MODR, adjustments within the design space can be made efficiently while maintaining regulatory compliance [17]. The enhanced approach to analytical procedure development formalized in ICH Q14 represents a significant advancement over minimal approaches, incorporating risk assessment, structured experimentation, and continuous improvement practices [19].
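The ATP and MODR concepts can be made concrete as data: the ATP as explicit acceptance criteria, the MODR as proven ranges for method parameters. In this sketch the criteria, parameter names, and ranges are hypothetical placeholders, and the MODR is simplified to independent ranges (a box), whereas a real MODR established through designed experiments may have interacting, non-rectangular boundaries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalyticalTargetProfile:
    """Hypothetical ATP for an assay: explicit, measurable acceptance criteria."""
    recovery_range: tuple  # (low, high) acceptable mean recovery, %
    max_rsd: float         # maximum repeatability %RSD

    def is_met(self, mean_recovery, rsd):
        low, high = self.recovery_range
        return low <= mean_recovery <= high and rsd <= self.max_rsd


# Hypothetical MODR, simplified to independent proven ranges per parameter
MODR = {
    "wavelength_nm": (231.0, 235.0),
    "ph": (6.6, 7.0),
    "temperature_c": (20.0, 30.0),
}


def within_modr(settings, modr=MODR):
    """True if every parameter in `settings` lies inside its MODR range.

    Raises KeyError for a parameter the MODR does not cover, since a change
    outside the studied space cannot be justified by it.
    """
    return all(modr[name][0] <= value <= modr[name][1] for name, value in settings.items())


atp = AnalyticalTargetProfile(recovery_range=(98.0, 102.0), max_rsd=2.0)
print(atp.is_met(mean_recovery=100.1, rsd=0.6))          # True
print(within_modr({"wavelength_nm": 234.0, "ph": 6.8}))  # True: change stays in MODR
print(within_modr({"ph": 7.4}))                          # False: outside MODR
```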

AQbD Implementation Workflow

The following diagram illustrates the key stages in the Analytical Quality by Design workflow, from defining requirements to continuous monitoring:

Real-Time Release Testing: The Role of Advanced Analytics

Real-Time Release Testing (RTRT) represents a fundamental shift from discrete end-product testing to continuous quality verification based on process data and PAT [16] [18]. This approach relies on analytical systems capable of generating reliable, real-time data on Critical Process Parameters (CPPs) and Critical Quality Attributes (CQAs) during manufacturing [18]. By implementing RTRT, manufacturers can achieve significant reductions in release times while improving quality assurance through more comprehensive data collection [16].

The foundation of a successful RTRT strategy is the integration of Process Analytical Technology (PAT) tools that monitor quality attributes throughout the manufacturing process [18]. As identified in recent research, "Various frameworks and methods, such as quality by design (QbD), real time release test (RTRT), and continuous process verification (CPV), have been introduced to improve drug product quality in the pharmaceutical industry" [18]. These frameworks recognize that an appropriate combination of process controls and predefined material attributes may provide greater assurance of product quality than end-product testing alone [18].

Advanced spectroscopic techniques serve as the backbone of PAT implementations for RTRT. Raman spectroscopy, for instance, has been successfully deployed for real-time monitoring of product aggregation and fragmentation during clinical bioprocessing, with hardware automation and machine learning enabling measurements every 38 seconds [1]. Similarly, UV-vis spectroscopy has been utilized for inline monitoring of Protein A affinity chromatography in monoclonal antibody purification, optimizing separation conditions to achieve 95.92% recovery and 49.98% host cell protein removal [1].

Comparative Analysis of Spectroscopic Techniques for Pharmaceutical Analysis

The selection of appropriate analytical techniques is critical for successful QbD and RTRT implementation. Different spectroscopic methods offer distinct advantages and limitations for various pharmaceutical applications. The table below provides a comparative analysis of major spectroscopic techniques used in pharmaceutical analysis:

Table 1: Comparison of Spectroscopic Techniques for Pharmaceutical Analysis

| Technique | Spectral Range | Primary Applications | Sensitivity | Selectivity | Suitability for PAT |
|---|---|---|---|---|---|
| UV-Vis | ~190 nm - 1 µm | API quantification, dissolution testing, concentration measurement | Moderate | Low to Moderate | Excellent (inline probes) |
| NIR | 1 - 2.5 µm | Raw material ID, blend uniformity, moisture content | Low to Moderate | Moderate (with chemometrics) | Excellent (fiber optics compatible) |
| Raman | Varies with laser | Polymorph identification, content uniformity, reaction monitoring | Variable (enhanced with SERS) | High | Good (non-contact) |
| FT-IR | 2.5 - 25 µm | Chemical structure, functional groups, protein secondary structure | High | High | Good (ATR probes) |
| Fluorescence | 200-800 nm | Protein folding, aggregation, impurity profiling | Very High | High | Moderate |
| ICP-MS | N/A (mass-based detection) | Elemental impurities, metal quantification in biologics | Extremely High | High for elements | Poor (lab-based) |

Technique Selection Criteria

Choosing the appropriate spectroscopic technique requires careful consideration of multiple factors:

- Nature of the analyte: Molecular size, structural complexity, and chemical properties dictate suitable techniques [20].

- Detection requirements: Sensitivity and specificity needs vary significantly between applications like impurity detection versus API quantification [20].

- Sample matrix effects: Complex formulations may require techniques with higher selectivity [20].

- Process compatibility: PAT applications demand robust, reproducible methods suitable for inline or online implementation [18] [20].

As noted in recent industry analysis, "When high sensitivity and specificity are required, absorption-based methods are typically the best choice. Qualitative information can be derived from the spectral positions of the signal and quantification is possible through the common Beer-Lambert law" [20]. However, for aqueous systems or complex matrices, scattering techniques like Raman spectroscopy may be preferable due to water's low scattering cross-section [20].
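As a worked example of Beer-Lambert quantification, A = εbc can be solved for concentration, with ε the molar absorptivity (L mol⁻¹ cm⁻¹), b the path length (cm), and c the molar concentration. The function name and values below are hypothetical, and the linear relation should only be relied on within the validated range, since deviations are expected at high absorbance and concentration.

```python
def beer_lambert_concentration(absorbance, molar_absorptivity, path_length_cm=1.0):
    """Solve A = epsilon * b * c for c (mol/L).

    absorbance: dimensionless; molar_absorptivity: L mol^-1 cm^-1; path length: cm.
    """
    if molar_absorptivity <= 0 or path_length_cm <= 0:
        raise ValueError("molar absorptivity and path length must be positive")
    return absorbance / (molar_absorptivity * path_length_cm)


# Hypothetical: A = 0.45 measured in a 1 cm cell, epsilon = 15,000 L mol^-1 cm^-1
c = beer_lambert_concentration(0.45, 15_000)
print(f"c = {c:.2e} mol/L")  # c = 3.00e-05 mol/L
```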

Validation Frameworks: ICH Q2(R2) and Q14 Guidelines

The recent adoption of ICH Q14 and Q2(R2) guidelines represents a significant evolution in analytical method validation, formally incorporating lifecycle management and risk-based approaches into regulatory expectations [17] [19]. These guidelines shift the paradigm from static validation to dynamic, science-based analytical procedure management.

ICH Q14 "presents strategies that allow for a more comprehensive analytical procedure change management and risk assessment" [19]. It introduces key AQbD elements including the Analytical Target Profile (ATP) and Method Operable Design Region (MODR), which provide flexibility for post-approval changes and can reduce regulatory burden [19]. The guideline formalizes both minimal (traditional) and enhanced approaches to analytical procedure development, with the enhanced approach incorporating systematic development, risk management, and structured knowledge management [19].

ICH Q2(R2) complements Q14 by modernizing validation practices, providing "support for validation of multivariate procedures such as PAT using other techniques rather than just chromatographic procedures or offline procedures" [19]. This expansion is particularly significant for RTRT implementations that rely on multivariate models and real-time monitoring techniques [16] [18].

Traditional vs. Modern Validation Approaches

The implementation of ICH Q14 and Q2(R2) has transformed key activities throughout the analytical method lifecycle:

Table 2: Impact of ICH Q14 and Q2(R2) on Analytical Method Lifecycle Activities

| Activity | Traditional Approach | Modern Q14/Q2(R2) Approach |
|---|---|---|
| Method Development | Empirical, often dependent on implicit knowledge | ATP-driven, rigorously risk-assessed |
| Validation | Static, locked pre-submission | Continual, performance-based, focused on critical attributes |
| Method Transfer | Laborious, manual, prone to errors | Rigorous assurance of performance in new environment |
| Change Control | Typically required regulatory revalidation | Flexible, efficient within pre-validated MODR |
| Knowledge Management | Siloed, fragmented, informal | Structured, centralized, traceable |

Experimental Protocols and Research Reagent Solutions

Development and Validation of a UV Spectroscopic Method

A recent study demonstrates the development and validation of a UV spectroscopic method for quantification of gepirone hydrochloride in dissolution media [21]. The methodology provides a practical example of analytical method validation within pharmaceutical quality systems.

Experimental Protocol:

- Instrumentation: UV spectrophotometer with matched quartz cells

- Solvent Systems: 0.1N HCl and phosphate buffer (pH 6.8)

- Wavelength Selection: Scanning from 200-400 nm to identify λmax (233 nm in 0.1N HCl, 235 nm in buffer)

- Linearity Study: Concentrations from 2-20 μg/mL with correlation coefficients of 0.998 and 0.996

- Precision Evaluation: Repeatability (intra-day) and intermediate precision (inter-day)

- Accuracy Assessment: Recovery studies via standard addition

- Robustness Testing: Deliberate variations in pH, temperature, and detection wavelength

This method demonstrated excellent accuracy with recovery rates of 98-102%, high precision with minimal variability, and appropriate robustness to minor method modifications [21]. The validated method was successfully applied to dissolution testing of tablet formulations, demonstrating suitability for quality control applications [21].
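The linearity, sensitivity, and accuracy figures reported for a method like this come from a few standard calculations. The sketch below assumes NumPy. It fits an ordinary least-squares calibration line. It then estimates LOD and LOQ with the ICH Q2 calibration-curve formulas, LOD = 3.3σ/S and LOQ = 10σ/S. Here σ is the residual standard deviation of the regression, which is one of the σ estimates ICH Q2 allows, and S is the slope. Finally, it computes a standard-addition recovery. The absorbance values and spiking amounts are hypothetical and are not data from the cited study [21].

```python
import numpy as np


def linearity(conc, absorbance):
    """Ordinary least-squares calibration line and its residual standard deviation."""
    conc = np.asarray(conc, dtype=float)
    absorbance = np.asarray(absorbance, dtype=float)
    slope, intercept = np.polyfit(conc, absorbance, 1)
    r = np.corrcoef(conc, absorbance)[0, 1]
    residuals = absorbance - (slope * conc + intercept)
    s_res = np.sqrt(np.sum(residuals**2) / (conc.size - 2))  # n - 2 degrees of freedom
    return slope, intercept, r, s_res


def lod_loq(s_res, slope):
    """ICH Q2 calibration-curve estimates: LOD = 3.3*sigma/S, LOQ = 10*sigma/S."""
    return 3.3 * s_res / slope, 10.0 * s_res / slope


def standard_addition_recovery(found_spiked, found_unspiked, added):
    """Percent recovery of a known amount of analyte added to a sample."""
    return 100.0 * (found_spiked - found_unspiked) / added


# Hypothetical calibration data (ug/mL vs. absorbance) spanning 2-20 ug/mL.
conc = [2, 4, 8, 12, 16, 20]
absorbance = [0.101, 0.203, 0.398, 0.603, 0.799, 1.004]

slope, intercept, r, s_res = linearity(conc, absorbance)
lod, loq = lod_loq(s_res, slope)
recovery = standard_addition_recovery(found_spiked=12.05, found_unspiked=8.00, added=4.00)
print(f"slope={slope:.4f}, r={r:.4f}, LOD={lod:.2f} ug/mL, LOQ={loq:.2f} ug/mL")
print(f"recovery={recovery:.1f}%")
```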

Essential Research Reagent Solutions

The following table outlines key reagents and materials essential for spectroscopic method development and validation in pharmaceutical analysis:

Table 3: Essential Research Reagent Solutions for Spectroscopic Pharmaceutical Analysis

| Reagent/Material | Function | Application Examples |
|---|---|---|
| High-Purity Reference Standards | Method calibration and validation | API quantification, impurity monitoring |
| HPLC-Grade Solvents | Mobile phase preparation, sample dissolution | UV-Vis, HPLC method development |
| Buffer Salts | pH control, mimicking physiological conditions | Dissolution testing, stability studies |
| Derivatization Agents | Enhancing detection sensitivity | Fluorescence detection, chromatographic analysis |
| SERS Substrates | Signal enhancement in Raman spectroscopy | Trace analysis, low-concentration detection |
| Stable Isotope-Labeled Compounds | Internal standards for mass spectrometry | Quantitative bioanalysis, metabolite ID |

Analytical excellence serves as the fundamental enabler of modern pharmaceutical quality systems, providing the scientific foundation for QbD implementation and RTRT strategies. The evolution from traditional analytical methods to AQbD approaches, supported by ICH Q14 and Q2(R2) guidelines, represents a transformative shift in how quality is built into pharmaceutical manufacturing [17] [19].

The strategic importance of analytical capabilities extends beyond regulatory compliance to encompass manufacturing efficiency, product quality, and patient safety. As the industry advances toward increasingly sophisticated manufacturing approaches including continuous manufacturing and personalized medicines, the role of analytical excellence will only grow in significance [16]. Organizations that invest in robust analytical development, modern validation approaches, and advanced PAT capabilities will be positioned to achieve sustainable competitive advantage through enhanced quality, reduced costs, and accelerated development timelines [15] [16].

The integration of multivariate modeling, machine learning, and digital twin technologies with traditional analytical techniques promises to further enhance analytical capabilities, enabling more predictive quality systems and increasingly sophisticated RTRT applications [15] [16]. As these technologies mature, analytical excellence will continue to evolve as a strategic asset rather than a supportive function, fundamentally shaping the future of pharmaceutical quality assurance.

The pharmaceutical industry faces intensifying pressure to accelerate drug development while ensuring product quality, safety, and efficacy. This drive is fueled by market demands for faster access to therapies and stringent regulatory requirements for quality control, particularly for complex biopharmaceuticals. Spectroscopic methods have emerged as powerful tools to meet these challenges, offering rapid, non-destructive analysis crucial for streamlining development timelines. This guide objectively compares the performance of recent spectroscopic instrumentation advances, providing experimental data and protocols to validate their application in pharmaceutical analysis.

The global biopharmaceutical market, projected to reach USD 740 billion by 2030, underscores the critical need for efficient analytical technologies [22]. The industry's response has been a strategic shift toward advanced Process Analytical Technology (PAT) frameworks and real-time monitoring, which rely heavily on modern spectroscopic techniques to provide the immediate data required for rapid process optimization and quality assurance [23].

Comparative Analysis of Spectroscopic Techniques

The following section provides a data-driven comparison of contemporary spectroscopic instruments, focusing on their technical capabilities, performance metrics, and applicability to pharmaceutical research challenges.

Table 1: Recent Advances in Spectroscopic Instrumentation (2024-2025)

| Technique | Example Instrument (Vendor) | Key Features | Primary Pharmaceutical Application |
|---|---|---|---|
| FT-IR Spectrometry | Vertex NEO Platform (Bruker) [24] | Vacuum optical path to remove atmospheric interference; multiple detector positions. | Protein studies, far-IR analysis, stability testing. |
| Fluorescence Spectroscopy | Veloci A-TEEM Biopharma Analyzer (Horiba) [24] | Simultaneous Absorbance, Transmittance, & Fluorescence EEM. | Monoclonal antibody analysis, vaccine characterization. |
| Near-IR (NIR) Spectroscopy | OMNIS NIRS Analyzer (Metrohm) [24] | Nearly maintenance-free; simplified method development. | Raw material identification, quality control (QC). |
| Handheld NIR | SciAps vis-NIR [24] | Field instrument with laboratory-quality performance. | Agriculture, geochemistry, pharmaceutical QC. |
| Raman Spectroscopy | PoliSpectra (Horiba) [24] | Fully automated Raman plate reader for 96-well plates. | High-throughput screening in drug discovery. |
| Handheld Raman | TacticID-1064ST (Metrohm) [24] | Analysis guidance for users; onboard camera for documentation. | Hazardous material identification, raw material verification. |
| Microwave Spectroscopy | Commercial Platform (BrightSpec) [24] | Broadband chirped pulse microwave spectrometer. | Unambiguous determination of molecular structure. |
| QCL Microscopy | LUMOS II ILIM (Bruker) [24] | Quantum Cascade Laser source; imaging rate of 4.5 mm²/s. | High-resolution chemical imaging of formulations. |

Performance Comparison for Key Applications

Table 2: Technique Performance in Critical Pharmaceutical Workflows

| Analytical Challenge | Recommended Technique | Performance & Advantages | Experimental Data/Output |
|---|---|---|---|
| Protein Characterization & Stability | FT-IR (e.g., Vertex NEO) [24] | Eliminates atmospheric vapor interference for precise protein spectrum acquisition. | High-quality spectra in the amide I and amide II regions, enabling accurate secondary structure analysis. |
| Biologics High-Throughput Screening | Raman Plate Reader (e.g., PoliSpectra) [24] | Full automation and integration with liquid handling systems. | Rapid analysis of 96-well plates; data compatible with high-throughput screening protocols. |
| Raw Material Identification | Handheld NIR (e.g., SciAps) [24] | Non-destructive, rapid analysis (< 30 seconds) at point-of-use. | Pass/Fail result against a spectral library; reduced testing cycle time. |
| Structural Elucidation | Microwave Spectroscopy (e.g., BrightSpec) [24] | Gas-phase analysis for unambiguous determination of molecular structure and configuration. | Unique rotational spectrum providing a definitive molecular fingerprint. |
| Contaminant Analysis | QCL Microscopy (e.g., LUMOS II) [24] | High-speed imaging (4.5 mm²/s) in transmission or reflection mode. | Chemical images identifying and locating micron-sized contaminants in solid dosage forms. |

Experimental Protocols for Method Validation

Validating spectroscopic methods is imperative for regulatory compliance and ensuring data reliability. Below are detailed protocols for implementing and validating two key techniques.

Protocol for In-line Bioprocess Monitoring with Fluorescence Spectroscopy

This protocol outlines the use of fluorescence spectroscopy as a PAT tool for real-time monitoring of bioprocesses such as cell culture and microbial fermentation [23].

1. Research Reagent Solutions & Essential Materials

Table 3: Essential Materials for In-line Bioprocess Monitoring

| Item | Function |
|---|---|
| Bioreactor | Provides a controlled environment (pH, temperature, dissolved oxygen) for the bioprocess. |
| Non-invasive Fluorescence Probe | For in-line, real-time data acquisition directly from the process stream. |
| Standard Solutions (e.g., Tryptophan, NADPH) | Used for calibrating the spectroscopic system and verifying signal response. |
| Chemometrics Software | For data pre-processing and developing multivariate models (e.g., PCA, PLS). |

2. Procedure

- Step 1: Probe Installation and Sterilization. Install the fluorescence probe into a dedicated port on the bioreactor. Follow standard sterilization procedures (e.g., in-situ steam sterilization) to maintain aseptic conditions.

- Step 2: System Calibration. Prior to inoculation, collect background spectra. Use standard solutions of key fluorophores (e.g., Tryptophan, NADPH) to establish a correlation between fluorescence intensity and concentration.

- Step 3: Data Acquisition. Initiate the fermentation process. Collect fluorescence emission spectra at regular intervals (e.g., every 5-10 minutes) throughout the run duration.

- Step 4: Data Pre-processing. Process raw spectra to reduce noise and correct for baseline drift. Common techniques include Savitzky-Golay smoothing and standard normal variate (SNV) correction.

- Step 5: Model Building & Validation. Use Partial Least Squares (PLS) regression to build a model that relates the spectral data to key process variables such as cell density or product titer. Validate the model using an independent data set.

3. Experimental Workflow

Protocol for Protein Characterization using FT-IR Spectroscopy

This protocol describes the use of advanced FT-IR for characterizing the higher-order structure of proteins, a critical quality attribute for biopharmaceuticals [24] [22].

1. Research Reagent Solutions & Essential Materials

- FT-IR Spectrometer: Equipped with a vacuum system (e.g., Bruker Vertex NEO) to minimize water vapor interference.

- ATR Accessory: Vacuum ATR accessory with a sample compartment at normal pressure.

- Protein Sample: Purified protein solution at a known concentration.

- Buffer Solution: Corresponding buffer for background measurement.

2. Procedure

- Step 1: System Purge. Activate the spectrometer's vacuum system and allow sufficient time for the optical path to purge, effectively removing atmospheric water vapor and CO₂.

- Step 2: Background Collection. Place a drop of the buffer solution onto the ATR crystal and collect a background spectrum.

- Step 3: Sample Measurement. Carefully wipe the crystal clean. Apply the protein sample to the crystal and ensure even coverage. Collect the sample spectrum.

- Step 4: Data Processing. Subtract the background spectrum from the sample spectrum. Perform baseline correction and second derivative analysis on the amide I region (1600-1700 cm⁻¹) to enhance spectral resolution.

- Step 5: Spectral Analysis. Deconvolute the amide I band to identify the contributions of different secondary structure elements (e.g., α-helix, β-sheet, random coil).
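The second-derivative analysis in Step 4 can be sketched with SciPy, assuming a uniformly spaced wavenumber axis. Overlapping components appear as separate minima of the second derivative. This lets band positions be located before deconvolution. The example uses noise-free synthetic data, and its band positions, widths, and smoothing window are illustrative. The assignment ranges are approximate values often quoted in the literature, and exact limits differ between references and proteins. With real spectra, the smoothing window needs tuning, because differentiation amplifies noise.

```python
import numpy as np
from scipy.signal import find_peaks, savgol_filter

wavenumber = np.arange(1600.0, 1700.5, 0.5)  # cm^-1, 0.5 cm^-1 spacing


def gaussian(x, centre, sigma, height):
    return height * np.exp(-0.5 * ((x - centre) / sigma) ** 2)


# Synthetic, noise-free amide I band built from two overlapping components (illustrative).
absorbance = gaussian(wavenumber, 1654.0, 8.0, 1.0) + gaussian(wavenumber, 1632.0, 7.0, 0.6)


def second_derivative_bands(x, y, window=15, polyorder=3, min_prominence=0.05):
    """Locate component bands as minima of the Savitzky-Golay second derivative."""
    d2 = savgol_filter(y, window, polyorder, deriv=2, delta=x[1] - x[0])
    # Minima of d2 are maxima of -d2; ignore features weaker than a fraction of the strongest.
    idx, _ = find_peaks(-d2, prominence=min_prominence * np.max(-d2))
    return x[idx]


# Approximate amide I ranges in cm^-1 (literature-dependent; illustrative only).
ASSIGNMENTS = [
    (1610.0, 1640.0, "beta-sheet"),
    (1640.0, 1650.0, "random coil"),
    (1650.0, 1660.0, "alpha-helix"),
    (1660.0, 1695.0, "turns"),
]


def assign(position):
    for low, high, label in ASSIGNMENTS:
        if low <= position < high:
            return label
    return "unassigned"


for band in second_derivative_bands(wavenumber, absorbance):
    print(f"{band:.1f} cm^-1 -> {assign(band)}")
```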

3. Method Validation Pathway

The comparative data and experimental protocols presented demonstrate a clear trend in spectroscopic technology: the move toward higher sensitivity, greater automation, and enhanced portability and ruggedness for both lab and field use. Techniques like QCL microscopy and chirped-pulse microwave spectroscopy push the boundaries of sensitivity and structural elucidation, while handheld NIR and Raman instruments decentralize testing, accelerating decision-making [24].

A critical driver for adopting these technologies is their compatibility with Quality by Design (QbD) principles and PAT initiatives. The ability of in-line fluorescence and NIR probes to provide real-time data enables manufacturers to shift from traditional batch-end testing to continuous quality verification, significantly compressing development cycles and reducing the risk of batch failure [23].

In conclusion, the validation and implementation of modern spectroscopic methods are no longer merely an analytical choice but a strategic imperative. The instruments reviewed here, backed by rigorous experimental protocols, provide the scientific community with a powerful toolkit to navigate the intersecting pressures of market speed and regulatory compliance, ultimately accelerating the delivery of safe and effective medicines to patients.

Practical Implementation and Advanced Applications in Pharma and Biopharma

In pharmaceutical analysis, the journey from a raw sample to an interpretable and validated result is a complex, multi-stage process. Method development encompasses everything from initial sample preparation to final data interpretation, and its robustness directly impacts drug safety, efficacy, and quality. The contemporary laboratory is witnessing a paradigm shift, driven by technological advancements in automation, the integration of Process Analytical Technology (PAT), and the powerful application of Artificial Intelligence (AI) and machine learning (ML) for data processing. This guide objectively compares the performance of traditional approaches against these modern alternatives, providing a structured comparison to help researchers and drug development professionals navigate the evolving landscape of spectroscopic method development.

The Evolving Landscape of Sample Preparation

Sample preparation, historically a manual and time-intensive bottleneck, is being transformed by automation and standardized kits. These innovations directly address key challenges in pharmaceutical analysis: variability, throughput, and the complexity of modern drug modalities like oligonucleotides and biotherapeutics.

Performance Comparison: Manual vs. Automated Preparation

The table below summarizes a comparative analysis of manual versus automated sample preparation techniques, based on current vendor solutions and methodologies.

Table 1: Comparison of Manual and Automated Sample Preparation Approaches

| Feature | Traditional Manual Preparation | Automated & Kit-Based Solutions |
|---|---|---|
| Throughput | Low to moderate; limited by analyst speed and endurance. | High; capable of unsupervised operation for numerous samples [25]. |
| Consistency & Error Rate | Prone to human error and inter-analyst variability; consistency is a challenge. | Greatly reduces human error; ensures highly reproducible workflows [25]. |
| Typical Applications | Broad but often require extensive method development. | Targeted workflows for specific challenges (e.g., PFAS, oligonucleotides, peptide mapping) [25]. |
| Solvent Consumption | Often high due to manual washing and extraction steps. | Designed to reduce or eliminate solvent use, aligning with green chemistry principles [25]. |
| Integration with Analysis | Offline; requires manual transfer, increasing contamination risk. | Can be integrated into online preparation, merging extraction, cleanup, and separation [25]. |
| Expertise Barrier | Can be intimidating and requires significant training to master. | Simplified via ready-made kits with standards and optimized protocols [25]. |

Experimental Protocol: Automated SPE for Oligonucleotide Analysis

Objective: To reproducibly extract and purify oligonucleotide therapeutics from a complex biological matrix for subsequent LC-MS analysis, minimizing manual handling and variability.

Materials & Reagents:

- Sample: Plasma containing the oligonucleotide drug candidate and its metabolites.

- Automated System: A liquid handling robot or a chromatographic system with an automated sample preparation module.

- Extraction Kit: A commercial weak anion exchange (WAX) solid-phase extraction (SPE) plate or cartridge, specifically designed for oligonucleotides [25].

- Reagents: Traceable buffering and elution reagents provided in the kit (e.g., binding buffer, wash buffer, elution buffer) [25].

Method:

- Sample Loading: The automated system dispenses the plasma sample onto the conditioned WAX SPE cartridge.

- Binding & Washing: The system sequentially applies binding and wash buffers according to the optimized protocol. Anion exchange interactions selectively retain the oligonucleotides while impurities are washed away.

- Elution: A precise volume of a specialized elution buffer is applied to release the purified oligonucleotides from the solid phase.

- Direct Injection: The eluate is automatically transferred and injected into the LC-MS system for separation and quantification. This direct transfer minimizes processing time and the potential for sample loss or contamination [25].
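One common way to check that an automated extraction is reproducible is to compare three sets of peak areas. The first set is analyte spiked into plasma before extraction. The second is analyte spiked into blank extract after extraction. The third is analyte prepared in neat solvent. From these, recovery, matrix effect, and the %RSD of replicates can be reported. The sketch below assumes that design. The function names and all peak areas are hypothetical, and acceptance limits would come from the applicable bioanalytical guidance, not from this example.

```python
from statistics import mean, stdev


def extraction_recovery(pre_spiked_area, post_spiked_area):
    """Analyte spiked into plasma before extraction vs. spiked into blank extract after it."""
    return 100.0 * pre_spiked_area / post_spiked_area


def matrix_effect(post_spiked_area, neat_area):
    """Blank extract spiked after extraction vs. the same amount in neat solvent."""
    return 100.0 * post_spiked_area / neat_area


def percent_rsd(values):
    """Relative standard deviation (sample SD / mean) in percent."""
    return 100.0 * stdev(values) / mean(values)


# Hypothetical LC-MS peak areas for replicate automated extractions.
pre_spiked = [810_000, 790_000, 800_000]
post_spiked_mean = 1_000_000
neat_mean = 1_050_000

recoveries = [extraction_recovery(a, post_spiked_mean) for a in pre_spiked]
print(f"mean recovery = {mean(recoveries):.1f}%, RSD = {percent_rsd(recoveries):.2f}%")
print(f"matrix effect = {matrix_effect(post_spiked_mean, neat_mean):.1f}%")
```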

Instrumentation and Analysis: Core Analytical Technologies

The core analysis stage is where spectroscopic and chromatographic techniques characterize the prepared sample. Recent advancements highlight a divergence between high-precision laboratory instruments and portable field-ready devices, alongside a growing trend of hyphenated techniques.

Performance Comparison of Recent Spectroscopic Instrumentation

The following table compares selected new spectroscopic instruments introduced from 2024 to 2025, highlighting their application-specific strengths.

Table 2: Comparison of Selected New Spectroscopic Instrumentation (2024-2025)

| Instrument / Platform | Technique | Key Feature | Primary Application in Pharma/Biopharma |
|---|---|---|---|
| Horiba Veloci A-TEEM | A-TEEM (Absorbance, Transmittance, EEM) | Simultaneously collects multiple data dimensions from a single sample. | Biopharmaceutical analysis, including monoclonal antibodies, vaccine characterization, and protein stability [24]. |
| Bruker Vertex NEO | FT-IR Spectrometer | Vacuum optical path to remove atmospheric interferences. | High-sensitivity protein studies and work in the far-IR region [24]. |
| ProteinMentor | QCL Microscopy | Designed specifically for protein-containing samples. | Protein and product impurity identification, stability information, monitoring deamidation [24]. |
| Metrohm TacticID-1064ST | Handheld Raman (1064 nm) | Built-in camera and note-taking for documentation; analysis guidance. | Identification of hazardous materials; raw material verification [24]. |
| BrightSpec MW Spectrometer | Broadband Chirped Pulse Microwave | First commercial instrument of its type for unambiguous gas-phase structure determination. | Determination of molecular structure and configuration of small molecules [24]. |

Data Interpretation: The Rise of AI and Machine Learning

Data interpretation is undergoing the most significant transformation, with AI and ML moving from niche applications to mainstream tools. These methods are enhancing everything from spectral calibration to the classification of complex samples.

Performance Comparison: Conventional Chemometrics vs. AI/ML

Table 3: Comparison of Conventional Chemometrics and AI/ML Approaches for Spectral Data Interpretation

| Feature | Conventional Chemometrics (e.g., PCA, PLS) | AI/ML Approaches (e.g., CNNs, Transformers) |
|---|---|---|
| Model Flexibility | Primarily linear; variants exist (e.g., Kernel PLS) but are less common. | Can capture complex, non-linear relationships within high-dimensional data [26] [27]. |
| Data Handling | Effective for structured, lower-dimensional data. | Excels with large, complex datasets (e.g., spectral images, high-throughput screens) [26]. |
| Prediction Accuracy | Robust for many applications but may miss subtle, non-linear spectral patterns. | Can show superior prediction accuracy by identifying hierarchical features traditional models miss [28] [26]. |
| Interpretability | Highly interpretable; components and loadings have chemical meaning. | Often a "black box", though attention mechanisms in transformers are improving interpretability [26]. |
| Automation & Adaptability | Static models requiring manual recalibration. | Potential for adaptive calibration systems that self-correct for instrument or sample drift [26]. |
| Computational Demand | Lower. | Higher, especially for deep learning models during training. |

Experimental Protocol: AI-Developed Method for LIBS Spectral Discrimination

Objective: To implement a novel AI-developed method for discriminating between toner samples from various printers and photocopiers using Laser-Induced Breakdown Spectroscopy (LIBS) data, without user-led preprocessing [28].

Materials & Software:

- Spectral Data: LIBS spectra collected from toner samples.

- Computing Environment: Python or a similar platform capable of running machine learning workflows.

- Algorithms: A combination of normalization, interpolation, and peak detection techniques, integrated into an AI model. For comparison, standard PCA and PLS-DA scripts are required [28].

Method:

- Data Acquisition: Collect LIBS spectra from the set of toner samples.

- AI Model Processing: Feed the raw spectral data into the AI-developed model, which automatically performs:

- Normalization: Scales the spectra to a common intensity scale.

- Interpolation: Ensures all spectra are on a consistent wavelength axis.

- Peak Detection: Identifies and characterizes significant spectral peaks [28].

- Conventional Processing: Process the same dataset using conventional PCA and PLS-DA, which may require manual preprocessing steps like baseline correction and alignment.

- Performance Evaluation: Quantitatively evaluate and compare the discrimination accuracy of both methods using statistical analysis, including:

- Accuracy difference percentage.

- Component-wise variance analysis.

- Paired t-test.

- Cross-validation tests [28].

Result: The cited study confirmed a significant improvement in accuracy with the AI-developed method compared to conventional PCA and PLS-DA, demonstrating the potential of AI to enhance efficiency and accuracy in spectroscopic classification for forensic and related applications [28].

The Scientist's Toolkit: Key Research Reagent Solutions

Modern method development relies on a suite of specialized reagents and consumables that are critical for success.

Table 4: Essential Research Reagent Solutions for Spectroscopic Method Development

| Item | Function |
|---|---|
| Weak Anion Exchange (WAX) SPE Kits | Selective extraction and purification of acidic analytes like oligonucleotides from complex matrices prior to LC-MS analysis [25]. |
| Stacked SPE Cartridges (e.g., GCB/WAX) | Combined phases for comprehensive cleanup; used for isolating challenging compounds like PFAS while minimizing background interference [25]. |
| Rapid Peptide Mapping Kits | Streamline the digestion of proteins into peptides for characterization by mass spectrometry, reducing digestion time significantly (e.g., from overnight to under 2.5 hours) [25]. |
| Ultrapure Water (e.g., from Milli-Q SQ2) | Provides water of consistent, high purity for sample preparation, buffer and mobile phase preparation, and sample dilution to prevent contamination and background interference [24]. |
| Natural Deep Eutectic Solvents (NADES) | Serve as green alternatives for organic solvents in sample preparation prior to LC-MS, aligning with sustainable chemistry principles [29]. |


Integrated Workflow Visualization

The following diagram synthesizes the key stages of the modern analytical method development process, from sample to insight, integrating the concepts of automation, PAT, and AI-driven interpretation.

Diagram Title: Modern Analytical Method Development Workflow

This diagram illustrates the integrated, technology-driven workflow of modern analytical method development. The linear progression from sample to validated result is powered by key technological advancements at each stage: automation in sample preparation, PAT and hyphenated systems for analysis, and AI/ML for data interpretation.

The integration of PAT is strongly encouraged by regulatory agencies like the U.S. FDA for realizing Quality by Design (QbD) concepts. The ultimate goal of PAT is not merely process monitoring but to validate and ensure Good Manufacturing Practice (GMP) compliance, guaranteeing safe, effective, and quality-controlled products [30]. Successful PAT integration into a GMP framework requires a thorough understanding of regulatory requirements throughout the entire technology lifecycle, from selection and implementation to operation and maintenance [30].

In conclusion, the field of spectroscopic method development is moving towards tighter integration, greater intelligence, and enhanced robustness. The comparison data and protocols presented herein demonstrate that while conventional methods remain valid for many applications, the adoption of automated sample preparation, advanced PAT instrumentation, and AI-driven data interpretation offers tangible benefits in accuracy, efficiency, and consistency. For researchers in pharmaceutical analysis, embracing these technologies within a sound regulatory framework is key to developing validated methods that meet the demands of modern drug development.

In the biopharmaceutical industry, ensuring the quality, safety, and efficacy of products is paramount. Quality Assurance (QA) and Quality Control (QC) represent two pillars of a comprehensive Quality Management System (QMS). QA is a proactive, process-oriented approach focused on preventing defects by establishing robust systems and procedures. In contrast, QC is a reactive, product-oriented function centered on detecting defects in final products through inspection and testing [31] [32]. For complex biopharmaceuticals, including recombinant proteins, monoclonal antibodies (mAbs), and cell therapies, spectroscopic techniques provide indispensable tools for assessing critical quality attributes (CQAs) such as identity, purity, and potency [22].

The global biopharmaceutical market, valued at approximately USD 452 billion in 2024, relies on advanced analytical techniques to characterize products whose structural complexity and heterogeneity present significant analytical challenges [22]. This guide objectively compares the performance of key spectroscopic techniques in addressing these challenges, framed within the essential context of analytical method validation to ensure reliability and regulatory compliance.

Analytical Method Validation in Spectroscopy

The validation of analytical methods is a foundational requirement in pharmaceutical QC. Validation is the process of experimentally proving the degree of confidence in analytical results, ensuring their reliability, precision, and accuracy [33]. The concept of an analytical method lifecycle, as advocated by the US Pharmacopeia (USP), encompasses stages from initial method design to ongoing procedure performance verification [34]. A "fit-for-purpose" approach is often adopted, where validation requirements are tailored to the product's development stage, with full validation required for commercial products according to guidelines like ICH Q2(R1) [34].

Key validation parameters include accuracy, precision, specificity, and detection limits. The Lower Limit of Detection (LLD), for instance, defines the smallest amount of analyte detectable with 95% confidence and is crucial for trace analysis [33]. Method validation is not a one-time event; it extends to method transfer between laboratories, often managed through risk-based approaches like comparative testing or covalidation to ensure consistency across different testing sites [34].
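The definition of LLD in [33] is not reproduced here. One widely used statistical formulation, following Currie, assumes a normally distributed blank with constant standard deviation σ₀. It also sets both the false-positive and false-negative rates at 5%, which corresponds to the "95% confidence" above. Under these assumptions:

$$
L_C = z_{0.95}\,\sigma_0 \approx 1.645\,\sigma_0, \qquad
L_D = L_C + z_{0.95}\,\sigma_0 \approx 3.29\,\sigma_0, \qquad
c_{\mathrm{LOD}} = \frac{L_D}{S} \approx \frac{3.3\,\sigma_0}{S}
$$

Here $L_C$ is the critical (decision) level and $L_D$ is the detection limit, both in net-signal units. $S$ is the calibration slope, so the last expression matches the factor of 3.3 used in ICH Q2. Technique-specific definitions, such as counting-statistics expressions of LLD, can differ from this form.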

Identity Testing

Identity testing confirms that a material is what it claims to be. This is a fundamental QA/QC requirement to prevent mix-ups and ensure patient safety.

Experimental Protocols for Identity Testing

- Near-Infrared (NIR) Spectroscopy: For raw material identification, collect spectra of unknown samples and reference standards using a handheld or benchtop NIR spectrometer. Pre-process spectra (e.g., Savitzky-Golay smoothing, standard normal variate correction) and compare against a validated spectral library using a statistical metric like the correlation coefficient or Mahalanobis distance. A match above a pre-defined threshold confirms identity [24] [35].

- Raman Spectroscopy: For finished product identification, particularly in blister packs or glass vials, use a Raman spectrometer with 1064 nm excitation to minimize fluorescence. Acquire spectra through the packaging and use chemometric algorithms to compare the fingerprint region (e.g., 1800-600 cm⁻¹) with a reference spectrum of the approved drug product [24].

- Ultraviolet-Visible (UV-Vis) Spectroscopy: For solution-based biologics like mAbs, dilute the sample to an appropriate concentration and acquire a full UV-Vis spectrum (e.g., 240-350 nm). The second derivative spectrum provides a unique fingerprint based on the tyrosine and tryptophan content and environment, which should be visually and statistically identical to the reference standard [22].
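The library comparison in the NIR bullet can be sketched as a correlation match. This minimal illustration assumes that all spectra share one wavelength axis and that NumPy and SciPy are available. The spectra, band positions, and the 0.95 threshold are hypothetical. A validated method would set its threshold from replicate measurements of reference materials. SNV is not applied before the correlation, because the Pearson coefficient is already unaffected by a spectrum's offset and intensity scale, so SNV would not change the score. A Savitzky-Golay first derivative is applied instead to reduce linear baseline tilt.

```python
import numpy as np
from scipy.signal import savgol_filter


def preprocess(spectrum, window=11, polyorder=2):
    """Savitzky-Golay first derivative: a linear baseline tilt becomes a constant offset."""
    return savgol_filter(np.asarray(spectrum, dtype=float), window, polyorder, deriv=1)


def match_score(sample, reference):
    """Pearson correlation of preprocessed spectra; insensitive to offset and intensity scale."""
    return float(np.corrcoef(preprocess(sample), preprocess(reference))[0, 1])


def identify(sample, library, threshold=0.95):
    """Best library match, or None if no score reaches the (hypothetical) threshold."""
    scores = {name: match_score(sample, ref) for name, ref in library.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] >= threshold else None), scores


# Synthetic NIR-like library on a shared wavelength axis (nm); band positions are illustrative.
axis = np.arange(1000.0, 1800.0, 1.0)


def bands(*centres, sigma=10.0):
    return sum(np.exp(-0.5 * ((axis - c) / sigma) ** 2) for c in centres)


library = {"material_A": bands(1100, 1400), "material_B": bands(1200, 1600)}
rng = np.random.default_rng(0)
# Material A measured with lower intensity, an offset, a baseline tilt, and noise.
sample = 0.8 * library["material_A"] + 0.05 + 1e-4 * (axis - 1000) + rng.normal(0, 0.001, axis.size)
unknown = bands(1300, 1700)

print(identify(sample, library)[0])   # material_A
print(identify(unknown, library)[0])  # None
```

The Mahalanobis-distance approach also mentioned above would instead model the spread of several reference spectra per material, for example in PCA score space. Because it accounts for normal batch-to-batch variability, it can be more sensitive to subtle deviations than a single-spectrum correlation.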

Comparison of Spectroscopic Techniques for Identity Testing

Table 1: Performance Comparison for Identity Testing

| Technique | Simplicity & Speed | Specificity | Key Applications | Limitations |
|---|---|---|---|---|
| NIR Spectroscopy | High; seconds per measurement | Moderate to High | Raw material identification, counterfeit drug detection [35] | Limited for aqueous solutions; requires robust spectral libraries |
| Raman Spectroscopy | High; non-contact & through packaging | High | In-process verification, finished product inspection [24] | Can be affected by fluorescence; weak signal for some compounds |
| UV-Vis Spectroscopy | Very High; minimal sample prep | Moderate | Protein identity confirmation, mAb screening [22] | Low structural specificity; primarily for solution-state analysis |

Purity Analysis

Purity analysis detects and quantifies impurities, which can include product-related variants (e.g., aggregates, fragments) or process-related contaminants.

Experimental Protocols for Purity Analysis

- Size-Exclusion Chromatography with UV/FLS (SEC-UV/FLS): This is the gold standard for quantifying protein aggregates and fragments. Use a calibrated SEC column with phosphate-buffered saline at neutral pH as the mobile phase. Monitor the eluent with both UV (e.g., 280 nm) and fluorescence (FLS) detectors. Quantify the high-molecular-weight (HMW) and low-molecular-weight (LMW) species by integrating their peak areas and expressing each as a percentage of the total integrated area, which is dominated by the main monomer peak [34].

- A-TEEM for Protein Aggregation: Use a specialized A-TEEM (Absorbance-Transmission-Excitation-Emission Matrix) instrument. For a mAb sample, first acquire the absorbance spectrum to determine concentration. Then, collect the fluorescence EEM from 240-300 nm excitation and 300-500 nm emission. The EEM signature is highly sensitive to conformational changes and can detect and quantify small, soluble aggregates that may be missed by SEC [24].

- High-Resolution Mass Spectrometry (HR-MS) for Product-Related Impurities: While not a classical spectroscopic technique, MS is often coupled with optical detectors. Desalt the protein sample and introduce it via electrospray ionization. The resulting mass spectrum will show the main intact mass peak along with smaller peaks representing impurities like glycated, oxidized, or truncated species, which are quantified based on relative abundance [22].
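The SEC percentage calculation can be sketched as drop-line integration of a baseline-corrected trace. The sketch assumes NumPy, uniformly sampled data, and fixed integration windows. The retention times, peak shapes, and window limits are synthetic and hypothetical. Each species is reported as a percentage of the total integrated area, so the three values sum to 100%.

```python
import numpy as np


def percent_areas(time, signal, windows):
    """Integrate a baseline-corrected chromatogram in each window; report % of total area."""
    dt = time[1] - time[0]  # assumes uniform sampling
    areas = {name: signal[(time >= start) & (time < end)].sum() * dt
             for name, (start, end) in windows.items()}
    total = sum(areas.values())
    return {name: 100.0 * area / total for name, area in areas.items()}


def peak(t, centre, sigma, height):
    return height * np.exp(-0.5 * ((t - centre) / sigma) ** 2)


# Synthetic SEC-UV trace (minutes vs. AU): aggregate, monomer, and fragment peaks.
time = np.arange(0.0, 20.0, 0.01)
signal = peak(time, 7.0, 0.15, 0.02) + peak(time, 8.5, 0.20, 1.0) + peak(time, 10.5, 0.20, 0.01)

# Drop-line integration windows (hypothetical retention-time limits, minutes).
windows = {"HMW": (6.0, 7.75), "monomer": (7.75, 9.6), "LMW": (9.6, 12.0)}
for species, pct in percent_areas(time, signal, windows).items():
    print(f"{species}: {pct:.2f}%")
```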

Comparison of Spectroscopic Techniques for Purity Analysis

Table 2: Performance Comparison for Purity Analysis

| Technique | Key Impurity Type Detected | Quantitative Performance (LOQ) | Information Depth | Throughput |
|---|---|---|---|---|
| SEC-UV | Size variants (aggregates, fragments) | ~0.1% for HMW/LMW [34] | Low (size-based separation only) | Medium (10-30 min/run) |
| A-TEEM | Soluble aggregates, conformational changes | Not reported in the cited sources | High (conformational and compositional data) | High (minutes per sample) |
| HR-MS | Chemical modifications (oxidation, deamidation) | ~0.01% for some PTMs [22] | High (exact mass identification) | Low to Medium |

Potency Determination

Potency is a critical quality attribute reflecting the biological activity of a drug product. Spectroscopic methods can serve as orthogonal or surrogate methods for traditional cell-based bioassays.

Experimental Protocols for Potency Determination

- Circular Dichroism (CD) for Higher-Order Structure (HOS): For a protein therapeutic, prepare a solution in a buffer with low far-UV absorbance (e.g., dilute phosphate; chloride absorbs strongly in the far UV and should be kept low). Acquire far-UV CD spectra (e.g., 190-250 nm) to assess secondary structure (α-helix, β-sheet) and near-UV CD spectra (250-350 nm) to probe tertiary structure. Compare the spectral signature with that of a reference standard of known potency. A significant deviation indicates a change in HOS that is likely to affect biological activity [22].

- QCL Microscopy for Protein Particle Analysis: Use a Quantum Cascade Laser (QCL)-based infrared microscope. Deposit a defined volume of the protein formulation on an IR-transparent slide and allow it to dry. Acquire infrared images in transmission mode across a defined area. The system identifies and counts sub-visible particles from their protein-specific IR absorption (e.g., the amide I and amide II bands), providing a physical-stability metric that can be correlated with potency [24].

- Bioassays with Spectroscopic Detection (e.g., ELISA): Although ELISA is an immunoassay, its readout is usually optical. Incubate the sample in an antigen-coated plate, then add an enzyme-linked detection antibody. Add a colorimetric or chemiluminescent substrate and read absorbance (colorimetric) or luminescence (chemiluminescent) on a microplate reader. Within the working range of the assay, the signal increases with the concentration of active (binding-competent) protein; concentrations are interpolated from a standard curve, typically fitted with a four-parameter logistic (4PL) model, and potency is reported relative to the reference standard [22].
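Two of the calculations behind these protocols can be sketched in Python: converting raw CD signal to mean residue ellipticity, so that sample and reference spectra measured at different concentrations can be compared, and back-calculating an ELISA concentration from a 4PL standard curve. This is a minimal sketch under stated assumptions: the function names are hypothetical, the normalized RMSD is only one of several possible spectral-similarity metrics, and the 4PL parameters are assumed to have been fitted beforehand. Reporting potency as back-calculated concentration over nominal concentration is a simplification; regulated bioassays usually estimate relative potency from parallel dose-response curves.

```python
"""Illustrative CD and ELISA calculations (hypothetical names and data)."""
import math
from typing import Sequence


def mean_residue_ellipticity(theta_mdeg: float, mrw: float,
                             path_cm: float, conc_mg_ml: float) -> float:
    """Convert observed ellipticity (mdeg) to mean residue ellipticity
    (deg cm^2 dmol^-1): theta * MRW / (10 * path length * concentration).

    MRW (mean residue weight) = molecular mass / (number of residues - 1).
    """
    return theta_mdeg * mrw / (10.0 * path_cm * conc_mg_ml)


def normalized_rmsd(sample: Sequence[float], reference: Sequence[float]) -> float:
    """RMS difference between two equally sampled spectra, scaled by the
    range of the reference spectrum (one of several possible metrics)."""
    if not reference or len(sample) != len(reference):
        raise ValueError("spectra must be non-empty and equally sampled")
    span = max(reference) - min(reference)
    if span == 0:
        raise ValueError("reference spectrum is flat")
    sq = sum((s - r) ** 2 for s, r in zip(sample, reference))
    return math.sqrt(sq / len(reference)) / span


def inverse_4pl(y: float, a: float, b: float, c: float, d: float) -> float:
    """Back-calculate concentration from y = d + (a - d) / (1 + (x / c) ** b).

    a: response at zero concentration, d: response at saturation,
    c: inflection point (EC50), b: slope factor.
    """
    if not min(a, d) < y < max(a, d):
        raise ValueError("response outside the quantifiable range of the curve")
    return c * ((a - d) / (y - d) - 1.0) ** (1.0 / b)


def relative_potency(measured: float, dilution: float, nominal: float) -> float:
    """Back-calculated active concentration as a percentage of nominal."""
    return 100.0 * measured * dilution / nominal


if __name__ == "__main__":
    # Hypothetical far-UV reading: -12 mdeg, MRW 110 Da, 0.1 cm cell, 0.2 mg/mL
    print(mean_residue_ellipticity(-12.0, 110.0, 0.1, 0.2))
    ref = [-6600.0, -5200.0, -1500.0, 800.0]  # hypothetical reference spectrum
    smp = [-6450.0, -5150.0, -1550.0, 760.0]  # hypothetical test spectrum
    print(round(normalized_rmsd(smp, ref), 4))
    # Hypothetical fitted 4PL parameters; concentrations in ng/mL
    conc = inverse_4pl(1.10, a=0.05, b=1.2, c=50.0, d=2.0)
    print(round(relative_potency(conc, dilution=100.0, nominal=5000.0), 1))
```

Any acceptance limit on the spectral difference would have to be set during method validation; none is implied here.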

Comparison of Techniques for Potency Determination

Table 3: Performance Comparison for Potency Determination

| Technique | Measurement Principle | Correlation to Bioactivity | Precision (RSD) | Key Advantage |
|---|---|---|---|---|
| Cell-Based Bioassay | Direct measurement of biological response | Direct (gold standard) | Can be >15% [22] | Measures true biological function |
| CD Spectroscopy | Probes higher-order structure (HOS) | High (surrogate) | ~1-3% | Rapid, high-precision HOS assessment |
| QCL Microscopy | Quantifies sub-visible particles | Indirect (correlative) | Not reported in the cited sources | Links physical stability to potency loss |
| ELISA (UV-Vis Readout) | Binding to target antigen | High (for binding assays) | ~5-10% | High throughput and sensitivity |

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Research Reagents and Materials for Spectroscopic QA/QC

| Item | Function/Application | Critical Quality Attributes |
|---|---|---|
| Ag-Cu Alloy Standards | Calibration and validation of XRF spectrometers for elemental impurity analysis [33]. | Certified composition (e.g., Ag₀.₇₅Cu₀.₂₅), homogeneity, traceability to SI units. |
| USP/EP Reference Standards | System suitability testing and validation of compendial methods (e.g., verification of a USP method) [34]. | Purity, identity, and potency as defined by pharmacopeial monographs. |
| Forced Degradation Samples | Establishing assay specificity during method validation [34]. | Intentionally generated samples containing known impurities (e.g., oxidized, aggregated species). |
| Size-Exclusion Columns | Separation of protein aggregates and fragments for SEC-UV/FLS analysis. | Pore size, resolution, recovery, and minimal non-specific binding for proteins. |
| Ultrapure Water | Solvent for mobile phase and sample preparation in LC-UV and other sensitive analyses [24]. | Resistivity >18 MΩ·cm, low TOC, free of particulates and endotoxins. |


Spectroscopic methods provide a complementary toolkit for the core QA/QC applications of identity, purity, and potency in pharmaceutical analysis. Choosing a technique requires balancing specificity, sensitivity, throughput, and regulatory fitness for purpose. NIR and Raman spectroscopy offer rapid, non-destructive identity testing, while newer techniques such as A-TEEM and QCL microscopy are emerging for in-depth purity and structural analysis. The value of these techniques, particularly NIR, lies in their potential for rapid, non-destructive analysis that can be deployed at the point of care [35].

A critical overarching theme is that the analytical value of any technique is contingent upon a rigorous method validation framework, ensuring that the data generated is reliable, accurate, and precise [34] [33]. As the biopharmaceutical landscape grows in complexity, the integration of advanced spectroscopic techniques, supported by robust validation and a fit-for-purpose strategy, will be crucial for ensuring the quality, safety, and efficacy of future medicines [22].

Role in Process Analytical Technology (PAT) for Real-Time Monitoring

Process Analytical Technology (PAT) is a system for designing, analyzing, and controlling manufacturing through timely measurements of critical quality and performance attributes of raw and in-process materials, with the goal of ensuring final product quality [36]. The U.S. Food and Drug Administration (FDA) has encouraged its adoption to facilitate a science-based approach to manufacturing aimed at minimizing variability and enhancing product quality [30]. Spectroscopic techniques form the backbone of modern PAT frameworks: by providing non-invasive, molecular-level information directly from the process stream, they enable real-time monitoring, shorter production cycles, and real-time release of product [30] [23]. This guide compares the performance of major spectroscopic PAT tools, with experimental data and methodologies relevant to pharmaceutical researchers and development professionals.

Comparative Analysis of Major Spectroscopic PAT Techniques

The selection of an appropriate spectroscopic technique is critical for effective PAT implementation. The table below compares the most widely used spectroscopic methods, based on recent research and applications.

Table 1: Performance Comparison of Key Spectroscopic PAT Techniques

| Technique | Spectral Range | Key Measurable Parameters | Detection Limits | Analysis Time | Major Strengths | Key Limitations |
|---|---|---|---|---|---|---|
| NIR Spectroscopy [30] | 780–2500 nm | C–H, O–H, N–H overtone and combination bands (composition); moisture content; blend uniformity | Moderate (% to ppm level) | Real-time (seconds) | Non-invasive; deep penetration; fiber-optic compatible | Weak absorption bands; complex chemometrics required; water interference |
| Raman Spectroscopy [1] [37] | Raman shift (excitation wavelength varies with laser) | Molecular fingerprints; crystal forms; API concentration | Low (ppm) | Real-time (seconds to minutes) | Minimal sample prep; insensitive to water; specific molecular information | Fluorescence interference; weak signals; equipment cost |
| FTIR Spectroscopy [1] [37] | Mid-IR: 4000–400 cm⁻¹ | Functional groups; protein secondary structure; media quality | Moderate | Real-time (seconds) | Excellent molecular specificity; robust identification | ATR sampling probes only the surface layer; water absorption interferes |
| UV-Vis Spectroscopy [1] [38] | 190–800 nm | API concentration; dissolved oxygen; cell density | High (ppb for some analytes) | Real-time (seconds) | Simple operation; inexpensive; high throughput | Limited structural info; requires chromophores; scattering issues |
| Fluorescence Spectroscopy [1] [23] | Varies with fluorophore | Protein folding; cellular metabolism; microenvironments | Very High (single molecule) | Real-time (seconds) | Extreme sensitivity; minimal sample volume; spatial resolution | Requires fluorophores; photobleaching; background interference |

Experimental Protocols for Spectroscopic PAT

Protocol: In-line NIR for Blend Potency Monitoring

This methodology, derived from a commercial pharmaceutical implementation for triple-active oral solid dosage forms, demonstrates a complete PAT workflow for real-time potency assessment [39].

Objective: To monitor and control the potency of three active pharmaceutical ingredients (APIs) in a final blend using in-line NIR spectroscopy, enabling real-time release testing.

Materials and Equipment:

- NIR Spectrometer with fiber-optic probe (1100–2200 nm range)

- Integration Platform for continuous manufacturing line

- Chemometric Software for multivariate model development

- Reference Method: High-performance liquid chromatography (HPLC) system

- Sample Set: Final blend powders with controlled variations in API concentration (90-110% of target), excipient ratios, and process parameters

Experimental Workflow:

- Data Collection: Spectra are collected in-line from the final blend powder using a NIR probe positioned directly in the blending unit. Experiments are designed using Quality by Design (QbD) principles, incorporating variations in APIs, excipients, multiple lots, and process parameters [39].

- Spectral Pre-processing: Raw spectra undergo three treatment steps to enhance signal quality:

- Smoothing across the entire spectrum (1100–2200 nm)

- Standard Normal Variate (SNV) applied to the 1200–2100 nm range to reduce scatter effects

- Mean centering within the specific prediction ranges (1245–1415 nm and 1480–1970 nm) [39]

- Model Development: Partial Least Squares (PLS) regression models are built for each API, correlating pre-processed NIR spectra with HPLC reference data. Linear Discriminant Analysis (LDA) models are additionally developed to classify potency as "typical" (95-105%), "exceeding low" (<94.5%), or "exceeding high" (>105%) [39].

- Validation: Models are challenged with:

- A dedicated sample set not used in calibration

- Hundreds of samples with wider potency ranges analyzed by HPLC

- Historical production data (tens of thousands of spectra) to ensure robustness across batch and lot variability [39]

- Implementation: Deployed models provide real-time potency results during manufacturing. System diagnostics monitor for lack of fit or excessive variation from the model center, triggering alarms if thresholds are exceeded [39].
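The pre-processing and modeling steps in this protocol can be sketched with NumPy. This is not the cited implementation [39]: the moving-average smoother stands in for the unspecified smoothing step, the model uses a textbook NIPALS PLS1 algorithm (one model per API), the LDA classification step is not shown, and the demo uses synthetic spectra. Mean centering is applied inside the model using calibration-set means, so new spectra are centered exactly as the calibration spectra were.

```python
"""Sketch of NIR blend-potency modeling: smoothing, SNV, windowing, PLS1.

Assumptions: moving-average smoothing, NIPALS PLS1, synthetic demo data.
"""
import numpy as np


def smooth(spectra: np.ndarray, window: int = 5) -> np.ndarray:
    """Moving-average smoothing along the wavelength axis (stand-in smoother)."""
    kernel = np.ones(window) / window
    return np.array([np.convolve(s, kernel, mode="same") for s in spectra])


def snv(spectra: np.ndarray) -> np.ndarray:
    """Standard Normal Variate: centre and scale each spectrum on its own."""
    mu = spectra.mean(axis=1, keepdims=True)
    sd = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - mu) / sd


def preprocess(spectra: np.ndarray, wl: np.ndarray) -> np.ndarray:
    """Smooth the full range, apply SNV on 1200-2100 nm, keep prediction windows."""
    x = smooth(spectra)
    in_snv = (wl >= 1200) & (wl <= 2100)
    x, wl = snv(x[:, in_snv]), wl[in_snv]
    keep = ((wl >= 1245) & (wl <= 1415)) | ((wl >= 1480) & (wl <= 1970))
    return x[:, keep]


def pls1_fit(X: np.ndarray, y: np.ndarray, n_comp: int):
    """NIPALS PLS1. Mean centering uses calibration-set means."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    E, f = X - x_mean, y - y_mean
    W, P, q = [], [], []
    for _ in range(n_comp):
        w = E.T @ f
        w = w / np.linalg.norm(w)      # weight vector
        t = E @ w                      # scores
        tt = t @ t
        p = E.T @ t / tt               # X loadings
        c = (f @ t) / tt               # y loading
        E = E - np.outer(t, p)         # deflate X
        f = f - c * t                  # deflate y
        W.append(w)
        P.append(p)
        q.append(c)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    coef = W @ np.linalg.solve(P.T @ W, q)  # regression vector in X space
    return coef, x_mean, y_mean


def pls1_predict(X: np.ndarray, model) -> np.ndarray:
    coef, x_mean, y_mean = model
    return (X - x_mean) @ coef + y_mean


def rmsep(pred, ref) -> float:
    """Root mean square error of prediction against reference (e.g., HPLC) values."""
    pred, ref = np.asarray(pred, float), np.asarray(ref, float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    wl = np.arange(1100, 2201, 2)                # nm, matching the probe range
    potency = rng.uniform(90, 110, 60)          # % of target (synthetic HPLC values)
    band = np.exp(-((wl - 1700) / 40.0) ** 2)   # hypothetical API band
    spectra = (1.0 + 0.01 * potency[:, None] * band
               + rng.normal(0, 0.002, (60, wl.size)))
    spectra *= rng.uniform(0.9, 1.1, (60, 1))   # multiplicative scatter
    X = preprocess(spectra, wl)
    model = pls1_fit(X[:40], potency[:40], n_comp=3)
    print("RMSEP:", round(rmsep(pls1_predict(X[40:], model), potency[40:]), 2))
```

In practice the number of PLS components would be chosen by cross-validation and then confirmed against the independent challenge sets listed in the validation step.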

Protocol: Multi-Spectroscopy for Cell Culture Media Quality Assessment

This 2024 study directly compares multiple spectroscopic PAT tools for rapid quality evaluation of CHO cell culture media, demonstrating a methodology for bioprocess application [37].

Objective: To rapidly assess the impact of media preparation parameters (temperature and pH) on media quality using multiple spectroscopic PAT tools and correlate findings with cell culture performance.

Materials and Equipment:

- FTIR Spectrometer

- Raman Spectrometer

- Excitation-Emission Matrix (EEM) Fluorescence Spectrometer

- Bioreactor System for fed-batch CHO cell cultures

- Analytical Tools for product quality assessment (titer, charge variants, glycosylation)

Experimental Workflow:

- Sample Preparation: Cell culture media are prepared under a wide range of temperatures (40-80°C) and pH (7.6-10.0) to intentionally introduce quality variations [37].

- Spectroscopic Analysis: Media samples are analyzed in real-time using:

- FTIR spectroscopy to identify changes in functional groups and molecular structure

- Raman spectroscopy for molecular fingerprinting

- EEM spectroscopy to detect fluorescence profiles indicative of media composition changes [37]

- Reference Analysis: The same media batches are used in fed-batch CHO cell cultures producing three different monoclonal antibodies. Cell growth (VCD, viability), productivity (titer), and critical quality attributes (charge variants, glycosylation) are measured [37].

- Data Correlation: Spectroscopic profiles are correlated with cell culture performance data using multivariate analysis to identify spectral signatures predictive of suboptimal media quality [37].
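The data-correlation step can be illustrated with one common multivariate approach: unfold each EEM into a vector, extract principal component scores, and correlate them with a culture outcome such as titer. The multivariate method used in the cited study is not specified here, so PCA with Pearson correlation is an assumption, as are the function names and the synthetic data.

```python
"""Sketch: correlate EEM fluorescence profiles with culture titer via PCA.

PCA and Pearson correlation are assumed choices; all data are synthetic.
"""
import numpy as np


def unfold(eems: np.ndarray) -> np.ndarray:
    """Reshape (samples, excitation, emission) EEM stacks into a 2-D matrix."""
    return eems.reshape(eems.shape[0], -1)


def pca_scores(X: np.ndarray, n_comp: int = 2):
    """Return PCA scores and loadings of the mean-centred data via SVD."""
    Xc = X - X.mean(axis=0)
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :n_comp] * s[:n_comp], Vt[:n_comp]


def pearson_r(a, b) -> float:
    return float(np.corrcoef(a, b)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n_lots, n_ex, n_em = 12, 13, 41                 # hypothetical EEM grid size
    prep_temp = rng.uniform(40, 80, n_lots)         # degC, protocol range
    degradation = (prep_temp - 40) / 40             # hypothetical degradation index
    base = rng.uniform(0.5, 1.0, (n_ex, n_em))      # hypothetical intact-media EEM
    degr = rng.uniform(0.0, 0.3, (n_ex, n_em))      # hypothetical degradation EEM
    noise = rng.normal(0, 0.01, (n_lots, n_ex, n_em))
    eems = base + degradation[:, None, None] * degr + noise
    titer = 5.0 - 2.0 * degradation + rng.normal(0, 0.05, n_lots)  # synthetic g/L
    scores, _ = pca_scores(unfold(eems))
    # The sign of a principal component is arbitrary, so interpret |r|.
    print("r(PC1, titer) =", round(pearson_r(scores[:, 0], titer), 3))
```

The same unfolding works for FTIR or Raman spectra, which are already one-dimensional; blocks from different instruments can be scaled and concatenated before PCA.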

PAT Implementation Workflow

The integration of spectroscopic techniques into a PAT framework follows a systematic lifecycle that aligns with regulatory expectations for model maintenance and process control. The diagram below illustrates the core workflow and management cycle for a robust PAT system.

Figure 1: PAT Implementation and Management Lifecycle

PAT Model Lifecycle Management

Spectroscopic PAT applications rely on chemometric models whose accuracy can be affected by factors such as aging equipment, changes in raw materials, or previously unidentified process variations [39]. Effective management of these "living" models is essential for maintaining PAT system performance over time.
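The lack-of-fit and distance-from-model-center alarms mentioned in the NIR protocol are commonly implemented as Q residuals (squared prediction error) and Hotelling's T², computed from a PCA of the calibration spectra. The sketch below assumes that approach; the class name is hypothetical, and setting alarm limits from empirical percentiles of the calibration statistics is a simplification of the distribution-based limits often used in practice.

```python
"""Sketch of PCA-based model diagnostics: Hotelling's T^2 and Q residuals."""
import numpy as np


class PCAMonitor:
    """Flags spectra far from the calibration centre (T^2) or poorly fitted (Q)."""

    def __init__(self, X_cal: np.ndarray, n_comp: int = 3, pct: float = 99.0):
        self.mean = X_cal.mean(axis=0)
        _, s, Vt = np.linalg.svd(X_cal - self.mean, full_matrices=False)
        self.P = Vt[:n_comp].T                              # loadings
        self.var = s[:n_comp] ** 2 / (X_cal.shape[0] - 1)   # score variances
        t2, q = self.stats(X_cal)
        # Empirical limits (simplification of distribution-based limits)
        self.t2_limit = np.percentile(t2, pct)
        self.q_limit = np.percentile(q, pct)

    def stats(self, X: np.ndarray):
        Xc = np.atleast_2d(X) - self.mean
        T = Xc @ self.P
        t2 = np.sum(T ** 2 / self.var, axis=1)   # distance from model centre
        resid = Xc - T @ self.P.T
        q = np.sum(resid ** 2, axis=1)           # lack of fit
        return t2, q

    def alarms(self, X: np.ndarray) -> np.ndarray:
        t2, q = self.stats(X)
        return (t2 > self.t2_limit) | (q > self.q_limit)


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    loads = rng.normal(size=(3, 200))     # 3 hypothetical sources of variation
    X_cal = rng.normal(size=(150, 3)) @ loads + rng.normal(0, 0.01, (150, 200))
    monitor = PCAMonitor(X_cal, n_comp=3)
    typical = X_cal.mean(axis=0)
    new_band = 0.5 * np.exp(-((np.arange(200) - 120) / 5.0) ** 2)  # unmodeled feature
    print(monitor.alarms(np.vstack([typical, typical + new_band])))
```

A spectrum with high T² lies inside the modeled space but far from the calibration center; a spectrum with high Q contains variation the model has never seen, such as a band from a new raw-material source.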